Codecs used by WebRTC

The WebRTC API makes it possible to construct websites and apps that let users communicate in real time, using audio and/or video as well as optional data and other information. To communicate, the two devices need to be able to agree upon a mutually-understood codec for each track so they can successfully communicate and present the shared media. This guide reviews the codecs that browsers are required to implement as well as other codecs that some or all browsers support for WebRTC.

Containerless media

WebRTC uses bare MediaStreamTrack objects for each track being shared from one peer to another, without a container or even a MediaStream associated with the tracks. Which codecs can be within those tracks is not mandated by the WebRTC specification. However, RFC 7742 specifies that all WebRTC-compatible browsers must support VP8 and H.264's Constrained Baseline profile for video, and RFC 7874 specifies that browsers must support at least the Opus codec as well as G.711's PCMA and PCMU formats.

These two RFCs also lay out options that must be supported for each codec, as well as specific user comfort features such as echo cancellation.

While compression is always a necessity when dealing with media on the web, it's of additional importance when videoconferencing in order to ensure that the participants are able to communicate without lag or interruptions. Of secondary importance is the need to keep the video and audio synchronized, so that the movements and any ancillary information (such as slides or a projection) are presented at the same time as the audio that corresponds.

General codec requirements

Before looking at codec-specific capabilities and requirements, there are a few overall requirements that must be met by any codec configuration used with WebRTC.

Unless the SDP specifically signals otherwise, the web browser receiving a WebRTC video stream must be able to handle video at 20 FPS at a minimum resolution of 320 pixels wide by 240 pixels tall. It's encouraged that video be encoded at a frame rate and size no lower than that, since that's essentially the lower bound of what WebRTC generally is expected to handle.

SDP supports a codec-independent way to specify preferred video resolutions (RFC 6236). This is done by sending an a=imageattr SDP attribute to indicate the maximum resolution that is acceptable. The sender is not required to support this mechanism, however, so you have to be prepared to receive media at a different resolution than you requested. Beyond this simple maximum resolution request, specific codecs may offer further ways to ask for specific media configurations.
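
As a rough illustration of the attribute's shape, the sketch below builds an a=imageattr line that asks to receive video no larger than a given size. The helper name buildImageAttrLine and the payload type 97 are hypothetical; the bracketed range notation is based on RFC 6236, and the remote sender may still ignore the request.

```javascript
// Hypothetical helper: build an RFC 6236 "a=imageattr" line asking the
// remote sender to stay within a maximum width and height.
function buildImageAttrLine(payloadType, maxWidth, maxHeight) {
  if (!Number.isInteger(maxWidth) || !Number.isInteger(maxHeight) ||
      maxWidth < 1 || maxHeight < 1) {
    throw new RangeError("maxWidth and maxHeight must be positive integers");
  }
  // "recv" describes what this endpoint is willing to receive;
  // [low:high] is an inclusive range of acceptable values.
  return `a=imageattr:${payloadType} recv [x=[1:${maxWidth}],y=[1:${maxHeight}]]`;
}

// Sample values only (payload type 97, 640x480).
const exampleLine = buildImageAttrLine(97, 640, 480);
console.log(exampleLine);
```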

Supported video codecs

WebRTC establishes a baseline set of codecs which all compliant browsers are required to support. Some browsers may choose to allow other codecs as well.

Below are the video codecs which are required in any fully WebRTC-compliant browser, as well as the profiles which are required and the browsers which actually meet the requirement.

For details on WebRTC-related considerations for each codec, see the sub-sections below by following the links on each codec's name.

Complete details of what video codecs and configurations WebRTC is required to support can be found in RFC 7742: WebRTC Video Processing and Codec Requirements . It's worth noting that the RFC covers a variety of video-related requirements, including color spaces (sRGB is the preferred, but not required, default color space), recommendations for webcam processing features (automatic focus, automatic white balance, automatic light level), and so on.

Note: These requirements are for web browsers and other fully-WebRTC compliant products. Non-WebRTC products that are able to communicate with WebRTC to some extent may or may not support these codecs, although they're encouraged to by the specification documents.

In addition to the mandatory codecs, some browsers support additional codecs as well. Those are listed in the following table.

VP8, which we describe in general in the main guide to video codecs used on the web , has some specific requirements that must be followed when using it to encode or decode a video track on a WebRTC connection.

Unless signaled otherwise, VP8 will use square pixels (that is, pixels with an aspect ratio of 1:1).

Other notes

The network payload format for sharing VP8 using RTP (such as when using WebRTC) is described in RFC 7741: RTP Payload Format for VP8 Video .

AVC / H.264

Support for AVC's Constrained Baseline (CB) profile is required in all fully-compliant WebRTC implementations. CB is a subset of the main profile, and is specifically designed for low-complexity, low-delay applications such as mobile video and videoconferencing, as well as for platforms with lower performing video processing capabilities.

Our overview of AVC and its features can be found in the main video codec guide.

Special parameter support requirements

AVC offers a wide array of parameters for controlling optional values. In order to improve reliability of WebRTC media sharing across multiple platforms and browsers, it's required that WebRTC endpoints that support AVC handle certain parameters in specific ways. Sometimes this means a parameter must (or must not) be supported. Sometimes it means requiring a specific value for a parameter, or that a specific set of values be allowed. And sometimes the requirements are more intricate.

Parameters which are useful but not required

These parameters don't have to be supported by the WebRTC endpoint, and their use is not required either. Using them can improve the user experience in various ways, but some of them are fairly complicated to use.

If specified and supported by the software, the max-br parameter specifies the maximum video bit rate in units of 1,000 bps for VCL and 1,200 bps for NAL. You'll find details about this on page 47 of RFC 6184 .

If specified and supported by the software, max-cpb specifies the maximum coded picture buffer size. This is a fairly complicated parameter whose unit size can vary. See page 45 of RFC 6184 for details.

If specified and supported, max-dpb indicates the maximum decoded picture buffer size, given in units of 8/3 macroblocks. See RFC 6184, page 46 for further details.

If specified and supported by the software, max-fs specifies the maximum size of a single video frame, given as a number of macroblocks.

If specified and supported by the software, max-mbps is an integer specifying the maximum rate at which macroblocks should be processed, in macroblocks per second.

If specified and supported by the software, max-smbps is an integer specifying the maximum static macroblock processing rate, in static macroblocks per second (under the hypothetical assumption that all macroblocks are static macroblocks).

Parameters with specific requirements

These parameters may or may not be required, but have some special requirement when used.

All endpoints are required to support packetization-mode 1 (non-interleaved mode). Support for other packetization modes is optional, and the packetization-mode parameter itself is not required to be specified.

Sequence and picture information for AVC can be sent either in-band or out-of-band. When AVC is used with WebRTC, this information must be signaled in-band; the sprop-parameter-sets parameter must therefore not be included in the SDP.

Parameters which must be specified

These parameters must be specified whenever using AVC in a WebRTC connection.

All WebRTC implementations are required to specify and interpret the profile-level-id parameter in their SDP, identifying the sub-profile used by the codec. The specific value that is set is not defined; what matters is that the parameter be used at all. This is useful to note, since in RFC 6184 ("RTP Payload Format for H.264 Video"), profile-level-id is entirely optional.
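
To make these rules concrete, here is a minimal sketch that parses an H.264 a=fmtp line and checks the two hard requirements mentioned in this section: profile-level-id must be present and sprop-parameter-sets must be absent. The function names and the sample line are hypothetical and chosen for illustration; the splitting of profile-level-id into three bytes follows RFC 6184.

```javascript
// Sample fmtp line for illustration only; real values come from the SDP.
const sampleFmtp =
  "a=fmtp:102 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42e01f";

// Split the parameter list of an "a=fmtp:" line into a key/value object.
function parseFmtp(line) {
  const match = /^a=fmtp:(\d+)\s+(.*)$/.exec(line.trim());
  if (!match) return null;
  const params = {};
  for (const pair of match[2].split(";")) {
    // Split on the first "=" only, since values may contain "=" themselves.
    const eq = pair.indexOf("=");
    const key = (eq === -1 ? pair : pair.slice(0, eq)).trim().toLowerCase();
    if (key) params[key] = eq === -1 ? "" : pair.slice(eq + 1).trim();
  }
  return { payloadType: Number(match[1]), params };
}

// Report violations of the two rules stated in the text.
function checkH264Params(params) {
  const problems = [];
  if (!("profile-level-id" in params)) {
    problems.push("profile-level-id is missing");
  }
  if ("sprop-parameter-sets" in params) {
    problems.push("sprop-parameter-sets must not be sent");
  }
  return problems;
}

// Split the six hex digits of profile-level-id into its three bytes.
function decodeProfileLevelId(hex) {
  if (!/^[0-9a-f]{6}$/i.test(hex)) return null;
  return {
    profileIdc: parseInt(hex.slice(0, 2), 16),
    profileIop: parseInt(hex.slice(2, 4), 16),
    levelIdc: parseInt(hex.slice(4, 6), 16),
  };
}

const parsed = parseFmtp(sampleFmtp);
console.log(checkH264Params(parsed.params)); // []
console.log(decodeProfileLevelId(parsed.params["profile-level-id"]));
```

For the sample value 42e01f, the decoded bytes are profile_idc 66 (0x42), profile-iop 0xe0, and level_idc 31 (0x1f).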

Other requirements

To support switching between portrait and landscape orientations, two methods are available. The first is the Coordination of Video Orientation (CVO) header extension to RTP. If CVO isn't signaled as supported in the SDP, browsers are encouraged, though not required, to support Display Orientation SEI messages instead.

Unless signaled otherwise, the pixel aspect ratio is 1:1, indicating that pixels are square.

The payload format used for AVC in WebRTC is described in RFC 6184: RTP Payload Format for H.264 Video . AVC implementations for WebRTC are required to support the special "filler payload" and "full frame freeze" SEI messages; these are used to support switching among multiple input streams seamlessly.

Supported audio codecs

The audio codecs which RFC 7874 mandates that all WebRTC-compatible browsers must support are shown in the table below.

See below for more details about any WebRTC-specific considerations that exist for each codec listed above.

It's useful to note that RFC 7874 defines more than a list of audio codecs that a WebRTC-compliant browser must support; it also provides recommendations and requirements for special audio features such as echo cancellation, noise reduction, and audio leveling.

Note: The list above indicates the minimum required set of codecs that all WebRTC-compatible endpoints are required to implement. A given browser may also support other codecs; however, cross-platform and cross-device compatibility may be at risk if you use other codecs without carefully ensuring that support exists in all browsers your users might choose.

In addition to the mandatory audio codecs, some browsers support additional codecs as well. Those are listed in the following table.

Internet Low Bitrate Codec ( iLBC ) is an open-source narrow-band codec developed by Global IP Solutions and now owned by Google, designed specifically for streaming voice audio. Google and some other browser developers have adopted it for WebRTC.

The Internet Speech Audio Codec ( iSAC ) is another codec developed by Global IP Solutions and now owned by Google, which has open-sourced it. It's used by Google Talk, QQ, and other instant messaging clients and is specifically designed for voice transmissions which are encapsulated within an RTP stream.

Comfort noise ( CN ) is a form of artificial background noise used to fill gaps in a transmission instead of pure silence. This helps to avoid the jarring effect that can occur when voice activation and similar features cause a stream to stop sending data temporarily, a capability known as Discontinuous Transmission (DTX). RFC 3389 defines a method for providing an appropriate filler to use during silences.

Comfort Noise is used with G.711, and may potentially be used with other codecs that don't have a built-in CN feature. Opus, for example, has its own CN capability; as such, using RFC 3389 CN with the Opus codec is not recommended.

An audio sender is never required to use discontinuous transmission or comfort noise.

The Opus format, defined by RFC 6716, is the primary format for audio in WebRTC. The RTP payload format for Opus is found in RFC 7587. You can find more general information about Opus and its capabilities, and how other APIs can support Opus, in the corresponding section of our guide to audio codecs used on the web.

Both the speech and general audio modes should be supported. Opus's scalability and flexibility are useful when dealing with audio that may have varying degrees of complexity. Its support of in-band stereo signals allows support for stereo without complicating the demultiplexing process.

The entire range of bit rates supported by Opus (6 kbps to 510 kbps) is supported in WebRTC, with the bit rate allowed to be dynamically changed. Higher bit rates typically improve quality.

Bit rate recommendations

Given a 20 millisecond frame size, the following table shows the recommended bit rates for various forms of media.

The bit rate may be adjusted at any time. In order to avoid network congestion, the average audio bit rate should not exceed the available network bandwidth (minus any other known or anticipated added bandwidth requirements).
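
One way to adjust the bit rate at run time is through the sender's encoding parameters. The sketch below is a minimal example, assuming sender is an RTCRtpSender for an audio track that already has at least one encoding; the helper name capAudioBitrate is hypothetical.

```javascript
// Hypothetical helper: cap the bit rate of an audio RTCRtpSender.
// Resolves with the value that was applied.
async function capAudioBitrate(sender, maxBitsPerSecond) {
  const params = sender.getParameters();
  if (!params.encodings || params.encodings.length === 0) {
    // Encodings cannot be added here; wait until the sender has one.
    throw new Error("sender has no encodings to configure yet");
  }
  params.encodings[0].maxBitrate = maxBitsPerSecond;
  await sender.setParameters(params);
  return params.encodings[0].maxBitrate;
}
```

A call such as capAudioBitrate(sender, 32000) would request a 32 kbps ceiling; the number is only a sample, and the browser remains free to send at a lower rate.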

G.711 defines the format for Pulse Code Modulation ( PCM ) audio as a series of 8-bit integer samples taken at a sample rate of 8,000 Hz, yielding a bit rate of 64 kbps. Both µ-law and A-law encodings are allowed.
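
The 64 kbps figure follows directly from the sample size and sample rate. The short sketch below works through that arithmetic and also computes the payload size of a single packet; the 20 millisecond packet duration is an assumption used for illustration, and the function names are hypothetical.

```javascript
const SAMPLE_RATE_HZ = 8000; // samples per second
const BITS_PER_SAMPLE = 8;   // one 8-bit sample per sampling instant

// Bit rate of the G.711 stream, in bits per second.
function g711BitRate() {
  return SAMPLE_RATE_HZ * BITS_PER_SAMPLE;
}

// Payload size in bytes for one packet covering packetMs milliseconds.
function g711PayloadBytes(packetMs) {
  return ((g711BitRate() / 8) * packetMs) / 1000;
}

console.log(g711BitRate());        // 64000
console.log(g711PayloadBytes(20)); // 160
```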

G.711 is defined by the ITU and its payload format is defined in RFC 3551, section 4.5.14 .

WebRTC requires that G.711 use 8-bit samples at the standard 64 kbps rate, even though G.711 supports some other variations. Neither G.711.0 (lossless compression), G.711.1 (wideband capability), nor any other extensions to the G.711 standard are mandated by WebRTC.

Due to its low sample rate and sample size, G.711 audio quality is generally considered poor by modern standards, even though it's roughly equivalent to what a landline telephone sounds like. It is generally used as a least common denominator to ensure that browsers can achieve an audio connection regardless of platforms and browsers, or as a fallback option in general.

Specifying and configuring codecs

Getting the supported codecs

Because a given browser and platform may have different availability among the potential codecs—and may have multiple profiles or levels supported for a given codec—the first step when configuring codecs for an RTCPeerConnection is to get the list of available codecs. To do this, you first have to establish a connection on which to get the list.

There are a couple of ways you can do this. The most efficient way is to use the static method RTCRtpSender.getCapabilities() (or the equivalent RTCRtpReceiver.getCapabilities() for a receiver), specifying the type of media as the input parameter. For example, to determine the supported codecs for video, you can do this:
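
A minimal sketch of that call, wrapped in a hypothetical helper named getSupportedCodecs so that it returns null instead of throwing when the API is unavailable:

```javascript
// Return the codecs the browser can send for the given kind ("audio" or
// "video"), or null when the static method is unavailable.
function getSupportedCodecs(kind) {
  if (typeof RTCRtpSender === "undefined" ||
      typeof RTCRtpSender.getCapabilities !== "function") {
    return null;
  }
  const capabilities = RTCRtpSender.getCapabilities(kind);
  return capabilities ? capabilities.codecs : null;
}

const codecList = getSupportedCodecs("video");
```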

Now codecList is an array of codec objects, each describing one codec configuration. Also present in the list will be entries for retransmission (RTX), redundant coding (RED), and forward error correction (FEC).

If the connection is in the process of starting up, you can use the icegatheringstatechange event to watch for the completion of ICE candidate gathering, then fetch the list.

The event handler for icegatheringstatechange is established; in it, we look to see if the ICE gathering state is complete , indicating that no further candidates will be collected. The method RTCPeerConnection.getSenders() is called to get a list of all the RTCRtpSender objects used by the connection.

With that in hand, we walk through the list of senders, looking for the first one whose MediaStreamTrack indicates that its kind is video, meaning that the track's data is video media. We then call that sender's getParameters() method and set codecList to the codecs property in the returned object, and then return to the caller.

If no video track is found, we set codecList to null .

On return, then, codecList is either null to indicate that no video tracks were found or it's an array of RTCRtpCodecParameters objects, each describing one permitted codec configuration. Of special importance in these objects: the payloadType property, which is a one-byte value which uniquely identifies the described configuration.
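
The steps described above can be sketched roughly as follows. The helper name watchForVideoCodecs and the callback style are assumptions made for illustration; the sketch hands the result to a callback rather than assigning a shared codecList variable.

```javascript
// Hypothetical helper: once ICE gathering completes, report the codecs of
// the first video sender (or null when there is none) through a callback.
function watchForVideoCodecs(peerConnection, onCodecList) {
  peerConnection.addEventListener("icegatheringstatechange", () => {
    // Ignore the intermediate "new" and "gathering" states.
    if (peerConnection.iceGatheringState !== "complete") return;

    // Find the first sender whose track carries video media.
    const videoSender = peerConnection
      .getSenders()
      .find((sender) => sender.track && sender.track.kind === "video");

    onCodecList(videoSender ? videoSender.getParameters().codecs : null);
  });
}
```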

Note: The two methods for obtaining lists of codecs shown here use different output types in their codec lists. Be aware of this when using the results.

Customizing the codec list

Once you have a list of the available codecs, you can alter it and then send the revised list to RTCRtpTransceiver.setCodecPreferences() to rearrange the codec list. This changes the order of preference of the codecs, letting you tell WebRTC to prefer a different codec over all others.

In this sample, the function changeVideoCodec() takes as input the MIME type of the codec you wish to use. The code starts by getting a list of all of the RTCPeerConnection 's transceivers.

Then, for each transceiver, we get the kind of media represented by the transceiver from the RTCRtpSender 's track's kind . We also get the lists of all codecs supported by the browser for both sending and receiving video, using the getCapabilities() static method of both RTCRtpSender and RTCRtpReceiver .

If the media is video, we call a method called preferCodec() for both the sender's and receiver's codec lists; this method rearranges the codec list the way we want (see below).

Finally, we call the RTCRtpTransceiver 's setCodecPreferences() method to specify that the given send and receive codecs are allowed, in the newly rearranged order.

That's done for each transceiver on the RTCPeerConnection ; once all of the transceivers have been updated, we call the onnegotiationneeded event handler, which will create a new offer, update the local description, send the offer along to the remote peer, and so on, thereby triggering the renegotiation of the connection.

The preferCodec() function called by the code above looks like this to move a specified codec to the top of the list (to be prioritized during negotiation):

This code is just splitting the codec list into two arrays: one containing codecs whose MIME type matches the one specified by the mimeType parameter, and the other with all the other codecs. Once the list has been split up, they're concatenated back together with the entries matching the given mimeType first, followed by all of the other codecs. The rearranged list is then returned to the caller.
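
Taken together, the two functions described in this section might look like the sketch below. It is a reconstruction from the prose, not the original sample: passing the connection in as a parameter is an assumption, and the acceptance of a combined send and receive list by setCodecPreferences() may differ between browser versions.

```javascript
// Move every codec whose MIME type matches to the front, keeping the rest
// (including RTX, RED, and FEC entries) in their original order.
function preferCodec(codecs, mimeType) {
  const preferred = [];
  const others = [];
  for (const codec of codecs) {
    (codec.mimeType === mimeType ? preferred : others).push(codec);
  }
  return preferred.concat(others);
}

// Reorder the codec preferences on every video transceiver, then invoke
// the connection's negotiationneeded handler to start renegotiation.
function changeVideoCodec(peerConnection, mimeType) {
  for (const transceiver of peerConnection.getTransceivers()) {
    const track = transceiver.sender.track;
    if (!track || track.kind !== "video") continue;

    const sendCodecs = preferCodec(
      RTCRtpSender.getCapabilities("video").codecs, mimeType);
    const recvCodecs = preferCodec(
      RTCRtpReceiver.getCapabilities("video").codecs, mimeType);

    transceiver.setCodecPreferences([...sendCodecs, ...recvCodecs]);
  }

  if (typeof peerConnection.onnegotiationneeded === "function") {
    peerConnection.onnegotiationneeded();
  }
}
```

Note that the comparison is case-sensitive, so the MIME type should be written the way the browser reports it, for example "video/H264" or "video/VP8". Because the non-matching codecs stay in the list, the mandatory codecs remain available as a fallback.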

Default codecs

Unless otherwise specified, the default—or, more accurately, preferred—codecs requested by each browser's implementation of WebRTC are shown in the table below.

Choosing the right codec

Before choosing a codec that isn't one of the mandatory codecs (VP8 or AVC for video and Opus or PCM for audio), you should seriously consider the potential drawbacks: in particular, only these codecs can be generally assumed to be available on essentially all devices that support WebRTC.

If you choose to prefer a codec other than the mandatory ones, you should at least allow for fallback to one of the mandatory codecs if support is unavailable for the codec you prefer.

In general, if it's available and the audio you wish to send has a sample rate greater than 8 kHz, you should strongly consider using Opus as your primary codec. For voice-only connections in a constrained environment, using G.711 at an 8 kHz sample rate can provide an acceptable experience for conversation, but typically you'll use G.711 as a fallback option, since there are other options which are more efficient and sound better, such as Opus in its narrowband mode.

There are a number of factors that come into play when deciding upon a video codec (or set of codecs) to support.

Licensing terms

Before choosing a video codec, make sure you're aware of any licensing requirements around the codec you select; you can find information about possible licensing concerns in our main guide to video codecs used on the web. Of the two mandatory codecs for video (VP8 and AVC/H.264), only VP8 is completely free of licensing requirements. If you select AVC, make sure you're aware of any potential fees you may need to pay; that said, the patent holders have generally said that most typical website developers shouldn't need to worry about paying the license fees, which are typically focused more on the developers of the encoding and decoding software.

Warning: The information here does not constitute legal advice! Be sure to confirm your exposure to liability before making any final decisions where potential exists for licensing issues.

Power needs and battery life

Another factor to consider—especially on mobile platforms—is the impact a codec may have on battery life. If a codec is handled in hardware on a given platform, that codec is likely to allow for much better battery life and less heat production.

For example, Safari for iOS and iPadOS introduced WebRTC with AVC as the only supported video codec. AVC has the advantage, on iOS and iPadOS, of being able to be encoded and decoded in hardware. Safari 12.1 introduced support for VP8 within WebRTC, which improves interoperability, but at a cost: VP8 has no hardware support on iOS devices, so using it causes increased processor impact and reduced battery life.

Performance

Fortunately, VP8 and AVC perform similarly from an end-user perspective, and are equally adequate for use in videoconferencing and other WebRTC solutions. The final decision is yours. Whichever you choose, be sure to read the information provided in this article about any particular configuration issues you may need to contend with for that codec.

Keep in mind that choosing a codec that isn't on the list of mandatory codecs likely runs the risk of selecting a codec which isn't supported by a browser your users might prefer. See the article Handling media support issues in web content to learn more about how to offer support for your preferred codecs while still being able to fall back on browsers that don't implement that codec.

Security implications

There are interesting potential security issues that come up while selecting and configuring codecs. WebRTC video is protected using Datagram Transport Layer Security ( DTLS ), but it is theoretically possible for a motivated party to infer the amount of change that's occurring from frame to frame when using variable bit rate (VBR) codecs, by monitoring the stream's bit rate and how it changes over time. This could potentially allow a bad actor to infer something about the content of the stream, given the ebb and flow of the bit rate.

For more about security considerations when using AVC in WebRTC, see RFC 6184, section 9: RTP Payload Format for H.264 Video: Security Considerations .

RTP payload format media types

It may be useful to refer to the IANA 's list of RTP payload format media types; this is a complete list of the MIME media types defined for potential use in RTP streams, such as those used in WebRTC. Most of these are not used in WebRTC contexts, but the list may still be useful.

See also RFC 4855 , which covers the registry of media types.

- Introduction to WebRTC protocols

- WebRTC connectivity

- Guide to video codecs used on the web

- Guide to audio codecs used on the web

- Digital video concepts

- Digital audio concepts

On the Road to WebRTC 1.0, Including VP8

Mar 12, 2019

by Youenn Fablet

Safari 11 was the first Safari version to support WebRTC. Since then, we have worked to continue improving WebKit’s implementation and compliance with the spec. I am excited to announce major improvements to WebRTC in Safari 12.1 on iOS 12.2 and macOS 10.14.4 betas, including VP8 video codec support, video simulcast support and Unified Plan SDP (Session Description Protocol) experimental support.

VP8 Video Codec

The VP8 video codec is widely used in existing WebRTC solutions. It is now supported as a WebRTC-only video codec in Safari 12.1 on both iOS and macOS betas. By supporting both VP8 and H.264, Safari 12.1 can exchange video with any other WebRTC endpoint. H.264 is the default codec for Safari because it is backed by hardware acceleration and tuned for real-time communication. This provides a great user experience and power efficiency. We found that, on an iPhone 7 Plus in laboratory conditions, the use of H.264 on a 720p video call increases the battery life by up to an hour compared to VP8. With H.264, VP8 and Unified Plan, Safari can mix H.264 and VP8 on a single connection. It is as simple as doing the following:

Video Simulcast

To further improve WebRTC support for multi-party video conferencing, simulcast is now supported for both H.264 and VP8. Kudos to the libwebrtc community, including Cosmo Software , for making great progress in that important area. Simulcast is a technique that encodes the same video content with different encoding parameters, typically different frame sizes and bit rates. This is particularly useful when the same content is sent to several clients through a central server, called an SFU . As the clients might have different constraints (screen size, network conditions), the SFU is able to send the most suitable stream to each client. Each individual encoding can be controlled using RTCRtpSender.setParameters on the sender side. Simulcast is currently activated using SDP munging . Future work should allow simulcast activation using RTCPeerConnection.addTransceiver , as per specification.
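
As a small illustration of controlling an individual encoding through RTCRtpSender.setParameters, the sketch below turns one simulcast layer on or off. The helper name setEncodingActive is hypothetical, and the sketch assumes simulcast has already been negotiated so that the sender exposes several encodings.

```javascript
// Hypothetical helper: enable or disable one simulcast encoding by index.
// Resolves with an array of booleans describing which layers are active.
async function setEncodingActive(sender, index, active) {
  const params = sender.getParameters();
  if (!params.encodings || index < 0 || index >= params.encodings.length) {
    throw new RangeError(`no encoding at index ${index}`);
  }
  params.encodings[index].active = active;
  await sender.setParameters(params);
  // An encoding without an explicit "active" value counts as active.
  return params.encodings.map((encoding) => encoding.active !== false);
}
```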

Unified Plan

WebRTC uses SDP as the format for negotiating the configuration of a connection. While previous versions of Safari used Plan B SDP only, Safari is now transitioning to the standardized version known as Unified Plan SDP. Unified Plan SDP can express WebRTC configurations in a much more flexible way, as each audio or video stream transmission can be configured independently.

If your website uses at most one audio and one video track per connection, this transition should not require any major changes. If your website uses connections with more audio or video tracks, adoption may be required. With Unified Plan SDP enabled, the support of the WebRTC 1.0 API, in particular the transceiver API, is more complete and spec-compliant than ever. To detect whether Safari uses Unified Plan, you can use feature detection:
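
The original snippet is not reproduced here. One check that has been used for this purpose is to test whether RTCRtpTransceiver exposes the currentDirection attribute; treat the choice of that property as an assumption rather than as the post's own code.

```javascript
// Assumed heuristic: implementations with Unified Plan enabled expose
// RTCRtpTransceiver.prototype.currentDirection.
function supportsUnifiedPlan() {
  return typeof RTCRtpTransceiver !== "undefined" &&
    Object.prototype.hasOwnProperty.call(
      RTCRtpTransceiver.prototype, "currentDirection");
}
```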

Unified Plan is an experimental feature that is currently turned on by default in Safari Technology Preview and turned off by default in Safari in iOS 12.2 and macOS 10.14.4 betas. This can be turned on using the Develop menu on macOS and Safari settings on iOS.

Additional API updates

Safari also comes with additional improvements, including better support of capture device selection, experimental support of the screen capture API , and deprecation of the WebRTC legacy API.

Web applications sometimes want to select the same capture devices used on a previous call. Device IDs will remain stable across browsing sessions as soon as navigator.mediaDevices.getUserMedia is successfully called once. Your web page can implement persistent device selection as follows:
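
A minimal sketch of persistent device selection, under stated assumptions: mediaDevices stands for navigator.mediaDevices, storage for something like window.localStorage, and the storage key and function names are hypothetical.

```javascript
// Build getUserMedia constraints, preferring a previously saved camera.
function cameraConstraints(savedDeviceId) {
  return savedDeviceId
    ? { video: { deviceId: { exact: savedDeviceId } } }
    : { video: true };
}

// Open the saved camera if possible, then remember whichever device was used.
async function openPreferredCamera(mediaDevices, storage, key = "preferredCameraId") {
  const savedId = storage.getItem(key);
  let stream;
  try {
    stream = await mediaDevices.getUserMedia(cameraConstraints(savedId));
  } catch (error) {
    // Without a saved device there is nothing to fall back from.
    if (!savedId) throw error;
    // The saved device may be unplugged; fall back to any camera.
    stream = await mediaDevices.getUserMedia(cameraConstraints(null));
  }
  const [track] = stream.getVideoTracks();
  const deviceId = track ? track.getSettings().deviceId : undefined;
  if (deviceId) storage.setItem(key, deviceId);
  return stream;
}
```

In a page, this would be called as openPreferredCamera(navigator.mediaDevices, localStorage).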

Existing fingerprinting mitigations remain in place, including the filtering of information provided by navigator.mediaDevices.enumerateDevices as detailed in the blog post, “A Closer Look Into WebRTC” .

Initial support of the screen capture API is now available as an experimental feature in Safari Technology Preview. By calling navigator.mediaDevices.getDisplayMedia , a web application can capture the main screen on macOS.

Following the strategy detailed in “A Closer Look Into WebRTC” , the WebRTC legacy API was disabled by default in Safari 12.0. Support for the WebRTC legacy API is removed from iOS 12.2 and macOS 10.14.4 betas. Should your application need support of the WebRTC legacy API, we recommend the use of the open source adapter.js library as a polyfill.

We always appreciate your feedback. Send us bug reports , or feel free to tweet @webkit on Twitter, or email [email protected] .

Apple adds WebM Web Audio support to Safari in latest iOS 15 beta

Currently available as an option in the Experimental WebKit Features section of Safari's advanced settings, WebM Web Audio and the related WebM MSE parser are two parts of the wider WebM audiovisual media file format developed by Google.

An open-source initiative, WebM presents a royalty-free alternative to common web video streaming technology and serves as a container for the VP8 and VP9 video codecs. As it relates to Safari, WebM Web Audio provides support for the Vorbis and Opus audio codecs.

Code uncovered by 9to5Mac reveals the WebM audio codec should be enabled by default going forward, suggesting that Apple will officially adopt the standard when iOS 15 sees release.

Apple added support for the WebM video codec on Mac when a second macOS Big Sur 11.3 beta was issued in February . The video portion of WebM has yet to see implementation on iOS, but that could soon change with the adoption of WebM's audio assets.

WebM dates back to 2010, but Apple has been reluctant to bake the format into its flagship operating systems. Late co-founder Steve Jobs once called the format "a mess" that "wasn't ready for prime time."

As AppleInsider noted when WebM hit macOS, Apple might be angling to support high-resolution playback from certain streaming services like YouTube, which rely on VP9 to stream 4K content. The validation of WebM Web Audio is a step in that direction.

Apple is expected to launch iOS 15 this fall alongside a slate of new iPhone and Apple Watch models.

WebM video format

Multimedia format designed to provide a royalty-free, high-quality open video compression format for use with HTML5 video. WebM supports the VP8 and VP9 video codecs.

WebP image format

Image format (based on the VP8 video format) that supports lossy and lossless compression, as well as animation and alpha transparency. WebP generally has better compression than JPEG, PNG and GIF and is designed to supersede them. AVIF and JPEG XL are designed to supersede WebP.


webrtcHacks

[10 years of] guides and information for WebRTC developers

Guide apple , code , getUserMedia , ios , Safari Chad Phillips · September 7, 2018

Guide to WebRTC with Safari in the Wild (Chad Phillips)

It has been more than a year since Apple first added WebRTC support to Safari. My original post reviewing the implementation continues to be popular here, but it does not reflect some of the updates since the first limited release. More importantly, given its differences and limitations, many questions still remained on how to best develop WebRTC applications for Safari.

I ran into Chad Phillips at Cluecon (again) this year and we ended up talking about his arduous experience making WebRTC work on Safari. He had a great, recent list of tips and tricks so I asked him to share it here.

Chad is a long-time open source guy and contributor to the FreeSWITCH product. He has been involved with WebRTC development since 2015. He recently launched MoxieMeet, a videoconferencing platform for online experiential events, where he is CTO and developed a lot of the insights for this post.

{“editor”, “ chad hart “}

In June of 2017, Apple became the last major vendor to release support for WebRTC, paving the (still bumpy) road for platform interoperability.

And yet, more than a year later, I continue to be surprised by the lack of guidance available for developers to integrate their WebRTC apps with Safari/iOS. Outside of a couple posts by the Webkit team, some scattered StackOverflow questions, the knowledge to be gleaned from scouring the Webkit bug reports for WebRTC, and a few posts on this very website, I really haven’t seen much support available. This post is an attempt to begin rectifying the gap.

I have spent many months of hard work integrating WebRTC in Safari for a very complex videoconferencing application. Most of my time was spent getting iOS working, although some of the below pointers also apply to Safari on MacOS.

This post assumes you have some level of experience with implementing WebRTC — it’s not meant to be a beginner’s how to, but a guide for experienced developers to smooth the process of integrating their apps with Safari/iOS. Where appropriate I’ll point to related issues filed in the Webkit bug tracker so that you may add your voice to those discussions, as well as some other informative posts.

I did an awful lot of bushwhacking in order to claim iOS support in my app. Hopefully the knowledge below will make for a smoother journey for you!

Some good news first

First, the good news:

- Apple’s current implementation is fairly solid

- For something simple like a 1-1 audio/video call, the integration is quite easy

Let’s have a look at some requirements and trouble areas.

General Guidelines and Annoyances

Use the current webrtc spec.

If you’re building your application from scratch, I recommend using the current WebRTC API spec (it’s undergone several iterations). The following resources are great in this regard:

- https://developer.mozilla.org/en-US/docs/Web/API/WebRTC_API

- https://github.com/webrtc/samples

For those of you running apps with older WebRTC implementations, I’d recommend you upgrade to the latest spec if you can, as the next release of iOS disables the legacy APIs by default. In particular, it’s best to avoid the legacy addStream APIs, which make it more difficult to manipulate tracks in a stream.

More background on this here: https://blog.mozilla.org/webrtc/the-evolution-of-webrtc/

iPhone and iPad have unique rules – test both

Since the iPhone and iPad have different rules and limitations, particularly around video, I’d strongly recommend that you test your app on both devices. It’s probably smarter to start by getting it working fully on the iPhone, which seems to have more limitations than the iPad.

More background on this here: https://webkit.org/blog/6784/new-video-policies-for-ios

Let the iOS madness begin

It’s possible that may be all you need to get your app working on iOS. If not, now comes the bad news: the iOS implementation has some rather maddening bugs/restrictions, especially in more complex scenarios like multiparty conference calls.

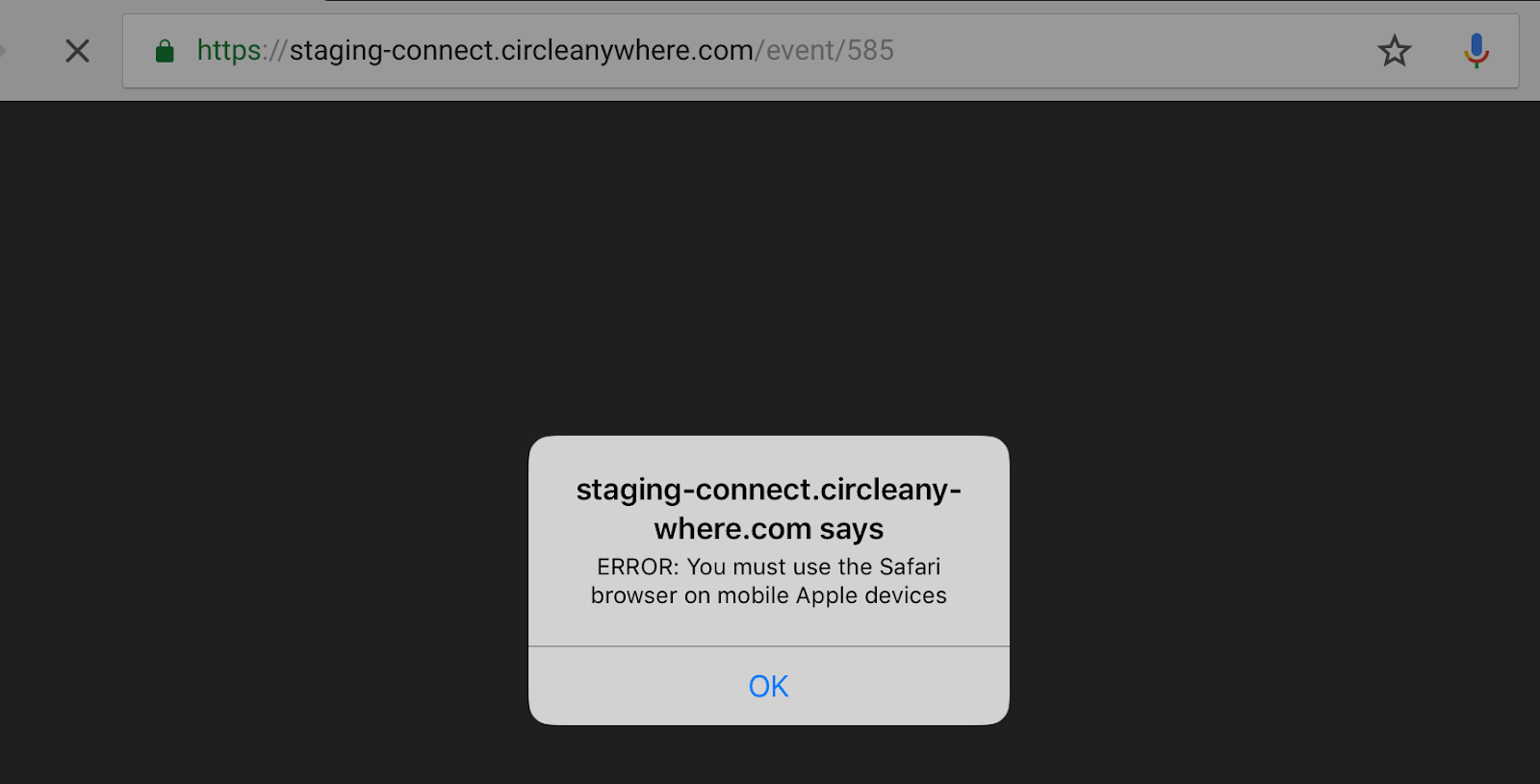

Other browsers on iOS missing WebRTC integration

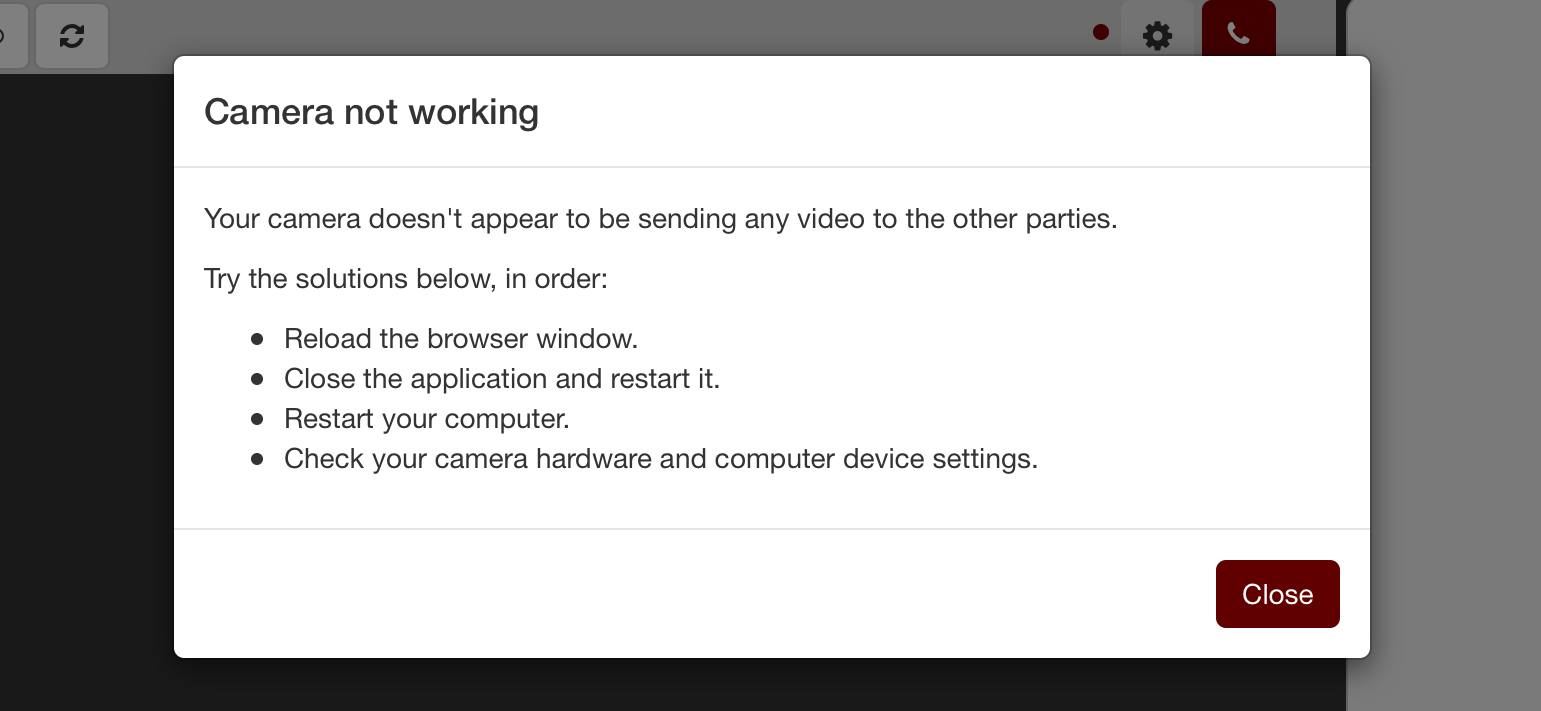

The WebRTC APIs have not yet been exposed to iOS browsers using WKWebView. In practice, this means that your web-based WebRTC application will only work in Safari on iOS, and not in any other browser the user may have installed (Chrome, for example), nor in an ‘in-app’ version of Safari.

To avoid user confusion, you’ll probably want to include some helpful user error message if they try to open your app in another browser/environment besides Safari proper.
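A minimal sketch of that kind of check, assuming it is enough to test for RTCPeerConnection and getUserMedia before starting a call. The function name and message wording are my own, and showError in the usage comment is a hypothetical helper.

```javascript
// Returns a short reason string when the WebRTC APIs are missing, or null
// when they are present. `win` is the global object (window in a browser).
function webRtcUnavailableReason(win) {
  if (typeof win.RTCPeerConnection !== 'function') {
    return 'This browser does not expose RTCPeerConnection.';
  }
  const mediaDevices = win.navigator && win.navigator.mediaDevices;
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    return 'This browser does not expose getUserMedia.';
  }
  return null;
}

// Example usage in a page (showError is a hypothetical helper):
// const reason = webRtcUnavailableReason(window);
// if (reason) showError(reason + ' Please open this page in Safari.');
```

Detecting the missing APIs rather than sniffing the user agent means the same check keeps working if other iOS browsers gain WebRTC support later.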

Related issues:

- https://bugs.webkit.org/show_bug.cgi?id=183201

- https://bugs.chromium.org/p/chromium/issues/detail?id=752458

No beforeunload event, use pagehide

According to this Safari event documentation, the unload event has been deprecated, and the beforeunload event has been completely removed in Safari. So if you’re using these events, for example, to handle call cleanup, you’ll want to refactor your code to use the pagehide event on Safari instead.

source: https://gist.github.com/thehunmonkgroup/6bee8941a49b86be31a787fe8f4b8cfe
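The following is not the code from the gist above, just a minimal sketch of the idea: register the cleanup handler on pagehide where the target advertises it, and fall back to beforeunload elsewhere. The function name registerCallCleanup and the hangUp helper in the usage comment are assumptions for illustration.

```javascript
// Registers `cleanup` for page teardown. Uses pagehide when the target
// advertises an onpagehide handler slot, otherwise beforeunload.
// `target` is normally window. Returns the event name that was used.
function registerCallCleanup(target, cleanup) {
  const eventName = 'onpagehide' in target ? 'pagehide' : 'beforeunload';
  target.addEventListener(eventName, cleanup);
  return eventName;
}

// Example usage in a page (hangUp is a hypothetical function that closes
// your peer connections and stops local tracks):
// registerCallCleanup(window, hangUp);
```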

Getting & playing media, playsinline attribute.

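Step one is to add the required playsinline attribute to your video tags, which allows the video to start playing on iOS. In other words, every video tag you use for WebRTC playback gains a playsinline attribute alongside whatever attributes it already has.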

playsinline was originally only a requirement for Safari on iOS, but now you might need to use it in some cases in Chrome too. See Dag-Inge’s post for more on that.

See the thread here for details on this requirement: https://github.com/webrtc/samples/issues/929
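If your video elements are created or configured from script rather than written in markup, the same attribute can be set programmatically. A minimal sketch, assuming you also want autoplay on the element; the function name is my own.

```javascript
// Marks a video element for inline playback on iOS. Boolean HTML attributes
// are set by giving them an empty string value.
function enableInlinePlayback(video) {
  video.setAttribute('playsinline', '');
  video.setAttribute('autoplay', '');
  return video;
}

// Example usage in a page:
// const video = enableInlinePlayback(document.createElement('video'));
// video.srcObject = remoteStream;
```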

Autoplay rules

Next you’ll need to be aware of the Webkit WebRTC rules on autoplaying audio/video. The main rules are:

- MediaStream-backed media will autoplay if the web page is already capturing.

- MediaStream-backed media will autoplay if the web page is already playing audio

- A user gesture is required to initiate any audio playback – WebRTC or otherwise.

This is good news for the common use case of a video call, since you’ve most likely already gotten permission from the user to use their microphone/camera, which satisfies the first rule. Note that these rules work alongside the base autoplay rules for MacOS and iOS, so it’s good to be aware of them as well.

Related webkit posts:

- https://webkit.org/blog/7763/a-closer-look-into-webrtc

- https://webkit.org/blog/7734/auto-play-policy-changes-for-macos

- https://webkit.org/blog/6784/new-video-policies-for-ios

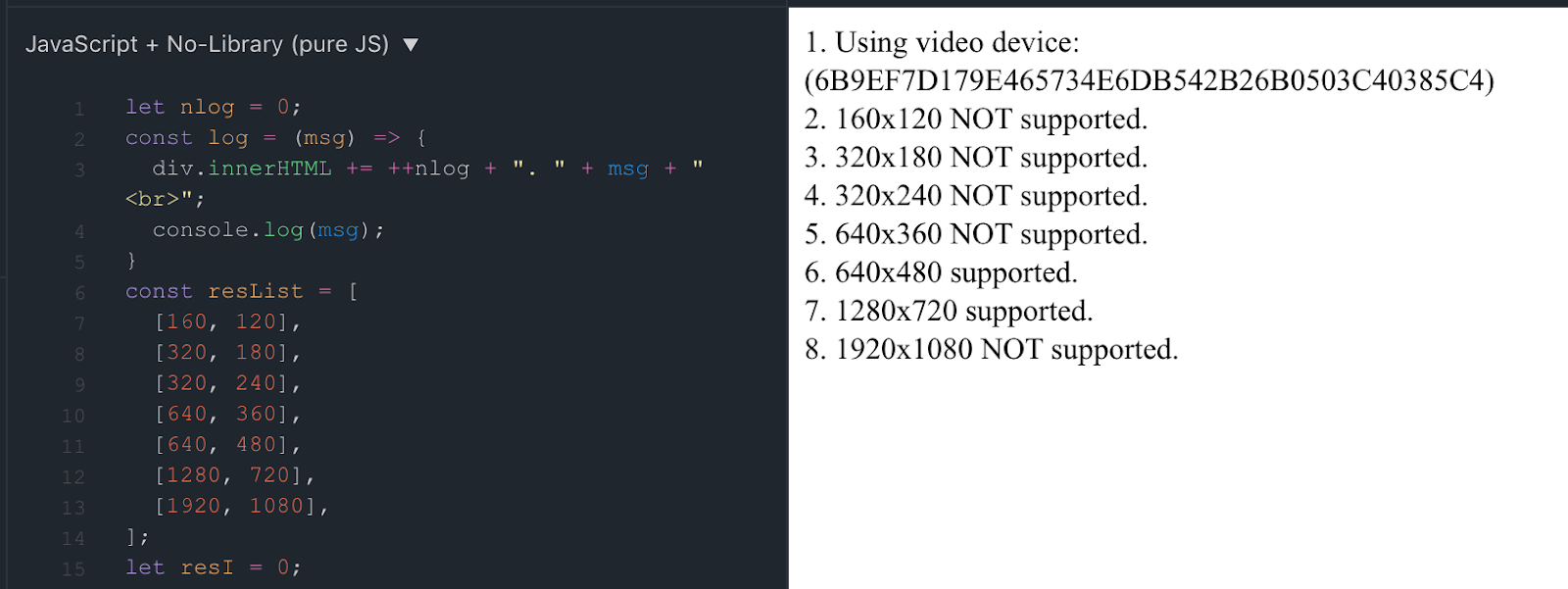

No low/limited video resolutions

UPDATE 2019-08-18:

Unfortunately this bug has only gotten worse in iOS 12, as their attempt to fix it broke the sending of video to peer connections for non-standard resolutions. On the positive side the issue does seem to be fully fixed in the latest iOS 13 Beta: https://bugs.webkit.org/show_bug.cgi?id=195868

Visiting https://jsfiddle.net/thehunmonkgroup/kmgebrfz/15/ (or the webrtcHacks WebRTC-Camera-Resolution project) in a WebRTC-compatible browser will give you a quick analysis of common resolutions that are supported by the tested device/browser combination. You’ll notice that in Safari on both MacOS and iOS, there aren’t any available low video resolutions such as the industry standard QQVGA, or 160×120 pixels. These small resolutions are pretty useful for serving thumbnail-sized videos: think of the filmstrip of users in a Google Hangouts call, for example.

Now you could just send whatever the lowest available native resolution is along the peer connection and let the receiver’s browser downscale the video, but you’ll run the risk of saturating the download bandwidth for users that have less speedy internet in mesh/SFU scenarios.

I’ve worked around this issue by restricting the bitrate of the sent video, which is a fairly quick and dirty compromise. Another solution that would take a bit more work is to handle downscaling the video stream in your app before passing it to the peer connection, although that will result in the client’s device spending some CPU cycles.

Example code:

- https://webrtc.github.io/samples/src/content/peerconnection/bandwidth/
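One common way to restrict the bitrate is to add a b=AS line (a bandwidth cap in kilobits per second) to the video section of the SDP before applying it. The sketch below is my own illustration of that technique, not the code from the sample above. It assumes CRLF line endings and that the video m-section carries its own c= line, which is what browsers typically generate.

```javascript
// Returns a copy of `sdp` with a single b=AS:<kbps> line in each video
// m-section, placed directly after that section's c= line.
function limitVideoBandwidth(sdp, kbps) {
  const out = [];
  let inVideo = false;
  for (const line of sdp.split('\r\n')) {
    if (line.startsWith('m=')) inVideo = line.startsWith('m=video');
    // Drop any existing AS cap in the video section so it is not duplicated.
    if (inVideo && line.startsWith('b=AS:')) continue;
    out.push(line);
    if (inVideo && line.startsWith('c=')) out.push('b=AS:' + kbps);
  }
  return out.join('\r\n');
}

// Example usage (inside an async function, before applying the answer):
// answer.sdp = limitVideoBandwidth(answer.sdp, 250);
// await pc.setRemoteDescription(answer);
```

Not every browser honors b=AS in the same way, so treat the cap as a hint and verify the resulting bitrate on the devices you care about.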

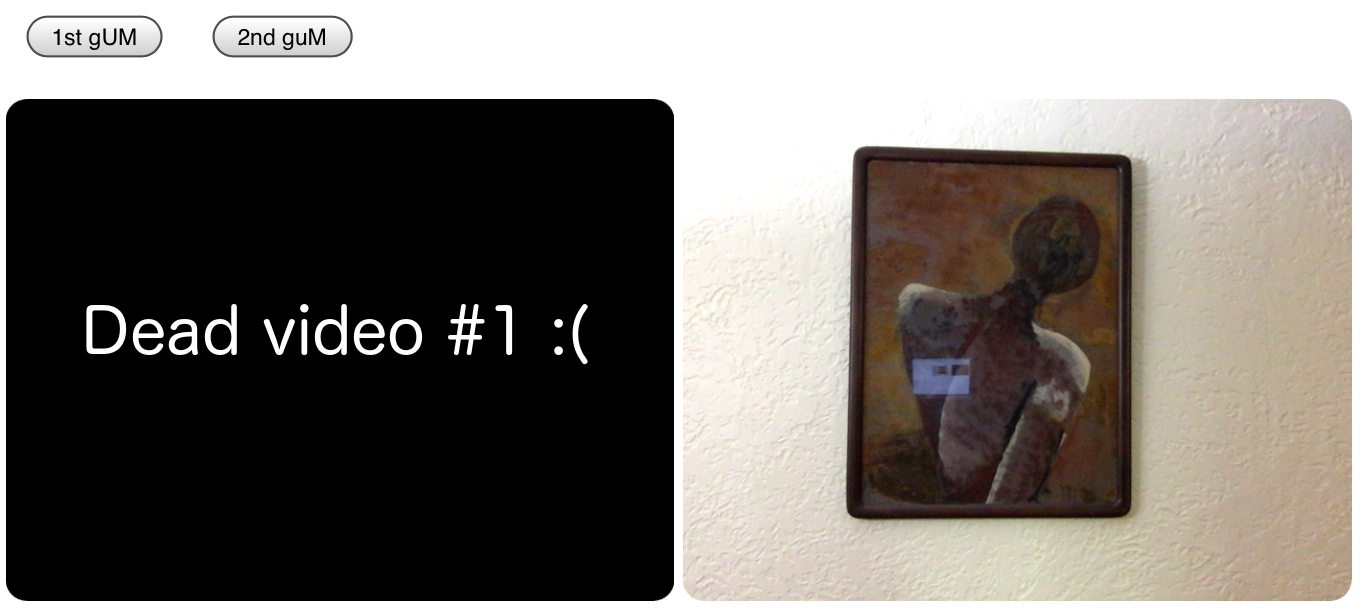

New getUserMedia() request kills existing stream track

If your application grabs media streams from multiple getUserMedia() requests, you are likely in for problems with iOS. From my testing, the issue can be summarized as follows: if getUserMedia() requests a media type requested in a previous getUserMedia(), the previously requested media track’s muted property is set to true, and there is no way to programmatically unmute it. Data will still be sent along a peer connection, but it’s not of much use to the other party with the track muted! This limitation is currently expected behavior on iOS.

I was able to successfully work around it by:

- Grabbing a global audio/video stream early on in my application’s lifecycle

- Using MediaStream.clone(), MediaStream.addTrack(), MediaStream.removeTrack() to create/manipulate additional streams from the global stream without calling getUserMedia() again.

source: https://gist.github.com/thehunmonkgroup/2c3be48a751f6b306f473d14eaa796a0

See this post for more: https://developer.mozilla.org/en-US/docs/Web/API/MediaStream and this related issue: https://bugs.webkit.org/show_bug.cgi?id=179363
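A minimal sketch of the workaround described above, not the gist's code: build each additional stream from clones of the global stream's tracks instead of asking for media again. The function name and the StreamCtor parameter (pass MediaStream in a browser) are assumptions for illustration.

```javascript
// Builds a new stream holding clones of the global stream's tracks whose
// kind ('audio' or 'video') appears in `kinds`. No new capture is requested.
function deriveStream(globalStream, kinds, StreamCtor) {
  const derived = new StreamCtor();
  globalStream.getTracks()
    .filter((track) => kinds.includes(track.kind))
    .forEach((track) => derived.addTrack(track.clone()));
  return derived;
}

// Example usage in a page (inside an async function):
// const globalStream = await navigator.mediaDevices.getUserMedia({ audio: true, video: true });
// const audioOnly = deriveStream(globalStream, ['audio'], MediaStream);
```

Because the tracks are clones, stopping or disabling a track on a derived stream leaves the global stream's tracks untouched.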

Managing Media Devices

Media device ids change on page reload.

This has been improved as of iOS 12.2, where device IDs are now stable across browsing sessions after getUserMedia() has been called once. However, device IDs are still not preserved across browser sessions, so this improvement isn’t really helpful for storing a user’s device preferences longer term. For more info, see https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

Many applications include support for user selection of audio/video devices. This eventually boils down to passing the deviceId to getUserMedia() as a constraint.

Unfortunately for you as a developer, as part of Webkit’s security protocols, random deviceIds are generated for all devices on each new page load. This means, unlike every other platform, you can’t simply stuff the user’s selected deviceId into persistent storage for future reuse.

The cleanest workaround I’ve found for this issue is:

- Store both device.deviceId and device.label for the device the user selects

- Try using the saved deviceId

- If that fails, enumerate the devices again, and try looking up the deviceId from the saved device label.

On a related note: Webkit further prevents fingerprinting by only exposing a user’s actual available devices after the user has granted device access. In practice, this means you need to make a getUserMedia() call before you call enumerateDevices().

source: https://gist.github.com/thehunmonkgroup/197983bc111677c496bbcc502daeec56
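The lookup part of that workaround can be sketched as a small pure function. This is my own illustration rather than the gist's code: it checks the saved deviceId against the current device list first, then falls back to the saved label. It assumes you pass in the result of enumerateDevices() already filtered to the kind of device you are restoring.

```javascript
// `devices` is an array of { deviceId, label } objects (for example the
// output of enumerateDevices(), filtered by kind). `saved` is the
// { deviceId, label } pair stored when the user picked a device.
// Returns a usable deviceId, or null when nothing matches.
function resolveDeviceId(devices, saved) {
  const byId = devices.find((d) => d.deviceId === saved.deviceId);
  if (byId) return byId.deviceId;
  const byLabel = devices.find((d) => d.label === saved.label);
  return byLabel ? byLabel.deviceId : null;
}

// Example usage in a page (inside an async function, after getUserMedia()
// has been granted so that labels are populated):
// const all = await navigator.mediaDevices.enumerateDevices();
// const cameras = all.filter((d) => d.kind === 'videoinput');
// const deviceId = resolveDeviceId(cameras, savedCamera);
```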

Related issue: https://bugs.webkit.org/show_bug.cgi?id=179220

Related post: https://webkit.org/blog/7763/a-closer-look-into-webrtc

Speaker selection not supported

Webkit does not yet support HTMLMediaElement.setSinkId(), which is the API method used for assigning audio output to a specific device. If your application includes support for this, you’ll need to make sure it can handle cases where the underlying API support is missing.

source: https://gist.github.com/thehunmonkgroup/1e687259167e3a48a55cd0f3260deb70
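A minimal sketch of handling the missing API, independent of the gist above: feature-detect setSinkId on the media element and skip speaker selection when it is absent. The function names are my own.

```javascript
// True when the media element exposes setSinkId().
function supportsSpeakerSelection(mediaElement) {
  return typeof mediaElement.setSinkId === 'function';
}

// Routes audio to `sinkId` when possible. Resolves to true if the output
// device was applied and false if the browser lacks setSinkId().
function applySpeaker(mediaElement, sinkId) {
  if (!supportsSpeakerSelection(mediaElement)) return Promise.resolve(false);
  return mediaElement.setSinkId(sinkId).then(() => true);
}

// Example usage in a page: hide the speaker picker when unsupported.
// speakerPicker.hidden = !supportsSpeakerSelection(audioElement);
```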

Related issue: https://bugs.webkit.org/show_bug.cgi?id=179415

PeerConnections & Calling

Beware, no vp8 support.

Support for VP8 has now been added as of iOS 12.2. See https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

While the W3C spec clearly states that support for the VP8 video codec (along with the H.264 codec) is to be implemented, Apple has thus far chosen to not support it. Sadly, this is anything but a technical issue, as libwebrtc includes VP8 support, and Webkit actively disables it.

So at this time, my advice to achieve the best interoperability in various scenarios is:

- Multiparty MCU – make sure that H.264 is a supported codec

- Multiparty SFU – use H.264

- Multiparty Mesh and peer to peer – pray everyone can negotiate a common codec

I say best interop because while this gets you a long way, it won’t be all the way. For example, Chrome for Android does not support software H.264 encoding yet. In my testing, many (but not all) Android phones have hardware H.264 encoding, but those that are missing hardware encoding will not work in Chrome for Android.
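When debugging a failed negotiation, it can help to see which video codecs each side actually offered. The sketch below is an illustration with a function name of my own choosing; it pulls codec names out of the a=rtpmap lines in the video sections of an SDP blob. It also returns helper formats such as RTX, RED and ULPFEC when they are present, so filter those out if you only care about the primary codecs.

```javascript
// Returns the upper-cased codec names found in a=rtpmap lines of the video
// m-sections of `sdp`, without duplicates, in order of first appearance.
function videoCodecsInSdp(sdp) {
  const codecs = new Set();
  let inVideo = false;
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith('m=')) inVideo = line.startsWith('m=video');
    const match = inVideo && /^a=rtpmap:\d+ ([^/]+)\//.exec(line);
    if (match) codecs.add(match[1].toUpperCase());
  }
  return [...codecs];
}

// Example usage (inside an async function):
// const offer = await pc.createOffer();
// const canSendH264 = videoCodecsInSdp(offer.sdp).includes('H264');
```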

Associated bug reports:

- https://bugs.webkit.org/show_bug.cgi?id=167257

- https://bugs.webkit.org/show_bug.cgi?id=173141

- https://bugs.chromium.org/p/chromium/issues/detail?id=719023

Send/receive only streams

As previously mentioned, iOS doesn’t support the legacy WebRTC APIs. However, not all browser implementations fully support the current specification either.

As of this writing, a good example is creating a send only audio/video peer connection. iOS doesn’t support the legacy RTCPeerConnection.createOffer() options of offerToReceiveAudio / offerToReceiveVideo, and the current stable Chrome doesn’t support the RTCRtpTransceiver spec by default.
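One way to cover both cases is to feature-detect addTransceiver() and fall back to the legacy offer options when it is missing. This is a minimal sketch under that assumption, with a function name of my own; it does not handle browsers that expose addTransceiver() but throw when it is called.

```javascript
// Adds the stream's tracks to `pc` as send-only and returns the options
// object to pass to pc.createOffer().
function prepareSendOnly(pc, stream) {
  if (typeof pc.addTransceiver === 'function') {
    // Current spec: direction is declared per transceiver.
    stream.getTracks().forEach((track) => {
      pc.addTransceiver(track, { direction: 'sendonly', streams: [stream] });
    });
    return {};
  }
  // Legacy path: add the tracks and decline to receive in the offer options.
  stream.getTracks().forEach((track) => pc.addTrack(track, stream));
  return { offerToReceiveAudio: false, offerToReceiveVideo: false };
}

// Example usage (inside an async function):
// const offer = await pc.createOffer(prepareSendOnly(pc, localStream));
// await pc.setLocalDescription(offer);
```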

Other more esoteric bugs and limitations

There are certainly other corner cases you can hit that seem a bit out of scope for this post. However, an excellent resource should you run aground is the Webkit issue queue, which you can filter just for WebRTC-related issues: https://bugs.webkit.org/buglist.cgi?component=WebRTC&list_id=4034671&product=WebKit&resolution=---

Remember, Webkit/Apple’s implementation is young

It’s still missing some features (like the speaker selection mentioned above), and in my testing isn’t as stable as the more mature implementation in Google Chrome.

There have also been some major bugs — capturing audio was completely broken for the majority of the iOS 12 Beta release cycle (thankfully they finally fixed that in Beta 8).

Apple’s long-term commitment to WebRTC as a platform isn’t clear, particularly because they haven’t released much information about it beyond basic support. As an example, the previously mentioned lack of VP8 support is troubling with respect to their intention to honor the agreed upon W3C specifications.

These are things worth thinking about when considering a browser-native implementation versus a native app. For now, I’m cautiously optimistic, and hopeful that their support of WebRTC will continue, and extend into other non-Safari browsers on iOS.

{“author”: “ Chad Phillips “}


Reader Interactions

September 7, 2018 at 9:42 am

One of the most detailed posts I’ve seen on the subject; thank you Chad, for sharing.

September 11, 2018 at 7:04 am

Please also note that Safari does not support data channels.

September 11, 2018 at 12:40 pm

@JSmitty, all of the ‘RTCDataChannel’ examples at https://webrtc.github.io/samples/ do work in Safari on MacOS, but do not currently work in Safari on iOS 11/12. I’ve filed https://bugs.webkit.org/show_bug.cgi?id=189503 and https://github.com/webrtc/samples/issues/1123 — would like to get some feedback on those before I incorporate this info into the post. Thanks for the heads up!

September 26, 2018 at 2:44 pm

OK, so I’ve confirmed data channels DO work in Safari on iOS, but there’s a caveat: iOS does not include local ICE candidates by default, and many of the data channel examples I’ve seen depend on that, as they’re merely sending data between two peer connections on the same device.

See https://bugs.webkit.org/show_bug.cgi?id=189503#c2 for how to temporarily enable local ICE on iOS.

January 22, 2020 at 4:21 pm

Great article. Thanks Chad & Chad for sharing your expertise.

As to DataChannel support. Looks like Safari officially still doesn’t support it according to the support matrix. https://developer.mozilla.org/en-US/docs/Web/API/RTCDataChannel

My own testing shows that DataChannel works between two Safari browser windows. However at this time (Jan 2020) it does not work between Chrome and Safari windows. Also fails between Safari and aiortc (Python WebRTC provider). DataChannel works fine between Chrome and aiortc.

A quick way to test this problem is via sharedrop.io Transferring files works fine between same brand browser windows, but not across brands.

Hope Apple is working on the compatibility issues with Chrome.

September 13, 2018 at 2:37 pm

Nice summary Chad. Thanks for this! –

September 18, 2018 at 4:29 pm

Very good post, Chad. Just what I was looking for. Thanks for sharing this knowledge. 🙂

October 4, 2018 at 10:11 am

Thanks for this Chad, currently struggling with this myself, where a portable ‘web’ app is being written.. I’m hopeful it will creep into wkwebview soon!

October 5, 2018 at 2:43 am

Thanks for detailing the issues.

One suggestion for any future article would be including the iOS Safari limitation on simultaneous playing of multiple elements with audio present.

This means refactoring so that multiple (remote) audio sources are rendered by a single element.

October 5, 2018 at 9:46 am

There’s a good bit of detail/discussion about this limitation here: https://bugs.webkit.org/show_bug.cgi?id=176282

Media servers that mix the audio are a good solution.

December 18, 2018 at 1:10 pm

The same issue I’m facing New getUserMedia() request kills existing stream track. Let’s see whether it helps me or not.

December 19, 2018 at 6:23 am

iOS calling getUserMedia() again kills video display of first getUserMedia(). This is the issue I’m facing but I want to pass the stream from one peer to another peer.

April 26, 2019 at 12:07 am

Thank you Chad for sharing this, I was struggling with the resolution issue on iOS and I was not sure why I was not getting the full hd streaming. Hope this will get supported soon.

May 21, 2019 at 12:54 am

VP8 is a nightmare. I work on a platform where we publish user-generated content, including video, and the lack of support for VP8 forces us to do expensive transcoding on these videos. I wonder why won’t vendors just settle on a universal codec for mobile video.

August 18, 2019 at 2:17 pm

VP8 is supported as of iOS 12.2: https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

July 3, 2019 at 3:38 am

Great Post! Chad I am facing an issue with iOS Safari, The issue is listed below. I am using KMS lib for room server handling and calling, There wasn’t any support for Safari / iOS safari in it, I added adapter.js (shim) to make my application run on Safari and iOS (Safari). After adding it worked perfectly on Safari and iOS, but when more than 2 persons join the call, The last added remote stream works fine but the existing remote stream(s) get struck/disconnected which means only peer to peer call works fine but not multiple remote streams. Can you please guide how to handle multiple remote streams in iOS (Safari). Thanks

July 3, 2019 at 1:45 pm

Your best bet is probably to search the webkit bugtracker, and/or post a bug there.

August 7, 2019 at 6:40 pm

No low/limited video resolutions: 1920×1080 not supported -> are you talking about IOS12 ? Because I’m doing 4K on IOS 12.3.1 with janus echo test with iphone XS Max (only one with 4K front cam) Of course if I run your script on my MBP it will say fullHD not supported -> because the cam is only 720p.

August 18, 2019 at 2:20 pm

That may be a standard camera resolution on that particular iPhone. The larger issue has been that only resolutions natively supported by the camera have been available, leading to difficulty in reliably selecting resolutions in apps, especially lower resolutions like those used in thumbnails.

Thankfully, this appears to be fully addressed in the latest beta of iOS 13.

April 18, 2020 at 7:01 am

How many days of work I lost before finding this article! It’s amazing and explains a lot of the reasons for all the strange bugs in iOS. Thank you so much.

September 21, 2020 at 11:38 am

Hi, i’m having issues with Safari on iOS. In the video tag, adding autoplay and playsinline doesn’t work on our Webrtc implementation. Obviously it works fine in any browser on any other platform.

I need to add the controls tag, then manually go to full screen and press play.

Is there a way to play the video inside the web page ?

December 9, 2020 at 2:35 am

First of all, thanks for detailing the issues.

This article is unique to provide many insides for WebRTC/Safari related issues. I learned a lot and applied some the techniques in our production application.

But I had very unique case which I am struggling with right now, as you might guess with Safari. I would be very grateful if you can help me or at least to guide to the right direction.

We have a WebRTC-based one-to-one video chat. One side is always a mobile app (the host), which is the initiator, and the other side is always a browser, either desktop or mobile. Making the app work across different networks was a pain in the neck until recently, but we managed to fix this by changing some configuration. The issue was that on different networks WebRTC was not generating relay candidates and, most of the time, not generating server reflexive candidates either; as you know, without at least STUN-provided candidates the parties cannot establish any connection. The solution was simple, although it took a lot of searching on Google ( https://github.com/pion/webrtc/issues/810 ): we found out that mobile data providers mostly assign IPv6 addresses to mobile users, and when users were on a mobile data plan instead of local Wi-Fi, they could not connect to each other.

By the way, we are using a cloud provider for STUN/TURN servers (Xirsys). When we asked their technical support team, they said their servers should handle IPv6-based requests, but in practice it did not work. So we updated the RTCPeerConnection configuration, namely by adding optional constraints (these optional constraints are not officially documented; we found them in unofficial sources). The change was just disabling IPv6 on both the mobile app (iOS and Android) and the browser. After this change, it worked perfectly until we found out that Safari was not working at all. So we reverted the change for Safari and disabled IPv6 for the other cases (Chrome, Firefox, Android browsers).

```javascript
const iceServers = [
  { urls: "stun:" },
  { urls: ["turn:", "turn:" /* … */], credential: "secret", username: "secret" }
];

let RTCConfig;
// just dirty browser detection
const ua = navigator.userAgent.toLocaleLowerCase();
const isSafari = ua.includes("safari") && !ua.includes("chrome");

if (isSafari) {
  RTCConfig = iceServers;
} else {
  RTCConfig = { iceServers, constraints: { optional: [{ googIPv6: false }] } };
}
```

If I wrap the iceServers array inside an envelope object together with the optional constraints and use it in new RTCPeerConnection(RTCConfig), it throws an error saying “Attempted to assign readonly property”, pointing into safari_shim.js : 255

Can you please help with this issue, our main customers use iPhone, so making our app work in Safari across different networks are very critical to our business. If you provide some kind of paid consultation, it is also ok for us

Looking forward to hearing from you

July 13, 2022 at 2:42 pm

Thanks for the great summary regarding Safari/iOS. The workaround for the low-bandwidth issue is very interesting. I played with the sample and it worked as expected. It’s played on the same device, isn’t it? When I tried to add a similar “a=AS:500\r\n” to the SDP and tested it on different devices (one a Windows laptop running Chrome, the other an iPad running Safari), it did not seem to work. The symptom was that the stream was neither received nor sent; in a word, the connections for media communication were not there. I checked the SDP, and it looks like this:

sdp”: { “type”: “offer”, “sdp”: ” v=0\r\n o=- 3369656808988177967 2 IN IP4 127.0.0.1\r\n s=-\r\n t=0 0\r\n a=group:BUNDLE 0 1 2\r\n a=extmap-allow-mixed\r\n a=msid-semantic: WMS 7BLOSVujr811EZHSiFZI2t8yMML8LpOgo0in\r\n m=audio 9 UDP/TLS/RTP/SAVPF 111 63 103 104 9 0 8 106 105 13 110 112 113 126\r\n c=IN IP4 0.0.0.0\r\n b=AS:500\r\n … }

Also I didn’t quite understand the statement in the article. “I’ve worked around this issue by restricting the bitrate of the sent video, which is a fairly quick and dirty compromise. Another solution that would take a bit more work is to handle downscaling the video stream in your app before passing it to the peer connection” – don’t the both scenarios work on the sent side?


Developing for Safari 11


Version Compatibility

Safari, from version 11, on macOS and iOS is supported in twilio-video.js 1.2.1 and greater. Earlier versions of Safari are not compatible with twilio-video.js because they do not support WebRTC.

Safari, from version 12.1, includes support for VP8 and VP8 simulcast. twilio-video.js 1.2.1 will automatically offer VP8 when supported by Safari. However, if you are looking at adding VP8 simulcast on Safari 12.1+, twilio-video.js 1.17.0 or higher is required.

The rest of this document discusses best practices for Safari < 12.1 as those versions do not include VP8.

Codec Selection

Safari < 12.1 supports only the H.264 codec.

Programmable Video uses WebRTC, a standard set of browser APIs for real-time audio and video in the browser. Chrome, Firefox, Edge, and Safari web browsers all support WebRTC APIs, but each has its own nuances.

When it comes to video encoding, Chrome, Edge, and Firefox support two video codecs: VP8 and H.264. Safari only supports H.264 today. This means that other browsers and mobile apps must send H.264-encoded video if they want Safari users to see the video tracks they share.

Our goal is to make it so that you don't need to worry about codec selection, but there are a few things you'll want to know as you plan support for Safari < 12.1 in your application.

H.264 in Peer-to-Peer Rooms

If your app uses Peer-to-Peer Rooms, the codec selection should be seamless: Chrome and Firefox both support H.264, and will automatically send and receive H.264 video tracks to any Safari < 12.1 users who join the Room.

If you ship a native mobile version of your app and use Peer-to-Peer Rooms, you'll need to use version 2.0.0-preview1 of our iOS or Android SDK to send and receive H.264 in a Peer-to-Peer Room. Earlier versions of our native SDKs will not be able to send or receive video to Safari < 12.1 devices, because they do not support H.264.

You can use the codec preferences API to force the browser to use a specific video codec.
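As a rough illustration, the sketch below builds a connect options object that asks for H.264 first. It assumes the preferredVideoCodecs connect option of twilio-video.js; the helper name buildConnectOptions and the room name are hypothetical, so check the SDK reference for the exact option shape in the version you use.

```javascript
// Builds options for Twilio.Video.connect(). When `preferH264` is true the
// options ask the SDK to prefer H.264 for video.
function buildConnectOptions(roomName, preferH264) {
  const options = { name: roomName };
  if (preferH264) options.preferredVideoCodecs = ['H264'];
  return options;
}

// Example usage in a page that has loaded twilio-video.js:
// Twilio.Video.connect(token, buildConnectOptions('my-room', true));
```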

H.264 in Group Rooms

If your app uses Group Rooms, you'll need to make a decision about how you want to support Safari < 12.1.

When this option is set, all endpoints must use H.264 codec when participating in the Room. See the Rooms REST API for more information.

Interoperability Between Safari < 12.1 and iOS and Android Native Apps

We've added support for H.264 codec in our Android and iOS SDKs starting at version 2.0.0-preview1. If you ship a native mobile version of your app, and you want it to be able to talk to Safari, you'll need to update to 2.0.0-preview1 or higher.

Keep in mind that if you create Rooms with only H.264 support as described above, apps running earlier versions of our mobile SDKs will not be able to connect.

Other Safari < 12.1 Considerations

Safari < 12.1 can only capture audio and video from one tab at a time.

Keep this in mind especially while you're developing your app and testing locally--you'll need to use a second browser if you want to test bi-directional video on your local machine.

Safari < 12.1 will not allow you to capture audio and video on insecure sites

Your site must be served over HTTPS in order to access the user's microphone and camera. This can make development difficult, so see the tips below for details.

Tips for Developing on Safari < 12.1

If you're building a video app for Safari users, we recommend downloading the Safari Technology Preview. The Technology Preview release has some additional options that make development and debugging a bit easier. You can find the options under the menu Develop > WebRTC.

A couple of useful options:

- Enabling Media Capture on Insecure Sites lets you capture audio and video from the microphone and camera without using HTTPS.

- Use Mock Capture Devices simulates audio and video input in the browser, which can come in handy for troubleshooting or automated testing.

Read more about developing WebRTC applications for Safari < 12.1 on the WebKit blog.

[SOLVED] Play .webm video on safari

Does anyone know how to play .webm videos on Safari?

Normally, for video textures, I use mp4 files. There are dozens of converters on the net, so it shouldn’t be too tough.

But I need to play transparent video. WebM supports transparent video.

Safari hasn’t fully implemented Webm support yet, so not much you can do until Apple adds that in place. There used to be a number of plug-ins for desktop Safari that enabled Webm playback but support for plug-ins has been dropped.

For the moment if you would like to play a transparent video in Playcanvas you could use some form of chromakey shader with a green-screen mp4 video:

As you mentioned, the quality is poor; that’s why I moved to transparent video. Is there any other way of playing .webm videos in iOS Safari or Chrome? It works fine on Android.

https://caniuse.com/#search=webm

Partial Support: Only supports VP8 used in WebRTC.

Chrome and the other browsers on iOS still use the webview of Safari for rendering (Apple security rule). So basically they can’t have a different feature set than what Safari provides.

Indeed there is partial support of the Google VP8 codec in WebRTC, as Will said, on iOS though I have no idea if that can be used to decode a file, most likely it used only to compress the camera stream among peers.

Thank you so much, Leonidas and Will. After surfing some blogs, I came to the conclusion that I can’t do it. Let me do it for Android only.

Agora Releases VP9 Video Support for Safari

Agora is excited to be the first real-time video platform-as-a-service (PaaS) provider to release full support for VP9 in browsers including Safari. Full VP9 support comes with the release of Web SDK 14.9.2. VP9 provides twice the quality of the VP8 codec for the same bitrate. In my 12 years of WebRTC expertise this is a truly impressive milestone for the industry.

What is VP9 and why is it important?

VP9 is a video coding format developed by Google. VP9 is the successor to VP8, which is currently the default for real-time video on the web. VP9 enables twice the compression of VP8 and is customized for video resolutions greater than 1080p (such as UHD).

VP9 is a significant advancement in the world of video codecs for several reasons:

- Improved Compression: VP9 provides better compression than its predecessor (VP8) and is often compared favorably to HEVC/H.265 in terms of compression efficiency. Better compression means smaller file sizes without compromising video quality, which leads to faster streaming and reduced bandwidth usage.

- Adaptive Streaming: VP9 is well-suited for adaptive bitrate streaming, such as YouTube’s Dynamic Adaptive Streaming over HTTP (DASH). This adaptability ensures that videos stream smoothly across various network conditions.

- Support for 4K and Beyond: VP9 is designed to handle high-resolution video, making it an excellent choice for 4K streaming and even resolutions beyond that.

- Broad Adoption: Major platforms, like YouTube, have adopted VP9 due to its efficiency, leading to a significant portion of internet traffic being encoded in VP9.

- Power Efficiency: For mobile devices and other battery-powered gadgets, VP9 is designed to decode efficiently, conserving power and extending battery life.

- Web Integration: Being a product from Google, VP9 has robust support in browsers, particularly in Chrome. This integration is essential for the web, especially for platforms that rely heavily on video content.

In summary, VP9 is a big deal because it represents a combination of cost-saving (due to being royalty-free), technological advancement (with improved compression and resolution support), and widespread industry adoption, all of which benefits both content creators and consumers.
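To make the codec choice concrete, the following is a minimal sketch of how a plain WebRTC application might ask for VP9 ahead of VP8. It is not Agora's SDK code. The `preferCodec` helper is hypothetical; the browser calls named in the comments (`RTCRtpReceiver.getCapabilities()` and `RTCRtpTransceiver.setCodecPreferences()`) are standard WebRTC APIs, but whether VP9 appears in the capability list depends on the browser.

```typescript
// Only the field this sketch needs; real capability entries carry more.
interface CodecLike {
  mimeType: string;
}

// Moves every entry matching mimeType to the front, keeping relative order otherwise.
function preferCodec<T extends CodecLike>(codecs: T[], mimeType: string): T[] {
  const wanted = mimeType.toLowerCase();
  const preferred: T[] = [];
  const rest: T[] = [];
  for (const codec of codecs) {
    if (codec.mimeType.toLowerCase() === wanted) {
      preferred.push(codec);
    } else {
      rest.push(codec);
    }
  }
  return preferred.concat(rest);
}

// In a browser (assumes `transceiver` is an existing video RTCRtpTransceiver):
//   const caps = RTCRtpReceiver.getCapabilities("video");
//   if (caps) transceiver.setCodecPreferences(preferCodec(caps.codecs, "video/VP9"));

// Stand-in capability list so the sketch runs outside a browser:
const sampleCodecs: CodecLike[] = [
  { mimeType: "video/VP8" },
  { mimeType: "video/H264" },
  { mimeType: "video/VP9" },
  { mimeType: "video/rtx" },
];

const ordered = preferCodec(sampleCodecs, "video/VP9");
console.log(ordered.map((c) => c.mimeType).join(", "));
```

If the peer does not support VP9, negotiation simply falls through to the next codec in the list, which is why the remaining entries are kept rather than dropped.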

Challenges with VP9 on Safari

While VP9 has been around since 2016, it has presented challenges with a lack of hardware support and Safari browser support. To this day, Safari does not provide network and compute adaptation other than to reduce the outgoing frame-rate and bit-rate. This means that a video stream coming from an iOS device will not look good on the receiving end when the bitrate drops or the device isn’t capable of encoding 720p video at 30fps.

Comparing VP9 to VP8

This comparison shows video coming from two identical iPhone 11s connected to the same Wi-Fi access point in a high packet loss office environment. On the left is VP8 and on the right is VP9. The bitrate is limited to 1 Mbps on each device and the difference in quality is impressive.

Agora now offers full VP9 support for Safari

Agora has now solved the issues with VP9 in the Safari browser by providing dynamic resolution scaling on Safari 16.0 and later versions for desktop and mobile to adapt to the needs of a low-compute device or limited uplink. This allows the VP9 picture in Safari to remain stable with a high frame rate and no blockiness as seen in Google Chrome and other browsers.
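The general idea of adapting resolution to the available uplink can be illustrated with a small sketch. This is not Agora's implementation, which is not described here; the bitrate thresholds and the `pickScale` helper are assumptions chosen for illustration. The browser calls in the comments (`RTCRtpSender.getParameters()`, `setParameters()`, and the `scaleResolutionDownBy` encoding parameter) are standard WebRTC APIs.

```typescript
// One rung of a hypothetical resolution ladder for a 1280x720 capture.
interface Rung {
  minKbps: number;               // use this rung at or above this bitrate
  scaleResolutionDownBy: number; // divisor applied to width and height
}

// Illustrative thresholds only; a real system would tune these per codec and device.
const ladder: Rung[] = [
  { minKbps: 1000, scaleResolutionDownBy: 1 },  // 1280x720
  { minKbps: 500, scaleResolutionDownBy: 1.5 }, // about 853x480
  { minKbps: 0, scaleResolutionDownBy: 2 },     // 640x360
];

// Picks the first rung whose threshold the available bitrate meets.
function pickScale(availableKbps: number): number {
  for (const rung of ladder) {
    if (availableKbps >= rung.minKbps) return rung.scaleResolutionDownBy;
  }
  // Only reached for negative input; fall back to the smallest picture.
  return ladder[ladder.length - 1].scaleResolutionDownBy;
}

// In a browser (assumes `sender` is the video RTCRtpSender and `kbps` is an
// uplink estimate obtained elsewhere, for example from getStats()):
//   const params = sender.getParameters();
//   params.encodings[0].scaleResolutionDownBy = pickScale(kbps);
//   await sender.setParameters(params);

console.log(pickScale(1200), pickScale(600), pickScale(100));
```

Lowering resolution instead of only frame rate and bitrate lets the encoder spend its limited bits on fewer pixels, which is what keeps the picture from turning blocky.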

VP9 is the latest innovation from Agora that ensures video quality is maintained in all environments. Real-time video quality is vital for ensuring a seamless user experience, fostering clear and effective communication, and reflecting professionalism. In contexts ranging from business meetings to telemedicine, high-quality video is essential for accuracy, engagement, and credibility.

As technology advances, users increasingly expect superior video quality as a standard. It’s essential to provide a seamless user experience that fosters clear and effective communication and reduces visual strain during prolonged usage. To find out more about Agora’s other innovations in real-time video quality, see Revolutionizing Live Video Quality: Agora Unveils Next-Gen Enhancements.


Safari 15.0 VP9 support

I am using macOS Catalina due to design preferences. But Safari has updated to version 15.0, which claims support for the VP9 codec, which allows you to watch 4K content on YouTube. For some reason, I do not have such an opportunity - the maximum available resolution is 1080p. This is weird as my friend uses an iMac on Catalina and has 4K. My device is a MacBook Pro 16" late 2019.

I would appreciate your reply.

MacBook Pro 16″, macOS 10.15

Posted on Oct 18, 2021 1:18 PM


Oct 18, 2021 4:38 PM in response to Mansurius

There have recently been many reports of a broad range of new problems on this support site about the latest release of Safari v15. It is included in the downloads for Big Sur and Catalina. If you are experiencing these problems, you can use another browser such as Firefox or Chrome and they will work for you until a newer version of Safari is released. While some of the same issues keep arising, they are not consistent and some computers (like mine) seem to experience no problems.

Web video codec guide

Due to the sheer size of uncompressed video data, it's necessary to compress it significantly in order to store it, let alone transmit it over a network. Imagine the amount of data needed to store uncompressed video:

- A single frame of high definition (1920x1080) video in full color (4 bytes per pixel) is 8,294,400 bytes.

- At a typical 30 frames per second, each second of HD video would occupy 248,832,000 bytes (~249 MB).

- A minute of HD video would need 14.93 GB of storage.

- A fairly typical 30 minute video conference would need about 447.9 GB of storage, and a 2-hour movie would take almost 1.79 TB (that is, about 1,790 GB).
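The figures above can be reproduced with a few lines of arithmetic. This sketch uses the same assumptions as the list (1920x1080, 4 bytes per pixel, 30 frames per second, decimal units where 1 GB is 10^9 bytes); the function name is made up for illustration.

```typescript
// Size in bytes of uncompressed video with the given dimensions and duration.
function uncompressedBytes(
  width: number,
  height: number,
  bytesPerPixel: number,
  framesPerSecond: number,
  seconds: number,
): number {
  return width * height * bytesPerPixel * framesPerSecond * seconds;
}

const frameBytes = 1920 * 1080 * 4;                                // one frame
const secondBytes = uncompressedBytes(1920, 1080, 4, 30, 1);       // one second
const minuteBytes = uncompressedBytes(1920, 1080, 4, 30, 60);      // one minute
const conferenceBytes = uncompressedBytes(1920, 1080, 4, 30, 30 * 60);
const movieBytes = uncompressedBytes(1920, 1080, 4, 30, 2 * 60 * 60);

const GB = 1e9; // decimal gigabyte
const TB = 1e12; // decimal terabyte

console.log(frameBytes);                          // 8294400
console.log(secondBytes);                         // 248832000
console.log((minuteBytes / GB).toFixed(2));       // "14.93"
console.log((conferenceBytes / GB).toFixed(1));   // "447.9"
console.log((movieBytes / TB).toFixed(2));        // "1.79"
```

Expressed as a data rate, one second of this video is 248,832,000 bytes, or roughly 1.99 gigabits per second, before audio and protocol overhead.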

Not only is the required storage space enormous, but the network bandwidth needed to transmit an uncompressed video like that would be enormous, at 249 MB/sec—not including audio and overhead. This is where video codecs come in. Just as audio codecs do for the sound data, video codecs compress the video data and encode it into a format that can later be decoded and played back or edited.

Most video codecs are lossy, in that the decoded video does not precisely match the source. Some details may be lost; the amount of loss depends on the codec and how it's configured, but as a general rule, the more compression you achieve, the more loss of detail and fidelity will occur. Some lossless codecs do exist, but they are typically used for archival and storage for local playback rather than for use on a network.

This guide introduces the video codecs you're most likely to encounter or consider using on the web, summaries of their capabilities and any compatibility and utility concerns, and advice to help you choose the right codec for your project's video.

Common codecs

The following video codecs are those which are most commonly used on the web. For each codec, the containers (file types) that can support them are also listed. Each codec provides a link to a section below which offers additional details about the codec, including specific capabilities and compatibility issues you may need to be aware of.

Factors affecting the encoded video

As is the case with any encoder, there are two basic groups of factors affecting the size and quality of the encoded video: specifics about the source video's format and contents, and the characteristics and configuration of the codec used while encoding the video.

The simplest guideline is this: anything that makes the encoded video look more like the original, uncompressed, video will generally make the resulting data larger as well. Thus, it's always a tradeoff of size versus quality. In some situations, a greater sacrifice of quality in order to bring down the data size is worth that lost quality; other times, the loss of quality is unacceptable and it's necessary to accept a codec configuration that results in a correspondingly larger file.

Effect of source video format on encoded output

The degree to which the format of the source video will affect the output varies depending on the codec and how it works. If the codec converts the media into an internal pixel format, or otherwise represents the image using a means other than simple pixels, the format of the original image doesn't make any difference. However, things such as frame rate and, obviously, resolution will always have an impact on the output size of the media.

Additionally, all codecs have their strengths and weaknesses. Some have trouble with specific kinds of shapes and patterns, or aren't good at replicating sharp edges, or tend to lose detail in dark areas, or any number of possibilities. It all depends on the underlying algorithms and mathematics.

The degree to which these affect the resulting encoded video will vary depending on the precise details of the situation, including which encoder you use and how it's configured. In addition to general codec options, the encoder could be configured to reduce the frame rate, to clean up noise, and/or to reduce the overall resolution of the video during encoding.

Effect of codec configuration on encoded output

The algorithms used to encode video typically use one or more of a number of general techniques to perform their encoding. Generally speaking, any configuration option that is intended to reduce the output size of the video will probably have a negative impact on the overall quality of the video, or will introduce certain types of artifacts into the video. It's also possible to select a lossless form of encoding, which will result in a much larger encoded file but with perfect reproduction of the original video upon decoding.

In addition, each encoder utility may process the source video differently, resulting in differences in the output quality and/or size.

The options available when encoding video, and the values to be assigned to those options, will vary not only from one codec to another but depending on the encoding software you use. The documentation included with your encoding software will help you to understand the specific impact of these options on the encoded video.

Compression artifacts

Artifacts are side effects of a lossy encoding process in which the lost or rearranged data results in visibly negative effects. Once an artifact has appeared, it may linger for a while, because of how video is displayed. Each frame of video is presented by applying a set of changes to the currently-visible frame. This means that any errors or artifacts will compound over time, resulting in glitches or otherwise strange or unexpected deviations in the image that linger for a time.

To resolve this, and to improve seek time through the video data, periodic key frames (also known as intra-frames or i-frames) are placed into the video file. The key frames are full frames, which are used to repair any damage or artifact residue that's currently visible.
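A toy model shows why an error lingers and how a key frame clears it. In this sketch each "frame" is reduced to a single number, delta frames store only the change from the previous frame, and one delta is deliberately damaged to stand in for an artifact. The types and function names are invented for illustration and do not correspond to any real codec.

```typescript
// A key frame carries the full value; a delta frame carries only the change.
type Frame =
  | { kind: "key"; value: number }
  | { kind: "delta"; change: number };

// Emits a key frame every keyInterval frames and delta frames in between.
function encode(source: number[], keyInterval: number): Frame[] {
  const frames: Frame[] = [];
  for (let i = 0; i < source.length; i++) {
    if (i % keyInterval === 0) {
      frames.push({ kind: "key", value: source[i] });
    } else {
      frames.push({ kind: "delta", change: source[i] - source[i - 1] });
    }
  }
  return frames;
}

// Rebuilds each frame by applying deltas to whatever is currently displayed.
function decode(frames: Frame[]): number[] {
  const out: number[] = [];
  let current = 0;
  for (const frame of frames) {
    current = frame.kind === "key" ? frame.value : current + frame.change;
    out.push(current);
  }
  return out;
}

const source = [10, 12, 15, 15, 18, 20, 21, 25];
const frames = encode(source, 4); // key frames at indexes 0 and 4

// Simulate an artifact: the delta at index 1 should be 2 but arrives as 7.
frames[1] = { kind: "delta", change: 7 };

const decoded = decode(frames);
const errors = decoded.map((value, i) => value - source[i]);
console.log(errors.join(", ")); // 0, 5, 5, 5, 0, 0, 0, 0
```

The error introduced at frame 1 carries through frames 2 and 3 because each is built on the damaged picture, and it disappears at frame 4 when the next key frame replaces the whole picture.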

Aliasing is a general term for anything that upon being reconstructed from the encoded data does not look the same as it did before compression. There are many forms of aliasing; the most common ones you may see include:

Color edging

Color edging is a type of visual artifact that presents as spurious colors introduced along the edges of colored objects within the scene. These colors have no intentional color relationship to the contents of the frame.

Loss of sharpness

The act of removing data in the process of encoding video requires that some details be lost. If enough compression is applied, parts or potentially all of the image could lose sharpness, resulting in a slightly fuzzy or hazy appearance.

Lost sharpness can make text in the image difficult to read, as text—especially small text—is very detail-oriented content, where minor alterations can significantly impact legibility.

Ringing

Lossy compression algorithms can introduce ringing, an effect where areas outside an object are contaminated with colored pixels generated by the compression algorithm. This happens when an algorithm uses blocks that span a sharp boundary between an object and its background. This is particularly common at higher compression levels.

Note the blue and pink fringes around the edges of the star above (as well as the stepping and other significant compression artifacts). Those fringes are the ringing effect. Ringing is similar in some respects to mosquito noise, except that while the ringing effect is more or less steady and unchanging, mosquito noise shimmers and moves.

Ringing is another type of artifact that can make it particularly difficult to read text contained in your images.

Posterizing

Posterization occurs when the compression results in the loss of color detail in gradients. Instead of smooth transitions through the various colors in a region, the image becomes blocky, with blobs of color that approximate the original appearance of the image.

Note the blockiness of the colors in the plumage of the bald eagle in the photo above (and the snowy owl in the background). The detail of the feathers is largely lost due to these posterization artifacts.

Contouring or color banding is a specific form of posterization in which the color blocks form bands or stripes in the image. This occurs when the video is encoded with too coarse a quantization configuration. As a result, the video's contents show a "layered" look, where instead of smooth gradients and transitions, the transitions from color to color are abrupt, causing strips of color to appear.
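The effect of coarse quantization on a gradient can be seen with a small sketch. It takes a smooth ramp of 256 gray levels and snaps each value to the nearest of a small number of allowed levels. Real codecs quantize transform coefficients rather than raw pixel values, so this is a simplified stand-in for the idea, with made-up function names.

```typescript
// Snaps an 8-bit value (0-255) to the nearest of `levels` evenly spaced values.
function quantize(value: number, levels: number): number {
  const step = 255 / (levels - 1);
  return Math.round(Math.round(value / step) * step);
}

// Counts how many different values appear in the list.
function countDistinct(values: number[]): number {
  const seen: { [key: number]: boolean } = {};
  let count = 0;
  for (const value of values) {
    if (!seen[value]) {
      seen[value] = true;
      count++;
    }
  }
  return count;
}

// A smooth horizontal gradient: one pixel for each value from 0 to 255.
const gradient: number[] = [];
for (let v = 0; v <= 255; v++) gradient.push(v);

const fine = gradient.map((v) => quantize(v, 256));  // effectively unchanged
const coarse = gradient.map((v) => quantize(v, 8));  // only 8 allowed shades

console.log(countDistinct(fine));   // 256
console.log(countDistinct(coarse)); // 8
```

With only 8 shades available, runs of neighboring pixels collapse to the same value and the ramp turns into 8 flat bands with abrupt steps between them, which is the banding described above.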

In the example image above, note how the sky has bands of different shades of blue, instead of being a consistent gradient as the sky color changes toward the horizon. This is the contouring effect.

Mosquito noise

Mosquito noise is a temporal artifact that appears as a flickering haziness or shimmering (sometimes called edge busyness) that roughly follows the outside edges of objects with hard edges or sharp transitions between foreground objects and the background. The effect can be similar in appearance to ringing.

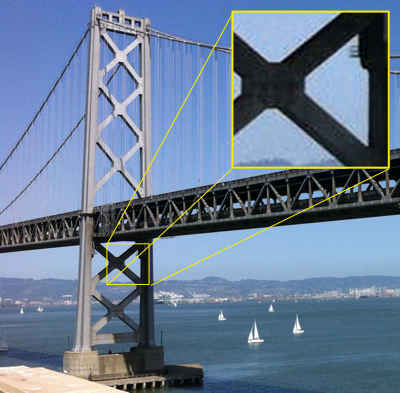

The photo above shows mosquito noise in a number of places, including in the sky surrounding the bridge. In the upper-right corner, an inset shows a close-up of a portion of the image that exhibits mosquito noise.