Time-frequency analysis using wavelets, Hanning windows and multitapers

Introduction, defining data segments of interest, reading and preprocessing the raw data, inspect the erfs, time-frequency analysis, (1) time-frequency analysis with hanning taper, fixed window length, visualization, (2) time-frequency analysis with hanning taper, frequency-dependent window length, (3) time-frequency analysis using morlet wavelets, (4) time-frequency analysis using multi-tapering, summary and suggested further reading.

In this tutorial we will demonstrate time-frequency analysis of a single subject's MEG data using a Hanning window, multitapers and wavelets. This tutorial also shows how to visualize time-frequency results (TFRs).

We assume that the reader already knows how to do basic preprocessing in FieldTrip. Since there are multiple events (left/right cue, fixation, stimulus onset, speed change, left/right response), the sequence of triggers in the dataset is considerably more complex than in the somatosensory experiment on the same subject. We will show how to parse the sequence of triggers, select the data segments of interest and preprocess the data.

There is no information in this tutorial about how to compare conditions, how to average over subjects, how to analyze at the source level or how to do statistical analysis on the time-frequency data. Some of these issues are covered in other tutorials (see Summary and suggested further reading).

Oscillatory components contained in the ongoing EEG or MEG signal often show power changes relative to experimental events. These signals are not necessarily phase-locked to the event and will not be represented in event related fields and potentials. The goal of this analysis is to compute and visualize event related changes in power by calculating time-frequency representations (TFRs). This will be done using analysis based on Fourier analysis and wavelets. The Fourier analysis will include the application of multitapers, which allow a better control of time and frequency smoothing.

Calculating time-frequency representations of power is done using a sliding time window. This can be done according to two principles: either the time window has a fixed length independent of frequency, or the time window decreases in length with increased frequency. For each time window the power is calculated. Prior to calculating the power one or more tapers are multiplied with the data. The aim of the tapers is to reduce spectral leakage and control the frequency smoothing.

We will work on a dataset of an visual change-detection experiment. Magneto-encephalography (MEG) data was collected using the 306-channel Neuromag Triux MEG system at NatMEG. The subject was instructed to fixate on the screen and press a left- or right-hand button upon a speed change of an inward moving stimulus Each trial started with the presentation of a cue pointing either rightward or leftward, indicating the response hand. Subsequently a fixation cross appeared. After a baseline interval of 1s, an inward moving circular sine-wave grating was presented. After an unpredictable delay, the grating changed speed, after which the subjects had to press a button with the cued hand. In a small fraction of the trials there was no speed change and subjects should not respond.

The dataset allows for a number of different questions, e.g.

- What is the effect of cueueing?

- What is the effect of stimulus onset?

- What is the effect of the ongoing visual stimulation and the maintained attention?

- What is the effect of the response target, i.e. the speed change?

These questions require different analysis strategies on different sections of the data. Hence we will spend some time on segmenting and preparing the data for the different analyses, although we will focus on question 3.

To calculate the time-frequency analysis for the example dataset we will perform the following steps:

- Read the data into Matlab using ft_definetrial and ft_preprocessing

- Compute the power values for each frequency bin and each time bin using the function ft_freqanalysis

- Visualize the results. This can be done by creating time-frequency plots for one ( ft_singleplotTFR ) or several channels ( ft_multiplotTFR ), or by creating a topographic plot for a specified time- and frequency interval ( ft_topoplotTFR ).

In this tutorial four strategies for time-frequency analysis will be demonstrated, numbered 1 to 4.

The first step is to read the data using the function ft_preprocessing , which requires the specification of the trials by ft_definetrial .

Let us start by looking at the sequence of triggers that is present in the data:

Along the horizontal axis is time in the experiment, along the vertical axis is the trigger value. Each dot represents one trigger.

There are in total 14 different triggers present in the data:

You can zoom in in the figure to show the trigger sequence of a few trials.

Looking at the triggers, you can recognize a certain diagonal 'stripe' structure that repeats itself. Fully deciphering these triggers requires more information from the experimenter or from the stimulus presentation script (which is shared along with the data). The Presentation PCL file is an ascii file with the code that controls the stimulation computer and sends out triggers to the MEG system. You can open it in an editor. It contains the following description of the triggers, whose values can be recognized in the MEG dtaa.

You can see that not the only responses themselves are coded, but also whether they are correct. The interesting segments of data are not simply a piece of data around a single trigger, but around a specific sequence. E.g. the trigger sequence [10 11 12 13 14] codes for a complete trial in which a correct response was given with the left hand.

The ft_definetrial function, which you were introduced to in the preprocessing hands-on, has built-in support for the segmentation on the basis of single triggers, not for complex trigger sequences. To support complex trigger sequences, ft_definetrial allows you to provide your own piece of MATLAB code. That piece of code should be written as a function. In FieldTrip we refer to such a function as a 'trial function' or trialfun.

INSTRUCTION: Open the provided trial function

This trialfun_visual_cue function is defined such that it takes the cfg structure as input, and produces a 'trl' matrix as output. Furthermore, it returns the event structure.

The code of this function makes use of the cfg.dataset field and of cfg.trialdef.pre = time before the first trigger cfg.trialdef.post = time after the last trigger

which you will have to specify. At line 47 it starts looping over all cues, and for each checks whether the subsequent trigger sequence corresponds to a correctly executed left- or right-hand trial.

On line 61-63 the most important characteristics of the 'trl' matrix are specified: the begin and endsample, and the offset. These code where you want the data segment to start and end, and which sample of the data segment you want to consider as time t=0, i.e. as the time at which you want to align all trials in the analysis.

On line 65-73 some additional characteristics of each trial are determined, which are all concatenated to the trl matrix on line 76. The first three columns of 'trl' are required, all other columns are optional and can for example be used to perform analysis on subsets or for statistical analyses.

Also provided are the trialfun for selecting a data segment around the response (trialfun_visual_response) and around the stimulus (trialfun_visual_stimulus). Since we are for now interested in the visual response, we will continue with trialfun_visual_stimulus.

You pass the name of your trial function (or a function handle to it) to ft_definetrial :

The ft_definetrial function will do some general stuff and subsequently call your function, passing the cfg along. If you compare the cfg structure before and after the call to ft_definetrial , you see that cfg.trl has been added

If you were to look at the code of ft_definetrial , you would see that by default it uses the (separate) function ft_trialfun_general .

There are some other example trial functions in the fieldtrip/trialfun directory, such as ft_trialfun_example1, which detects the occurence of two subsequent triggers, and ft_trialfun_example2, which detects the onset of muscle activity in an EMG channel. The FieldTrip mechanism of "trialfuns" allows you to segment your data, regardless of the complexity, using straight-forward MATLAB code. More information can be found in the online documentation .

Having defined the segments of interest, we can specify additional preprocessing options in the cfg-structure and call ft_preprocessing to read and baseline-correct the data.

The raw data is represented in a MATLAB structure and you can use standard MATLAB code to access and visualize it:

In the data.trialinfo field you can recognize the additional columns that were added to the 'trl' matrix by trialfun_visual_stimulus. They represent the cued hand (1=left, 2=right) and the response latency, i.e. time between the speed change and the response.

We can have a closer look at the behavioral data, i.e, the response time.

You can now clearly see that the response latencies are quantized at approximately 8 ms, plus or minus one sample. This is a consequence of the processing of the responses in Presentation, which synchronizes with the screen refresh rate of 120 Hz. It means that the behavioral data has not been recorded with the highest possible accuracy (1 ms, since the sampling frequency is 1000 Hz).

We continue processing the data. Using ft_rejectvisual we can perform a quick scan of the overall data quality and exclude noisy trials:

Let us save the cleaned data, to allow resuming the analysis at a later point in time.

Although we are not interested in the ERFs in this tuturial, it is good practice to check at various stages in your analysis whether the expected features in the data are present. Here we know that a strong visual stimulus switches on at t=0, which means that there should be a Visual Evoked Field (VEF).

The figure above shows the VEF. You should note that towards the end, from about 1 second post-stimulus, the ERF traces start to diverge. The very last piece of the data at t=1.3 is really far off from the rest.

QUESTION: Can you explain the data getting more noisy towards the end?

Since the speed change happened at an unpredictable point in time, the subject's response can be anywhere from 0.5 seconds onwards. We did not want to include the resonses in the analysis, hence we only selected (in trialfun_visual_stimulus) the data segments up to the speed change, excluding the response. We can visualize this for a few trials with

We can look at the full distribution of the trial durations using some standard MATLAB code:

You see that the data segments end due to the speed-change anywhere from 0.5 seconds post-stimulus up to 1.3 seconds post-stimulus. Up to 0.5 seconds there are many trials that can be averaged. Towards the end, the number of trials that contributes to the average decreases to one, causing the last section of the average to be very noisy.

The number of trials that went into the average at every sample is also represented in the average timelock structure:

Let us focus on the data again. Having computed the ERF, we can look at its spatial distribution:

For a correct interpretation of the planar gradient topography, you should combine the two planar gradients at each location into a single (absolute) magnitude:

INSTRUCTION: Use the interactive mode of the figure to zoom in on the occipital channels. Can you see the occipital topography of the VEF peak around 100ms?

If you look at the single timecourse (i.e. the average ERF of the occipital channels), you see that the planar magnitude increases towards the end. You also see that the baseline is not zero. This is a consequence of the non-linear operation of taking the planar-gradient magnitude. The non-linear sensitivity of the planar gradient to the noise has consequences for statistical comparisons, for example in a group study: in conditions with fewer trials, you always expect more noise. After taking the magnitute (absolute value) this noise is always positive and does not average out over subjects. So when comparing a condition with on average 50 trials with a condition with 150 trials, you expect the 150-trial condition to be less biassed in each of the subjects, and hence have a smaller magnitude of the planar gradient. A statistical comparison of unbalanced data that has been processed like this is not meaningful, since you already expect the difference to be visible in the baseline of your exeriment.

INSTRUCTION: Explore the activity at the start of the window of interest. What seems to be going on in the latency range from -0.8 to -0.4 seconds?

To speed up the subsequent computations and reduce memory requirements, we will downsample the data from 1000 to 250 Hz. This is not recommended for normal analysis, where you would better wait a bit longer. However, in this tutorial we want to compare a number of analyses without having the time to wait.

We use the ft_freqanalysis function for the time-frequency analysis, which takes a raw data structure as input. It implements various algorithms, which can be specified using cfg.method. Each of the methods has additional configuration options.

INSTRUCTION: look at the help of ft_freqanalysis and see whether you can find the options for the 'wavelet' method.

We will describe how to calculate time frequency representations using Hanning tapers. When choosing for a fixed window length, the frequency resolution is defined according to the length of the time window (delta T). The frequency resolution (delta F in figure 1) is 1/length of time window in sec (delta T in figure 1). Thus a 500 ms time window results in a 2 Hz frequency resolution (1/0.5 sec = 2 Hz), meaning that power can be calculated for 2 Hz, 4 Hz, 6 Hz etc. An integer number of cycles must fit in the time window.

Since this step takes quite some time, you might want to save the data afterwards

Regardless of the method used for calculating time-frequency power, the output format is an identical MATLAB structure with the following elements:

The element TFRhann.powspctrm contains the temporal evolution of the raw power values for each specified frequency. The element TFRhann.dimord describes the three dimensions: channels by frequency by time. Each of the dimensions is additionally described by TFRhann.label, TFRhann.freq and TFRhann.time.

To visualize the data, we will combine the two planar channels. Whereas for ERFs it requires application of Pythagoras' rule, in the case of power it simply consists of adding the power of the two respective planar channels.

This part of the tutorial shows how visualize the results of any type of time-frequency analysis.

To visualize the event-related power changes, a normalization with respect to a baseline interval is desired. EEG and MEG data usually has much larger overall power in the lower frequencies than the higher frequencies. The consequence in a color-coded visual representation would be that the power at low frequencies would dominate the figure.

There are three possibilities for normalizing: # subtracting, for each frequency, the average power in a baseline interval from all power values. This gives, for each frequency, the absolute change in power with respect to the baseline interval. # expressing, for each frequency, the raw power values as the relative increase or decrease with respect to the power in the baseline interval. This means active period/baseline. Note that the relative baseline is expressed as a ratio; i.e. no change is represented by 1. # take the previous relative increase and subtract 1, i.e. express it as relative change. A value of 0.1 means 10% increase, a value of -0.1 means 10% decrease.

Note that by using the options cfg.baseline and cfg.baselinetype when calling plotting functions, baseline correction can be applied to the data. Baseline correction can also be applied directly by calling ft_freqbaseline . See the plotting tutorial for more details.

INSTRUCTION: Explore the TFR in detail, especially over the occipital cortex. Do you see gamma-band activity?

The figures use a relative baseline (cfg.basline='relative'), which means that power is expressed relative to the baseline. A value of 0.5 means that the power has halved, a value of 2 means that the power has doubled.

NOTE: The figures by default use an automatic scaling of the colorbar along the min-max range. The colors in one figure can therefore be different than in the other figure. You can specify cfg.zlim to control the colorbar. Please type 'colorbar'

There are three ways of graphically representing the data: # time-frequency plots of all channels, in a quasi-topographical layout # time-frequency plot of an individual channel, # topographical 2-D map of the power changes in a specified time-frequency interval. Starting from the first ( ft_multiplotTFR ) which shows all data, you can use the interactive feature of the figure to get the second ( ft_singleplotTFR ) and third ( ft_topoplotTFR ). For fine-grained control of the figures, e.g. for publications, you would not use the interactive features of the figure, but rather call ft_topoplotTFR itself.

It is also possible to calculate the TFRs with a sliding time-window that varies with frequency. For low frequencies the oscillations are long in time, which requires a long window. For high frequencies you can use a shorter window. The main advantage of this approach is that the temporal smoothing decreases with higher frequencies; however, this is at the expense of frequency smoothing. We will here show how to perform this analysis with a Hanning window. The approach is very similar to wavelet analysis, but differs in the choise of the tapering function. A Morlet wavelet analysis uses a Gaussian-shaped taper, which in principle is infinitely wide, whereas a Hanning taper has a clearly limited width.

The analysis is best done by first selecting the numbers of cycles per time window, which will be the same for all frequencies. For instance if the number of cycles per window is 7, the time window is 1000 ms for 7 Hz (1/7 x 7 cycles); 700 ms for 10 Hz (1/10 x 7 cycles) and 350 ms for 20 Hz (1/20 x 7 cycles). The frequency can be chosen arbitrarily - however; too fine a frequency resolution is just going to increase the redundancy rather than providing new information.

Below is the cfg for a 7-cycle time window.

Let us look at the relation between length of the time window versus frequency before computing the TFR:

Since this step takes quite some time, you might want to save the data again.

Note the boundary effects for lower frequencies (the even blue time frequency points in the plot): there is no power value calculated for these time frequency points. The power value is assigned to the middle time point in the time window and the time window extends in both directions. For example for 2 Hz the time window has a length of 3.5 sec (1/2 * 7 cycles = 3.5 sec), which means 1.75 seconds in both directions from the centre of the 'tile'. There is simply not enough data to fill that time window and the power will be set to NaN (not-a-number). The higher the frequency, the shorter the time window, the closer you can get to the edges of the data window. It is important to consider these boundary effects in your experimental design and during preprocessing to get all desired time-frequency points for your time window of interest.

An alternative to calculating TFRs with the mtmconvol and frequency-dependent time windows is to use Morlet wavelets. The approach is nearly similar to calculating TFRs with time windows that depend on frequency using a taper with a Gaussian shape, except that the Gausian function is infinitely wide. The commands below illustrate how to do this.

One crucial parameter to set is cfg.gwidth. It determines the width of the wavelets in number of cycles. Making the value smaller will increase the temporal resolution at the expense of frequency resolution and vice versa. The spectral bandwidth at a given frequency F is equal to F/width*2 (so, at 30 Hz and a width of 7, the spectral bandwidth is 30/7*2 = 8.6 Hz) while the wavelet duration is equal to width/F/pi (in this case, 7/30/pi = 0.074s = 74ms).

The parameter cfg.width specifies where the Gausian taper will be truncated (cut of and set to zero). So with cfg.gwidth=3 and cfg.width=5, there are three cycles under the bell-shaped curve from -1 to +1 times the standard-deviation, and the bell-shaped curve is truncated at 5 cycles. Ideally you would set cfg.width to infinite to get a proper Morlet wavelet decomposition, but since the data is not infinitely long, this cannot be achieved. Furthermore, cfg.width puts a hard constraint on the temporal characteristics and you can use it to be sure that a stimulus artefact (or response) does not inadvertedly leak into power estimates at other latencies.

Calculate TFRs using Morlet wavelets:

Again we will visualize the data after combining the planar channels:

Finally we will demonstrate how to use the mtmconvol method with multitapering. Multitapering allows us to fully control the division of the the time-frequency space into 'tiles'. Here we will use a constant-length time window, with an increasing smoothing for the higher frequencies.

Since this step takes quite some time, you might want to save the data afterwards.

The time available for the first analysis of this tutorial dataset has been limited. None of the time-frequency analyses demonstrated above will therefore be fully optimal for this experimental design and for the features present in the data of this subject. However, we do hope that this tutorial demonstrates the choices that can be made in optimizing the analysis.

Optimizing the analysis involves tweaking the parameters to best capture the features of the data. If the data has different features at lower than at higher frequencies, you should not hesitate splitting the analysis over the two ranges. For this particular dataset, the most optimal analysis probably will consist of a wavelet or Hanning-tapered analysis for the lower frequencies (say, up to 30 Hz) and a multi-taper analysis for the higher frequencies.

This tutorial showed how to do time-frequency analysis on a single subject MEG dataset and how to plot the time-frequency representations. There were 4 methods shown for calculating time- frequency representations and three functions for plotting the results.

After having finished this tutorial on time-frequency analysis, you can continue with the beamformer source reconstruction tutorial if you are interested in the source-localization of the power changes or the cluster-based permutation tests on time-frequency data tutorial if you are interested how to do statistics on the time-frequency representations.

Published with MATLAB® R2012b

Analysis of sensor- and source-level connectivity

- Introduction

In this tutorial we will explore different measures of connectivity, using simulated data and using source-level MEG data. You will learn how to compute various connectivity measures and how these measures can be interpreted. Furthermore, a number of interpretational problems will be addressed.

Since this tutorial focusses on connectivity measures in the frequency domain, we expect that you understand the basics of frequency domain analysis, because this is not covered by this tutorial.

Note that this tutorial does not cover all possible pitfalls associated with the analysis of connectivity and the interpretational difficulties. Although the computation of connectivity measures might be easy using FieldTrip, the interpretation of the outcomes of those measures in terms of brain networks and activity remains challenging and should be exercised with caution.

This tutorial contains hands-on material that we use for the MEG/EEG toolkit course and it is complemented by this lecture.

The brain is organized in functional units, which at the smallest level consists of neurons, and at higher levels consists of larger neuronal populations. Functional localization studies consider the brain to be organized in specialized neuronal modules corresponding to specific areas in the brain. These functionally specialized brain areas (e.g., visual cortex area V1, V2, V4, MT, …) have to pass information back and forth along anatomical connections. Identifying these functional connections and determining their functional relevance is the goal of connectivity analysis and can be done using ft_connectivityanalysis and associated functions.

The nomenclature for connectivity analysis can be adopted from graph theory , in which brain areas correspond to nodes or vertices and the connections between the nodes is given by edges. One of the fundamental challenges in the analysis of brain networks from MEG and EEG data lies not only in identifying the “edges”, i.e. the functional connections, but also the “nodes”. The remainder of this tutorial will only explain the methods to characterize the edges, but will not go into details for identifying the nodes. The beamformer tutorial and corticomuscular coherence tutorial provide more pointers to localizing the nodes.

Many measures of connectivity exist, and they can be broadly divided into measures of functional connectivity (denoting statistical dependencies between measured signals, without information about causality/directionality), and measures of effective connectivity, which describe directional interactions. If you are not yet familiar with the range of connectivity measures (i.e., mutual information, coherence, granger causality), you may want to read through some of the papers listed under the method references for connectivity analysis

After the identification of the network nodes and the characterization of the edges between the nodes, it is possible to analyze and describe certain network features in more detail. This network analysis is also not covered in this tutorial, although FieldTrip provides some functionality in this direction (see ft_networkanalysis to get started).

This tutorial consists of three parts:

Simulated data with directed connections : In this part we are going to simulate some data with the help of ft_connectivitysimulation and use these data to compute various connectivity metrics. As a generative model of the data we will use a multivariate autoregressive model. Subsequently, we will estimate the multivariate autoregressive model, the spectral transfer function, and the cross-spectral density matrix using the functions ft_mvaranalysis and ft_freqanalysis . In the next step we will compute and inspect various measures of connectivity with ft_connectivityanalysis and ft_connectivityplot .

Simulated data with common pick-up and different noise levels : In this part we are going to simulate some data consisting of an instantaneous mixture of three ‘sources’, creating a situation of common pick up. We will explore the effect of this common pick up on the consequent estimates of connectivity, and we will investigate the effect of different mixings on these estimates.

Connectivity between MEG virtual channel and EMG : In this part we are going to reconstruct MEG virtual channel data and estimate connectivity between this virtual channel and EMG. The data used for this part are the same as in the corticomuscular coherence tutorial .

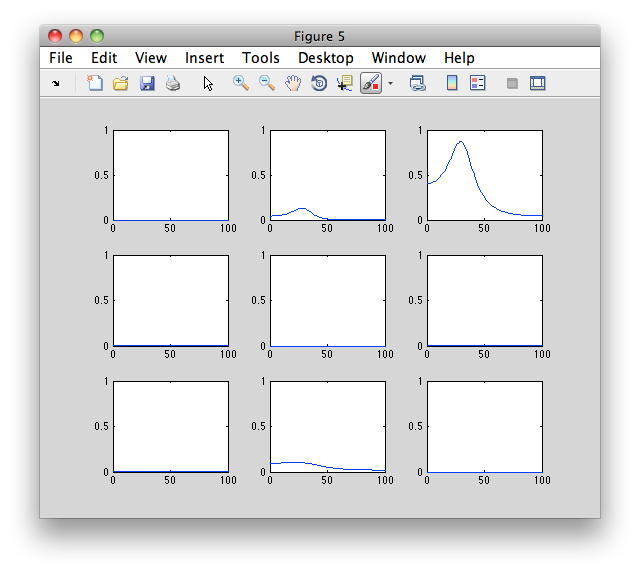

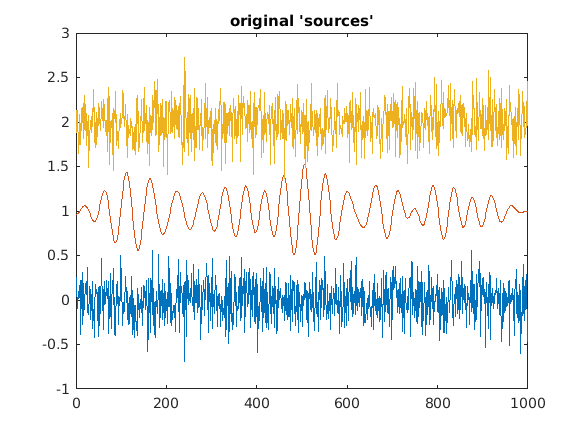

Simulated data with directed connections

We will first simulate some data with a known connectivity structure built in. This way we know what to expect in terms of connectivity. To simulate data we use ft_connectivitysimulation . We will use an order 2 multivariate autoregressive model. The necessary ingredients are a set of NxN coefficient matrices, one matrix for each time lag. These coefficients need to be stored in the cfg.param field. Next to the coefficients we have to specify the NxN covariance matrix of the innovation noise. This matrix needs to be stored in the cfg.noisecov field.

The model we are going to use to simulate the data is as follow

x(t) = 0.8 x(t-1) - 0.5 x(t-2)

y(t) = 0.9 y(t-1) + 0.5 z(t-1) - 0.8*y(t-2)

z(t) = 0.5 z(t-1) + 0.4 x(t-1) - 0.2*z(t-2)

which is done using

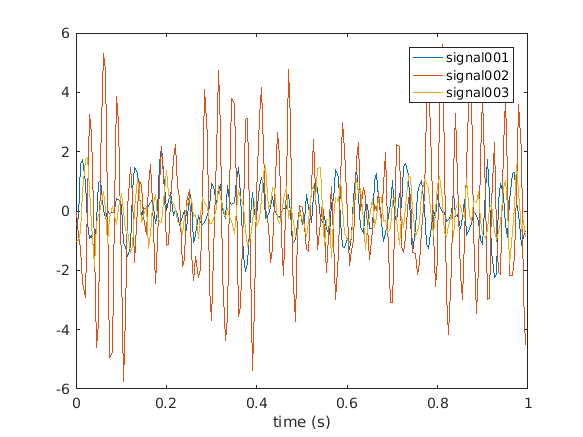

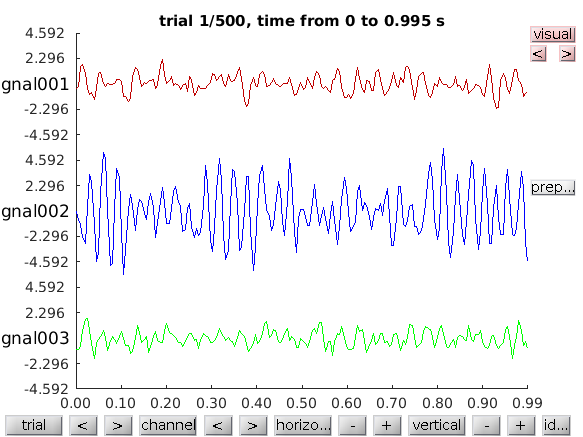

The simulated data consists of 3 channels (cfg.nsignal) in 500 trials (cfg.ntrials). You can easily visualize the data for example in the first trial using

or browse through the complete data using

Computation of the multivariate autoregressive model

To be able to compute spectrally resolved Granger causality , or other frequency-domain directional measures of connectivity, we need to estimate two quantities: the spectral transfer matrix and the covariance of an autoregressive model’s residuals. We fit an autoregressive model to the data using the ft_mvaranalysis function.

For the actual computation of the autoregressive coefficients FieldTrip makes use of an implementation from third party toolboxes. At present ft_mvaranalysis supports the biosig and bsmart toolboxes for these computations.

In this tutorial we will use the bsmart toolbox. The relevant functions have been included in the FieldTrip release in the fieldtrip/external/bsmart directory. Although the exact implementations within the toolboxes differ, their outputs are comparable.

The resulting variable mdata contains a description of the data in terms of a multivariate autoregressive model. For each time-lag up to the model order (cfg.order), a 3x3 matrix of coefficients is outputted. The noisecov-field contains covariance matrix of the model’s residuals.

Here, we know the model order a priori because we simulated the data and we choose a slightly higher model order (five instead of two) to get more interesting results in the output. For real data the appropriate model order for fitting the autoregressive model can vary depending on subject, experimental task, quality and complexity of the data, and model estimation technique that is used. You can estimate the optimal model order for your data by relying on information criteria methods such as the Akaike information criterion or the Bayesian information criterion. Alternatively, you can choose to use a non-parametric approach without having to decide on model order at all (see next section on Non-parametric computation of the cross-spectral density matrix )

Compare the parameters specified for the simulation with the estimated coefficients and discuss.

- Computation of the spectral transfer function

From the autoregressive coefficients it is now possible to compute the spectral transfer matrix, for which we use ft_freqanalysis . There are several ways of computing the spectral transfer function, the parametric and the non-parametric way. We will first illustrate the parametric route:

The resulting mfreq data structure contains the pairwise transfer function between the 3 channels for 101 frequencies.

It is also possible to compute the spectral transfer function using non-parametric spectral factorization of the cross-spectral density matrix. For this, we need a Fourier decomposition of the data. This is done in the following section.

- Non-parametric computation of the cross-spectral density matrix

Some connectivity metrics can be computed from a non-parametric spectral estimate (i.e. after the application of the FFT-algorithm and conjugate multiplication to get cross-spectral densities), such as coherence, phase-locking value and phase slope index. The following part computes the Fourier-representation of the data using ft_freqanalysis .

The resulting freq structure contains the spectral estimate for 3 tapers in each of the 500 trials (hence 1500 estimates), for each of the 3 channels and for 101 frequencies. It is not necessary to compute the cross-spectral density at this stage, because the function used in the next step, ft_connectivityanalysis , contains functionality to compute the cross-spectral density from the Fourier coefficients.

We apply frequency smoothing of 2Hz. The tapsmofrq parameter should already be familiar to you from the multitapers section of the frequency analysis tutorial . How much smoothing is desired will depend on your research question (i.e. frequency band of interest) but also on whether you decide to use the parametric or non-parametric estimation methods for connectivity analysis:

Parametric and non-parametric estimation of Granger causality yield very comparable results, particularly in well-behaved simulated data. The main advantage in calculating Granger causality using the non-parametric technique is that it does not require the determination of the model order for the autoregressive model. When relying on the non-parametric factorization approach more data is required as well as some smoothing for the algorithm to converge to a stable result. Thus the choice of parametric vs. non-paramteric estimation of Granger causality will depend on your data and your certainty of model order. (find this information and more in Bastos and Schoffelen 2016 )

Computation and inspection of the connectivity measures

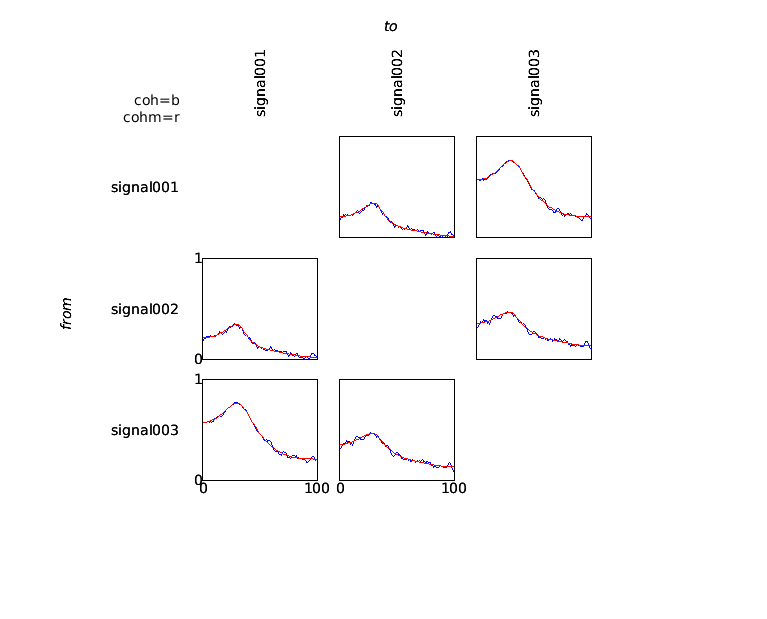

The actual computation of the connectivity metric is done by ft_connectivityanalysis . This function is transparent to the type of input data, i.e. provided the input data allows the requested metric to be computed, the metric will be calculated. Here, we provide an example for the computation and visualization of the coherence coefficient.

Subsequently, the data can be visualized using ft_connectivityplot .

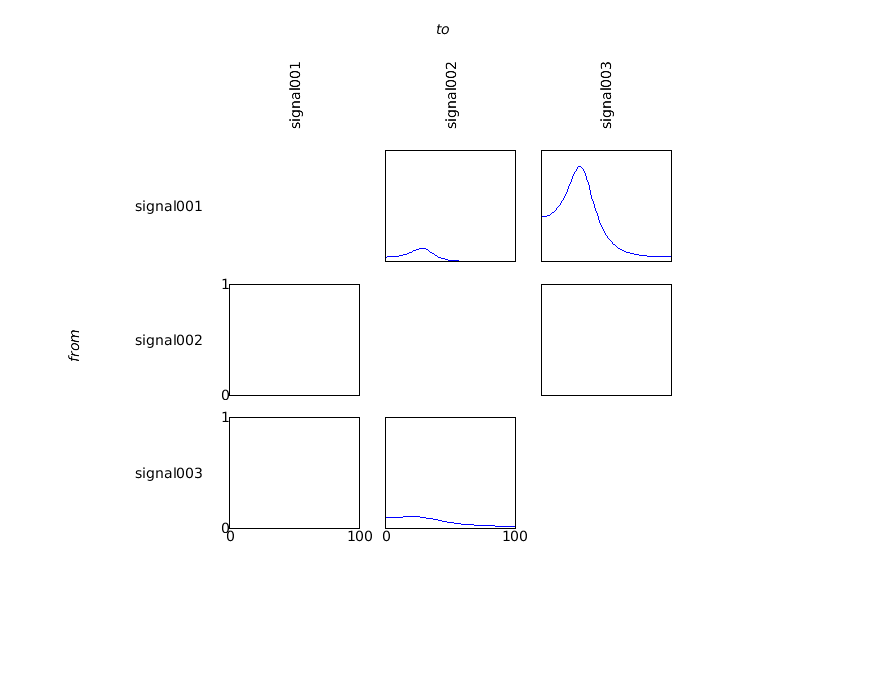

The coherence measure is a symmetric measure, which means that it does not provide information regarding the direction of information flow between any pair of signals. In order to analyze directionality in interactions, measures based on the concept of granger causality can be computed. These measures are based on an estimate of the spectral transfer matrix, which can be computed in a straightforward way from the multivariate autoregressive model fitted to the data.

Compute the granger output using instead the ‘freq’ data structure. Plot them side-by-side using ft_connectivityplot.

Instead of plotting it with ft_connectivityplot , you can use the following low-level MATLAB plotting code which gives a better understanding of the numerical representation of the results.

Discuss the differences between the granger causality spectra, and the coherence spectra.

Compute the following connectivity measures from the mfreq data, and visualize and discuss the results: partial directed coherence (pdc), directed transfer function (dtf), phase slope index (psi). (Note that psi will require specifying cfg.bandwidth. What is the meaning of this parameter?)

Simulated data with common pick-up and different noise levels

When working with electrophysiological data (EEG/MEG/LFP) the signals that are picked up by the individual channels invariably consist of instantaneous mixtures of the underlying source signals. This mixing can severely affect the outcome of connectivity analysis, and thus affects the interpretation. We will demonstrate this by simulating data in two channels, where each of the channels consists of a weighted combination of temporal white noise unique to each of the channels, and a common input of a band-limited signal (filtered between 15 and 25 Hz). We will compute connectivity between these channels, and show that the common input can give rise to spurious estimates of connectivity.

Simulate new data using the following mixing matrix:

and recompute the connectivity measures. Discuss what you see.

Play a bit with the parameters in the mixing matrix and see what is the effect on the estimated connectivity.

Simulate new data where the 2 mixed signals are created from 4 underlying sources, and where two of these sources are common input to both signals, and where these two sources are temporally shifted copies of one another.

Hint: the mixing matrix could look like this:

and the trials could be created like this:

Compute connectivity between the signals and discuss what you observe. In particular, also compute measures of directed interaction.

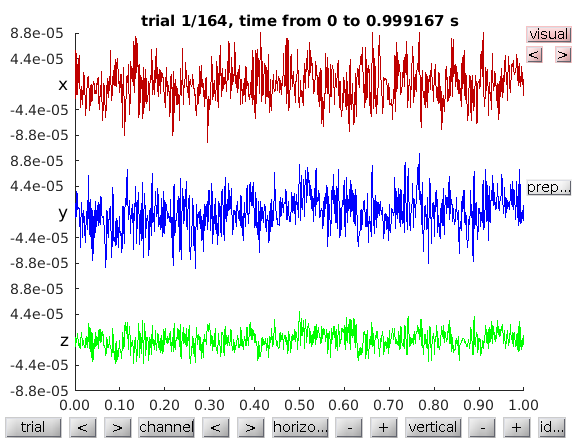

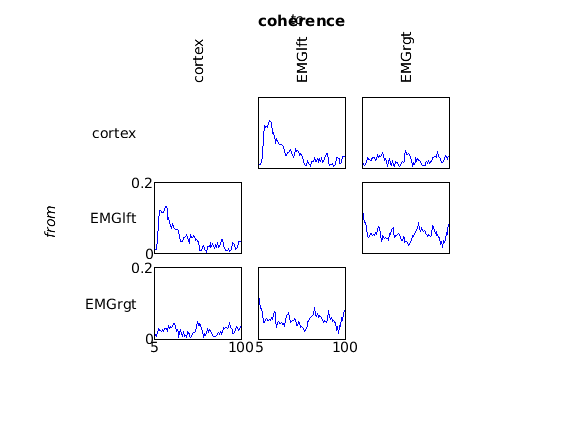

Connectivity between MEG virtual channel and EMG

The previous two examples were using simulated data, either with a clear directed connectivity structure, or with a trivial pick-up of a common source in two channels. We will now continue with connectivity analysis on real MEG data. The dataset is the same as the one used in the Analysis of corticomuscular coherence tutorial .

In short, the dataset consists of combined MEG and EMG recordings while the subject lifted his right hand. The coherence tutorial contains a more elaborate description of the experiment and the dataset and a detailed analysis can be found in the corresponding paper ( Jan-Mathijs Schoffelen, Robert Oostenveld and Pascal Fries. Neuronal Coherence as a Mechanism of Effective Corticospinal Interaction, Science 2005, Vol. 308 no. 5718 pp. 111-113 ). Due to the long distance between the EMG and the MEG, there is no volume conduction and hence no common pick-up. Hence this dataset lends itself well for connectivity analysis. But rather than using one of the MEG channels (as in the original study) and computing connectivity between that one channel and EMG, we will extract the cortical activity using a beamformer virtual channel.

- Compute the spatial filter for the region of interest

We start with determining the motor cortex as the region of interest. At the end of the coherence tutorial it is demonstrated how to make a 3-D reconstruction of the cortico-muscular coherence (CMC) using the DICS algorithm. That source reconstruction serves as starting point for this analysis.

You can download the (source.mat)(https://download.fieldtriptoolbox.org/tutorial/connectivity/source.mat) file with the result from the DICS reconstruction.

We will first determine the position on which the cortico-muscular coherence is the largest.

The cortical position is expressed in individual subject head-coordinates and in centimeter. Relative to the center of the head (in between the ears) the position is 4 cm towards the nose, -3 towards the left side (i.e., 3 cm towards the right!) and 12 cm towards the vertex.

The ft_sourceanalysis methods are usually applied to the whole brain using a regular 3-D grid or using a triangulated cortical sheet. You can also just specify the location of a single or multiple points of interest with cfg.sourcemodel.pos and the LCMV beamformer will simply be performed at the location of interest.

The LCMV beamformer spatial filter for the location of interest will pass the activity at that location with unit-gain, while optimally suppressing all other noise and other source contributions to the MEG data. The LCMV implementation in FieldTrip requires the data covariance matrix to be computed with ft_timelockanalysis .

Rather than doing all the preprocessing again, you can download the preprocessed data and headmodel data.mat and SubjectCMC.hdm .

The source reconstruction contains the estimated power and the source-level time series of the averaged ERF, but here we are not interested in those. The cfg.keepfilter option results in the spatial filter being kept in the output source structure. This filter can be used to reconstruct the single-trial time series as a virtual channel by multiplying it with the original MEG data.

In this case, the headmodel coordinates were defined in cm, this might be different for different headmodels. You can inspect the units of the headmodel with ft_read_headmodel

- Extract the virtual channel time series

The LCMV spatial filter is computed using data in the time domain. However, no time-domain spatial filters (during preprocessing e.g., low-pass or high-pass filters) have been applied before hand. Consequently, the filter will suppress all noise in the data in all frequency bands. The spatial filter derived from the broadband data allows us to compute a broadband source level time series.

If you would know that the subsequent analysis would be limited to a specific frequency range in the data (e.g., everything above 30 Hz), you could first apply a filter using ft_preprocessing (e.g., cfg.hpfilter=yes and cfg.hpfreq=30 ) prior to computing the covariance and the spatial filter.

The sourcedata structure resembles the raw-data output of ft_preprocessing and consequently can be used in any follow-up function. You can for example visualize the single-trial virtual channel time series using ft_databrowser :

Notice that the reconstruction contains three channels, for the x-, the y- and the z-component of the equivalent current dipole source at the location of interest.

Project along the strongest dipole direction

The interpretation of connectivity is facilitated if we can compute it between two plain channels rather than between one channel and a triplet of channels. Therefore we will project the time series along the dipole direction that explains most variance. This projection is equivalent to determining the largest (temporal) eigenvector and can be computationally performed using the singular value decomposition (svd).

Matrix u contains the spatial decomposition, matrix v the temporal and on the diagonal of matrix s you can find the eigenvalues. See help svd for more details.

We now recompute the virtual channel time series, but now only for the dipole direction that has the most power.

Rather than using a sourcemodel in the beamformer that consists of all three (x, y, z) directions, you can also have the beamformer compute the filter for only the optimal source orientation. This is implemented using the cfg.lcmv.fixedori=’yes’ option.

Recompute the spatial filter for the optimal source orientation and using that spatial filter (a 1x151 vector) recompute the time series.

Investigate and describe the difference between the two time series. What is the difference between the two dipole orientations?

Note that one orientation is represented in the SVD matrix “u” and the other is in the source.avg.ori field.

- Combine the virtual channel with the EMG

The raw data structure containing one (virtual) channel can be combined with the two EMG channels from the original preprocessed data.

Compute the connectivity

The resulting combined data structure has three channels: the activity from the cortex, the left EMG and the right EMG. We can now continue with regular channel-level connectivity analysis.

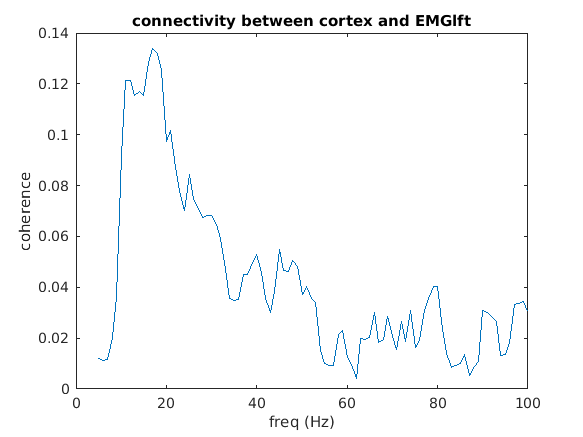

This computes the spectral decomposition and the coherence spectrum between all channel pairs, which can be plotted with

To look in more detail into the numerical representation of the coherence results, you can use

The spectrum reveals coherence peaks at 10 and 20 Hz (remember that the initial DICS localizer was done at beta). Furthermore, there is a broader plateau of coherence in the gamma range from 40-50 Hz.

The spectral decomposition was performed with mutitapering and 5 Hz spectral smoothing (i.e. 5Hz in both directions). Recompute the spectral decomposition and the coherence with a hanning taper. Recompute it with mutitapering and 10 Hz smoothing. Plot the three coherence spectra and look at the differences.

- Exercise 10

Rather than looking at undirected coherence, the virtual channel level data can now also easily be submitted to directed connectivity measures. Compute the spectrally resolved granger connectivity and try to assess whether the directionality is from cortex to EMG or vice versa.

- Exercise 11

Let’s say you wanted to look at cortico-cortical connectivity, e.g., interactions between visual and motor cortex in a particular frequency band. How would you approach this?

- Summary and further reading

This tutorial demonstrates how to compute connectivity measures between two time series. If you want to learn how to make a distributed representation of connectivity throughout the whole brain, you may want to continue with the corticomuscular coherence tutorial .

- How to interpret the sign of the phase slope index?

- In what way can frequency domain data be represented in FieldTrip?

- How can I compute inter-trial coherence?

- What is the difference between coherence and coherency?

Example scripts:

- Conditional Granger causality in the frequency domain

- Effect of SNR on Coherence

- Correlation analysis of fMRI data

- Fourier analysis of neuronal oscillations and synchronization

FieldTrip Made Easy: An Analysis Protocol for Group Analysis of the Auditory Steady State Brain Response in Time, Frequency, and Space

Affiliations.

- 1 Department of Psychology, University of Konstanz, Konstanz, Germany.

- 2 Donders Institute for Brain, Cognition and Behaviour, Radboud University, Nijmegen, Netherlands.

- 3 NatMEG, Department of Clinical Neuroscience, Karolinska Institutet, Stockholm, Sweden.

- PMID: 30356712

- PMCID: PMC6189392

- DOI: 10.3389/fnins.2018.00711

The auditory steady state evoked response (ASSR) is a robust and frequently utilized phenomenon in psychophysiological research. It reflects the auditory cortical response to an amplitude-modulated constant carrier frequency signal. The present report provides a concrete example of a group analysis of the EEG data from 29 healthy human participants, recorded during an ASSR paradigm, using the FieldTrip toolbox. First, we demonstrate sensor-level analysis in the time domain, allowing for a description of the event-related potentials (ERPs), as well as their statistical evaluation. Second, frequency analysis is applied to describe the spectral characteristics of the ASSR, followed by group level statistical analysis in the frequency domain. Third, we show how time- and frequency-domain analysis approaches can be combined in order to describe the temporal and spectral development of the ASSR. Finally, we demonstrate source reconstruction techniques to characterize the primary neural generators of the ASSR. Throughout, we pay special attention to explaining the design of the analysis pipeline for single subjects and for the group level analysis. The pipeline presented here can be adjusted to accommodate other experimental paradigms and may serve as a template for similar analyses.

Keywords: ASSR; EEG; ERP; FieldTrip; beamforming; group analysis.

Navigation Menu

Search code, repositories, users, issues, pull requests..., provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

- Notifications

handson_sensoranalysis.md

Latest commit, file metadata and controls, time-frequency analysis using hanning window, multitapers and wavelets.

{% include markup/skyblue %} This tutorial was written specifically for the PracticalMEEG workshop in Paris in December 2019, and is an adjusted version of the time-frequency analysis tutorial . {% include markup/end %}

Introduction

In this tutorial you can find information about the time-frequency analysis of a single subject's MEG data using a Hanning window, multitapers and wavelets. This tutorial also shows how to visualize the results.

Here, we will work on the Face recognition dataset . This tutorial is a continuation from the raw2erp tutorial .

Oscillatory components contained in the ongoing EEG or MEG signal often show power changes relative to experimental events. These signals are not necessarily phase-locked to the event and will not be represented in event-related fields and potentials ( Tallon-Baudry and Bertrand (1999) ). The goal of this section is to compute and visualize event-related changes by calculating time-frequency representations (TFRs) of power. This will be done using analysis based on Fourier analysis and wavelets. The Fourier analysis will include the application of multitapers ( Mitra and Pesaran (1999) , Percival and Walden (1993) ) which allow a better control of time and frequency smoothing.

Calculating time-frequency representations of power is done using a sliding time window. This can be done according to two principles: either the time window has a fixed length independent of frequency, or the time window decreases in length with increased frequency. For each time window the power is calculated. Prior to calculating the power one or more tapers are multiplied with the data. The aim of the tapers is to reduce spectral leakage and control the frequency smoothing.

{% include image src="/assets/img/tutorial/timefrequencyanalysis/tfrtiles.png" width="600" %}

Figure: Time and frequency smoothing. (a) For a fixed length time window the time and frequency smoothing remains fixed. (b) For time windows that decrease with frequency, the temporal smoothing decreases and the frequency smoothing increases.

If you want to know more about tapers/ window functions you can have a look at this Wikipedia site . Note that Hann window is another name for Hanning window used in this tutorial. There is also a Wikipedia site about multitapers, to take a look at it click here .

To calculate the time-frequency analysis for the example dataset we will perform the following steps:

- Read the data into MATLAB using the same strategy as in the raw2erp tutorial .

- Compute the power values for each frequency bin and each time bin using the function ft_freqanalysis

- Visualize the results. This can be done by creating time-frequency plots for one ( ft_singleplotTFR ) or several channels ( ft_multiplotTFR ), or by creating a topographic plot for a specified time- and frequency interval ( ft_topoplotTFR ).

{% include image src="/assets/img/tutorial/timefrequencyanalysis/tfr_pipelinenew.png" width="200" %}

Figure: Schematic overview of the steps in time-frequency analysis

In this tutorial, procedures of 4 types of time-frequency analysis will be shown. You can see each of them under the titles: Time-frequency analysis I, II ... and so on. If you are interested in a detailed description about how to visualize the results, look at the Visualization part.

The first step is to read the data using the function ft_preprocessing . With the aim to reduce boundary effects occurring at the start and the end of the trials, it is recommended to read larger time intervals than the time period of interest. In this example, the time of interest is from -0.6 s to 1.3 s (t = 0 s defines the time of stimulus); however, for reasons that will become clear later, the script reads the data from -0.8 s to 1.5 s.

Time-frequency analysis I

Hanning taper, fixed window length.

Here, we will describe how to calculate time frequency representations using Hanning tapers. When choosing for a fixed window length procedure the frequency resolution is defined according to the length of the time window (delta T). The frequency resolution (delta f in figure 1) = 1/length of time window in sec (delta T in figure 1). Thus, a 400 ms time window results in a 2.5 Hz frequency resolution (1/0.4 sec= 2.5 Hz) meaning that power can be calculated for frequency bins centered at 2.5 Hz, 5 Hz, 7.5 Hz etc. An integer number of cycles must fit in the time window.

The ft_freqanalysis function requires a 'raw' data structure, which is the output of ft_preprocessing . In the following code section, we duplicate the preprocessing part of the raw2erp tutorial tutorial, with a few important modifications. As mentioned, the epoch length is increased, in order to account for boundary effects. Moreover, we will not apply a bandpassfilter to the data (why not?) and only read in the MEG data for now. The execution of the following chunk of code takes some time. The precomputed data are in the derivatives/sensoranalysis/sub-15 folder, and can be loaded from there:

Once we have the data in memory, we can compute the time-frequency representation, which we do here for each of the conditions separately:

Regardless of the method used for calculating the TFR, the output format is identical. It is a structure with the following fields:

The 'powspctrm' field contains the temporal evolution of the raw power values for each specified channel and frequency bin. The 'freq' and 'time' fields represent the center points of each frequency and time bin in Hz and s. Note that each power value is not a 'point estimate', but always has some temporal and spectral extent.

Visualization

This part of the tutorial shows how to visualize the results of any type of time-frequency analysis.

To visualize the event-related power changes, a normalization with respect to a baseline interval will be performed. There are two possibilities for normalizing:

- Subtracting, for each frequency, the average power in a baseline interval from all other power values. This gives, for each frequency, the absolute change in power with respect to the baseline interval.

- Expressing, for each frequency, the raw power values as the relative increase or decrease with respect to the power in the baseline interval. This means active period/baseline. Note that the relative baseline is expressed as a ratio; i.e. no change is represented by 1.

There are three ways of graphically representing the data: 1) time-frequency plots of all channels, in a quasi-topographical layout, 2) time-frequency plot of an individual channel (or average of several channels), 3) topographical 2-D map of the power changes in a specified time-frequency interval.

To plot the TFRs from all the magnetometer sensors use the function ft_multiplotTFR . Settings can be adjusted in the cfg structure. For example:

{% include image src="/assets/img/workshop/paris2019/freqlow_famous.png" width="650" %}

Figure: Time-frequency representations calculated using ft_freqanalysis. Plotting was done with ft_multiplotTFR)

Note that using the options cfg.baseline and cfg.baselinetype results in baseline correction of the data. Baseline correction can also be applied directly by calling ft_freqbaseline . Moreover, you can combine the various visualization options/functions interactively to explore your data. Currently, this is the default plotting behavior because the configuration option cfg.interactive='yes' is activated unless you explicitly select cfg.interactive='no' before calling ft_multiplotTFR to deactivate it. See also the plotting tutorial for more details.

An interesting effect seems to be present in the TFR of sensor MEG0731. To make a plot of a single channel use the function ft_singleplotTFR .

{% include image src="/assets/img/workshop/paris2019/freqlow_famous_singleplotTFR.png" width="400" %}

Figure: The time-frequency representation of a single sensor obtained using ft_singleplotTFR

If you see artifacts in your figure, see this question .

From the previous figure you can see that there is an increase in power around 5 Hz in the time interval 0.6 to 0.8 s after stimulus onset. To show the topography of this 'theta' power increase you can use the function ft_topoplotTFR .

{% include image src="/assets/img/workshop/paris2019/freqlow_famous_topoplotTFR.png" width="400" %}

Figure: A topographic representation of the time-frequency representations (15 - 20 Hz, 0.9 - 1.3 s post stimulus) obtained using ft_topoplotTFR

{% include markup/skyblue %} Plot the power with respect to a relative baseline (hint: use cfg.zlim=[0 2.0] and use the cfg.baselinetype option)

How are the responses different? Discuss the assumptions behind choosing a relative or absolute baseline {% include markup/end %}

{% include markup/skyblue %} Plot the TFR of sensor MEG1921. How do you account for the increased power at ~100-200 ms (hint: compare it to the ERFs)? {% include markup/end %}

{% include markup/skyblue %} Visualize the TFR of the gradiometers. Use what you have learnt from the raw2erp tutorial to first combine the horizontal and planar gradient channels into a single estimate. {% include markup/end %}

Time-frequency analysis II

Hanning taper, frequency dependent window length.

It is also possible to calculate the TFRs with respect to a time window that varies with frequency. Typically the time window gets shorter with an increase in frequency. The main advantage of this approach is that the temporal smoothing decreases with higher frequencies, leading to increased sensitivity to short-lived effects. However, an increased temporal resolution is at the expense of frequency resolution (why?). We will here show how to perform a frequency-dependent time-window analysis, using a sliding window Hanning taper based approach. The approach is very similar to wavelet analysis. A wavelet analysis performed with a Morlet wavelet mainly differs by applying a Gaussian shaped taper.

The analysis is best done by first selecting the numbers of cycles per time window which will be the same for all frequencies. For instance if the number of cycles per window is 7, the time window is 1000 ms for 7 Hz (1/7 x 7 cycles); 700 ms for 10 Hz (1/10 x 7 cycles) and 350 ms for 20 Hz (1/20 x 7 cycles). The frequency can be chosen arbitrarily - however; too fine a frequency resolution is just going to increase the redundancy rather than providing new information.

Below is the configuration for a 7-cycle time window. The calculation is only done for one sensor (MEG0741) but it can of course be extended to all sensors.

To plot the result use ft_singleplotTFR :

{% include image src="/assets/img/workshop/paris2019/tfrhann7.png" width="400" %}

Figure: A time-frequency representation of channel MEG0741 obtained using ft_singleplotTFR

If you see artifacts in your figure, see this FAQ .

Note the boundary effects, in particular for the lower frequency bins, i.e. the blue (or white) region in the time frequency plane. Within this region, no power values are calculated. The reason for this is that for the corresponding time points, the requested timewindow is not entirely filled with data. For example, for 2 Hz the time window has a length of 3.5 s (7 cycles for 2 cycles/s = 3.5 s), this does not fit in the 2.3 sec window that is preprocessed and therefore there is no estimate of power. For 5 Hz the window has a length of 1.4 s (7 cycles for 5 cycles/s = 1.4 s). We preprocessed data between t = -.8 sec and t = 1.5 sec so the first power value is assigned to t= -0.1 (since -.8 + (0.5 * 1.4) = -0.1). Because of these boundary effects it is important to apply ft_freqanalysis to a larger time window to get all the time frequency points for your time window of interest. This requires some thinking ahead when designing your experiment, because inclusion of data from epochs-of-non-interest might contaminate your data with artifacts or other unwanted data features (e.g., stimulus-offset related rebounds).

If you would like to learn more about plotting of time-frequency representations, please see the visualization section.

{% include markup/skyblue %} Adjust the length of the time-window and thereby degree of smoothing. Use ft_singleplotTFR to show the results. Discuss the consequences of changing these setting.

4 cycles per time window:

5 cycles per time window:

10 cycles per time window:

{% include markup/end %}

Time-frequency analysis III

Multitapers.

Multitapers are typically used in order to achieve better control over the frequency smoothing. More tapers for a given time window will result in stronger smoothing. For frequencies above 30 Hz, smoothing has been shown to be advantageous, increasing sensitivity thanks to reduced variance in the estimates despite reduced effective spectral resolution. Oscillatory gamma activity (30-100 Hz) is quite broad band and thus analysis of this signal component benefits from multitapering, which trades spectral resolution against increased sensitivity. For signals lower than 30 Hz it is recommend to use only a single taper, e.g., a Hanning taper as shown above. The reason for this is the relationship between the bandwidth of a band-limited oscillatory signal component, its nominal frequency, and the lifetime of the oscillatory transient.

Time-frequency analysis based on multitapers can be performed by the function ft_freqanalysis . The function uses a sliding time window for which the power is calculated for a given frequency. Prior to calculating the power by discrete Fourier transforms the data are 'tapered'. Several orthogonal tapers can be used for each time window. The power is calculated for each tapered data segment and then combined.

Here, we demonstrate this functionality, by focussing on frequencies > 30 Hz, using a fixed length time window. The cfg settings are largely similar to the fixed time-window Hanning-tapered analysis demonstrated above, but in addition you need to specify the multitaper smoothing parameter, with an optional specification of the type of taper used. Note that by default the 'mtmconvol' method applies multitapers, unless otherwise specified by the content of cfg.taper. A heuristic for the specification of the tapsmofrq parameter, which in FieldTrip is a number that expresses the half bandwidth of smoothing in Hz., would be to use an integer number of the frequency resolution, determined by the corresponding frequency's specified time window. The relationship between the smoothing parameter ( tapsmofrq ), the time window length ( t_ftimwin ) and the number of tapers used, is given by (see Percival and Walden (1993) ):

K = 2*t_ftimwin*tapsmofrq-1 , where K is required to be larger than 0.

K is the number of tapers applied; the more, the greater the smoothing.

Plot the result

{% include image src="/assets/img/workshop/paris2019/freqhigh_famous.png" width="650" %}

Figure: Time-frequency representations of power calculated using multitapers.

{% include markup/skyblue %} Rather than visualising the TFRs in isolated conditions (after a baseline correction), you can also visualise the difference between 2 conditions, for example in the following way, using ft_math .

Inspect the resulting TFR, using interactive plotting. Note: don't forget to NOT use the cfg.baseline/baselinetype options (why not?).

Time-frequency analysis IV

Morlet wavelets.

An alternative to calculating TFRs with the multitaper method is to use Morlet wavelets. The approach is equivalent to calculating TFRs with time windows that depend on frequency using a taper with a Gaussian shape. The commands below illustrate how to do this. One crucial parameter to set is cfg.width. It determines the width of the wavelets in number of cycles. Making the value smaller will increase the temporal resolution at the expense of frequency resolution and vice versa. The spectral bandwidth at a given frequency F is equal to F/width 2 (so, at 30 Hz and a width of 7, the spectral bandwidth is 30/7 2 = 8.6 Hz) while the wavelet duration is equal to width/F/pi (in this case, 7/30/pi = 0.074s = 74ms) ( Tallon-Baudry and Bertrand (1999) ).

Calculate TFRs using Morlet wavelet

{% include image src="/assets/img/workshop/paris2019/freq_famous.png" width="650" %}

Figure: Time-frequency representations of power calculated using Morlet wavelets.

{% include markup/skyblue %} Adjust cfg.width and see how the TFRs change. {% include markup/end %}

If you would like to learn more about plotting of time-frequency representations, please see visualization .

A Review of Wavelet Analysis and Its Applications: Challenges and Opportunities

Ieee account.

- Change Username/Password

- Update Address

Purchase Details

- Payment Options

- Order History

- View Purchased Documents

Profile Information

- Communications Preferences

- Profession and Education

- Technical Interests

- US & Canada: +1 800 678 4333

- Worldwide: +1 732 981 0060

- Contact & Support

- About IEEE Xplore

- Accessibility

- Terms of Use

- Nondiscrimination Policy

- Privacy & Opting Out of Cookies

A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

I. INTRODUCTION

Ii. high-resolution flow data, iii. coherent vorticity extraction (cve) using orthogonal wavelets, iv correlation between pressure and the incoherent part vorticity, v. conclusions, acknowledgments, author declarations, conflict of interest, data availability, wavelet analysis of high-speed transition and turbulence over a flat surface.

Electronic mail: [email protected] and [email protected]

Electronic mail: [email protected]

Electronic mail: [email protected]

- Split-Screen

- Article contents

- Figures & tables

- Supplementary Data

- Peer Review

- Open the PDF for in another window

- Reprints and Permissions

- Cite Icon Cite

- Search Site

George Khujadze , Dimitris Drikakis , Konstantinos Ritos , Ioannis W. Kokkinakis , S. Michael Spottswood; Wavelet analysis of high-speed transition and turbulence over a flat surface. Physics of Fluids 1 April 2022; 34 (4): 046107. https://doi.org/10.1063/5.0088479

Download citation file:

- Ris (Zotero)

- Reference Manager

This paper presents a study of high speed boundary layers using the wavelet method. We analyze direct numerical simulation data for high-speed, compressible transitional, and turbulent boundary layer flows using orthogonal anisotropic wavelets. The wavelet-based method of extraction of coherent structures is applied to the flow vorticity field, decomposed into coherent and incoherent contributions using thresholding of the wavelet coefficients. We show that the coherent parts of the flow, enstrophy spectra, are close to the statistics of the total flow, and the energy of the incoherent, noise-like background flow is equidistributed. Furthermore, we investigate the distribution of the incoherent vorticity in the transition and turbulent regions and examine the correlation with the near-wall pressure fluctuations. The results of our analysis suggest that the incoherent vorticity part is not a random “noise” and correlates with the actual noise emanating from inside the boundary layer. This could have implications regarding our understanding of the physics of compressible boundary layers and the development of engineering models.

The study is motivated by advancing the understanding of the physics of transitional and turbulent boundary layers (BL) and the relationship of different flow parameters. In particular, the study concerns the high-speed regime (Mach 6), the role of incoherent vorticity, and its relationship with pressure fluctuations. The latter is responsible for the noise generated inside the boundary layer and has implications in the acoustic vibrations and, subsequently, acoustic fatigue. These issues are exaggerated in extreme flow environments, such as supersonic and hypersonic boundary flows.

Several experimental and numerical studies of high-speed turbulent boundary layers have been published. 1–12 The above studies provide data that advance understanding of the flow processes and guide the design of engineering models. Furthermore, direct numerical simulations have shown that the transition process in hypersonic boundary layers is highly random due to higher modes' existence. The random nature of the hypersonic transition process explains its sensitivity to changes in the disturbance environment that can significantly change the transition process. 7 Most of the studies focused on single-mode or “controlled” transition, but more recent studies considered multimode perturbations, which comprise many waves imitating the von Kármán atmospheric turbulence. 8,9,13

Resolving all dynamically active flow scales requires a high-resolution close to the wall. Other mathematical tools complementary to DNS could also provide significant insight into complex flow physics. It is well known that turbulent flows are characterized by a range of spatial and temporal scales, which involves many degrees of freedom interacting nonlinearly. The coherent structures in time and space are observed even at large Reynolds numbers and a random background flow. The computational challenge is that scales increase drastically with Reynolds number. Therefore, detecting and tracking energy-containing eddies to describe turbulent flows with minimum degrees of freedom are essential for studying fluid and acoustics dynamic processes.

Wavelets offer a framework for analyzing turbulent flows based on the ability of wavelet multiresolution analysis to identify and isolate the energetic coherent structures that govern the dynamics of the flow. 14 Wavelets are well-defined and well-localized in space and scale and can be efficiently used for multiscale decomposition. 14–21 The wavelet decomposition of turbulent flows concentrates the most energetic coherent structures in a few wavelet coefficients, while the incoherent background flow is represented with the large majority of the wavelet coefficients that keep a negligibly small intensity.

One of the developed methods is the coherent vortex extraction (CVE) from the turbulent flow, 16 which we present in Sec. III . CVE is related to denoising signals in wavelet space. Its main idea is to decompose the flow into coherent (represented by a few wavelet coefficients) and incoherent parts (noise) using wavelet filtering of the vorticity field. The evolution of the coherent part of the flow is computed deterministically, whereas the influence of the incoherent background flow is modeled statistically. No model is needed for coherent structures definition. Instead, the noise should be modeled more trivial and is assumed to be additive, Gaussian and white. The general properties of CVE were discussed in the literature. 14,15 Wavelets are basis functions localized in both physical and wave-number spaces. For comparison, the classical Fourier transform is based on well-localized functions in wave-number space. Still, it does not provide localization in physical space, leading to the lack of general theory in the latter. The previous studies show that CVE can more efficiently extract the coherent structures than the Fourier filtering. 14–19,21

For wall-bounded flows, the situation becomes more complex because no-slip boundary conditions have to be considered. Indeed, no-slip boundary conditions generate vorticity due to the viscous flow interactions with the walls. In the paper by Khujadze et al., 20 an efficient algorithm to extract coherent vorticity was developed. A locally refined grid was constructed using wavelets for incompressible turbulent boundary layers with mirror boundary conditions. It was shown that less than 1% of wavelet coefficients retain the coherent structures of the flow, while the majority of the coefficients correspond to a structureless, noise-like background flow. Furthermore, scale- and direction-dependent statistics in wavelet space quantify the flow properties at different wall distances.

This study uses wavelets to analyze recently published high-fidelity data from transitional and turbulent BL at Mach 6. 13 We present the coherent and incoherent parts of the flow and enstrophy spectra across the transition and turbulent regions. Furthermore, we discuss the distributions of the incoherent vorticity component and identify a correlation with the near-wall pressure fluctuations. The results of this study prompt further exploration of the relationship between incoherent vorticity and near-wall noise, which could advance understanding of high-speed, near-wall transition, and turbulence and guide the development of engineering models.

The data used in the present study were obtained from the direct numerical simulations (DNSs) of Drikakis et al. 13 They concern a hypersonic flow over a flat plate at Mach 6, subjected to von Kármán atmospheric perturbations at the inlet, with a turbulence intensity of the freestream velocity equal to 1%. A detailed description of the method used in the DNS can be found in the study by Kokkinakis et al. 22 and the flow parameters in the paper by Drikakis et al. 13 A reference length of x l = 2 mm, calculated as the distance from the leading edge of the plate, has been used to non-dimensionalize the data. The Reynolds number is R e x l = 77 791. Similar to previous boundary layer studies in the Mach number range 4–6, 10,12,23–27 the vibrational excitation and dissociation effects of diatomic molecules that could lead to ionization, are neglected. This is further justified as the maximum temperature experienced by the fluid is well below the critical temperature for oxygen dissociation (2500 K).

The DNS solved the full Navier–Stokes equations using a finite volume Godunov-type method in conjunction with the ninth-order Weighted-Essentially Non-Oscillatory (WENO). 22 The computational grid comprised of 4055 × 405 × 605 cells, i.e., 989.2 × 10 6 cells in total. The grid resolution gave Δ x + = 2.25 , Δ y w + = 0.16 , and Δ z + = 0.90 ; thus, we expect that the boundary layer is adequately resolved. Furthermore, the maximum y + value at the edge of the boundary layer was Δ y e + = 1.89 , providing extra confidence in the accuracy of the results. The DNS aimed to capture the smallest possible turbulent scales and their associated acoustic loading near-wall effects. The simulations had captured both transition and fully turbulent flow regions, and further details on the results and flow physics analysis can be found in the reference study of Drikakis et al. 13

In this section, an outline of the CVE method is given. The underlying idea is to perform denoising of vorticity field, ω ( x ) = ∇ × V , in the wavelet coefficient space. We have used Morkovin's hypothesis 28 to assess the applicability of the wavelet method near the wall as it does not account for compressibility effects. We found that the root mean square fluctuations of the density near the plate are small (maximum value around 0.07). Therefore, according to Morkovin's hypothesis—see also Bradshaw 29 and cite Shyy and Krishnamurty 30 —the above level of density fluctuations (i.e., less than 0.1) implies that the structure of turbulence (at least near the wall) is “about the same” as that of incompressible flows. Thus, we have employed the present wavelet method based on the above conclusion. We believe that compressibility and dilatational effects deserve further investigation beyond the scope of this paper. The vorticity field is given on a grid ( x i , y i , z i ) for i , j , k = 0 , N − 1 with N = 2 3 J . J is the corresponding number of octaves, defining the scales from the largest, l max = 2 0 , to the smallest one, l min = 2 1 − J . The details about the wavelets method can be found in the following publications. 14,15,18,21,31,32

The wavelet coefficients threshold is performed with the optimal parameter ε to determine which coefficients belong to the coherent and the incoherent contributions. The latter is assumed to be Gaussian white noise. The threshold parameter ε depends only on the total enstrophy Z = 1 2 ⟨ ω · ω ⟩ xyz and the total number of grid points N as follows: ε = 4 Z ln N . (The choice of ε is based on Donoho's theorem. 33 ) Note that ε has no adjustable parameters. First, using Fast Wavelet Transform (FWT), we compute Ω = ∑ ℓ = 1 3 , ( ω ̃ j , i x , i y , i z μ ) 2 , where ω j , i x , i y , i z μ are wavelet coefficients of vorticity. Then, we reconstruct the coherent vorticity ω c from those wavelet coefficients for which Ω > ε . The incoherent vorticity ω i is obtained from the remaining weak wavelet coefficients. In the first iteration, the thresholding parameter is defined from ε . Subsequently, a new threshold is determined using the incoherent enstrophy computed from the weak wavelet coefficients instead of the total enstrophy. Then, the thresholding is applied once again, and improved estimators of the coherent and incoherent vorticities are obtained. 16 Due to the decomposition's orthogonality, the enstrophy and the threshold can be directly computed in coefficient space using Parseval's relation. At the end of the iterative procedure, we obtain the coherent and incoherent vorticities reconstructed by Inverse Wavelet Transform (IWT) in the physical space. Finally, we obtain ω = ω c + ω i and, by construction, we also have Z = Z c + Z i . The procedure is schematically presented in Fig. 1 . The wavelet method of coherent vorticity extraction proposes a minimal hypothesis: Coherent structures are not noise. Thus, removing the noise from the flow field leads to extraction of coherent structures.

Schematic presentation of the principles of coherent vorticity extraction.

The orthogonal wavelet decomposition requires that the number of computational grid points in each coordinate direction is a power of 2. Therefore, the nonuniform grid in the wall-normal direction was interpolated on the uniform one, and the results are shown in Fig. 2 . The top plot presents the original ω x field, while the bottom one corresponds to the same field, but interpolated on a uniform grid.

Slices of ω x of the original and interpolated data are presented on the top and bottom plots, respectively.

Based on the calculation of the shape factor H , skin friction C f , and Stanton number St on the plate (see Fig. 5 in Ref. 13 ), we split the domain into three regions: the laminar region up to x = 11.5 (dimensionless); the transition region x ∈ 11.5 – 36.2 (dimensionless) corresponding to the sudden rise of C f and St values, and the sudden drop of H ; and the fully turbulent region x ∈ 36.2 – 50.0 .

The entire transitional region was divided into five different domains to study the statistical characteristics of the flow ( Table I ). In the region x ∈ 11.5 – 16.5 , the energy contained in the incoherent part of the vorticity is 0.001 of total energy for a number of wavelet coefficient exceeding 99% of total number of coefficients. A few wavelet coefficients contain almost the entire enstrophy of the flow.