Time Travel with Delta Tables in Databricks?

- Post author: rimmalapudi

- Post category: Apache Spark

- Post last modified: March 27, 2024


What is time travel in a Delta table on Databricks? In day-to-day ETL work, large volumes of data flow into the data lake, and rows are constantly being inserted, updated, and deleted. Time travel is a key feature of Delta Lake on Databricks that lets you query a table as it looked before those changes.

Delta Lake records every change to your data in a transaction log. With that history you can read earlier versions of a table, go back to a previous snapshot of the data, and support auditing, logging, and data tracking.

Table of contents

1. Challenges in data transition

2. What is a Delta table in Databricks

3. Create a Delta table in Databricks

4. Update Delta records and check history

5.1. Using the timestamp

5.2. Using version number

6. Conclusion

- Auditing : Reviewing data changes is critical for compliance and for debugging. A data lake without time travel falls short here, because once a change is made you can’t roll back to the previous state.

- Reproduce experiments & reports : ML engineers try to create many models using some given set of data. When they try to reproduce the model after a period of time, typically the source data has been modified and they struggle to reproduce their experiments.

- Rollbacks : For data transitions that only append, rollbacks can be handled with date-based partitioning. With upserts or accidental changes, rolling data back to the previous state is far more complicated.

Delta’s time travel capabilities in Azure Databricks simplify building data pipelines that face the above challenges. As you write into a Delta table or directory, every operation is automatically versioned and recorded in the transaction log, and you can then read any of those versions.

Now, let us create a Delta table and perform some modifications on the same table and try to play with the Time Travel feature.

In Databricks, you can time travel on a Delta table in two ways:

- Using a timestamp

- Using a version number

Note: By default, all the tables that are created in Databricks are Delta tables.

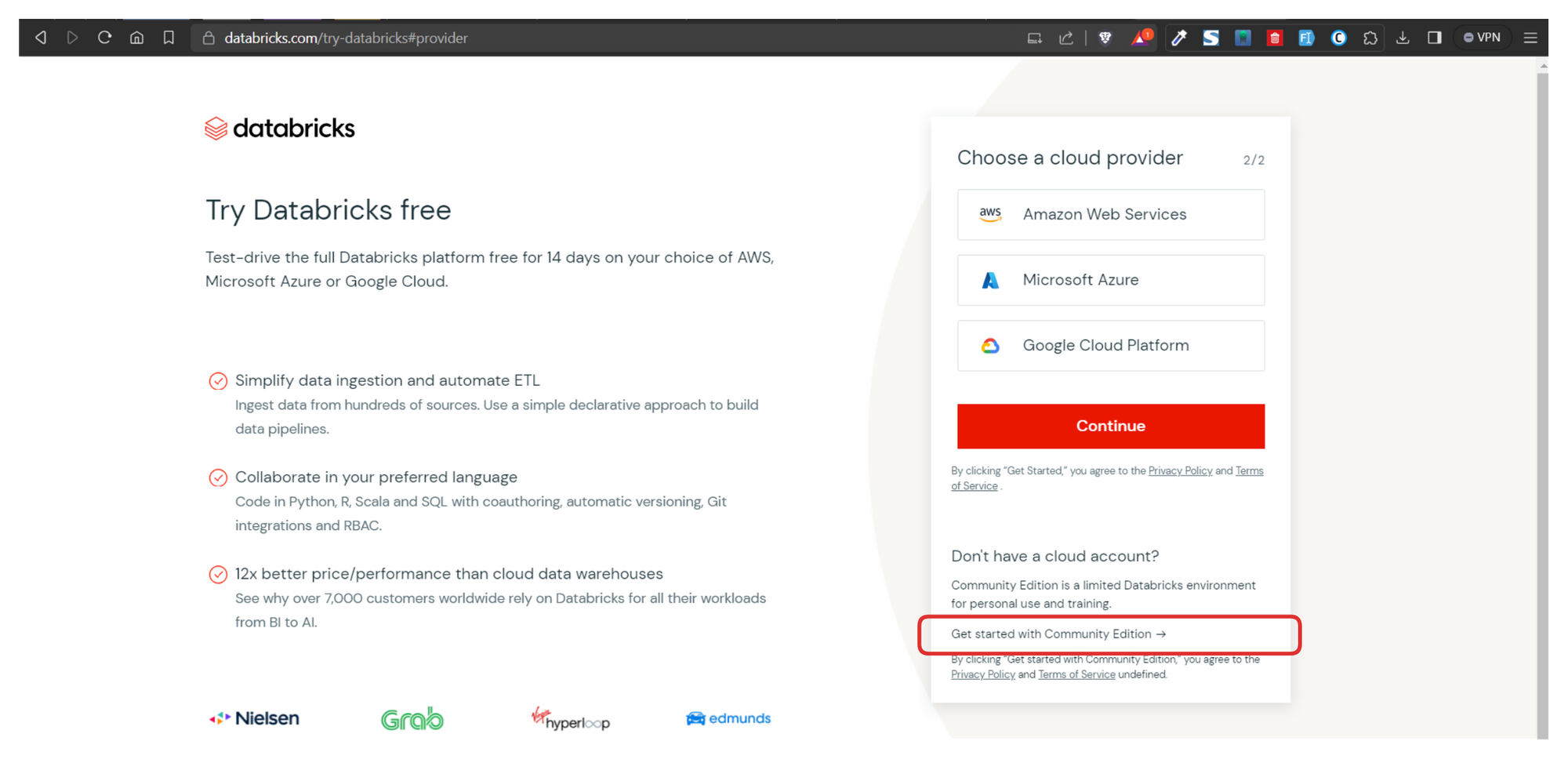

Here, I am using the community Databricks version to achieve this ( https://community.cloud.databricks.com/ ). Create a cluster and attach the cluster to your notebook.

Let’s create the table and insert a few rows into it.

You will get the below output.

Here we have created a student table with some records and, as you can see, it is created as a Delta table by default.

Let’s update the rows with ids 1 and 3 in the student Delta table and delete the row with id 2. Add another cell to run the update and the delete, then check the table history.

You will see something like the below in Databricks.

As you can see from the above screenshot, there are 4 versions in total since the table was created, with the top record being the most recent change.

- version 0: Created the student table at 2022-11-14T12:09:24.000+0000

- version 1: Inserted records into the table at 2022-11-14T12:09:29.000+0000

- version 2: Updated the values of ids 1 and 3 at 2022-11-14T12:09:35.000+0000

- version 3: Deleted the record with id 2 at 2022-11-14T12:09:39.000+0000

Notice that the DESCRIBE HISTORY result shows the version, the timestamp of the transaction, the operation, its parameters, and its metrics. The metrics show the number of rows and files changed.
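To make the idea of "one version per operation" concrete, here is a minimal Python sketch of a toy versioned table. It is only a mental model, not how Delta Lake is implemented: real Delta commits record file-level add and remove actions in its transaction log, while this toy records row-level changes. The class name `ToyDeltaTable` and the student names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDeltaTable:
    """Toy model: every write appends one commit; a snapshot replays commits."""

    commits: list = field(default_factory=list)  # list index == version number

    def commit(self, operation, changes):
        """Record one operation; `changes` maps id -> new value, or None to delete."""
        self.commits.append((operation, changes))
        return len(self.commits) - 1  # version number that was just written

    def snapshot(self, version):
        """Rebuild the table as of `version` by replaying commits 0..version."""
        rows = {}
        for _, changes in self.commits[: version + 1]:
            for row_id, value in changes.items():
                if value is None:
                    rows.pop(row_id, None)  # a delete removes the row
                else:
                    rows[row_id] = value  # an insert or update sets the row
        return rows


student = ToyDeltaTable()
student.commit("CREATE TABLE", {})                      # version 0
student.commit("WRITE", {1: "Ann", 2: "Bob", 3: "Cy"})  # version 1 (made-up rows)
student.commit("UPDATE", {1: "Anna", 3: "Cyrus"})       # version 2
student.commit("DELETE", {2: None})                     # version 3

print(sorted(student.snapshot(1)))  # ids present right after the insert
print(sorted(student.snapshot(3)))  # ids present after the delete
```

Reading an old version never changes the log, which is why earlier snapshots stay available after later updates and deletes.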

5. Query Delta Table in Time Travel

As we already know, Delta tables in Databricks support time travel, which you can use either by timestamp or by version number.

Note : Whichever approach you use, it’s just a SQL SELECT command extended with an AS OF clause ( TIMESTAMP AS OF or VERSION AS OF ).

First, let’s see our initial table, as it was before I ran the update and delete. To get this, let’s use a value from the timestamp column of the history.
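As a rough illustration of the two query shapes, the Python helpers below only build the SQL text. The helper names are hypothetical, and the timestamp literal is an assumed example that would be interpreted in the session time zone. In a Databricks notebook you could pass the result to `spark.sql(...)`.

```python
def version_as_of(table: str, version: int) -> str:
    """Build a time travel query that reads a table at a given version number."""
    return f"SELECT * FROM {table} VERSION AS OF {int(version)}"


def timestamp_as_of(table: str, timestamp: str) -> str:
    """Build a time travel query that reads a table as of a timestamp string."""
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


print(version_as_of("student", 0))
print(timestamp_as_of("student", "2022-11-14 12:09:29"))

# In a notebook cell (assumes the `spark` session that Databricks provides):
# spark.sql(version_as_of("student", 0)).show()
```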

Now let’s see how the data is updated and deleted after each statement.

From the above examples, I hope it is clear how to query the table back in time.
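A timestamp query returns the latest version committed at or before the timestamp you give. The sketch below models that lookup in plain Python using the four commit times from the history listed earlier. The function name `version_at` is hypothetical, and the real engine may also reject timestamps that fall outside the retained history.

```python
from bisect import bisect_right
from datetime import datetime, timezone

# (version, commit time) pairs copied from the table history, in UTC.
HISTORY = [
    (0, datetime(2022, 11, 14, 12, 9, 24, tzinfo=timezone.utc)),
    (1, datetime(2022, 11, 14, 12, 9, 29, tzinfo=timezone.utc)),
    (2, datetime(2022, 11, 14, 12, 9, 35, tzinfo=timezone.utc)),
    (3, datetime(2022, 11, 14, 12, 9, 39, tzinfo=timezone.utc)),
]


def version_at(ts):
    """Return the latest version whose commit time is at or before `ts`."""
    commit_times = [commit_time for _, commit_time in HISTORY]
    idx = bisect_right(commit_times, ts)  # number of commits at or before ts
    if idx == 0:
        raise ValueError("timestamp is earlier than the first commit")
    return HISTORY[idx - 1][0]


# One second after the insert, but before the update: resolves to version 1.
print(version_at(datetime(2022, 11, 14, 12, 9, 30, tzinfo=timezone.utc)))
```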

Every operation on the Delta table is also marked with a version number, and you can use that version to travel back in time as well.

Below I have executed some queries to get the initial version of the table and the versions after the update and the delete. The result is the same as what we got with a timestamp.

Time travel on Delta tables in Databricks improves developer productivity considerably. It helps:

- Data scientists and ML experts manage their experiments better by going back to the source of truth.

- Data engineers simplify their pipelines and roll back bad writes.

- Data analysts produce reports more easily.

- Anyone roll a table back to a previous state.


Databricks Knowledge Base


Compare two versions of a Delta table

Use time travel to compare two versions of a Delta table.

Written by mathan.pillai

Delta Lake supports time travel, which allows you to query an older snapshot of a Delta table.

One common use case is to compare two versions of a Delta table in order to identify what changed.

For more details on time travel, please review the Delta Lake time travel documentation.

Identify all differences

You can use a SQL SELECT query to identify all differences between two versions of a Delta table.

You need to know the name of the table and the version numbers of the snapshots you want to compare.

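For example, if you had a table named “schedule” and you wanted to compare version 2 with the original version, your query would select from version 2 and use EXCEPT ALL to subtract the rows of version 0: SELECT * FROM schedule VERSION AS OF 2 EXCEPT ALL SELECT * FROM schedule VERSION AS OF 0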
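If it helps to see what such a comparison computes, here is a small Python sketch of a multiset difference, which is how SQL EXCEPT ALL treats rows. The two snapshots are made-up sample data, not the real “schedule” table, and `except_all` is a hypothetical helper name.

```python
from collections import Counter


def except_all(left, right):
    """Rows of `left` that are not matched one-for-one by rows of `right`."""
    remaining = Counter(left) - Counter(right)  # keeps only positive counts
    return sorted(remaining.elements())


# Hypothetical snapshots of a schedule table at version 0 and version 2.
v0 = [("mon", "09:00"), ("tue", "10:00")]
v2 = [("mon", "09:30"), ("tue", "10:00"), ("wed", "11:00")]

print(except_all(v2, v0))  # rows that are new or changed in version 2
print(except_all(v0, v2))  # rows from version 0 that no longer exist as-is
```

Running the comparison in both directions gives the full picture: the first call shows what appeared, the second shows what disappeared.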

Identify files added to a specific version

You can use a Scala query to retrieve a list of files that were added to a specific version of the Delta table.

In this example, we are getting a list of all files that were added to version 2 of the Delta table.

00000000000000000002.json, the commit file for version 2 in the table's _delta_log directory, lists the files written by that version.

After reading in the full list, we are excluding files that already existed, so the displayed list only includes files added to version 2.
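The same filtering idea can be sketched in plain Python. This is not the Scala query from the article: it assumes you already have the text of a commit file, which is newline-delimited JSON where each line holds one action, and a list of file paths that existed before. The sample file names and the `added_files` helper are hypothetical.

```python
import json


def added_files(commit_json_lines, existing_files):
    """Return paths from `add` actions that are not in `existing_files`."""
    added = set()
    for line in commit_json_lines.splitlines():
        if not line.strip():
            continue  # skip blank lines
        action = json.loads(line)
        if "add" in action:  # ignore commitInfo, remove, and other actions
            added.add(action["add"]["path"])
    return sorted(added - set(existing_files))


# Made-up commit content shaped like a Delta commit file.
sample_commit = "\n".join([
    json.dumps({"commitInfo": {"operation": "WRITE"}}),
    json.dumps({"add": {"path": "part-00000-a.snappy.parquet", "size": 100}}),
    json.dumps({"add": {"path": "part-00001-b.snappy.parquet", "size": 120}}),
    json.dumps({"remove": {"path": "part-00000-old.snappy.parquet"}}),
])

print(added_files(sample_commit, ["part-00000-a.snappy.parquet"]))
```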

How to Use Databricks Delta Lake with SQL – Full Handbook

Welcome to the Databricks Delta Lake with SQL Handbook! Databricks is a unified analytics platform that brings together data engineering, data science, and business analytics into a collaborative workspace.

Delta Lake, an open-source storage layer that Databricks is built around, provides enhanced reliability, performance, and data quality for big data workloads.

This is a hands-on training guide where you will get a chance to dive into the world of Databricks and learn how to effectively use Delta Lake for managing and analyzing data. It'll provide you with the essential SQL skills to efficiently interact with Delta tables and perform advanced data analytics.

Prerequisites

This handbook is designed for beginner-level SQL users who have some experience with cloud platforms and clusters. Although no prior experience with Databricks is required, it is recommended that you have a basic understanding of the following concepts:

- Databases: Familiarity with the basic structure and functionality of databases will be helpful.

- SQL Queries: Knowledge of SQL syntax and the ability to write basic queries is essential.

- Jupyter Notebooks: Understanding how Jupyter notebooks work and being comfortable with running code cells is recommended.

While this handbook assumes a certain level of familiarity with databases, SQL, and Jupyter notebooks, it will guide you step-by-step through each process, ensuring that you understand and follow along with the material.

As such, no installation is necessary, as all the work will be done on Databricks Delta Notebooks running in the cluster. Everything has already been provisioned, eliminating the need for any setup or configuration.

By the end of this handbook, you will have gained a solid foundation in using SQL with Databricks, enabling you to leverage its powerful capabilities for data analysis and manipulation.

Let's get started!

Table of Contents

Here are the sections of this tutorial:

1. Introduction to Databricks

- What is Databricks?

- Key features and benefits

- Getting started with Databricks Workspace

- Notebook basics and interactive analytics

2. Introduction to Delta

- Understanding Delta Lake

- Advantages of using Delta

- Use cases of Delta in real-world scenarios

- Supported languages and platforms for Delta

3. How to Create and Manage Tables

- Creating tables from various data sources

- SQL Data Definition Language (DDL) commands

- SQL Data Manipulation Language (DML) commands

- Creating tables from a Databricks dataset

- Saving the loaded CSV file to Delta using Python

4. Delta SQL Command Support

- Delta SQL commands for data management

- Performing UPSERT (UPDATE and INSERT) operations

5. Advanced SQL Queries

- Handling data visualization in Delta

- Advanced aggregate queries in Delta

- Counting diamonds by clarity using SQL

- Adding table constraints for data integrity

6. How to Work with DataFrames

- Creating a DataFrame from a Databricks dataset

- Data manipulation and displaying results using DataFrames

7. Version Control and Time Travel in Delta

- Understanding version control and time travel in Delta

- Restoring data to a specific version

- Utilizing autogenerated fields for metadata tracking

8. Delta Table Cloning

- Deep and shallow copying of Delta tables

- Efficiently cloning Delta tables for data exploration and analysis

9. Conclusion

Databricks is a unified analytics platform that combines data engineering, data science, and machine learning into a single collaborative environment. Leveraging Apache Spark, it processes and analyzes vast amounts of data efficiently.

Databricks offers benefits like seamless scalability, real-time collaboration, and simplified workflows, making it a favored choice for data-driven enterprises.

Its versatility suits various use cases: from ETL processes and data preparation to advanced analytics and AI model development. Databricks aids in uncovering insights from structured and unstructured data, empowering businesses to make informed decisions swiftly.

You can see its application in finance for fraud detection, healthcare for predictive analytics, e-commerce for recommendation engines, and so on. Basically, Databricks accelerates data-driven innovation, transforming raw information into actionable intelligence.

To follow along with this tutorial, you should first create a Community Edition account so you can create your clusters.

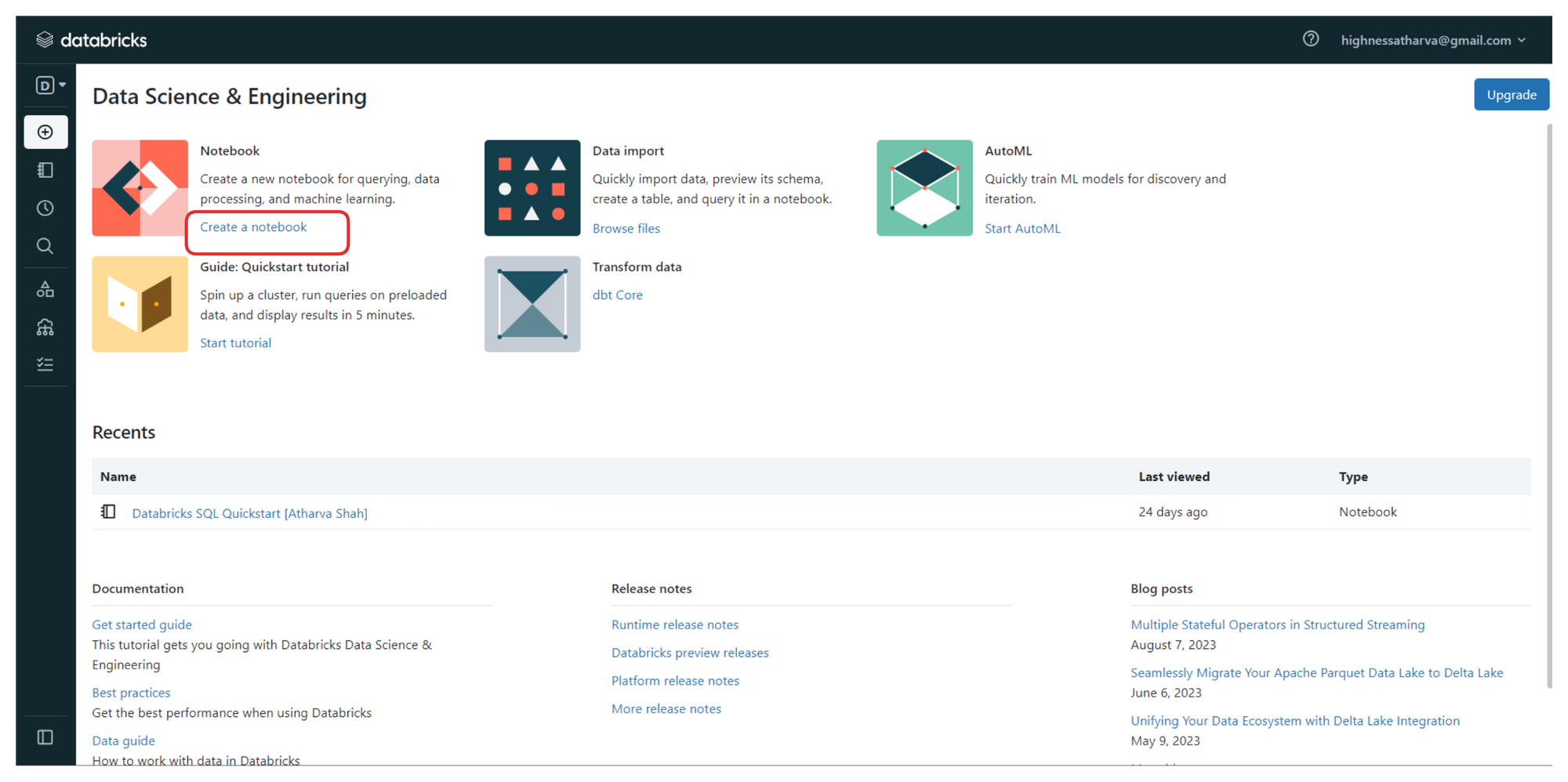

Once you've created your account, head over to the Community Edition login page. Once you have signed in, you'll be greeted with a screen very similar to the one shown below.

From the sidebar on the left, you can create your workspaces, and upload datasets and files that you wish to process.

To follow along, click on the link highlighted in the image above (the one that says "create a notebook"). It will launch a new notebook on the Databricks platform where we'll be writing all the code.

You can also access all your notebooks from the left sidebar or from the "Recents" tab on the home screen once you log in.

You can find all the code, instructions, and steps used in this handbook, with explanations, in one of the public notebooks I have created.

After creating a new notebook, you should create a cluster to run your commands and process the data. Clusters in Databricks are groups of computing resources that drive efficient data processing. They execute work in parallel, speeding up tasks like ETL and analysis.

Clusters offer tailored resource allocation, ensuring optimal performance and scalability. Supporting multiple users and tasks concurrently, clusters encourage collaboration. Leveraging Apache Spark, they enable advanced analytics and machine learning.

Clusters supply the compute for every read and write, while Delta Lake's transaction log provides the ACID guarantees that keep data consistent. Together they support tasks ranging from data preparation to sophisticated analytics and AI model training.

Now that we have the notebook and clusters set up, we can start with the code. But before we do that, here are a few key terms to know. Awareness of these is more about the platform and less about SQL syntax which will be covered below.

Data Ingestion

Data ingestion in Databricks involves loading data from external sources, including third-party tools such as Fivetran. Delta stores table data as Parquet files, a columnar storage format, alongside a transaction log. To load data into Delta, we can use Spark (for example, PySpark in Python) and specify the storage location. Files can also be loaded into a Delta table directly from SQL with the COPY INTO command, after which the table can be queried with regular SQL.

Visualizations created in SQL notebooks in Databricks can be added to custom dashboards for BI/Analytics. These dashboards are lightweight and update as the underlying data is refreshed. This enables users to create insightful and interactive dashboards for data analysis and reporting. You need not create your dashboards from scratch, because popular dashboard templates are available.

Databricks provides data governance through the Unity Catalog, ensuring that users only have access to databases and tables they are permitted to view or edit. This granular control over data access enhances security and data privacy within the system.

Moderators or superusers can access the history of each query run against all databases, along with timestamps and query execution times. This feature helps in understanding query patterns and optimizing database performance based on usage insights.

Optimization

To improve query performance, Databricks offers optimization techniques for Delta tables such as file compaction with OPTIMIZE, Z-order clustering, and Bloom filter indexes, all running on Spark's distributed execution engine. Knowledge of normalization and schema design also contributes to writing efficient SQL queries.

Databricks SQL allows users to set alerts based on comparison operators applied to query results. For example, when a sales count query returns a value below a threshold, an alert can be triggered via Slack, ticketing tools, or emails. Customizable alerts ensure timely notifications for critical data events.

Persona-Based Design

The Databricks Platform is designed to cater to different personas, including Data Science/Analytics and BI/MLOps specialists. Users get segregated interfaces tailored to their roles. However, the Unity Catalog can aggregate all these views, providing a cohesive experience.

SQL Workspace

The SQL Workspace in Databricks provides an interface similar to MySQL Workbench or PgAdmin. Users can run SQL queries on datasets without loading the data repeatedly, as is done in notebooks. This makes SQL-based data analysis more efficient.

Integration with other BI Tools

Databricks integrates well with Tableau and PowerBI. You can import your data points and visualizations seamlessly and get consistent and synced results in the BI tools of your choice. With the click of a button, live queries are generated against the Databricks datasets.

Introduction to Delta

Delta Lake is an open storage format used to save your data in your Lakehouse. Delta provides an abstraction layer on top of files. It's the storage foundation of your Lakehouse.

Why Delta Lake?

Running an ingestion pipeline on Cloud Storage can be very challenging. Data teams typically face the following challenges:

- Hard to append data (Adding newly arrived data leads to incorrect reads).

- Modification of existing data is difficult (GDPR/CCPA requires making fine-grained changes to the existing data lake).

- Jobs failing mid-way (Half of the data appears in the data lake, the rest may be missing).

- Data quality issues (It’s a constant headache to ensure that all the data is correct and high quality).

- Real-time operations (Mixing streaming and batch leads to inconsistency).

- Costly to keep historical versions of the data (Regulated environments require reproducibility, auditing, and governance).

- Difficult to handle large metadata (For large data lakes, the metadata itself becomes difficult to manage).

- “Too many files” problems (Data lakes are not great at handling millions of small files).

- Hard to get great performance (Partitioning the data for performance is error-prone and difficult to change).

These challenges have a real impact on team efficiency and productivity, spending unnecessary time fixing low-level, technical issues instead of focusing on high-level, business implementation.

Because Delta Lake solves all the low-level technical challenges of saving petabytes of data in your lakehouse, it lets you focus on implementing a simple data pipeline while providing blazing-fast query answers for your BI and analytics reports.

In addition, Delta Lake is a fully open source project under the Linux Foundation and is adopted by most of the data players. You know you own your data and won't have vendor lock-in.

Features and Capabilities

You can think about Delta as a file format that your engine can leverage to bring the following capabilities out of the box:

- ACID transactions

- Support for DELETE/UPDATE/MERGE

- Unify batch & streaming

- Time Travel

- Zero-copy clone

- Generated partitions

- CDF - Change Data Feed (Databricks Runtime)

- Blazing-fast queries

This hands-on quickstart guide is going to focus on:

- Loading Databases and Tabular Data from a variety of sources

- Writing DDL, DML, and DTL queries on these datasets

- Visualizing Datasets to get conclusive results

- Time travel and Restoring database

- Performance Optimization

How to Create and Manage Tables

Okay, time to code! If you still have the notebook that we created earlier along with the clusters open, you can start by following along with the code below. Don't worry, explanations for every step will follow.

Select the dropdown next to the notebook title and ensure SQL is selected since this handbook is all about Delta Lakes with SQL.

How to Create Tables from a Databricks Dataset

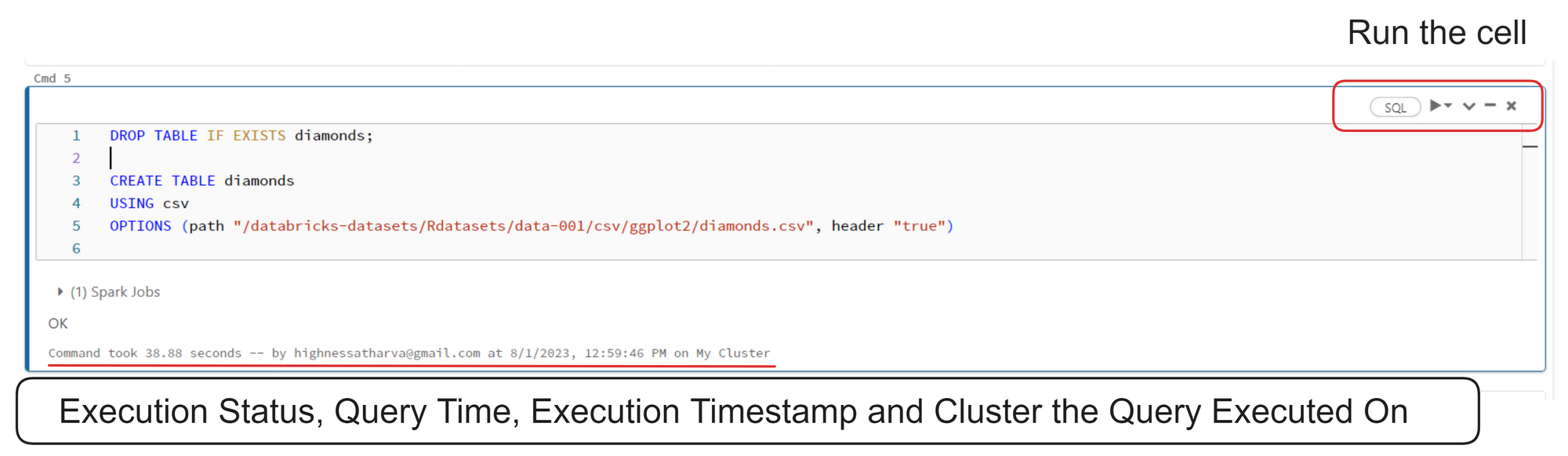

Databricks notebooks are very much like Jupyter Notebooks. You have to insert your code into cells and run them one by one or together. All the output is shown cell by cell, progressively.

The code in the image above runs two SQL statements, DROP TABLE IF EXISTS diamonds followed by CREATE TABLE diamonds , to create a table named diamonds in a database. The table is based on data from a CSV file located at the specified path.

If a table with the same name already exists, the DROP TABLE IF EXISTS diamonds statement ensures it is deleted before creating a new one. The table will have the same schema as the CSV file, with the first row assumed to be the header containing column names ("header 'true'").

Here's a command that returns all the records from the diamonds table: SELECT * FROM diamonds

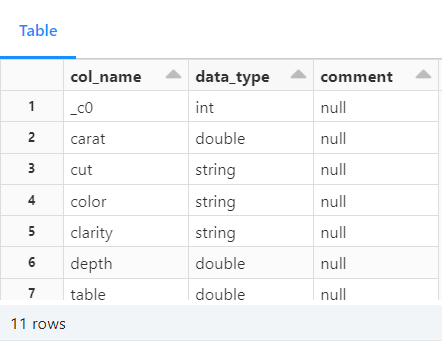

Another useful command is DESCRIBE diamonds . In SQL, the DESCRIBE statement is used to retrieve metadata information about a table's structure. The specific syntax for the DESCRIBE statement can vary depending on the database system being used.

However, its primary purpose is to provide details about the columns in a table, such as their names, data types, constraints, and other properties.

The best part about using the Databricks platform is that it allows you to mix Python, SQL, Scala, and R in the same notebook.

You can switch languages in any cell by using magic commands such as %python, %sql, %scala, and %r. You can find a full list of magic commands at the end of this handbook.

Data is read from a CSV file located at /databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv into a Spark DataFrame named diamonds . The first row of the CSV file is treated as the header, and Spark infers the schema for the DataFrame based on the data.

The DataFrame diamonds is then written out in Delta Lake format. If a table already exists at the specified location ( /delta/diamonds ), it will be overwritten. If it does not exist, a new table will be created.
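A minimal PySpark sketch of the two steps just described might look like the following. It is wrapped in a function that takes the `spark` session as a parameter, so the function name and its arguments are assumptions for illustration. The paths are the ones named in the text.

```python
CSV_PATH = "/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv"
DELTA_PATH = "/delta/diamonds"


def save_diamonds_as_delta(spark, csv_path=CSV_PATH, delta_path=DELTA_PATH):
    """Read the diamonds CSV into a DataFrame and save it in Delta format."""
    # Treat the first row as the header and let Spark infer column types.
    diamonds = spark.read.csv(csv_path, header=True, inferSchema=True)
    # "overwrite" replaces any table that already exists at delta_path.
    diamonds.write.format("delta").mode("overwrite").save(delta_path)
    return diamonds


# In a Databricks notebook cell, where `spark` is already defined:
# diamonds = save_diamonds_as_delta(spark)
```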

A DROP TABLE IF EXISTS diamonds statement followed by CREATE TABLE diamonds USING DELTA LOCATION '/delta/diamonds/' then drops any existing table named diamonds and creates a new Delta Lake table over the data stored in Delta format at the /delta/diamonds/ location.

You can run a SELECT statement to ensure that the table appears as expected.

Delta SQL Command Support

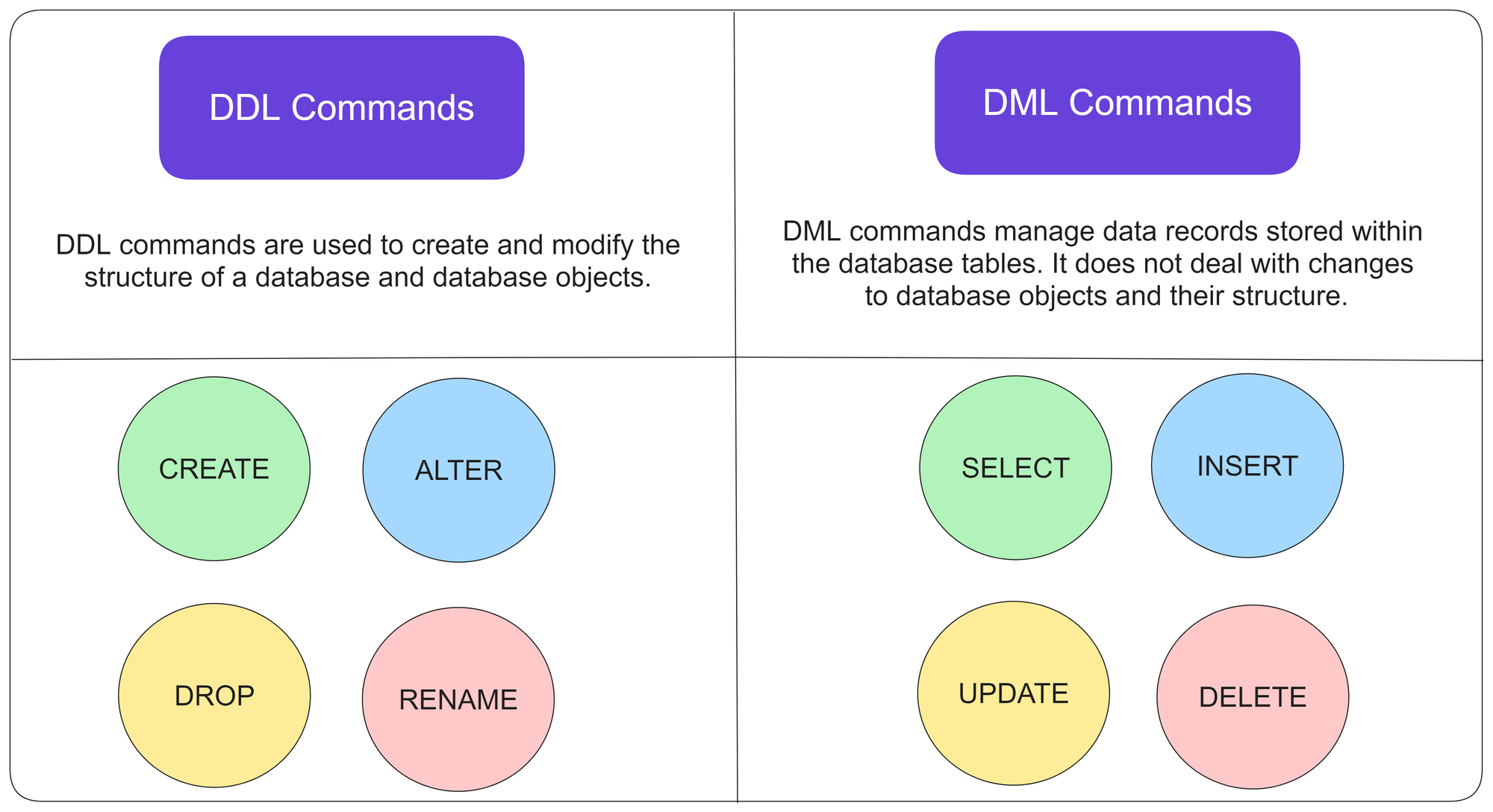

In the world of databases, there are two fundamental types of commands: Data Manipulation Language (DML) and Data Definition Language (DDL). These commands play a crucial role in managing and organizing data within a database. In this article, we will explore what DML and DDL commands are, their key differences, and provide examples of how they are used.

Data Manipulation Language (DML)

DML is used to manipulate or modify data stored in a database. These commands allow users to insert, retrieve, update, and delete data from database tables. Let's take a closer look at some commonly used DML commands:

SELECT : The SELECT command is used to retrieve data from one or more tables in a database. It allows you to specify the columns and rows you want to extract by using conditions and filters. For example, SELECT * FROM Customers retrieves all the records from the Customers table.

INSERT : The INSERT command adds new data into a table. It allows you to specify the value for each column or select values from another table. For example, INSERT INTO Customers (Name, Email) VALUES ('John Doe', 'john@example.com') adds a new customer record to the Customers table.

UPDATE : The UPDATE command is used to modify existing data in a table. It allows you to change the values of specific columns based on certain conditions. For example, UPDATE Customers SET Email = 'john.doe@example.com' WHERE ID = 1 updates the email address of the customer with ID of 1.

DELETE : The DELETE command is used to remove data from a table. It allows you to delete specific rows based on certain conditions. For example, DELETE FROM Customers WHERE ID = 1 deletes the customer record with ID of 1 from the Customers table.
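To try these four commands without a cluster, here is a small sketch that runs them against an in-memory SQLite database from Python. SQLite is only a stand-in here (an assumption for portability). The statements themselves are standard SQL, and the customer values are placeholders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Customers (ID INTEGER, Name TEXT, Email TEXT)")

# INSERT adds a row, UPDATE changes it, SELECT reads it back.
conn.execute(
    "INSERT INTO Customers (ID, Name, Email) "
    "VALUES (1, 'John Doe', 'john@example.com')"
)
conn.execute("UPDATE Customers SET Email = 'john.doe@example.com' WHERE ID = 1")
after_update = conn.execute("SELECT * FROM Customers").fetchall()

# DELETE removes the row, so the next SELECT returns an empty result set.
conn.execute("DELETE FROM Customers WHERE ID = 1")
after_delete = conn.execute("SELECT * FROM Customers").fetchall()

print(after_update)
print(after_delete)
```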

Data Definition Language (DDL) Commands

DDL commands are used to define the structure and organization of a database. These commands allow users to create, modify, and delete database objects such as tables, indexes, and constraints.

Let's explore some commonly used DDL commands:

CREATE : Creates a new database object, such as a table or an index. It allows you to define the columns, data types, and constraints for the object. For example, CREATE TABLE Customers (ID INT, Name VARCHAR(50), Email VARCHAR(100)) creates a new table named Customers with three columns.

ALTER : Modifies the structure of an existing database object. It allows you to add, modify, or delete columns, constraints, or indexes. For example, ALTER TABLE Customers ADD COLUMN Phone VARCHAR(20) adds a new column named Phone to the Customers table.

DROP : Deletes an existing database object. It permanently removes the object and its associated data from the database. For example, DROP TABLE Customers deletes the Customers table from the database.

TRUNCATE : The TRUNCATE command is used to remove all the data from a table, while keeping the table structure intact. It is faster than the DELETE command when you want to remove all records from a table. For example, TRUNCATE TABLE Customers removes all records from the Customers table.

Delta Lake supports standard DML including UPDATE , DELETE and MERGE INTO , providing developers with more control to manage their big datasets.

Here's an example that uses the INSERT , UPDATE , and SELECT commands:

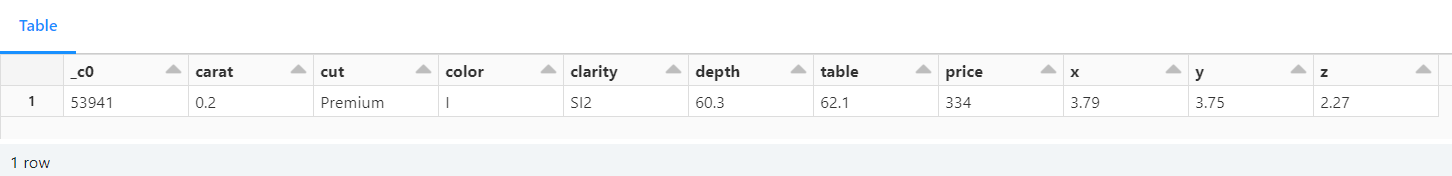

In the example above, an initial row is inserted into the diamonds table with specific values for each column.

Then the carat value for the row with _c0 equal to 53941 is updated to 0.20.

The final SELECT statement retrieves the row with _c0 equal to 53941, showing its current state after the INSERT and UPDATE operations. This confirms that the record was inserted and then updated.

A DELETE command paired with a WHERE clause then removes the row from the table, and the subsequent SELECT query validates this by returning an empty result set.

UPSERT Operation

The "upsert" operation updates a record if it exists, and inserts it if it doesn't.

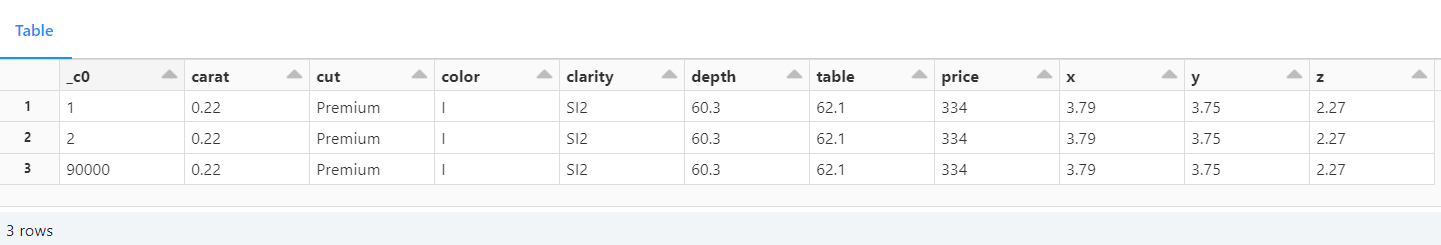

In this scenario, we have created a table named diamond__mini to test upsert (that is, insert or update) operations into the diamonds table.

diamond__mini is a subset of the diamonds table, containing only 3 records. Two of these rows (with _c0 values 1 and 2) already exist in the diamonds table, and one row (with _c0 value 90000) does not exist.

The code first drops and recreates the diamond__mini table with a schema that matches the diamonds table.

It then clears the diamond__mini table by deleting all existing records, so that the upsert test starts from a clean slate.

Next, it runs three INSERT statements against the diamond__mini table, adding three records with different _c0 values, including one with _c0 = 90000 .

Lastly, it selects all records from the diamond__mini table so we can check the source rows before the upsert.

Since the _c0 values 1 and 2 already exist in the diamonds table, the corresponding rows in diamond__mini will be considered as updates for the existing rows.

On the other hand, the row with _c0 = 90000 is new and does not exist in the diamonds table, so it will be treated as an insert.

Running DESCRIBE on diamond__mini shows the metadata of the new table.

The upsert itself is done with a MERGE statement.

In this example, a MERGE operation is performed between two tables: diamonds (target table) and diamond__mini (source table). The MERGE statement compares the records in both tables based on the common _c0 column.

Here's a concise explanation:

- The MERGE statement matches records with the same _c0 value in both tables ( diamonds and diamond__mini ).

- When a match is found (based on _c0 ), it performs an UPDATE on the target table ( diamonds ) using the values from the source table ( diamond__mini ). This is done for all columns using UPDATE SET * .

- If no match is found for a record from the source table ( diamond__mini ), it performs an INSERT into the target table ( diamonds ) using the values from the source table for all columns (using INSERT * ).

- After the MERGE operation, a SELECT statement retrieves the records from the target table ( diamonds ) with _c0 values 1, 2, and 90000 to observe the changes made during the merge.

The MERGE statement is used to synchronize data between the diamonds and diamond__mini tables based on their common _c0 column, updating existing records and inserting new ones.
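The matched and not-matched branches can be modeled in a few lines of plain Python. This is a sketch of the semantics only, not how Delta executes a MERGE. The `merge` function and the carat values are hypothetical, while the `_c0` keys 1, 2, and 90000 follow the scenario above.

```python
def merge(target, source, key="_c0"):
    """Upsert `source` rows into `target` rows, matching on `key`."""
    by_key = {row[key]: dict(row) for row in target}
    updated = inserted = 0
    for row in source:
        if row[key] in by_key:
            updated += 1   # WHEN MATCHED THEN UPDATE SET *
        else:
            inserted += 1  # WHEN NOT MATCHED THEN INSERT *
        by_key[row[key]] = dict(row)  # either way, the source row wins
    return list(by_key.values()), updated, inserted


# Made-up rows: keys 1 and 2 exist in the target, 90000 does not.
diamonds = [{"_c0": 1, "carat": 0.23}, {"_c0": 2, "carat": 0.21}, {"_c0": 3, "carat": 0.29}]
diamond__mini = [{"_c0": 1, "carat": 0.30}, {"_c0": 2, "carat": 0.31}, {"_c0": 90000, "carat": 0.50}]

merged, updated, inserted = merge(diamonds, diamond__mini)
print(updated, inserted)
print(merged)
```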

Advanced SQL Queries

Data Visualization in Delta

On the Databricks platform, you can use SQL queries to explore and visualize data in Delta tables without complex programming. Here are some ways to do that:

- Basic SELECT Queries: Retrieves data from your Delta tables. By selecting specific columns or applying filters with WHERE clauses, you can quickly get an overview of the data's characteristics.

- Aggregate Functions: SQL provides a variety of aggregate functions like COUNT , SUM , AVG , MIN , and MAX . By using these functions, you can summarize and visualize data at a higher level. You perform operations such as counting the number of records, calculating the average values, or finding the maximum and minimum values.

- Grouping and Aggregating Data: The GROUP BY clause in SQL allows you to group data based on specific columns, and then apply aggregate functions to each group. This enables generation of meaningful insights by analyzing data on a category-wise basis.

- Window Functions: SQL window functions, like ROW_NUMBER , RANK , and DENSE_RANK , are valuable for partitioning data and calculating rankings or running totals. These functions enable analyzing data in a more granular way and help discover patterns.

- Joining Tables: SQL JOIN operations combine data from multiple Delta tables. Joins make it possible to merge related data, perform cross-table analysis, and build more advanced visualizations.

- Subqueries and CTEs: SQL subqueries and Common Table Expressions (CTEs) allow you to break down complex problems into manageable parts. These techniques can simplify analysis and make SQL queries more organized and maintainable.

- Window Aggregates: SQL window aggregates, such as SUM , AVG , and ROW_NUMBER with the OVER clause, enable you to perform calculations on specific windows or ranges of data. This is useful for analyzing trends over time or within specific subsets of your data.

- CASE Statements: CASE statements in SQL help you create conditional expressions, allowing you to categorize or group data based on certain conditions. This can aid in creating custom labels or grouping data into different categories for visualization purposes.

The platform's powerful SQL capabilities empower data analysts and developers to extract meaningful insights from their Delta Lake data, all without the need for additional programming languages or tools.

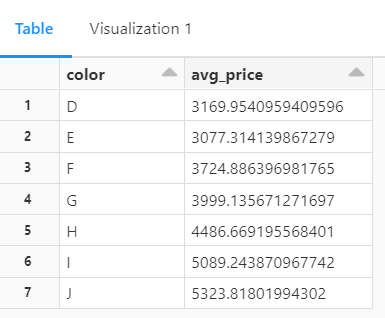

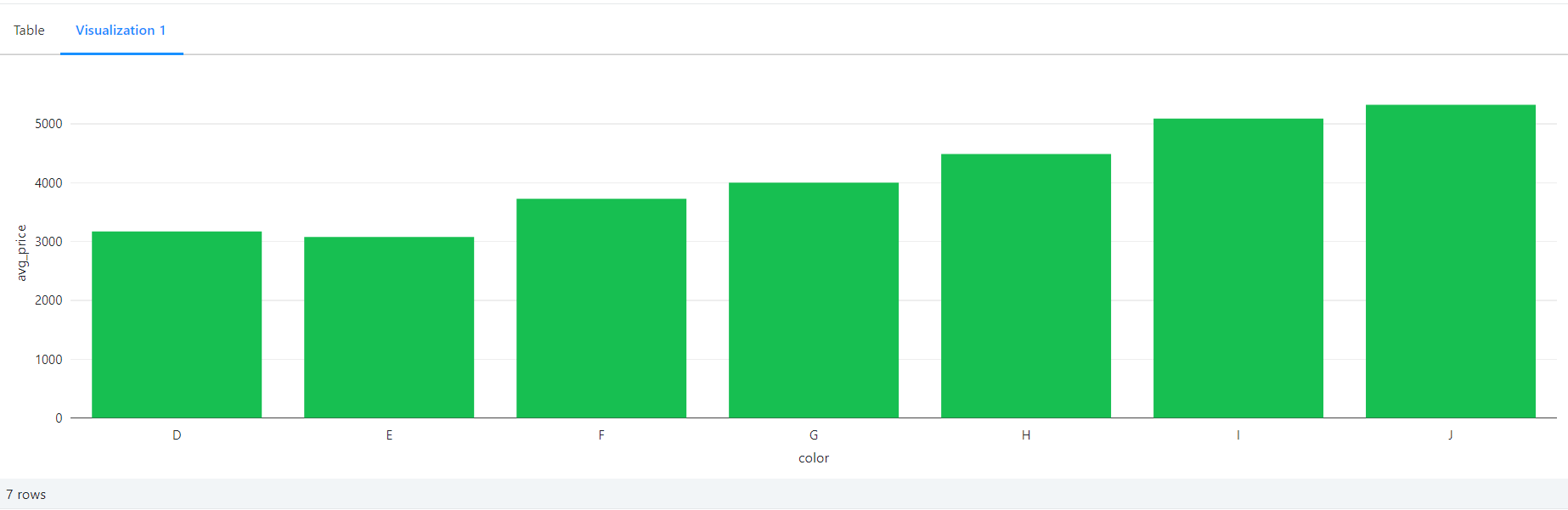

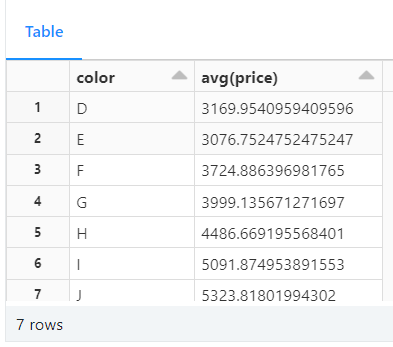

The SQL query above retrieves the average price of diamonds based on their colors.

Let's break down the code:

SELECT color, avg(price) AS avg_price specifies the columns that will be selected in the result set. It selects the color column and calculates the average price using the avg() function. The calculated average is aliased as avg_price for easier reference in the result set.

The FROM diamonds command specifies the table from which data will be retrieved. In this case, the table is named diamonds .

GROUP BY color groups the data by the color column. The result set will contain one row for each unique color, and the average price will be calculated for each group separately.

ORDER BY color arranges the result set in ascending order based on the color column. The output will be sorted alphabetically by color.
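The clauses described above can be tried end to end with a tiny sample. The sketch below runs the same query shape from Python against an in-memory SQLite table, which is only a stand-in for the Delta table. The five rows are made-up prices, not the real dataset.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE diamonds (color TEXT, price REAL)")
conn.executemany(
    "INSERT INTO diamonds VALUES (?, ?)",
    [("E", 300.0), ("E", 500.0), ("D", 400.0), ("J", 350.0), ("J", 550.0)],
)

# One output row per color, sorted alphabetically by color.
rows = conn.execute(
    """
    SELECT color, avg(price) AS avg_price
    FROM diamonds
    GROUP BY color
    ORDER BY color
    """
).fetchall()

print(rows)
```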

Count of Diamonds by Clarity

This query calculates the count of diamonds for each clarity level and presents the results in descending order. It selects the clarity column and uses the COUNT() function to count the number of occurrences for each clarity value.

The result set is grouped by clarity and sorted in descending order based on the count of diamonds.

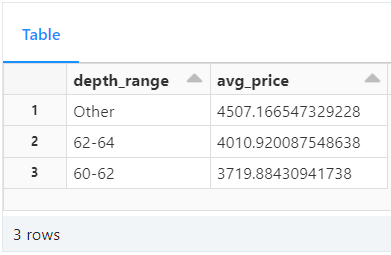

Average Price by Depth Range

Here, we are calculating the average price of diamonds grouped into depth ranges. It uses a CASE statement to categorize the diamonds into three depth ranges: '60-62' for depths between 60 and 62, '62-64' for depths between 62 and 64, and 'Other' for all other depth values.

The AVG() function is then used to calculate the average price for each depth range. The result set is grouped by the depth_range column and ordered in descending order based on the average price.
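Here is a hedged sketch of the bucketing described above, again using Python with an in-memory SQLite table as a stand-in and made-up rows. One detail worth noticing: BETWEEN is inclusive and the first matching WHEN wins, so a depth of exactly 62 lands in the '60-62' bucket.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE diamonds (depth REAL, price REAL)")
conn.executemany(
    "INSERT INTO diamonds VALUES (?, ?)",
    [(61.0, 300.0), (61.5, 500.0), (62.0, 400.0),
     (63.0, 1000.0), (65.0, 200.0), (58.0, 100.0)],
)

rows = conn.execute(
    """
    SELECT CASE
             WHEN depth BETWEEN 60 AND 62 THEN '60-62'
             WHEN depth BETWEEN 62 AND 64 THEN '62-64'
             ELSE 'Other'
           END AS depth_range,
           avg(price) AS avg_price
    FROM diamonds
    GROUP BY depth_range
    ORDER BY avg_price DESC
    """
).fetchall()

print(rows)
```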

Price Distribution by Table

This SQL query calculates the median, first quartile (q1), and third quartile (q3) prices for each unique table value in the diamonds table. It uses the PERCENTILE_CONT() function to calculate these statistical measures.

The function is applied to the price column, which is cast as a double for accurate calculations. The result set is grouped by the table column, providing insights into the price distribution within each table category.

Price Factor by X, Y and Z

This query will calculate the average price of diamonds grouped by their x, y, and z values from the diamonds table. It selects the columns x, y, and z, and uses the AVG() function to calculate the average price for each combination of x, y, and z values.

The result set is then ordered in descending order based on the average price, providing insights into the average price of diamonds with different dimensions.

Add Constraints

Note that the statements in this section won't actually yield any output, because the INSERT does not satisfy the NOT NULL constraint. Whenever a constraint is not fulfilled, an error is thrown. In this case, this exact error is shown:

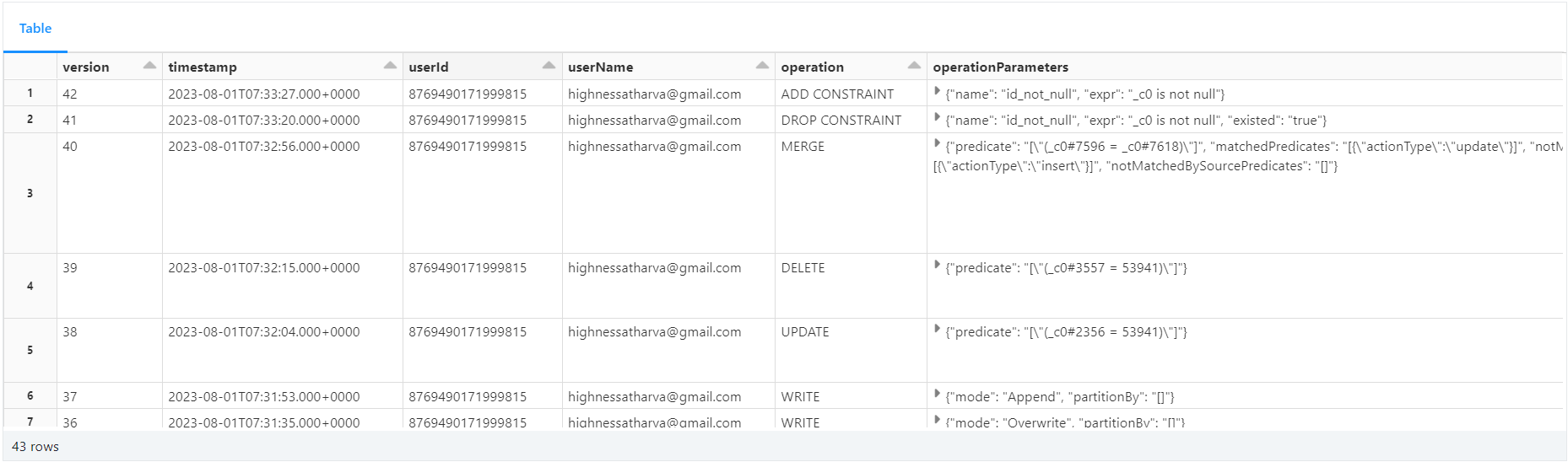

This SQL code snippet demonstrates the alteration of the diamonds table to enforce data integrity.

The first line of code, ALTER TABLE diamonds DROP CONSTRAINT IF EXISTS id_not_null; , checks if a constraint named id_not_null exists in the diamonds table and drops it if it does. This step ensures that any existing constraint with the same name is removed before adding a new one.

The second line of code, ALTER TABLE diamonds ADD CONSTRAINT id_not_null CHECK (_c0 is not null); , adds a new constraint named id_not_null to the diamonds table. This constraint specifies that the column _c0 must not contain null values. It ensures that whenever data is inserted or updated in this table, the '_c0' column cannot have a null value, maintaining data integrity.

However, the subsequent command, INSERT INTO diamonds(_c0, carat, cut, color, clarity, depth, table, price, x, y, z) VALUES (null, 0.22, 'Premium', 'I', 'SI2', '60.3', '62.1', '334', '3.79', '3.75', '2.27'); , attempts to insert a row into the diamonds table with a null value in the _c0 column.

Since the newly added constraint prohibits null values in this column, the INSERT operation will fail, preserving the data integrity specified by the constraint.
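The same behavior can be reproduced in miniature without a cluster. The sketch below uses Python's built-in sqlite3 module as a stand-in for Delta, so it differs in one respect: SQLite cannot add a CHECK constraint with ALTER TABLE, which is why the constraint is declared when the table is created. The reduced column list is also an assumption made to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Declare the named CHECK constraint at creation time (SQLite limitation).
conn.execute(
    """
    CREATE TABLE diamonds (
        _c0 INTEGER,
        carat REAL,
        cut TEXT,
        CONSTRAINT id_not_null CHECK (_c0 IS NOT NULL)
    )
    """
)

# Try to insert a row whose _c0 is NULL; the constraint should reject it.
try:
    conn.execute(
        "INSERT INTO diamonds (_c0, carat, cut) VALUES (NULL, 0.22, 'Premium')"
    )
    insert_rejected = False
except sqlite3.IntegrityError as err:
    insert_rejected = True
    print("Insert failed:", err)

# The table is still empty because the failed insert wrote nothing.
row_count = conn.execute("SELECT COUNT(*) FROM diamonds").fetchone()[0]
print("rows in table:", row_count)
```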

How to Work with Dataframes

The best part is that you are not just restricted to using SQL to achieve this. Below, the same thing is done by first loading the dataset into diamonds with Python and then using pyspark library functions to do complex queries.

In the Databricks Delta Lake platform, the spark object represents the SparkSession, which is the entry point for interacting with Spark functionality. It provides a programming interface to work with structured and semi-structured data.

The spark.read.csv() function is used to read a CSV file into a DataFrame. In this case, it reads the diamonds.csv file from the specified path. The arguments passed to the function include:

- "/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv" : This is the path to the CSV file. You can replace this with the actual path where your file is located.

- header="true" : This specifies that the first row of the CSV file contains the column names.

- inferSchema="true" : This instructs Spark to automatically infer the data types of the columns in the DataFrame.

Once the CSV file is read, it is stored in the diamonds variable as a DataFrame. The DataFrame represents a distributed collection of data organized into named columns. It provides various functions and methods to manipulate and analyze the data.

By reading the CSV file into a DataFrame on the Databricks Delta Lake platform, you can leverage the rich querying and processing capabilities of Spark to perform data analysis, transformations, and other operations on the diamonds data.

Manipulate the Data and Display the Results

The example below shows that on the Databricks Delta Lake platform you are not limited to SQL queries. You can also use Python and its rich ecosystem of libraries, such as PySpark, to perform complex data manipulations and analyses.

By using Python, you have access to a wide range of functions and methods provided by PySpark's DataFrame API. This allows you to perform various transformations, aggregations, calculations, and sorting operations on your data.

Whether you choose to use SQL or Python, the Databricks Delta Lake platform provides a flexible environment for data processing and analysis, enabling you to unlock valuable insights from your data.

Firstly, the from pyspark.sql.functions import avg statement imports the avg function from the pyspark.sql.functions module. This function is used to calculate the average value of a column.

Next, the diamonds.select("color", "price").groupBy("color").agg(avg("price")).sort("color") expression performs the following operations:

diamonds.select("color", "price") selects only the color and price columns from the diamonds DataFrame.

groupBy("color") groups the data based on the color column.

agg(avg("price")) calculates the average price for each group (color). The avg("price") argument specifies that we want to calculate the average of the "price" column.

sort("color") sorts the resulting DataFrame in ascending order based on the color column.

Finally, the display() function is used to visualize the resulting DataFrame in a tabular format.
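Putting the pieces described above together, the load and the aggregation might look like the sketch below. It assumes a Databricks notebook where spark and display() are already provided; the two helper function names are introduced here for illustration only.

```python
DIAMONDS_CSV = "/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv"


def load_diamonds(spark):
    """Read the sample CSV into a DataFrame; `spark` is the SparkSession."""
    return spark.read.csv(DIAMONDS_CSV, header="true", inferSchema="true")


def avg_price_by_color(diamonds):
    """Return the average price per color, sorted by color."""
    # Imported inside the function so the helpers can be defined
    # even in an environment where PySpark is not installed.
    from pyspark.sql.functions import avg

    return (
        diamonds.select("color", "price")
        .groupBy("color")
        .agg(avg("price"))
        .sort("color")
    )


# In a Databricks notebook:
#   diamonds = load_diamonds(spark)
#   display(avg_price_by_color(diamonds))
```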

Version Control and Time Travel in Delta

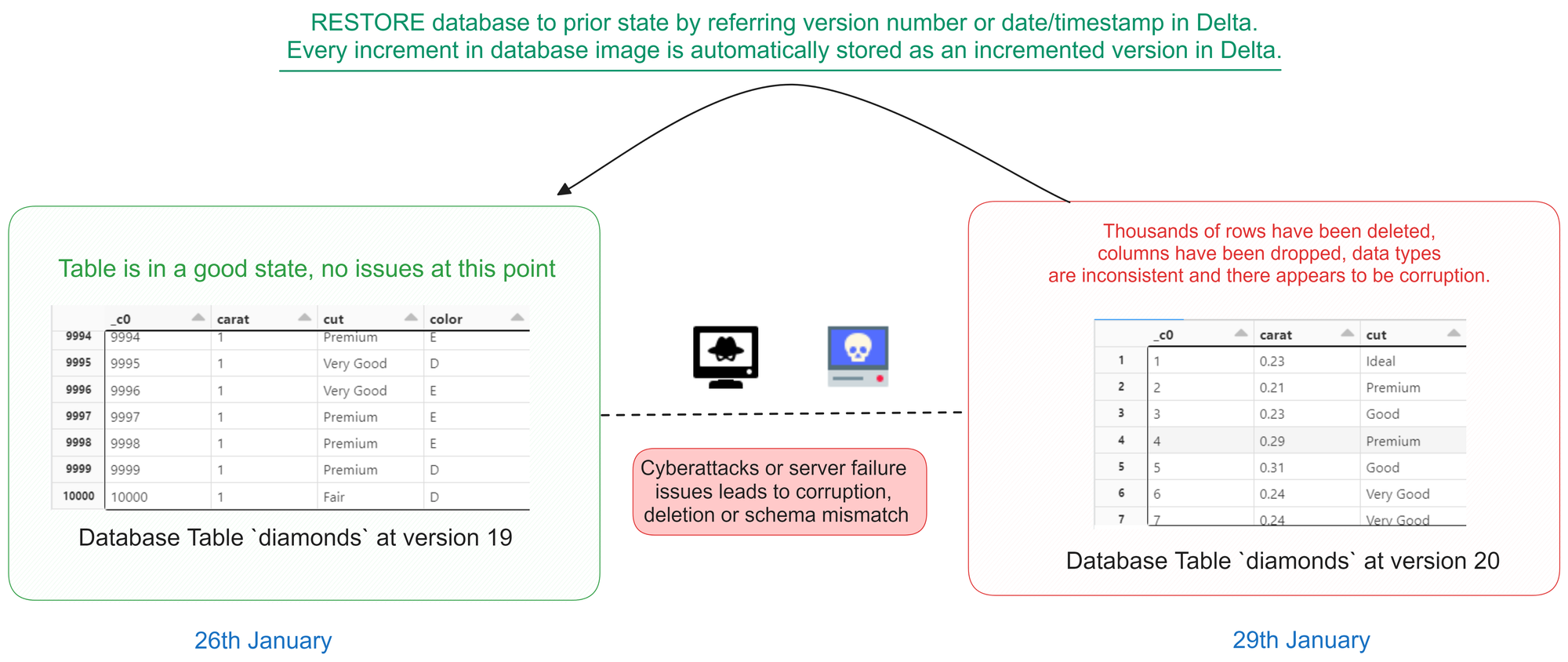

Databricks Delta’s time travel capabilities simplify building data pipelines. They come in handy when auditing data changes, reproducing experiments and reports, or rolling back database transactions. They are also useful for disaster recovery, since they allow us to undo changes and shift back to any specific version of a table.

As you write into a Delta table or directory, every operation is automatically versioned. Query a table by referring to a timestamp or a version number.

The command below returns a list of all the versions and timestamps in a table called diamonds :

Restore Setup

Delta provides built-in support for backup and restore strategies to handle issues like data corruption or accidental data loss. In our scenario, we'll intentionally delete some rows from the main table to simulate such situations.

We'll then use Delta's restore capability to revert the table to a point in time before the delete operation. By doing so, we can verify if the deletion was successful or if the data was restored correctly to its previous state. This feature ensures data safety and provides an easy way to recover from undesirable changes or failures.

Here's the code:

Restoring From A Version Number

The code below restores the diamonds table to version 19 using Delta's restore capability. After the restoration, a SELECT statement retrieves all data from the diamonds table, which now matches its state at version 19.

This process allows you to view the historical state of the table at that specific version, enabling data analysis or comparisons with the current version.
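The history lookup and the restore described in this section can be sketched as three SQL statements. The helper below only builds the statement strings, so it can be run anywhere; the function name is hypothetical, and version 19 is simply the number used in the text. In a notebook, each statement would be passed to spark.sql() or run in a %sql cell.

```python
def restore_walkthrough(table: str, version: int) -> list:
    """Build the SQL for inspecting history and restoring `table` to `version`."""
    version = int(version)  # make sure only a number is interpolated
    return [
        f"DESCRIBE HISTORY {table}",                          # list versions and timestamps
        f"RESTORE TABLE {table} TO VERSION AS OF {version}",  # roll the table back
        f"SELECT * FROM {table}",                             # inspect the restored data
    ]


statements = restore_walkthrough("diamonds", 19)
for statement in statements:
    print(statement)

# In a Databricks notebook, each statement could be executed with:
#   spark.sql(statement)
```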

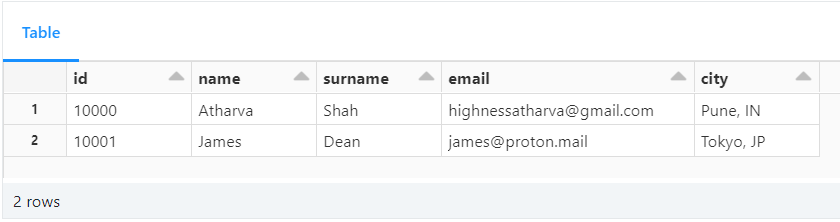

Autogenerated Fields

Let us see how to use auto-increment in Delta with SQL. The code below demonstrates the creation of a table called test__autogen with an "autogenerated" field named id. The id column is defined as BIGINT GENERATED ALWAYS AS IDENTITY, meaning its values are generated automatically during insertion.

The id column acts as an auto-incrementing key for the table, so each new record receives a unique identifier without any manual input. This saves developers from having to generate unique identifiers themselves and keeps data insertion simple.

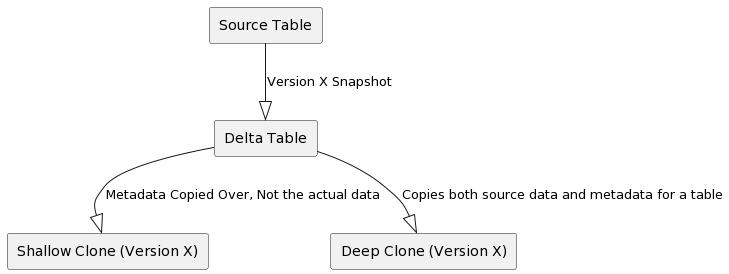

Delta Table Cloning

Cloning Delta tables allows you to create a replica of an existing Delta table at a specific version. This feature is particularly valuable when you need to transfer data from a production environment to a staging environment or when archiving a specific version for regulatory purposes.

There are two types of clones available:

- Deep Clone: This type of clone copies both the source table data and metadata to the clone target. In other words, it replicates the entire table, making it independent of the source.

- Shallow Clone: A shallow clone only replicates the table metadata without copying the actual data files to the clone target. As a result, these clones are more cost-effective to create. However, it's crucial to note that shallow clones act as pointers to the main table. If a VACUUM operation is performed on the original table, it may delete the underlying files and potentially impact the shallow clone.

It's important to remember that any modifications made to either deep or shallow clones only affect the clones themselves and not the source table.

Cloning Delta tables is a powerful feature that simplifies data replication and version archiving, enhancing data management capabilities within your Delta Lake environment.

The code below shows how to clone a table using shallow and deep clones:
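As a rough illustration, the helper below builds CLONE statements of the kind Databricks SQL accepts. The helper itself and the target table names diamonds_shallow and diamonds_deep are hypothetical, and the version number is just an example. The resulting strings are meant to be run with spark.sql() or in a %sql cell.

```python
def clone_statement(target, source, kind="DEEP", version=None):
    """Build a CREATE OR REPLACE TABLE ... CLONE statement."""
    kind = kind.upper()
    if kind not in ("DEEP", "SHALLOW"):
        raise ValueError("kind must be 'DEEP' or 'SHALLOW'")
    statement = f"CREATE OR REPLACE TABLE {target} {kind} CLONE {source}"
    if version is not None:
        # Clone the source as it existed at a specific table version.
        statement += f" VERSION AS OF {int(version)}"
    return statement


shallow_sql = clone_statement("diamonds_shallow", "diamonds", kind="shallow")
deep_sql = clone_statement("diamonds_deep", "diamonds", version=19)
print(shallow_sql)
print(deep_sql)
```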

Delta Magic Commands

Databricks notebooks offer magic commands, convenient shortcuts that are useful when working with Delta tables. They let you switch languages, run SQL, inspect files, and install packages without leaving the notebook.

You can use these shortcut commands to improve productivity by streamlining common tasks within a notebook environment.

- %run : runs a Python file or a notebook.

- %sh : executes shell commands on the cluster's driver node.

- %fs : allows you to interact with the Databricks file system.

- %sql : allows you to run SQL queries.

- %scala : switches the notebook context to Scala.

- %python : switches the notebook context to Python.

- %md : allows you to write markdown text.

- %r : switches the notebook context to R.

- %lsmagic : lists all the available magic commands.

- %jobs : lists all the running jobs.

- %config : allows you to set configuration options for the notebook.

- %reload : reloads the contents of a module.

- %pip : allows you to install Python packages.

- %load : loads the contents of a file into a cell.

- %matplotlib : sets up the matplotlib backend.

- %who : lists all the variables in the current scope.

- %env : allows you to set environment variables.

This in-depth handbook explored the power of Databricks, a platform that unifies analytics and data science in a single workspace. We went through Databricks Workspace, interactive analytics, and Delta Lake, emphasizing its data manipulation and analysis capabilities.

Delta, a data integrity and agility engine, supports SQL commands as well as sophisticated queries. DataFrames are used to shape and display data to improve insights. Version control and time travel make it possible to look back at, and restore, earlier states of a table. Delta's table cloning lets you run experiments on a copy of the data without touching the source.


Google Cloud Facilitator | FullStack Django Developer | Python Expert with a flair for Data Science and REST APIs | Sir Read-A-Lot. Also a cinephile and music aficionado.


MungingData

Piles of precious data.

Introduction to Delta Lake and Time Travel

Delta Lake is a wonderful technology that adds powerful features to Parquet data lakes.

This blog post demonstrates how to create and incrementally update Delta lakes.

We will learn how the Delta transaction log stores data lake metadata.

Then we’ll see how the transaction log allows us to time travel and explore our data at a given point in time.

Creating a Delta data lake

Let’s create a Delta lake from a CSV file with data on people. Here’s the CSV data we’ll use:

Here’s the code that’ll read the CSV file into a DataFrame and write it out as a Delta data lake (all of the code in this post is stored in this GitHub repo).

The person_data_lake directory will contain these files:

The data is stored in a Parquet file and the metadata is stored in the _delta_log/00000000000000000000.json file.

The JSON file contains information on the write transaction, schema of the data, and what file was added. Let’s inspect the contents of the JSON file.

Incrementally updating Delta data lake

Let’s use some New York City taxi data to build and then incrementally update a Delta data lake.

Here’s the code that’ll initially build the Delta data lake:

This code creates a Parquet file and a _delta_log/00000000000000000000.json file.

Let’s inspect the contents of the incremental Delta data lake.

The Delta lake contains 5 rows of data after the first load.

Let’s load another file into the Delta data lake with SaveMode.Append :

This code creates a Parquet file and a _delta_log/00000000000000000001.json file. The incremental_data_lake contains these files now:

The Delta lake contains 10 rows of data after the file is loaded:

Time travel

Delta lets you time travel and explore the state of the data lake as of a given data load. Let’s write a query to examine the incrementally updating Delta data lake after the first data load (ignoring the second data load).

The option("versionAsOf", 0) tells Delta to only grab the files in _delta_log/00000000000000000000.json and ignore the files in _delta_log/00000000000000000001.json .
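To show how version numbers map to transaction log files, here is a PySpark-flavored sketch. The two helper names are introduced for illustration; read_version assumes an existing SparkSession with Delta Lake configured and is not called here, while delta_log_file is plain Python and can be run anywhere.

```python
def delta_log_file(version: int) -> str:
    """Relative path of the JSON commit file for a given table version."""
    # Commit files are named after the version, zero-padded to 20 digits.
    return f"_delta_log/{version:020d}.json"


def read_version(spark, path: str, version: int):
    """Load the Delta table at `path` as of `version`."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)


print(delta_log_file(0))
print(delta_log_file(1))
```

Reading version 0 therefore reflects only what the first commit file describes, while version 1 also includes the appended data.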

Let’s say you’re training a machine learning model off of a data lake and want to hold the data constant while experimenting. Delta lake makes it easy to use a single version of the data when you’re training your model.

You can easily access a full history of the Delta lake transaction log.

The schema of the Delta history table is as follows:

We can also grab a Delta table version by timestamp.

This is the same as grabbing version 1 of our Delta table (examine the transaction log history output to see why):

This blog post just scratches the surface on the host of features offered by Delta Lake.

In the coming blog posts we’ll explore how to compact Delta lakes, schema evolution, schema enforcement, updates, deletes, and streaming.


Table batch reads and writes

Delta Lake supports most of the options provided by Apache Spark DataFrame read and write APIs for performing batch reads and writes on tables.

For many Delta Lake operations on tables, you enable integration with Apache Spark DataSourceV2 and Catalog APIs (since 3.0) by setting configurations when you create a new SparkSession . See Configure SparkSession .

Create a table

Delta Lake supports creating two types of tables—tables defined in the metastore and tables defined by path.

To work with metastore-defined tables, you must enable integration with Apache Spark DataSourceV2 and Catalog APIs by setting configurations when you create a new SparkSession . See Configure SparkSession .

You can create tables in the following ways.

SQL DDL commands : You can use standard SQL DDL commands supported in Apache Spark (for example, CREATE TABLE and REPLACE TABLE ) to create Delta tables.

SQL also supports creating a table at a path, without creating an entry in the Hive metastore.


DataFrameWriter API : If you want to simultaneously create a table and insert data into it from Spark DataFrames or Datasets, you can use the Spark DataFrameWriter ( Scala or Java and Python ).

You can also create Delta tables using the Spark DataFrameWriterV2 API.

DeltaTableBuilder API : You can also use the DeltaTableBuilder API in Delta Lake to create tables. Compared to the DataFrameWriter APIs, this API makes it easier to specify additional information like column comments, table properties, and generated columns .

This feature is new and is in Preview.

See the API documentation for details.

You can partition data to speed up queries or DML that have predicates involving the partition columns. To partition data when you create a Delta table, specify a partition by columns. The following example partitions by gender.

To determine whether a table contains a specific partition, use the statement SELECT COUNT(*) > 0 FROM <table-name> WHERE <partition-column> = <value> . If the partition exists, true is returned. For example:

For tables defined in the metastore, you can optionally specify the LOCATION as a path. Tables created with a specified LOCATION are considered unmanaged by the metastore. Unlike a managed table, where no path is specified, an unmanaged table’s files are not deleted when you DROP the table.

When you run CREATE TABLE with a LOCATION that already contains data stored using Delta Lake, Delta Lake does the following:

If you specify only the table name and location, the table in the metastore automatically inherits the schema, partitioning, and table properties of the existing data. This functionality can be used to “import” data into the metastore.

If you specify any configuration (schema, partitioning, or table properties), Delta Lake verifies that the specification exactly matches the configuration of the existing data.

If the specified configuration does not exactly match the configuration of the data, Delta Lake throws an exception that describes the discrepancy.

The metastore is not the source of truth about the latest information of a Delta table. In fact, the table definition in the metastore may not contain all the metadata like schema and properties. It contains the location of the table, and the table’s transaction log at the location is the source of truth. If you query the metastore from a system that is not aware of this Delta-specific customization, you may see incomplete or stale table information.

Delta Lake supports generated columns which are a special type of columns whose values are automatically generated based on a user-specified function over other columns in the Delta table. When you write to a table with generated columns and you do not explicitly provide values for them, Delta Lake automatically computes the values. For example, you can automatically generate a date column (for partitioning the table by date) from the timestamp column; any writes into the table need only specify the data for the timestamp column. However, if you explicitly provide values for them, the values must satisfy the constraint (<value> <=> <generation expression>) IS TRUE or the write will fail with an error.

Tables created with generated columns have a higher table writer protocol version than the default. See How does Delta Lake manage feature compatibility? to understand table protocol versioning and what it means to have a higher version of a table protocol version.

The following example shows how to create a table with generated columns:

Generated columns are stored as if they were normal columns. That is, they occupy storage.

The following restrictions apply to generated columns:

A generation expression can use any SQL functions in Spark that always return the same result when given the same argument values, except the following types of functions:

User-defined functions.

Aggregate functions.

Window functions.

Functions returning multiple rows.

For Delta Lake 1.1.0 and above, MERGE operations support generated columns when you set spark.databricks.delta.schema.autoMerge.enabled to true.

Delta Lake may be able to generate partition filters for a query whenever a partition column is defined by one of the following expressions:

CAST(col AS DATE) and the type of col is TIMESTAMP .

YEAR(col) and the type of col is TIMESTAMP .

Two partition columns defined by YEAR(col), MONTH(col) and the type of col is TIMESTAMP .

Three partition columns defined by YEAR(col), MONTH(col), DAY(col) and the type of col is TIMESTAMP .

Four partition columns defined by YEAR(col), MONTH(col), DAY(col), HOUR(col) and the type of col is TIMESTAMP .

SUBSTRING(col, pos, len) and the type of col is STRING .

DATE_FORMAT(col, format) and the type of col is TIMESTAMP .

DATE_TRUNC(format, col) and the type of col is TIMESTAMP or DATE .

TRUNC(col, format) and the type of col is either TIMESTAMP or DATE .

If a partition column is defined by one of the preceding expressions, and a query filters data using the underlying base column of a generation expression, Delta Lake looks at the relationship between the base column and the generated column, and populates partition filters based on the generated partition column if possible. For example, given the following table:

If you then run the following query:

Delta Lake automatically generates a partition filter so that the preceding query only reads the data in partition date=2020-10-01 even if a partition filter is not specified.

As another example, given the following table:

Delta Lake automatically generates a partition filter so that the preceding query only reads the data in partition year=2020/month=10/day=01 even if a partition filter is not specified.

You can use an EXPLAIN clause and check the provided plan to see whether Delta Lake automatically generates any partition filters.

Delta enables the specification of default expressions for columns in Delta tables. When users write to these tables without explicitly providing values for certain columns, or when they explicitly use the DEFAULT SQL keyword for a column, Delta automatically generates default values for those columns. For more information, please refer to the dedicated documentation page .

By default, special characters such as spaces and any of the characters ,;{}()\n\t= are not supported in table column names. To include these special characters in a table’s column name, enable column mapping .

Delta Lake configurations set in the SparkSession override the default table properties for new Delta Lake tables created in the session. The prefix used in the SparkSession is different from the configurations used in the table properties.

For example, to set the delta.appendOnly = true property for all new Delta Lake tables created in a session, set the following:

To modify table properties of existing tables, use SET TBLPROPERTIES .

You can load a Delta table as a DataFrame by specifying a table name or a path:

The DataFrame returned automatically reads the most recent snapshot of the table for any query; you never need to run REFRESH TABLE . Delta Lake automatically uses partitioning and statistics to read the minimum amount of data when there are applicable predicates in the query.


Delta Lake time travel allows you to query an older snapshot of a Delta table. Time travel has many use cases, including:

Re-creating analyses, reports, or outputs (for example, the output of a machine learning model). This could be useful for debugging or auditing, especially in regulated industries.

Writing complex temporal queries.

Fixing mistakes in your data.

Providing snapshot isolation for a set of queries for fast changing tables.

This section describes the supported methods for querying older versions of tables, data retention concerns, and provides examples.

The timestamp of each version N depends on the timestamp of the log file corresponding to the version N in Delta table log. Hence, time travel by timestamp can break if you copy the entire Delta table directory to a new location. Time travel by version will be unaffected.

This section shows how to query an older version of a Delta table.

SQL AS OF syntax

timestamp_expression can be any one of:

'2018-10-18T22:15:12.013Z' , that is, a string that can be cast to a timestamp

cast('2018-10-18 13:36:32 CEST' as timestamp)

'2018-10-18' , that is, a date string

current_timestamp() - interval 12 hours

date_sub(current_date(), 1)

Any other expression that is or can be cast to a timestamp

version is a long value that can be obtained from the output of DESCRIBE HISTORY table_spec .

Neither timestamp_expression nor version can be subqueries.
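The two forms of the AS OF syntax can be illustrated with a small query builder. This is only a sketch: the helper and the table name people10m are hypothetical, and the function just assembles a query string that could then be passed to spark.sql().

```python
def as_of_query(table, version=None, timestamp_expression=None):
    """Build a time travel SELECT using either a version or a timestamp."""
    if (version is None) == (timestamp_expression is None):
        raise ValueError("pass exactly one of version or timestamp_expression")
    if version is not None:
        # version is a long value, for example taken from DESCRIBE HISTORY.
        return f"SELECT * FROM {table} VERSION AS OF {int(version)}"
    # timestamp_expression is inserted as written, e.g. a quoted string.
    return f"SELECT * FROM {table} TIMESTAMP AS OF {timestamp_expression}"


by_version = as_of_query("people10m", version=123)
by_timestamp = as_of_query(
    "people10m", timestamp_expression="'2018-10-18T22:15:12.013Z'"
)
print(by_version)
print(by_timestamp)
```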

DataFrameReader options

DataFrameReader options allow you to create a DataFrame from a Delta table that is fixed to a specific version of the table.

For timestamp_string , only date or timestamp strings are accepted. For example, "2019-01-01" and "2019-01-01T00:00:00.000Z" .

A common pattern is to use the latest state of the Delta table throughout the execution of a job to update downstream applications.

Because Delta tables auto update, a DataFrame loaded from a Delta table may return different results across invocations if the underlying data is updated. By using time travel, you can fix the data returned by the DataFrame across invocations:

Fix accidental deletes to a table for the user 111 :

Fix accidental incorrect updates to a table:

Query the number of new customers added over the last week.

To time travel to a previous version, you must retain both the log and the data files for that version.

The data files backing a Delta table are never deleted automatically; data files are deleted only when you run VACUUM . VACUUM does not delete Delta log files; log files are automatically cleaned up after checkpoints are written.

By default you can time travel to a Delta table up to 30 days old unless you have:

Run VACUUM on your Delta table.

Changed the data or log file retention periods using the following table properties :

delta.logRetentionDuration = "interval <interval>" : controls how long the history for a table is kept. The default is interval 30 days .

Each time a checkpoint is written, Delta automatically cleans up log entries older than the retention interval. If you set this config to a large enough value, many log entries are retained. This should not impact performance as operations against the log are constant time. Operations on history are parallel but will become more expensive as the log size increases.

delta.deletedFileRetentionDuration = "interval <interval>" : controls how long ago a file must have been deleted before being a candidate for VACUUM . The default is interval 7 days .

To access 30 days of historical data even if you run VACUUM on the Delta table, set delta.deletedFileRetentionDuration = "interval 30 days" . This setting may cause your storage costs to go up.

Due to log entry cleanup, instances can arise where you cannot time travel to a version that is less than the retention interval. Delta Lake requires all consecutive log entries since the previous checkpoint to time travel to a particular version. For example, with a table initially consisting of log entries for versions [0, 19] and a checkpoint at version 10, if the log entry for version 0 is cleaned up, then you cannot time travel to versions [1, 9]. Increasing the table property delta.logRetentionDuration can help avoid these situations.
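The example with versions [0, 19] can be worked through with a simplified model. The function below is not Delta Lake code; it only encodes the rule stated above, namely that a version is reachable when every log entry after the nearest earlier checkpoint (or from version 0 when there is none) is still present. It ignores data file retention entirely.

```python
def reachable_versions(log_entries, checkpoints):
    """Versions that can be reconstructed in this simplified model."""
    log_entries = set(log_entries)
    checkpoints = set(checkpoints)
    reachable = []
    for version in sorted(log_entries | checkpoints):
        # Start from the latest checkpoint at or before `version`,
        # or from the empty table (-1) when no such checkpoint exists.
        start = max((c for c in checkpoints if c <= version), default=-1)
        needed = range(start + 1, version + 1)
        if all(v in log_entries for v in needed):
            reachable.append(version)
    return reachable


# Log entries for versions 1..19 remain; version 0 has been cleaned up.
after_cleanup = reachable_versions(range(1, 20), checkpoints={10})
print(after_cleanup)
```

In this model versions 1 through 9 drop out because replaying them would need the missing entry for version 0, while version 10 and later can start from the checkpoint.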

To atomically add new data to an existing Delta table, use append mode:

To atomically replace all the data in a table, use overwrite mode:

You can selectively overwrite only the data that matches an arbitrary expression. This feature is available with DataFrames in Delta Lake 1.1.0 and above and supported in SQL in Delta Lake 2.4.0 and above.

The following command atomically replaces events in January in the target table, which is partitioned by start_date , with the data in replace_data :

This sample code writes out the data in replace_data , validates that it all matches the predicate, and performs an atomic replacement. If you want to write out data that doesn’t all match the predicate, to replace the matching rows in the target table, you can disable the constraint check by setting spark.databricks.delta.replaceWhere.constraintCheck.enabled to false:

In Delta Lake 1.0.0 and below, replaceWhere overwrites data matching a predicate over partition columns only. The following command atomically replaces the month in January in the target table, which is partitioned by date , with the data in df :

In Delta Lake 1.1.0 and above, if you want to fall back to the old behavior, you can disable the spark.databricks.delta.replaceWhere.dataColumns.enabled flag:

Dynamic Partition Overwrites

Delta Lake 2.0 and above supports dynamic partition overwrite mode for partitioned tables.

When in dynamic partition overwrite mode, we overwrite all existing data in each logical partition for which the write will commit new data. Any existing logical partitions for which the write does not contain data will remain unchanged. This mode is only applicable when data is being written in overwrite mode: either INSERT OVERWRITE in SQL, or a DataFrame write with df.write.mode("overwrite") .

Configure dynamic partition overwrite mode by setting the Spark session configuration spark.sql.sources.partitionOverwriteMode to dynamic . You can also enable this by setting the DataFrameWriter option partitionOverwriteMode to dynamic . If present, the query-specific option overrides the mode defined in the session configuration. The default for partitionOverwriteMode is static .
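The difference between the two modes can be pictured with a toy model in plain Python. This is not how Delta Lake is implemented; it only mimics the outcome, with a table represented as a dict from partition value to rows. The partition values and rows are invented. The comments show the real Spark settings named in the text.

```python
def overwrite(table, new_rows, mode="static"):
    """Return the table contents after an overwrite-mode write.

    `table` and `new_rows` map a partition value to a list of rows.
    """
    if mode == "dynamic":
        # Replace only the partitions that the write contains data for.
        result = dict(table)
        result.update(new_rows)
        return result
    if mode == "static":
        # Replace the whole table with the newly written data.
        return dict(new_rows)
    raise ValueError("mode must be 'static' or 'dynamic'")


table = {"2024-01": ["a"], "2024-02": ["b"]}
new_rows = {"2024-02": ["c"]}

dynamic_result = overwrite(table, new_rows, mode="dynamic")
static_result = overwrite(table, new_rows, mode="static")
print(dynamic_result)
print(static_result)

# With Spark, the mode is chosen per session or per write:
#   spark.conf.set("spark.sql.sources.partitionOverwriteMode", "dynamic")
#   df.write.format("delta").mode("overwrite") \
#       .option("partitionOverwriteMode", "dynamic").save(path)
```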

Dynamic partition overwrite conflicts with the option replaceWhere for partitioned tables.

If dynamic partition overwrite is enabled in the Spark session configuration, and replaceWhere is provided as a DataFrameWriter option, then Delta Lake overwrites the data according to the replaceWhere expression (query-specific options override session configurations).

You’ll receive an error if the DataFrameWriter options have both dynamic partition overwrite and replaceWhere enabled.

Validate that the data written with dynamic partition overwrite touches only the expected partitions. A single row in the incorrect partition can lead to unintentionally overwriting an entire partition. We recommend using replaceWhere to specify which data to overwrite.

If a partition has been accidentally overwritten, you can use Restore a Delta table to an earlier state to undo the change.

For Delta Lake support for updating tables, see Table deletes, updates, and merges .

You can use the SQL session configuration spark.sql.files.maxRecordsPerFile to specify the maximum number of records to write to a single file for a Delta Lake table. Specifying a value of zero or a negative value represents no limit.

You can also use the DataFrameWriter option maxRecordsPerFile when using the DataFrame APIs to write to a Delta Lake table. When maxRecordsPerFile is specified, the value of the SQL session configuration spark.sql.files.maxRecordsPerFile is ignored.
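As a rough illustration of how the limit and its precedence behave, the following plain-Python sketch picks the effective limit and counts the files a single write task would need. The function names are hypothetical, the session default of 0 (no limit) is an assumption, and the real number of files also depends on how Spark distributes the data across tasks.

```python
import math


def effective_max_records(session_value=0, writer_option=None):
    """The writer option, when given, wins over the session configuration."""
    return session_value if writer_option is None else writer_option


def files_for_task(num_records, max_records):
    """Files one write task would need under a per-file record cap (toy model)."""
    if num_records == 0:
        return 0
    if max_records <= 0:
        # Zero or a negative value means no limit.
        return 1
    return math.ceil(num_records / max_records)


limit = effective_max_records(session_value=1000, writer_option=250)
file_count = files_for_task(1001, limit)
```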

Sometimes a job that writes data to a Delta table is restarted for various reasons (for example, the job encounters a failure). The failed job may or may not have written the data to the Delta table before terminating. If the data was written, the restarted job writes the same data to the Delta table again, which results in duplicate data.

To address this, Delta tables support the following DataFrameWriter options to make the writes idempotent:

txnAppId : A unique string that you can pass on each DataFrame write. For example, this can be the name of the job.

txnVersion : A monotonically increasing number that acts as the transaction version. This number needs to be unique for the data that is being written to the Delta table(s). For example, this can be the epoch seconds of the instant when the query is attempted for the first time. Any subsequent restarts of the same job need to have the same value for txnVersion.

The combination of these options needs to be unique for each new batch of data that is ingested into the Delta table, and the txnVersion needs to be higher than that of the last data ingested into the Delta table. For example:

The last successfully written data has the option values dailyETL:23423 (txnAppId:txnVersion).

The next write of data should have txnAppId = dailyETL and a txnVersion of at least 23424 (one more than the txnVersion of the last written data).

Any attempt to write data with txnAppId = dailyETL and a txnVersion of 23422 or less is ignored, because the txnVersion is less than the last recorded txnVersion in the table.

An attempt to write data with txnAppId:txnVersion as anotherETL:23424 succeeds, because it uses a different txnAppId from the last ingested data.
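The example above can be replayed with a toy model in plain Python. This is only a sketch of the bookkeeping described in this section, not Delta Lake's implementation: the class `ToyDeltaTable` and its attributes are hypothetical, and it assumes a write is skipped whenever its txnVersion is not higher than the last one recorded for the same txnAppId.

```python
class ToyDeltaTable:
    """Toy table that remembers the last txnVersion committed per txnAppId."""

    def __init__(self):
        self.rows = []
        self.last_txn_version = {}  # txnAppId -> last committed txnVersion

    def write(self, rows, txn_app_id=None, txn_version=None):
        """Append rows; return False when the write is skipped as a duplicate."""
        if txn_app_id is not None and txn_version is not None:
            last = self.last_txn_version.get(txn_app_id)
            if last is not None and txn_version <= last:
                # Not newer than what this application already committed.
                return False
            self.last_txn_version[txn_app_id] = txn_version
        self.rows.extend(rows)
        return True


table = ToyDeltaTable()
first = table.write(["batch-1"], "dailyETL", 23423)      # initial write
retry = table.write(["batch-1"], "dailyETL", 23423)      # restarted job, same version
next_batch = table.write(["batch-2"], "dailyETL", 23424)
stale = table.write(["old"], "dailyETL", 23422)          # older version
other_app = table.write(["batch-3"], "anotherETL", 23424)
```

Only the retried and the stale writes are skipped, so the table ends up with each batch exactly once.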

You can also configure idempotent writes by setting the Spark session configurations spark.databricks.delta.write.txnAppId and spark.databricks.delta.write.txnVersion. In addition, you can set spark.databricks.delta.write.txnVersion.autoReset.enabled to true to automatically reset spark.databricks.delta.write.txnVersion after every write. When both the writer options and the session configurations are set, the writer option values are used.

This solution assumes that the data being written to the Delta table(s) in multiple retries of the job is the same. If a write attempt to a Delta table succeeds, but a downstream failure triggers a second write attempt with the same txn options and different data, that second write attempt is ignored. This can cause unexpected results.

You can specify user-defined strings as metadata in commits made by these operations, either using the DataFrameWriter option userMetadata or the SparkSession configuration spark.databricks.delta.commitInfo.userMetadata. If both are specified, the option takes precedence. This user-defined metadata is readable in the history operation.

Delta Lake automatically validates that the schema of the DataFrame being written is compatible with the schema of the table. Delta Lake uses the following rules to determine whether a write from a DataFrame to a table is compatible:

All DataFrame columns must exist in the target table. If there are columns in the DataFrame not present in the table, an exception is raised. Columns present in the table but not in the DataFrame are set to null.

DataFrame column data types must match the column data types in the target table. If they don’t match, an exception is raised.

DataFrame column names cannot differ only by case. This means that you cannot have columns such as “Foo” and “foo” defined in the same table. While you can use Spark in case sensitive or insensitive (default) mode, Parquet is case sensitive when storing and returning column information. Delta Lake is case-preserving but insensitive when storing the schema and has this restriction to avoid potential mistakes, data corruption, or loss issues.
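The three rules above can be sketched as a check over simplified schemas. This is an illustrative model only, assuming flat schemas represented as dictionaries from column name to a type string and case-insensitive name matching; the function name `validate_write` is hypothetical and nested types are ignored.

```python
def validate_write(df_schema, table_schema):
    """Check a DataFrame schema against a table schema (toy model).

    Returns the table columns that the write would fill with null.
    Raises ValueError when one of the rules is violated.
    """
    lowered = [name.lower() for name in df_schema]
    # Rule 3: column names must not differ only by case.
    if len(set(lowered)) != len(lowered):
        raise ValueError("column names differ only by case")

    table_types = {name.lower(): dtype for name, dtype in table_schema.items()}
    for name, dtype in df_schema.items():
        key = name.lower()
        # Rule 1: every DataFrame column must exist in the target table.
        if key not in table_types:
            raise ValueError(f"column {name!r} is not present in the table")
        # Rule 2: data types must match.
        if table_types[key] != dtype:
            raise ValueError(f"type mismatch for column {name!r}")

    # Table columns missing from the DataFrame are written as null.
    present = set(lowered)
    return [name for name in table_schema if name.lower() not in present]


null_columns = validate_write({"id": "long"}, {"id": "long", "name": "string"})
```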

Delta Lake supports DDL to add new columns explicitly and the ability to update the schema automatically.

If you specify other options, such as partitionBy, in combination with append mode, Delta Lake validates that they match and throws an error for any mismatch. When partitionBy is not present, appends automatically follow the partitioning of the existing data.

Delta Lake lets you update the schema of a table. The following types of changes are supported:

Adding new columns (at arbitrary positions)

Reordering existing columns

You can make these changes explicitly using DDL or implicitly using DML.

When you update a Delta table schema, streams that read from that table terminate. If you want the stream to continue you must restart it.

You can use the following DDL to explicitly change the schema of a table.

Add columns

By default, nullability is true .

To add a column to a nested field, use:

If the schema before running ALTER TABLE boxes ADD COLUMNS (colB.nested STRING AFTER field1) is:

the schema after is:

Adding nested columns is supported only for structs. Arrays and maps are not supported.
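The effect of ALTER TABLE boxes ADD COLUMNS (colB.nested STRING AFTER field1) on the schema can be pictured with a toy model, where a struct is an ordered dictionary of fields. The function `add_nested_column`, the top-level column colA, and the string types are assumptions made for illustration; this is not how Delta Lake stores or updates schemas.

```python
def add_nested_column(schema, struct_name, new_field, dtype, after):
    """Return a copy of `schema` with `new_field` inserted after `after` in a struct."""
    struct = schema[struct_name]
    if not isinstance(struct, dict):
        # Mirrors the restriction that only structs accept nested columns.
        raise TypeError(f"{struct_name!r} is not a struct")
    if after not in struct:
        raise KeyError(after)

    rebuilt = {}
    for name, field_type in struct.items():
        rebuilt[name] = field_type
        if name == after:
            rebuilt[new_field] = dtype
    return {**schema, struct_name: rebuilt}


before = {"colA": "string", "colB": {"field1": "string", "field2": "string"}}
after = add_nested_column(before, "colB", "nested", "string", after="field1")
```

The new field lands between field1 and field2, and the original schema object is left unchanged.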

Change column comment or ordering

To change a column in a nested field, use:

If the schema before running ALTER TABLE boxes CHANGE COLUMN colB.field2 field2 STRING FIRST is:

Replace columns

When running the following DDL:

if the schema before is:

Rename columns

This feature is available in Delta Lake 1.2.0 and above. This feature is currently experimental.

To rename columns without rewriting any of the columns’ existing data, you must enable column mapping for the table. See enable column mapping .

To rename a column:

To rename a nested field:

When you run the following command:

If the schema before is:

Then the schema after is:

Drop columns

This feature is available in Delta Lake 2.0 and above. This feature is currently experimental.

To drop columns as a metadata-only operation without rewriting any data files, you must enable column mapping for the table. See enable column mapping .

Dropping a column from metadata does not delete the underlying data for the column in files.

To drop a column:

To drop multiple columns:

Change column type or name

You can change a column’s type or name or drop a column by rewriting the table. To do this, use the overwriteSchema option:

Change a column type

Change a column name

Delta Lake can automatically update the schema of a table as part of a DML transaction (either appending or overwriting), and make the schema compatible with the data being written.

Columns that are present in the DataFrame but missing from the table are automatically added as part of a write transaction when:

write or writeStream have .option("mergeSchema", "true")

spark.databricks.delta.schema.autoMerge.enabled is true

When both options are specified, the option from the DataFrameWriter takes precedence. The added columns are appended to the end of the struct they are present in. Case is preserved when appending a new column.
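A simplified picture of automatic schema merging for top-level columns is sketched below. It is a toy model, not Delta Lake's merge logic: schemas are flat dictionaries from column name to type string, names are matched case-insensitively, and both function names are hypothetical.

```python
def merge_enabled(writer_option=None, session_conf=False):
    """The mergeSchema writer option, when given, wins over the session setting."""
    return session_conf if writer_option is None else writer_option


def merge_schema(table_schema, df_schema):
    """Append DataFrame columns missing from the table to the end of its schema."""
    merged = dict(table_schema)
    existing = {name.lower() for name in table_schema}
    for name, dtype in df_schema.items():
        if name.lower() not in existing:
            # Case of the new column name is preserved.
            merged[name] = dtype
    return merged


table_schema = {"id": "long", "name": "string"}
df_schema = {"name": "string", "Score": "double", "id": "long"}
merged = merge_schema(table_schema, df_schema)
```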

NullType columns

Because Parquet doesn’t support NullType , NullType columns are dropped from the DataFrame when writing into Delta tables, but are still stored in the schema. When a different data type is received for that column, Delta Lake merges the schema to the new data type. If Delta Lake receives a NullType for an existing column, the old schema is retained and the new column is dropped during the write.

NullType in streaming is not supported. Since you must set schemas when using streaming, this should be very rare. NullType is also not accepted for complex types such as ArrayType and MapType.

By default, overwriting the data in a table does not overwrite the schema. When overwriting a table using mode("overwrite") without replaceWhere , you may still want to overwrite the schema of the data being written. You replace the schema and partitioning of the table by setting the overwriteSchema option to true :

Delta Lake supports the creation of views on top of Delta tables just like you might with a data source table.

The core challenge when you operate with views is resolving the schemas. If you alter a Delta table schema, you must recreate derivative views to account for any additions to the schema. For instance, if you add a new column to a Delta table, you must make sure that this column is available in the appropriate views built on top of that base table.

You can store your own metadata as a table property using TBLPROPERTIES in CREATE and ALTER . You can then SHOW that metadata. For example:

TBLPROPERTIES are stored as part of Delta table metadata. You cannot define new TBLPROPERTIES in a CREATE statement if a Delta table already exists in a given location.

In addition, to tailor behavior and performance, Delta Lake supports certain Delta table properties:

Block deletes and updates in a Delta table: delta.appendOnly=true .

Configure the time travel retention properties: delta.logRetentionDuration=<interval-string> and delta.deletedFileRetentionDuration=<interval-string> . For details, see Data retention .

Configure the number of columns for which statistics are collected: delta.dataSkippingNumIndexedCols=n. This property indicates to the writer that statistics are to be collected only for the first n columns in the table. The data skipping code also ignores statistics for any column beyond this column index. This property takes effect only for new data that is written out.

Modifying a Delta table property is a write operation that will conflict with other concurrent write operations, causing them to fail. We recommend that you modify a table property only when there are no concurrent write operations on the table.

You can also set delta.-prefixed properties during the first commit to a Delta table using Spark configurations. To initialize a Delta table with the property delta.appendOnly=true, set the Spark configuration spark.databricks.delta.properties.defaults.appendOnly to true. For example:
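The naming pattern in the appendOnly example can be written as a small helper that maps a table property to the matching default configuration key. The helper name `default_property_conf_key` is hypothetical, and the sketch assumes other delta.-prefixed properties follow the same pattern as the one shown in this section.

```python
def default_property_conf_key(table_property):
    """Map a delta.-prefixed table property to its session default key."""
    prefix = "delta."
    if not table_property.startswith(prefix):
        raise ValueError("expected a property that starts with 'delta.'")
    return "spark.databricks.delta.properties.defaults." + table_property[len(prefix):]


conf_key = default_property_conf_key("delta.appendOnly")
```

With a live session, the resulting key would then be set to true through the usual Spark configuration mechanism before the first commit to the table.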

See also the Delta table properties reference .

You can enable asynchronous syncing of table schema and properties to the metastore by setting spark.databricks.delta.catalog.update.enabled to true . Whenever the Delta client detects that either of these two were changed due to an update, it will sync the changes to the metastore.

The schema is stored in the table properties in HMS. If the schema is small, it will be stored directly under the key spark.sql.sources.schema :

If the schema is large, it is broken down into multiple parts. Appending them together should give the correct schema. For example:
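Reassembling a split schema can be sketched in plain Python. The key names spark.sql.sources.schema.numParts and spark.sql.sources.schema.part.N, and the assumption that the value is a JSON string, follow the convention Spark uses for table properties in the Hive metastore; they are assumptions here, and the function name `read_schema` is hypothetical.

```python
import json


def read_schema(table_properties):
    """Rebuild the schema JSON from metastore table properties (toy model)."""
    whole = table_properties.get("spark.sql.sources.schema")
    if whole is not None:
        # Small schema: stored directly under a single key.
        return json.loads(whole)

    # Large schema: concatenate part.0, part.1, ... in order.
    num_parts = int(table_properties["spark.sql.sources.schema.numParts"])
    text = "".join(
        table_properties[f"spark.sql.sources.schema.part.{i}"] for i in range(num_parts)
    )
    return json.loads(text)


full = json.dumps({
    "type": "struct",
    "fields": [{"name": "id", "type": "long", "nullable": True, "metadata": {}}],
})
middle = len(full) // 2
split_properties = {
    "spark.sql.sources.schema.numParts": "2",
    "spark.sql.sources.schema.part.0": full[:middle],
    "spark.sql.sources.schema.part.1": full[middle:],
}
schema = read_schema(split_properties)
```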

Delta Lake has rich features for exploring table metadata.

It supports SHOW COLUMNS and DESCRIBE TABLE .

It also provides the following unique commands:

DESCRIBE DETAIL: Provides information about schema, partitioning, table size, and so on. For details, see Retrieve Delta table details.

DESCRIBE HISTORY: Provides provenance information, including the operation, user, and so on, and operation metrics for each write to a table. Table history is retained for 30 days. For details, see Retrieve Delta table history.

For many Delta Lake operations, you enable integration with Apache Spark DataSourceV2 and Catalog APIs (since 3.0) by setting the following configurations when you create a new SparkSession .

Alternatively, you can add configurations when submitting your Spark application using spark-submit or when starting spark-shell or pyspark by specifying them as command-line parameters.

Delta Lake uses Hadoop FileSystem APIs to access the storage systems. The credentials for storage systems can usually be set through Hadoop configurations. Delta Lake provides multiple ways to set Hadoop configurations, similar to Apache Spark.

When you start a Spark application on a cluster, you can set Spark configurations in the form of spark.hadoop.* to pass your custom Hadoop configurations. For example, setting a value for spark.hadoop.a.b.c passes the value as the Hadoop configuration a.b.c, and Delta Lake uses it to access Hadoop FileSystem APIs.

See Spark doc for more details.
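The spark.hadoop.* forwarding described above amounts to stripping a prefix. The following sketch shows that mapping on a plain dictionary; it is an illustration of the rule, not Spark's code, and the function name and sample keys are made up.

```python
def hadoop_conf_from_spark(spark_conf):
    """Extract Hadoop configurations from spark.hadoop.* entries (toy model)."""
    prefix = "spark.hadoop."
    return {
        key[len(prefix):]: value
        for key, value in spark_conf.items()
        if key.startswith(prefix)
    }


hadoop_conf = hadoop_conf_from_spark({
    "spark.hadoop.a.b.c": "x.y.z",
    "spark.app.name": "demo",
})
```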

Spark SQL will pass all of the current SQL session configurations to Delta Lake, and Delta Lake will use them to access Hadoop FileSystem APIs. For example, SET a.b.c=x.y.z will tell Delta Lake to pass the value x.y.z as a Hadoop configuration a.b.c , and Delta Lake will use it to access Hadoop FileSystem APIs.

Besides setting Hadoop file system configurations through the Spark (cluster) configurations or SQL session configurations, Delta supports reading Hadoop file system configurations from DataFrameReader and DataFrameWriter options (that is, option keys that start with the fs. prefix) when the table is read or written, by using DataFrameReader.load(path) or DataFrameWriter.save(path).

For example, you can pass your storage credentials through DataFrame options:

You can find the details of the Hadoop file system configurations for your storage in Storage configuration .

A Guide to Working with Time Travel in Spark Delta Lake: A Deep Dive