Streaming video in Safari: Why is it so difficult?

The problem

I recently implemented support for AI tagging of videos in my product Sortal . A part of the feature is that you can then play back the videos you uploaded. I thought, no problem — video streaming seems pretty simple.

In fact, it is so simple (just a few lines of code) that I chose video streaming as the theme for examples in my book Bootstrapping Microservices.

But when we came to testing in Safari, I learned the ugly truth. So let me rephrase the previous assertion: video streaming is simple for Chrome, but not so much for Safari.

Why is it so difficult for Safari? What does it take to make it work for Safari? The answers to these questions are revealed in this blog post.

Try it for yourself

Before we start looking at the code together, please try it for yourself! The code that accompanies this blog post is available on GitHub. You can download the code or use Git to clone the repository. You’ll need Node.js installed to try it out.

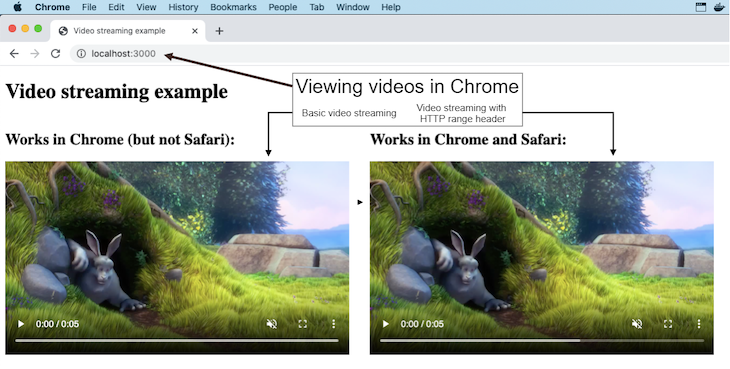

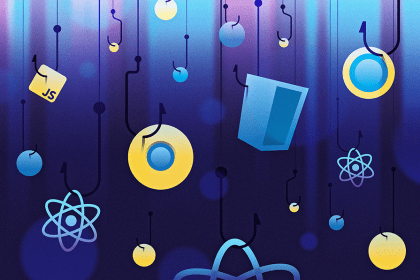

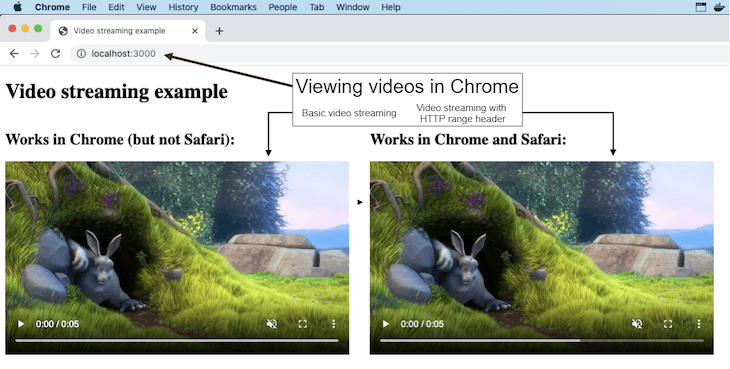

Start the server as instructed in the readme and navigate your browser to http://localhost:3000. You’ll see either Figure 1 or Figure 2, depending on whether you are viewing the page in Chrome or Safari.

Notice that in Figure 2, when the webpage is viewed in Safari, the video on the left side doesn’t work. However, the example on the right does work, and this post explains how I achieved a working version of the video streaming code for Safari.

Figure 1: Video streaming example viewed in Chrome.

Figure 2: Video streaming example viewed in Safari. Notice that the basic video streaming on the left is not functional.

Basic video streaming

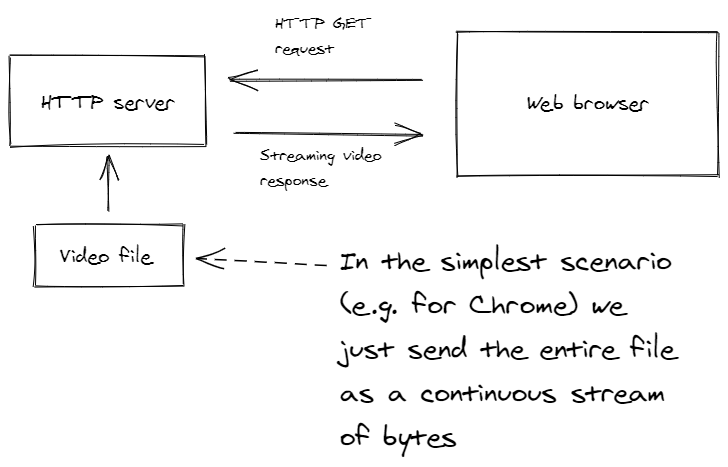

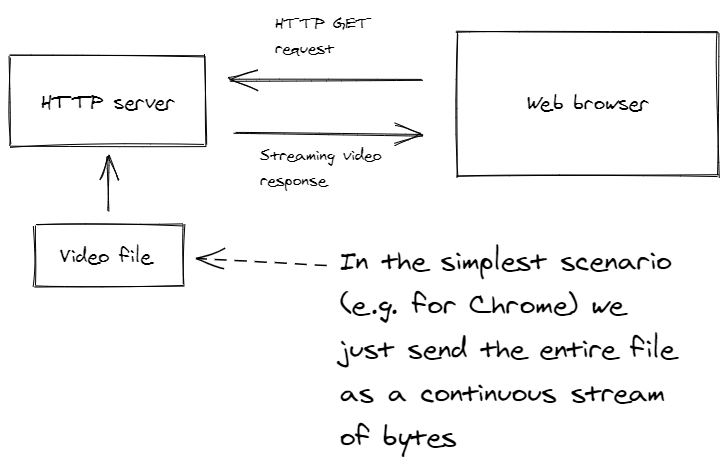

The basic form of video streaming that works in Chrome is trivial to implement in your HTTP server. We are simply streaming the entire video file from the backend to the frontend, as illustrated in Figure 3.

Figure 3: Simple video streaming that works in Chrome.

In the frontend

To render a video in the frontend, we use the HTML5 video element. There’s not much to it; Listing 1 shows how it works. This is the version that works only in Chrome. You can see that the src of the video is handled in the backend by the /works-in-chrome route.

Listing 1: A simple webpage to render streaming video that works in Chrome

In the backend

The backend for this example is a very simple HTTP server built on the Express framework running on Node.js. You can see the code in Listing 2. This is where the /works-in-chrome route is implemented.

In response to the HTTP GET request, we stream the whole file to the browser. Along the way, we set various HTTP response headers.

The content-type header is set to video/mp4 so the browser knows it’s receiving a video.

Then we stat the file to get its length and set that as the content-length header so the browser knows how much data it’s receiving.

Listing 2: Node.js Express web server with simple video streaming that works for Chrome
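
A minimal sketch of what such a handler might look like, based only on the description above. The function name `streamWholeFile` and the `videos/sample.mp4` path in the usage comment are assumptions for illustration, not names taken from the example repository.

```javascript
const fs = require("node:fs");

// Hypothetical handler body: streams the entire file with a 200 response.
// `res` can be an Express response or a plain http.ServerResponse.
function streamWholeFile(filePath, res) {
  fs.stat(filePath, (err, stat) => {
    if (err) {
      // The file is missing or unreadable.
      res.writeHead(404);
      res.end();
      return;
    }
    res.writeHead(200, {
      "content-type": "video/mp4", // tells the browser it is receiving a video
      "content-length": stat.size, // tells the browser how much data to expect
    });
    // Send every byte of the file, from start to finish.
    fs.createReadStream(filePath).pipe(res);
  });
}

// Usage with Express (route from the post, file path assumed):
// app.get("/works-in-chrome", (req, res) => streamWholeFile("videos/sample.mp4", res));
```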

But it doesn’t work in Safari

Unfortunately, we can’t just send the entire video file to Safari and expect it to work. Chrome can deal with it, but Safari refuses to play the game.

What’s missing?

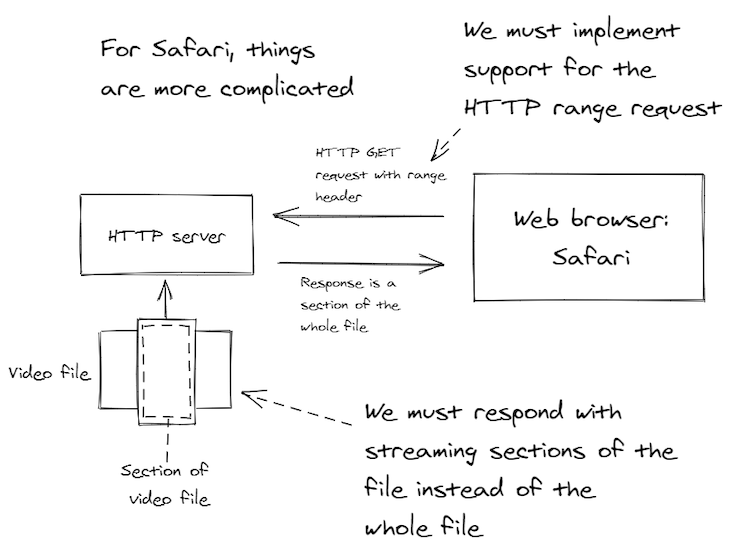

Safari doesn’t want the entire file delivered in one go. That’s why the brute-force tactic of streaming the whole file doesn’t work.

Safari would like to stream portions of the file so that it can be incrementally buffered in a piecemeal fashion. It also wants random, ad hoc access to any portion of the file that it requires.

This actually makes sense. Imagine that a user wants to rewind the video a bit — you wouldn’t want to start the whole file streaming again, would you?

Instead, Safari wants to just go back a bit and request that portion of the file again. In fact, this works in Chrome as well. Even though the basic streaming video works in Chrome, Chrome can indeed issue HTTP range requests for more efficient handling of streaming videos.

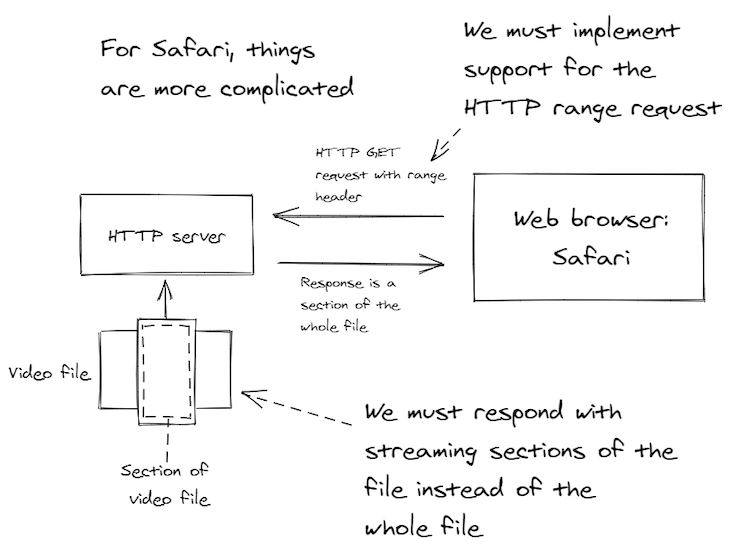

Figure 4 gives you an idea of how this works. We need to modify our HTTP server so that rather than streaming the entire video file to the frontend, we can instead serve random access portions of the file depending on what the browser is requesting.

Figure 4: For video streaming to work in Safari, we must support HTTP range requests that can retrieve a portion of the video file instead of the whole file.

Supporting HTTP range requests

Specifically, we have to support HTTP range requests. But how do we implement it?

There’s surprisingly little readable documentation for it. Of course, we could read the HTTP specifications, but who has the time and motivation for that? (I’ll give you links to resources at the end of this post.)

Instead, allow me to guide you through an overview of my implementation. The key to it is the HTTP request range header, whose value starts with the prefix "bytes=".

This header is how the frontend asks for a particular range of bytes to be retrieved from the video file. You can see in Listing 3 how we can parse the value for this header to obtain starting and ending values for the range of bytes.

Listing 3: Parsing the HTTP range header
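
As a rough sketch of the parsing step, assuming we only care about a single range of the form `bytes=start-end` or `bytes=start-`. The helper name `parseRangeHeader` is hypothetical. Anything else (a missing header, a suffix range such as `bytes=-500`, or multiple ranges) is treated here as "no range", which falls back to serving the full file.

```javascript
// Hypothetical helper: extracts numeric start/end byte offsets from a
// Range header value such as "bytes=0-499". Only single ranges are handled.
function parseRangeHeader(rangeHeader) {
  const match = /^bytes=(\d+)-(\d*)$/.exec(rangeHeader || "");
  if (!match) {
    return {}; // no usable Range header: treat as a full-file request
  }
  const range = { start: Number(match[1]) };
  if (match[2] !== "") {
    range.end = Number(match[2]); // the end offset is inclusive
  }
  if (range.end !== undefined && range.end < range.start) {
    return {}; // an end before the start is invalid, so ignore the header
  }
  return range;
}

// Usage inside a request handler:
// const { start, end } = parseRangeHeader(req.headers.range);
```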

Responding to the HTTP HEAD request

An HTTP HEAD request is how the frontend probes the backend for information on a particular resource. We should take some care with how we handle this.

The Express framework also sends HEAD requests to our HTTP GET handler, so we can check the req.method and return early from the request handler before we do more work than is necessary for the HEAD request.

Listing 4 shows how we respond to the HEAD request. We don’t have to return any data from the file, but we do have to configure the response headers to tell the frontend that we are supporting the HTTP range request and to let it know the full size of the video file.

The accept-ranges response header used here indicates that this request handler can respond to an HTTP range request.

Listing 4: Responding to the HTTP HEAD request
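
A sketch of the early return for HEAD requests might look like the following. The helper name `respondToHead` and its return-value convention are assumptions made for illustration; `contentLength` is the full size of the video file obtained from a prior stat call.

```javascript
// Hypothetical helper: answers a HEAD request with headers only.
// Returns true when the request was a HEAD request and has been handled.
function respondToHead(req, res, contentLength) {
  if (req.method !== "HEAD") {
    return false; // let the caller carry on with the GET logic
  }
  res.writeHead(200, {
    "accept-ranges": "bytes", // advertise support for range requests
    "content-type": "video/mp4",
    "content-length": contentLength, // full size of the video file
  });
  res.end(); // a HEAD response carries no body
  return true;
}

// Usage inside the GET route handler:
// if (respondToHead(req, res, contentLength)) return;
```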

Full file vs. partial file

Now for the tricky part. Are we sending the full file or are we sending a portion of the file?

With some care, we can make our request handler support both methods. You can see in Listing 5 how we compute retrievedLength from the start and end variables when it is a range request and those variables are defined; otherwise, we just use contentLength (the complete file’s size) when it’s not a range request.

Listing 5: Determining the content length based on the portion of the file requested
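
The calculation can be sketched as below. The function name is hypothetical, and the middle branch (a start without an end, as in `bytes=1000-`) is an assumption about how an open-ended range should be sized. Note that the end offset in a byte range is inclusive, hence the `+ 1`.

```javascript
// Hypothetical helper: how many bytes will this response carry?
function computeRetrievedLength(start, end, contentLength) {
  if (start !== undefined && end !== undefined) {
    return end + 1 - start; // both offsets given; the end is inclusive
  }
  if (start !== undefined) {
    return contentLength - start; // open-ended range: from start to end of file
  }
  return contentLength; // not a range request: the whole file
}
```

For example, a request for `bytes=0-499` yields 500 bytes, not 499.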

Send status code and response headers

We’ve dealt with the HEAD request. All that’s left to handle is the HTTP GET request.

Listing 6 shows how we send an appropriate success status code and response headers.

The status code varies depending on whether this is a request for the full file or a range request for a portion of the file. If it’s a range request, the status code will be 206 (for partial content); otherwise, we use the regular old success status code of 200.

Listing 6: Sending response headers
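
One way to sketch the choice between 200 and 206 is a small function that returns the status code and headers together. The name `buildResponseHead` and the shape of its return value are assumptions. The `content-range` header isn't discussed in the text above, but the HTTP specification requires it on a 206 response to a single-range request, so it is included here.

```javascript
// Hypothetical helper: picks the status code and headers for a GET response.
// `range` is an object like { start, end }, or {} for a full-file request.
function buildResponseHead(range, contentLength) {
  const headers = {
    "accept-ranges": "bytes",
    "content-type": "video/mp4",
  };
  if (range.start === undefined) {
    // Regular request: the whole file with a plain 200.
    headers["content-length"] = contentLength;
    return { statusCode: 200, headers };
  }
  // Range request: default an open-ended range to the last byte of the file.
  const end = range.end !== undefined ? range.end : contentLength - 1;
  headers["content-length"] = end + 1 - range.start;
  headers["content-range"] = `bytes ${range.start}-${end}/${contentLength}`;
  return { statusCode: 206, headers };
}

// Usage:
// const { statusCode, headers } = buildResponseHead({ start: 0, end: 499 }, 10240);
// res.writeHead(statusCode, headers);
```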

Streaming a portion of the file

Now the easiest part: streaming a portion of the file. The code in Listing 7 is almost identical to the code in the basic video streaming example way back in Listing 2.

The difference now is that we are passing in the options object. Conveniently, the [createReadStream](https://nodejs.org/api/fs.html#fs_fs_createreadstream_path_options) function from Node.js’ file system module takes start and end values in the options object, which enable reading a portion of the file from the hard drive.

In the case of an HTTP range request, the earlier code in Listing 3 will have parsed the start and end values from the header, and we inserted them into the options object.

In the case of a normal HTTP GET request (not a range request), the start and end won’t have been parsed and won’t be in the options object. In that case, we are simply reading the entire file.

Listing 7: Streaming a portion of the file
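
A minimal sketch of this step, assuming the range has already been parsed into an object with optional `start` and `end` properties. The helper name `streamFile` is hypothetical. Both `start` and `end` are inclusive in `fs.createReadStream`, which matches the inclusive offsets of an HTTP byte range.

```javascript
const fs = require("node:fs");

// Hypothetical helper: pipes either a slice of the file or the whole file.
function streamFile(filePath, range, res) {
  const options = {};
  if (range.start !== undefined) options.start = range.start;
  if (range.end !== undefined) options.end = range.end; // inclusive
  // With empty options, createReadStream reads the entire file.
  const fileStream = fs.createReadStream(filePath, options);
  // Tear down the response if the file cannot be read.
  fileStream.on("error", (err) => res.destroy(err));
  fileStream.pipe(res);
  return fileStream;
}

// Usage:
// streamFile("videos/sample.mp4", { start: 0, end: 499 }, res); // first 500 bytes
// streamFile("videos/sample.mp4", {}, res);                     // whole file
```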

Putting it all together

Now let’s put all the code together into a complete request handler for streaming video that works in both Chrome and Safari.

Listing 8 is the combined code from Listing 3 through to Listing 7, so you can see it all in context. This request handler can work either way. It can retrieve a portion of the video file if requested to do so by the browser. Otherwise, it retrieves the entire file.

Listing 8: Full HTTP request handler
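
Pulling the steps together, a complete handler could be sketched roughly as follows. This is an illustration under stated assumptions rather than the code from the example repository: the names `parseRange` and `handleVideoRequest`, the `VIDEO_PATH` constant, and the 404 and 416 branches are all additions for the sake of a self-contained example.

```javascript
const fs = require("node:fs");

const VIDEO_PATH = "videos/sample.mp4"; // assumed location of the video file

// Parses "bytes=start-end"; returns {} when there is no usable single range.
function parseRange(header) {
  const match = /^bytes=(\d+)-(\d*)$/.exec(header || "");
  if (!match) return {};
  const range = { start: Number(match[1]) };
  if (match[2] !== "") range.end = Number(match[2]);
  if (range.end !== undefined && range.end < range.start) return {};
  return range;
}

// Works as a handler for HEAD and GET, with or without a Range header.
function handleVideoRequest(req, res, filePath = VIDEO_PATH) {
  fs.stat(filePath, (err, stat) => {
    if (err) {
      res.writeHead(404);
      res.end();
      return;
    }
    const contentLength = stat.size;
    const baseHeaders = { "accept-ranges": "bytes", "content-type": "video/mp4" };

    if (req.method === "HEAD") {
      // Headers only: advertise range support and the full size.
      res.writeHead(200, { ...baseHeaders, "content-length": contentLength });
      res.end();
      return;
    }

    const { start, end: requestedEnd } = parseRange(req.headers.range);
    if (start === undefined) {
      // Not a range request: send the whole file.
      res.writeHead(200, { ...baseHeaders, "content-length": contentLength });
      fs.createReadStream(filePath).pipe(res);
      return;
    }
    if (start >= contentLength) {
      // The requested range starts past the end of the file.
      res.writeHead(416, { "content-range": `bytes */${contentLength}` });
      res.end();
      return;
    }
    // Clamp the (inclusive) end offset to the last byte of the file.
    const end = Math.min(requestedEnd ?? contentLength - 1, contentLength - 1);
    res.writeHead(206, {
      ...baseHeaders,
      "content-length": end + 1 - start,
      "content-range": `bytes ${start}-${end}/${contentLength}`,
    });
    fs.createReadStream(filePath, { start, end }).pipe(res);
  });
}

// Usage with Express (wrapped so Express's `next` isn't passed as filePath):
// app.get("/works-in-chrome-and-safari", (req, res) => handleVideoRequest(req, res));
```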

Updated frontend code

Nothing needs to change in the frontend code besides making sure the video element is pointing to an HTTP route that can handle HTTP range requests.

Listing 9 shows that we have simply pointed the video element at a route called /works-in-chrome-and-safari. This frontend will work in both Chrome and Safari.

Listing 9: Updated frontend code

Even though video streaming is simple to get working for Chrome, it’s quite a bit more difficult to figure out for Safari — at least if you are trying to figure it out by yourself from the HTTP specification.

Lucky for you, I’ve already trodden that path, and this blog post has laid the groundwork that you can build on for your own streaming video implementation.

- Example code for this blog post

- A Stack Overflow post that helped me understand what I was missing

- HTTP specification

- Range requests

- 206 Partial Content success status

10 Replies to "Streaming video in Safari: Why is it so difficult?"

Hi, thank you for this blog, it is very helpful. It works perfectly with Safari (Mac), but not in Mobile Safari on ios (downloading the full video before starting to play…). Do you know why?

Thanks for your feedback!

That’s strange… the production version I’m using works although I never tested the code for the blog post. It might have been broken when I simplified it.

Could you please log an issue against the code in GitHub, that will be an easier way to work through the problem, thanks.

Ashley Davis

I’ve encountered this problem with a web page I’m writing that shows videos. I’m doing the range queries correctly, but they still malfunction because the user shows first one video, then another. Your code assumes there’s only one video path, so it won’t work in this case. Range requests don’t include the file path. So how does the server code know which file to retrieve the bytes of data from? I tried using a session variable, but this fails because the session variable can change asynchronously with respect to what the video player is actually doing. The user can reposition and switch between a number of different videos! On Mac/Safari, this abnormally halts the video player.

Range requests can have a route, they can have query parameters or they can have a body… so that’s at least three ways to statelessly include the file path or id of a video to play. Use whichever one best satisfies your needs.

The problem doesn’t happen because of accept range requests (using the Accept-Ranges header), or for requests to open and play a video file. You are correct, all information from the server is available there.

The problem happens for range requests from the client, which are made by the client application, such as a video player. These requests ARE ONLY MARKED by the HTTP_RANGE header. There is no other information that the server can use to locate the file whose bytes are to be returned to the client. This is one of the rare cases where a client calls a server to obtain information. Usually, it’s the other way around.

This appears to be a very poor design, since multiple overlapping video displaying can be done by one server on behalf of one user. The bytes returned by the server may go to the wrong client player, which causes a crash of the player.

You must be misunderstanding something. If what you were saying were true, then YouTube wouldn’t work.

You might be able to learn more here: https://developer.mozilla.org/en-US/docs/Web/HTTP/Range_requests

YouTube works fine because it serves one video at a time. So its byte requests don’t need to specify which file is the context. When a client of mine tried to access a second video right after a first, the log showed that the byte requests were interleaved, probably due to lack of sync in setting the path in the Session variables. Anyway, when I look at the $_SERVER information for byte requests from the video player, the video path is NOT there, just the range of bytes.

Ok maybe I’m misunderstanding something.

But I just opened two browser windows and started two YouTube videos simultaneously. It was serving two videos simultaneously and seemed to have no problem. In fact I imagine that YouTube is serving millions of videos at once because that’s how many people are probably watching YouTube at the same time.

If you want I can look at your code and see if I can spot a problem with it, just email me on [email protected]

It is possible that my explanation is incorrect. The fact is that my code works for Firefox, Chrome, and Safari on a mobile device. It only fails for Safari on MacOS. It is a known bug with apparently no solution published as yet.

Do you have links to information on the bug? I might be able to help if I learn more about it.

streaming video on safari: working around range request requirements

When it comes to playing video on the web, we now have a <video> tag. This is really handy; however, browser implementations vary, and Safari in particular expects the data to be streamed. Specifically, in order to use the <video> tag with Safari, the server needs to answer Safari’s range requests with a 206 response (indicating which bytes are being sent); Safari will reject a 200.

Our server was not configured to differentiate between requests and responded with a 200. From its perspective, everything was OK!

The suggested way to resolve this is to reconfigure your server to support partial / range requests. LogRocket has a great blog post on the topic.

In our case, modifying the server to support playing video was more work than it was worth. (We landed on using the <video> tag in the first place because iPads don’t mute playing video like they do playing audio when the iPad is on “silent” mode. Discovering that “feature” is a story for another day.) So, we had to get creative.

The solution was to create a new URL to serve as the source for the video tag instead of pointing to the file directly. So, we fetch the video initially as a file, then construct a URL. From the browser’s perspective, at this point, the video is just data and the browser won’t care what it is. The downloaded data is held in memory, and the URL we create refers to that in-memory copy, so we can use it as the media source.

Here’s a Code Sandbox demonstrating the concept:
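
As a rough sketch of the idea, assuming the standard `fetch`, `Blob`, and `URL.createObjectURL` browser APIs. The helper name `createVideoObjectUrl`, the selector, and the `/media/clip.mp4` path are placeholders, not names from the original sandbox.

```javascript
// Hypothetical helper: downloads the whole video, then exposes it through an
// object URL that can be assigned to a <video> element's src.
async function createVideoObjectUrl(videoUrl) {
  const response = await fetch(videoUrl);
  if (!response.ok) {
    throw new Error(`Failed to fetch video: ${response.status}`);
  }
  // The blob holds the complete file in memory.
  const blob = await response.blob();
  // The object URL is a short-lived reference to that in-memory blob.
  return URL.createObjectURL(blob);
}

// Usage in the browser (selector and path are placeholders):
// const video = document.querySelector("video");
// video.src = await createVideoObjectUrl("/media/clip.mp4");
// When the video is no longer needed, release the memory:
// URL.revokeObjectURL(video.src);
```

The trade-off is that playback can't start until the entire file has been downloaded, so this is best suited to short clips.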

Now, it’s worth pointing out that there are advantages to actually following Safari’s recommended approach: specifically, you download fewer bits that might be unused at a time (though it does make buffering ahead harder). So, range requests are more efficient (generally), but if your server’s not set up to handle them and you need a workaround, try this approach of downloading the data completely and transforming the blob on your own.

Hi there and thanks for reading! My name's Stephen. I live in Chicago with my wife, Kate, and dog, Finn. Want more? See about and get in touch!

Http Range Request and MP4 Video Play in Browser

Basic concept

Splitting a large video file into parts and transferring it over the network part by part is vital for a browser displaying video to the user. The network may lose packets, and the user may want to jump to any point in the video. Transferring in parts avoids retransmitting the whole file and improves the user experience.

In HTTP/1.1, range requests can be used to achieve this. More background and details are available in HTTP RFC7233 and Wiki Byte_serving.

Briefly, the HTTP client sends a request with a Range header that declares the byte ranges it needs from the resource. The HTTP server responds with status code 206 (Partial Content), a Content-Range header describing which range of the selected representation is enclosed, and a payload consisting of that range.

If the requested range is invalid or doesn’t overlap the selected resource, the server responds with status code 416 (Range Not Satisfiable).

Examples of valid byte ranges (assuming a resource of length 10240):

- bytes=0-499 , the first 500 bytes

- bytes=1000-1999 , 1000 bytes starting from offset 1000

- bytes=-500 , the final 500 bytes (byte offsets 9740-10239, inclusive)

- bytes=0-0,-1 , the first and last bytes only

- bytes=0- and bytes=0-10250 , both interpreted as bytes=0-10239

As RFC7233 points out:

If the last-byte-pos value is absent, or if the value is greater than or equal to the current length of the representation data, the byte range is interpreted as the remainder of the representation
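
The interpretation rules above can be sketched as a small function that resolves a single byte-range-spec against the resource length. The name `resolveByteRange` is hypothetical, and the sketch handles one range at a time (a multi-range header such as `bytes=0-0,-1` would need to be split on commas first).

```javascript
// Hypothetical helper: resolves one byte-range-spec ("0-499", "-500", "0-")
// against a resource length. Returns null when the range is invalid or
// cannot be satisfied (the 416 case).
function resolveByteRange(spec, length) {
  const match = /^(\d*)-(\d*)$/.exec(spec);
  if (!match || length === 0) return null;
  const [, first, last] = match;
  if (first === "" && last === "") return null;

  if (first === "") {
    // Suffix range: the final N bytes of the resource.
    const suffixLength = Number(last);
    if (suffixLength === 0) return null;
    return { start: Math.max(length - suffixLength, 0), end: length - 1 };
  }

  const start = Number(first);
  if (start >= length) return null; // starts beyond the end of the resource
  // An absent or too-large last-byte-pos means "the remainder".
  const end = last === "" ? length - 1 : Math.min(Number(last), length - 1);
  if (end < start) return null; // last-byte-pos before first-byte-pos is invalid
  return { start, end };
}

// Usage:
// resolveByteRange("-500", 10240); // the final 500 bytes
// resolveByteRange("0-10250", 10240); // clamped to the end of the resource
```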

How Range Requests happen in Browsers

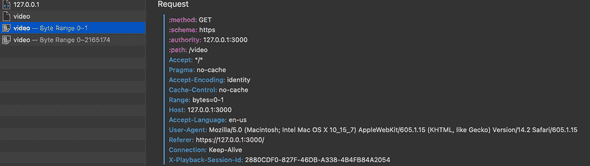

How does the browser/client know whether or not to send a range request? The answer is the HTTP response header Accept-Ranges: bytes .

Below is an example from Chrome requesting and playing a video (791MB) from a Golang HTTP file server. It starts with a normal HTTP request. On reading the response headers from the stream, Chrome finds that the server supports ranges, so it aborts the connection and starts sending range requests.

Firefox’s behavior is quite similar to Chrome’s, except that it sends only one trivial range request.

Safari first sends a trivial range request with bytes=0-1 , followed by two other trivial range requests like Chrome’s.

Both Chrome and Firefox send range requests using a byte range (e.g. bytes=1867776- ) with the last-byte-pos value absent. Safari, however, always sets the last-byte-pos.

While Chrome and Firefox try to fetch the remaining bytes in one range request (using a range without last-byte-pos, e.g. bytes=720896- ), Safari sends many range requests. It first requests some small parts from the server and waits for them to complete. Next, Safari asks for all the remaining bytes, from the bytes it already holds to the end of the file. But once every 4-5MB has been transmitted from the server, Safari closes the connection and starts a new one.

So what happens when the user drags the progress bar to an arbitrary point in time? The browser simply maps the desired point in the video to an offset from the start, then aborts the in-flight range request and starts a new one like bytes=offset-total .

Can HTTP Server return 206 to Browser for the First Request?

It can, but there is little point in doing so.

In the sample code, the server returns 206 (Partial Content) directly for the browser’s first request (the request without a Range header).

Browsers including Chrome, Firefox, and Safari handle this condition well. You can run the sample server with Docker and visit localhost:9100 to see the browsers’ behavior.

Full sample code is here .

Can Server Side Control the Size of Each Range Request?

Requests like [ how-to-make-browser-request-smaller-range-with-206-partial-content ] do come up on the server side.

Chrome and Firefox ask for ranges like bytes=300- . Can the server return a smaller part, rather than everything from offset 300 to the end of the file? The answer is yes.

The sample code handles the case where last-byte-pos is absent by setting the end position 5,000,000 bytes from the start position.
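
The original sample server is written in Go. As a rough JavaScript sketch of the same capping idea, with the hypothetical names `MAX_CHUNK_BYTES` and `chooseResponseRange`:

```javascript
const MAX_CHUNK_BYTES = 5_000_000; // chunk size taken from the text above

// Hypothetical helper: decides which bytes to send for a parsed range.
// `end` is undefined when the request left out the last-byte-pos.
function chooseResponseRange(start, end, fileSize) {
  const lastByte = fileSize - 1;
  const wantedEnd = end === undefined
    ? start + MAX_CHUNK_BYTES - 1 // open-ended request: cap the chunk size
    : end; // explicit end (as Safari sends): honor it
  const finalEnd = Math.min(wantedEnd, lastByte);
  return {
    start,
    end: finalEnd,
    contentRange: `bytes ${start}-${finalEnd}/${fileSize}`,
  };
}

// Usage: for "bytes=300-" on a 43730385-byte file, the server would send
// bytes 300 through 5000299, so the next request starts at 5000300.
// chooseResponseRange(300, undefined, 43730385);
```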

This works well with Chrome and Firefox. The browser sends a series of range requests, like bytes=5_000_300- , bytes=10_000_300- , bytes=15_000_300- , until the end of the file is reached.

The full sample code is here . You can run the server in Docker and visit localhost:9100 in Chrome or Firefox to verify the result.

Because Safari always sets the last-byte-pos, if the server responds with a last-byte-pos other than the requested one, the browser refuses to play the video.

How Can Server Side Handle Video Stream of Dynamic Size?

The sample server go-http-range-dynamic returns 5,000,000 bytes for each range request from Chrome or Firefox (for Safari, it depends). When the start of the requested range is close to the end of the file, the server replaces the file with a larger one.

The last two range requests look like this: the server tries to update the resource size from 43730385 to 95644582. All browsers behave the same way: they refuse to fetch more of the stream and finish playing the video.

This sample server can be run in Docker directly.

What if the server returns a sufficiently large size (e.g. 100GB) as the Content-Length header value the first time the browser requests the resource? Would the browser keep sending range requests until 100GB is reached? The answer is no. The browser seems to have some way of detecting the actual size of the first video (during head/tail verification); when its end is reached, the browser refuses to fetch more of the stream and stops playing the video.

Try the sample server with the option -dynamic true to verify the browser’s behavior.

Bypassing the browser’s verification requires knowledge of video encoding technology and is tricky. The best solution for serving a dynamic stream is to write a custom player; popular video platforms like YouTube and Twitch all use their own video players.

HTTP range requests are a widely used feature when it comes to file resources. Storage systems such as S3 support them well. Learning the internals of range requests helps you build HTTP systems with higher aggregate throughput, on both the server side and the client side.

Browsers are quite smart about range requests. Tricks sometimes work and sometimes don’t. Following RFC7233 is the best practice. HTTP range requests are not a good solution for serving dynamic content. If you are building a site for livestreaming, it’s better to come up with a custom player.

Further Reading

- MDN range request

- Wiki Byte_serving

Author Zeng Xu

LastMod 2023-03-08 10:51

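License: This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please include a link to the original article when reposting.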

Streaming video in Safari: Why is it so difficult?

The problem.

I recently implemented support for AI tagging of videos in my product Sortal . A part of the feature is that you can then play back the videos you uploaded. I thought, no problem — video streaming seems pretty simple.

In fact, it is so simple (just a few lines of code) that I chose video streaming as the theme for examples in my book Bootstrapping Microservices .

But when we came to testing in Safari, I learned the ugly truth. So let me rephrase the previous assertion: video streaming is simple for Chrome, but not so much for Safari.

Why is it so difficult for Safari? What does it take to make it work for Safari? The answers to these questions are revealed in this blog post.

Try it for yourself

Before we start looking at the code together, please try it for yourself! The code that accompanies this blog post is available on GitHub . You can download the code or use Git to clone the repository. You’ll need Node.js installed to try it out.

Start the server as instructed in the readme and navigate your browser to http://localhost:3000 . You’ll see either Figure 1 or Figure 2, depending on whether you are viewing the page in Chrome or Safari.

Notice that in Figure 2, when the webpage is viewed in Safari, the video on the left side doesn’t work. However, the example on the right does work, and this post explains how I achieved a working version of the video streaming code for Safari.

Figure 1: Video streaming example viewed in Chrome.

Figure 2: Video streaming example viewed in Safari. Notice that the basic video streaming on the left is not functional.

Basic video streaming.

The basic form of video streaming that works in Chrome is trivial to implement in your HTTP server. We are simply streaming the entire video file from the backend to the frontend, as illustrated in Figure 3.

Figure 3: Simple video streaming that works in Chrome.

In the frontend.

To render a video in the frontend, we use the HTML5 video element. There’s not much to it; Listing 1 shows how it works. This is the version that works only in Chrome. You can see that the src of the video is handled in the backend by the /works-in-chrome route.

Listing 1: A simple webpage to render streaming video that works in Chrome

In the backend.

The backend for this example is a very simple HTTP server built on the Express framework running on Node.js. You can see the code in Listing 2. This is where the /works-in-chrome route is implemented.

In response to the HTTP GET request, we stream the whole file to the browser. Along the way, we set various HTTP response headers.

The content-type header is set to video/mp4 so the browser knows it’s receiving a video.

Then we stat the file to get its length and set that as the content-length header so the browser knows how much data it’s receiving.

Listing 2: Node.js Express web server with simple video streaming that works for Chrome

But it doesn’t work in safari.

Unfortunately, we can’t just send the entire video file to Safari and expect it to work. Chrome can deal with it, but Safari refuses to play the game.

What’s missing?

Safari doesn’t want the entire file delivered in one go. That’s why the brute-force tactic of streaming the whole file doesn’t work.

Safari would like to stream portions of the file so that it can be incrementally buffered in a piecemeal fashion. It also wants random, ad hoc access to any portion of the file that it requires.

This actually makes sense. Imagine that a user wants to rewind the video a bit — you wouldn’t want to start the whole file streaming again, would you?

Instead, Safari wants to just go back a bit and request that portion of the file again. In fact, this works in Chrome as well. Even though the basic streaming video works in Chrome, Chrome can indeed issue HTTP range requests for more efficient handling of streaming videos.

Figure 4 gives you an idea of how this works. We need to modify our HTTP server so that rather than streaming the entire video file to the frontend, we can instead serve random access portions of the file depending on what the browser is requesting.

Figure 4: For video streaming to work in Safari, we must support HTTP range requests that can retrieve a portion of the video file instead of the whole file.

Supporting http range requests.

Specifically, we have to support HTTP range requests. But how do we implement it?

There’s surprisingly little readable documentation for it. Of course, we could read the HTTP specifications, but who has the time and motivation for that? (I’ll give you links to resources at the end of this post.)

Instead, allow me to guide you through an overview of my implementation. The key to it is the HTTP request range header that starts with the prefix "bytes=" .

This header is how the frontend asks for a particular range of bytes to be retrieved from the video file. You can see in Listing 3 how we can parse the value for this header to obtain starting and ending values for the range of bytes.

####Listing 3: Parsing the HTTP range header

Responding to the HTTP HEAD request

An HTTP HEAD request is how the frontend probes the backend for information on a particular resource. We should take some care with how we handle this.

The Express framework also sends HEAD requests to our HTTP GET handler, so we can check the req.method and return early from the request handler before we do more work than is necessary for the HEAD request.

Listing 4 shows how we respond to the HEAD request. We don’t have to return any data from the file, but we do have to configure the response headers to tell the frontend that we are supporting the HTTP range request and to let it know the full size of the video file.

The accept-ranges response header used here indicates that this request handler can respond to an HTTP range request.

Listing 4: Responding to the HTTP HEAD request

Full file vs. partial file.

Now for the tricky part. Are we sending the full file or are we sending a portion of the file?

With some care, we can make our request handler support both methods. You can see in Listing 5 how we compute retrievedLength from the start and end variables when it is a range request and those variables are defined; otherwise, we just use contentLength (the complete file’s size) when it’s not a range request.

Listing 5: Determining the content length based on the portion of the file requested

Send status code and response headers.

We’ve dealt with the HEAD request. All that’s left to handle is the HTTP GET request.

Listing 6 shows how we send an appropriate success status code and response headers.

The status code varies depending on whether this is a request for the full file or a range request for a portion of the file. If it’s a range request, the status code will be 206 (for partial content); otherwise, we use the regular old success status code of 200.

Listing 6: Sending response headers

Streaming a portion of the file.

Now the easiest part: streaming a portion of the file. The code in Listing 7 is almost identical to the code in the basic video streaming example way back in Listing 2.

The difference now is that we are passing in the options object. Conveniently, the [createReadStream](https://nodejs.org/api/fs.html#fs_fs_createreadstream_path_options) function from Node.js’ file system module takes start and end values in the options object, which enable reading a portion of the file from the hard drive.

In the case of an HTTP range request, the earlier code in Listing 3 will have parsed the start and end values from the header, and we inserted them into the options object.

In the case of a normal HTTP GET request (not a range request), the start and end won’t have been parsed and won’t be in the options object, in that case, we are simply reading the entire file.

Listing 7: Streaming a portion of the file

Putting it all together.

Now let’s put all the code together into a complete request handler for streaming video that works in both Chrome and Safari.

Listing 8 is the combined code from Listing 3 through to Listing 7, so you can see it all in context. This request handler can work either way. It can retrieve a portion of the video file if requested to do so by the browser. Otherwise, it retrieves the entire file.

Listing 8: Full HTTP request handler

Updated frontend code.

Nothing needs to change in the frontend code besides making sure the video element is pointing to an HTTP route that can handle HTTP range requests.

Listing 9 shows that we have simply rerouted the video element to a route called /works-in-chrome-and-safari. This frontend will work both in Chrome and in Safari.

Listing 9: Updated frontend code

Even though video streaming is simple to get working for Chrome, it’s quite a bit more difficult to figure out for Safari — at least if you are trying to figure it out by yourself from the HTTP specification.

Lucky for you, I’ve already trodden that path, and this blog post has laid the groundwork that you can build on for your own streaming video implementation.

- Example code for this blog post

- A Stack Overflow post that helped me understand what I was missing

- HTTP specification

- Range requests

- 206 partial content success status

Safari Web Content Guide


Creating Video

Safari supports audio and video viewing in a webpage on the desktop and iOS. You can use audio and video HTML elements or use the embed element to use the native application for video playback. In either case, you need to ensure that the video you create is optimized for the platform and different bandwidths.

iOS streams movies and audio using HTTP over EDGE, 3G, and Wi-Fi networks. iOS uses a native application to play back video even when video is embedded in your webpages. Video automatically expands to the size of the screen and rotates when the user changes orientation. The controls automatically hide when they are not in use and appear when the user taps the screen. This is the experience the user expects when viewing all video on iOS.

Safari on iOS supports a variety of rich media, including QuickTime movies, as described in Use Supported iOS Rich Media MIME Types. Safari on iOS does not support Flash, so don’t bring up JavaScript alerts that ask users to download Flash. Also, don’t use JavaScript movie controls to play back video since iOS supplies its own controls.

Safari on the desktop supports the same audio and video formats as Safari on iOS. However, if you use the audio and video HTML elements on the desktop, you can customize the playback controls. See Safari DOM Additions Reference for more details on the HTMLMediaElement class.

Follow these guidelines to deliver the best web audio and video experience in Safari on any platform:

- Follow current best practices for embedding movies in webpages as described in Sizing Movies Appropriately, Don’t Let the Bit Rate Stall Your Movie, and Using Supported Movie Standards.

- Use QuickTime Pro to encode H.264/AAC at appropriate sizes and bit rates for EDGE, 3G, and Wi-Fi networks, as described in Encoding Video for Wi-Fi, 3G, and EDGE.

- Use reference movies so that iOS automatically streams the best version of your content for the current network connection, as described in Creating a Reference Movie.

- Use poster JPEGs (not poster frames in a movie) to display a preview of your embedded movie in webpages, as described in Creating a Poster Image for Movies.

- Make sure the HTTP servers hosting your media files support byte-range requests, as described in Configuring Your Server.

- If your site has a custom media player, also provide direct links to the media files. iOS users can follow these links to play those files directly.

Sizing Movies Appropriately

In landscape orientation on iOS, the screen is 480 x 320 pixels. Users can easily switch the view mode between scaled-to-fit (letterboxed) and full-screen (centered and cropped). You should use a size that preserves the aspect ratio of your content and fits within a 480 x 360 rectangle. 480 x 360 is a good choice for 4:3 aspect ratio content and 480 x 270 is a good choice for widescreen content as it keeps the video sharp in full-screen view mode. You can also use 640 x 360 or anamorphic 640 x 480 with pixel aspect ratio tagging for widescreen content.
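The sizing rule above amounts to scaling the source down until it fits the bounding rectangle while keeping its aspect ratio. A minimal sketch, assuming a hypothetical helper named `fitWithin` with the 480 x 360 rectangle as its default bounds:

```javascript
// Scales (width, height) down to fit inside maxWidth x maxHeight while
// preserving the aspect ratio. Never scales up (the factor is capped at 1).
function fitWithin(width, height, maxWidth = 480, maxHeight = 360) {
    const scale = Math.min(maxWidth / width, maxHeight / height, 1);
    return {
        width: Math.round(width * scale),
        height: Math.round(height * scale),
    };
}

console.log(fitWithin(1920, 1080)); // { width: 480, height: 270 } (widescreen)
console.log(fitWithin(1024, 768));  // { width: 480, height: 360 } (4:3)
```

The two results match the 480 x 270 and 480 x 360 sizes recommended in the text.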

Don’t Let the Bit Rate Stall Your Movie

When viewing media over the network, the bit rate makes a crucial difference to the playback experience. If the network cannot keep up with the media bit rate, playback stalls. Encode your media for iOS as described in Encoding Video for Wi-Fi, 3G, and EDGE and use a reference movie as described in Creating a Reference Movie.

Using Supported Movie Standards

The following compression standards are supported:

- H.264 Baseline Profile Level 3.0 video, up to 640 x 480 at 30 fps. Note that B frames are not supported in the Baseline profile.

- MPEG-4 Part 2 video (Simple Profile)

- AAC-LC audio, up to 48 kHz

Movie files with the extensions .mov, .mp4, .m4v, and .3gp are supported.

Any movies or audio files that can play on iPod play correctly on iPhone.

If you export your movies using QuickTime Pro 7.2, as described in Encoding Video for Wi-Fi, 3G, and EDGE, then you can be sure that they are optimized to play on iOS.

Encoding Video for Wi-Fi, 3G, and EDGE

Because users may be connected to the Internet via wired or wireless technology, using either Wi-Fi, 3G, or EDGE on iOS, you need to provide alternate media for these different connection speeds. You can use QuickTime Pro, the QuickTime API, or any Apple applications that provide iOS exporters to encode your video for Wi-Fi, 3G, and EDGE. This section contains specific instructions for exporting video using QuickTime Pro.

Follow these steps to export video using QuickTime Pro 7.2.1 and later:

1. Open your movie using QuickTime Player Pro.

2. Choose File > Export for Web. A dialog appears.

3. Enter the file name prefix, location of your export, and set of versions to export as shown in Figure 10-1.

4. Click Export.

QuickTime Player Pro saves these versions of your QuickTime movie, along with a reference movie, poster image, and ReadMe.html file to the specified location. See the ReadMe.html file for instructions on embedding the generated movie in your webpage, including sample HTML.

Creating a Reference Movie

A reference movie contains a list of movie URLs, each of which has a list of tests, as shown in Figure 10-2. When opening the reference movie, a playback device or computer chooses one of the movie URLs by finding the last one that passes all its tests. Tests can check the capabilities of the device or computer and the speed of the network connection.
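The selection rule ("the last one that passes all its tests") can be illustrated with a small in-memory model. This is only a sketch: real reference movies are QuickTime files, and the `chooseMovie` function, the file names, and the bandwidth thresholds below are all invented for illustration.

```javascript
// Hypothetical model: each alternate has a URL and a list of test functions.
// The player keeps scanning the list, so the LAST fully passing entry wins.
function chooseMovie(alternates, device) {
    let chosen = null;
    for (const alternate of alternates) {
        if (alternate.tests.every((test) => test(device))) {
            chosen = alternate.url;
        }
    }
    return chosen;
}

// Ordered from least to most demanding (names and thresholds are made up).
const alternates = [
    { url: "movie-edge.3gp", tests: [] }, // no tests: always passes
    { url: "movie-3g.m4v", tests: [(d) => d.kbps >= 200] },
    { url: "movie-wifi.m4v", tests: [(d) => d.kbps >= 1000] },
];

console.log(chooseMovie(alternates, { kbps: 50 }));   // movie-edge.3gp
console.log(chooseMovie(alternates, { kbps: 300 }));  // movie-3g.m4v
console.log(chooseMovie(alternates, { kbps: 2000 })); // movie-wifi.m4v
```

Ordering the list from least to most demanding is what makes "last passing entry" pick the best version the device can handle.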

If you use QuickTime Pro 7.2.1 or later to export your movies for iOS, as described in Encoding Video for Wi-Fi, 3G, and EDGE, then you already have a reference movie. Otherwise, you can use the MakeRefMovie tool to create reference movies. For more information on creating reference movies, see Creating Reference Movies - MakeRefMovie.

Also, refer to the MakeiPhoneRefMovie sample for a command-line tool that creates reference movies.

For more details on reference movies and instructions on how to set them up, see "Applications and Examples" in HTML Scripting Guide for QuickTime.

Creating a Poster Image for Movies

The video is not decoded until the user enters movie playback mode. Consequently, when displaying a webpage with video, users may see a gray rectangle with a QuickTime logo until they tap the Play button. Therefore, use a poster JPEG as a preview of your movie. If you use QuickTime Pro 7.2.1 or later to export your movies, as described in Encoding Video for Wi-Fi, 3G, and EDGE, then a poster image is already created for you. Otherwise, follow these instructions to set a poster image.

If you are using the <video> element, specify a poster image by setting the poster attribute as follows:

If you are using an <embed> HTML element, specify a poster image by setting the image for src, the movie for href, the media MIME type for type, and myself as the target:

Make similar changes if you are using the <object> HTML element or JavaScript to embed movies in your webpage.

On the desktop, this image is displayed until the user clicks, at which time the movie is substituted.

Configuring Your Server

HTTP servers hosting media files for iOS must support byte-range requests, which iOS uses to perform random access in media playback. (Byte-range support is also known as content-range or partial-range support.) Most, but not all, HTTP 1.1 servers already support byte-range requests.

If you are not sure whether your media server supports byte-range requests, you can open the Terminal application in OS X and use the curl command-line tool to download a short segment from a file on the server:

If the tool reports that it downloaded 100 bytes, the media server correctly handled the byte-range request. If it downloads the entire file, you may need to update the media server. For more information on curl, see OS X Man Pages.
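The same check can be scripted. The sketch below uses Node.js' built-in `http` module to request the first 100 bytes of a file and report whether the server honored the range. The function names are hypothetical, only plain `http://` URLs are handled, and the file is assumed to be at least 100 bytes long.

```javascript
const http = require("http");

// A server that honors "Range: bytes=0-99" answers 206 with exactly 100 bytes.
function supportsByteRanges(statusCode, bytesReceived) {
    return statusCode === 206 && bytesReceived === 100;
}

// Requests the first 100 bytes of `url` and resolves to true or false.
function checkByteRangeSupport(url) {
    return new Promise((resolve, reject) => {
        const req = http.get(url, { headers: { Range: "bytes=0-99" } }, (res) => {
            if (res.statusCode !== 206) {
                // The server ignored the range: stop before downloading the whole file.
                res.destroy();
                resolve(false);
                return;
            }
            let bytesReceived = 0;
            res.on("data", (chunk) => { bytesReceived += chunk.length; });
            res.on("end", () => resolve(supportsByteRanges(res.statusCode, bytesReceived)));
        });
        req.on("error", reject);
    });
}

// Example usage (the URL is a placeholder):
// checkByteRangeSupport("http://example.com/movie.mp4").then(console.log);
```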

Ensure that your HTTP server sends the correct MIME types for the movie filename extensions shown in Table 10-1.

Be aware that iOS supports movies larger than 2 GB. However, some older web servers are not able to serve files this large. Apache 2 supports downloading files larger than 2 GB.

RTSP is not supported.

Copyright © 2016 Apple Inc. All Rights Reserved. Terms of Use | Privacy Policy | Updated: 2016-12-12


Safari User Guide


Play web videos in Safari on Mac

With Picture in Picture, you can play a video in a movable window that “floats” on top of everything else, so you can always see it, no matter what you’re doing. With AirPlay, you can play web videos on any HDTV with Apple TV—without showing everything else on your desktop.


Play a web video with Picture in Picture

Click and hold the Audio button in the Smart Search field or in a tab.

Choose Enter Picture in Picture.

Play a web video on your HDTV

See Use AirPlay to stream what’s on your Mac to an HDTV .


Web video codec guide

This guide introduces the video codecs you're most likely to encounter or consider using on the web, summaries of their capabilities and any compatibility and utility concerns, and advice to help you choose the right codec for your project's video.

Due to the sheer size of uncompressed video data, it's necessary to compress it significantly in order to store it, let alone transmit it over a network. Imagine the amount of data needed to store uncompressed video:

- A single frame of high definition (1920x1080) video in full color (4 bytes per pixel) is 8,294,400 bytes.

- At a typical 30 frames per second, each second of HD video would occupy 248,832,000 bytes (~249 MB).

- A minute of HD video would need 14.93 GB of storage.

- A fairly typical 30 minute video conference would need about 447.9 GB of storage, and a 2-hour movie would take almost 1.79 TB (that is, 1790 GB).
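The figures in this list follow from straightforward multiplication, which the short sketch below reproduces. The helper name `uncompressedBytes` is hypothetical, and the units are decimal (1 GB = 10^9 bytes), which is what the numbers above imply.

```javascript
// Bytes needed for uncompressed video of the given shape and duration.
function uncompressedBytes(width, height, bytesPerPixel, framesPerSecond, seconds) {
    return width * height * bytesPerPixel * framesPerSecond * seconds;
}

const GB = 1e9;
const TB = 1e12;

const perFrame = 1920 * 1080 * 4;
const perSecond = uncompressedBytes(1920, 1080, 4, 30, 1);
const perMinute = uncompressedBytes(1920, 1080, 4, 30, 60);
const halfHour = uncompressedBytes(1920, 1080, 4, 30, 30 * 60);
const twoHours = uncompressedBytes(1920, 1080, 4, 30, 2 * 60 * 60);

console.log(perFrame);                   // 8294400
console.log(perSecond);                  // 248832000
console.log((perMinute / GB).toFixed(2)); // 14.93
console.log((halfHour / GB).toFixed(1));  // 447.9
console.log((twoHours / TB).toFixed(2));  // 1.79
```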

Not only is the required storage space enormous, but the network bandwidth needed to transmit an uncompressed video like that would be enormous, at 249 MB/sec—not including audio and overhead. This is where video codecs come in. Just as audio codecs do for the sound data, video codecs compress the video data and encode it into a format that can later be decoded and played back or edited.

Most video codecs are lossy, in that the decoded video does not precisely match the source. Some details may be lost; the amount of loss depends on the codec and how it's configured, but as a general rule, the more compression you achieve, the more loss of detail and fidelity will occur. Some lossless codecs do exist, but they are typically used for archival and storage for local playback rather than for use on a network.

Common codecs

The following video codecs are those which are most commonly used on the web. For each codec, the containers (file types) that can support them are also listed. Each codec provides a link to a section below which offers additional details about the codec, including specific capabilities and compatibility issues you may need to be aware of.

Factors affecting the encoded video

As is the case with any encoder, there are two basic groups of factors affecting the size and quality of the encoded video: specifics about the source video's format and contents, and the characteristics and configuration of the codec used while encoding the video.

The simplest guideline is this: anything that makes the encoded video look more like the original, uncompressed, video will generally make the resulting data larger as well. Thus, it's always a tradeoff of size versus quality. In some situations, a greater sacrifice of quality in order to bring down the data size is worth that lost quality; other times, the loss of quality is unacceptable and it's necessary to accept a codec configuration that results in a correspondingly larger file.

Effect of source video format on encoded output

The degree to which the format of the source video will affect the output varies depending on the codec and how it works. If the codec converts the media into an internal pixel format, or otherwise represents the image using a means other than simple pixels, the format of the original image doesn't make any difference. However, things such as frame rate and, obviously, resolution will always have an impact on the output size of the media.

Additionally, all codecs have their strengths and weaknesses. Some have trouble with specific kinds of shapes and patterns, or aren't good at replicating sharp edges, or tend to lose detail in dark areas, or any number of possibilities. It all depends on the underlying algorithms and mathematics.

The degree to which these affect the resulting encoded video will vary depending on the precise details of the situation, including which encoder you use and how it's configured. In addition to general codec options, the encoder could be configured to reduce the frame rate, to clean up noise, and/or to reduce the overall resolution of the video during encoding.

Effect of codec configuration on encoded output

The algorithms used to encode video typically use one or more of a number of general techniques to perform their encoding. Generally speaking, any configuration option that is intended to reduce the output size of the video will probably have a negative impact on the overall quality of the video, or will introduce certain types of artifacts into the video. It's also possible to select a lossless form of encoding, which will result in a much larger encoded file but with perfect reproduction of the original video upon decoding.

In addition, each encoder utility may process the source video differently, resulting in differences in the output quality and/or size.

The options available when encoding video, and the values to be assigned to those options, will vary not only from one codec to another but depending on the encoding software you use. The documentation included with your encoding software will help you to understand the specific impact of these options on the encoded video.

Compression artifacts

Artifacts are side effects of a lossy encoding process in which the lost or rearranged data results in visibly negative effects. Once an artifact has appeared, it may linger for a while, because of how video is displayed. Each frame of video is presented by applying a set of changes to the currently-visible frame. This means that any errors or artifacts will compound over time, resulting in glitches or otherwise strange or unexpected deviations in the image that linger for a time.

To resolve this, and to improve seek time through the video data, periodic key frames (also known as intra-frames or i-frames) are placed into the video file. The key frames are full frames, which are used to repair any damage or artifact residue that's currently visible.

Aliasing is a general term for anything that upon being reconstructed from the encoded data does not look the same as it did before compression. There are many forms of aliasing; the most common ones you may see include:

Color edging

Color edging is a type of visual artifact that presents as spurious colors introduced along the edges of colored objects within the scene. These colors have no intentional color relationship to the contents of the frame.

Loss of sharpness

The act of removing data in the process of encoding video requires that some details be lost. If enough compression is applied, parts or potentially all of the image could lose sharpness, resulting in a slightly fuzzy or hazy appearance.

Lost sharpness can make text in the image difficult to read, as text—especially small text—is very detail-oriented content, where minor alterations can significantly impact legibility.

Ringing

Lossy compression algorithms can introduce ringing, an effect where areas outside an object are contaminated with colored pixels generated by the compression algorithm. This happens when an algorithm uses blocks that span a sharp boundary between an object and its background. This is particularly common at higher compression levels.

Note the blue and pink fringes around the edges of the star above (as well as the stepping and other significant compression artifacts). Those fringes are the ringing effect. Ringing is similar in some respects to mosquito noise, except that while the ringing effect is more or less steady and unchanging, mosquito noise shimmers and moves.

Ringing is another type of artifact that can make it particularly difficult to read text contained in your images.

Posterizing

Posterization occurs when the compression results in the loss of color detail in gradients. Instead of smooth transitions through the various colors in a region, the image becomes blocky, with blobs of color that approximate the original appearance of the image.

Note the blockiness of the colors in the plumage of the bald eagle in the photo above (and the snowy owl in the background). The details of the feathers are largely lost due to these posterization artifacts.

Contouring or color banding is a specific form of posterization in which the color blocks form bands or stripes in the image. This occurs when the video is encoded with too coarse a quantization configuration. As a result, the video's contents show a "layered" look, where instead of smooth gradients and transitions, the transitions from color to color are abrupt, causing strips of color to appear.
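A toy example shows why coarse quantization produces bands. The sketch below quantizes a smooth 8-bit gradient directly, which is a deliberate simplification: real codecs typically quantize transform coefficients rather than raw pixel values, and the `quantize` helper is hypothetical.

```javascript
// Snaps an 8-bit value (0..255) to the nearest of `levels` evenly spaced steps.
function quantize(value, levels) {
    const step = 255 / (levels - 1);
    return Math.round(Math.round(value / step) * step);
}

// A smooth gradient: 256 distinct shades.
const gradient = Array.from({ length: 256 }, (_, i) => i);

// Quantized to 8 levels, long runs of neighboring shades collapse to one value,
// which is what appears on screen as a band.
const banded = gradient.map((v) => quantize(v, 8));

console.log(new Set(gradient).size); // 256
console.log(new Set(banded).size);   // 8
```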

In the example image above, note how the sky has bands of different shades of blue, instead of being a consistent gradient as the sky color changes toward the horizon. This is the contouring effect.

Mosquito noise

Mosquito noise is a temporal artifact which presents as noise or edge busyness that appears as a flickering haziness or shimmering that roughly follows outside the edges of objects with hard edges or sharp transitions between foreground objects and the background. The effect can be similar in appearance to ringing.

The photo above shows mosquito noise in a number of places, including in the sky surrounding the bridge. In the upper-right corner, an inset shows a close-up of a portion of the image that exhibits mosquito noise.

Mosquito noise artifacts are most commonly found in MPEG video, but can occur whenever a discrete cosine transform (DCT) algorithm is used; this includes, for example, JPEG still images.

Motion compensation block boundary artifacts

Compression of video generally works by comparing two frames and recording the differences between them, one frame after another, until the end of the video. This technique works well when the camera is fixed in place, or the objects in the frame are relatively stationary, but if there is a great deal of motion in the frame, the number of differences between frames can be so great that compression doesn't do any good.

Motion compensation is a technique that looks for motion (either of the camera or of objects in the frame of view) and determines how many pixels the moving object has moved in each direction. Then that shift is stored, along with a description of the pixels that have moved that can't be described just by that shift. In essence, the encoder finds the moving objects, then builds an internal frame of sorts that looks like the original but with all the objects translated to their new locations. In theory, this approximates the new frame's appearance. Then, to finish the job, the remaining differences are found, then the set of object shifts and the set of pixel differences are stored in the data representing the new frame. This object that describes the shift and the pixel differences is called a residual frame.
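The idea can be illustrated with a heavily simplified, one-dimensional sketch: treat a frame as a row of samples, search for the single shift that best predicts the new frame from the previous one, and store that shift plus the leftover differences. All function names are hypothetical, and real encoders work on two-dimensional blocks with far more sophisticated search and coding.

```javascript
// Shifts a 1D "frame" right by `shift` samples (negative = left), repeating edge samples.
function shiftFrame(frame, shift) {
    const last = frame.length - 1;
    return frame.map((_, i) => frame[Math.min(last, Math.max(0, i - shift))]);
}

// Sum of absolute differences: how badly a prediction matches the real frame.
function sumAbsDiff(a, b) {
    return a.reduce((sum, v, i) => sum + Math.abs(v - b[i]), 0);
}

// Finds the best shift, then records it along with the remaining differences.
function encodeFrame(previous, current, maxShift = 3) {
    let best = { shift: 0, cost: Infinity };
    for (let shift = -maxShift; shift <= maxShift; shift++) {
        const cost = sumAbsDiff(shiftFrame(previous, shift), current);
        if (cost < best.cost) best = { shift, cost };
    }
    const predicted = shiftFrame(previous, best.shift);
    return { shift: best.shift, residual: current.map((v, i) => v - predicted[i]) };
}

// Rebuilds the new frame from the previous frame plus the stored shift and differences.
function decodeFrame(previous, encoded) {
    return shiftFrame(previous, encoded.shift).map((v, i) => v + encoded.residual[i]);
}

const previous = [0, 0, 9, 9, 0, 0, 0, 0];
const current = [0, 0, 0, 0, 9, 9, 0, 1]; // object moved 2 samples, plus one new detail

const encoded = encodeFrame(previous, current);
console.log(encoded.shift);    // 2
console.log(encoded.residual); // [ 0, 0, 0, 0, 0, 0, 0, 1 ]
```

Because the shift explains almost all of the change, the stored differences are nearly all zeros, which is what makes them cheap to compress.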

There are two general types of motion compensation: global motion compensation and block motion compensation. Global motion compensation generally adjusts for camera movements such as tracking, dolly movements, panning, tilting, rolling, and up and down movements. Block motion compensation handles localized changes, looking for smaller sections of the image that can be encoded using motion compensation. These blocks are normally of a fixed size, in a grid, but there are forms of motion compensation that allow for variable block sizes, and even for blocks to overlap.

There are, however, artifacts that can occur due to motion compensation. These occur along block borders, in the form of sharp edges that produce false ringing and other edge effects. These are due to the mathematics involved in the coding of the residual frames, and can be easily noticed before being repaired by the next key frame.

Reduced frame size

In certain situations, it may be useful to reduce the video's dimensions in order to improve the final size of the video file. While the immediate loss of size or smoothness of playback may be a negative factor, careful decision-making can result in a good end result. If a 1080p video is reduced to 720p prior to encoding, the resulting video can be much smaller while having much higher visual quality; even after scaling back up during playback, the result may be better than encoding the original video at full size and accepting the quality hit needed to meet your size requirements.

Reduced frame rate

Similarly, you can remove frames from the video entirely and decrease the frame rate to compensate. This has two benefits: it makes the overall video smaller, and that smaller size allows motion compensation to accomplish even more for you. For example, instead of computing motion differences for two frames that are two pixels apart due to inter-frame motion, skipping every other frame could lead to computing a difference that comes out to four pixels of movement. This lets the overall movement of the camera be represented by fewer residual frames.

The absolute minimum frame rate that a video can be before its contents are no longer perceived as motion by the human eye is about 12 frames per second. Less than that, and the video becomes a series of still images. Motion picture film is typically 24 frames per second, while standard definition television is about 30 frames per second (slightly less, but close enough) and high definition television is between 24 and 60 frames per second. Anything from 24 FPS upward will generally be seen as satisfactorily smooth; 30 or 60 FPS is an ideal target, depending on your needs.

In the end, the decisions about what sacrifices you're able to make are entirely up to you and/or your design team.

Codec details

AV1

The AOMedia Video 1 (AV1) codec is an open format designed by the Alliance for Open Media specifically for internet video. It achieves higher data compression rates than VP9 and H.265/HEVC, and as much as 50% higher rates than AVC. AV1 is fully royalty-free and is designed for use by both the <video> element and by WebRTC.

AV1 currently offers three profiles: main, high, and professional, with increasing support for color depths and chroma subsampling. In addition, a series of levels are specified, each defining limits on a range of attributes of the video. These attributes include frame dimensions, image area in pixels, display and decode rates, average and maximum bit rates, and limits on the number of tiles and tile columns used in the encoding/decoding process.

For example, AV1 level 2.0 offers a maximum frame width of 2048 pixels and a maximum height of 1152 pixels, but its maximum frame size in pixels is 147,456, so you can't actually have a 2048x1152 video at level 2.0. It's worth noting, however, that at least for Firefox and Chrome, the levels are actually ignored at this time when performing software decoding, and the decoder just does the best it can to play the video given the settings provided. For compatibility's sake going forward, however, you should stay within the limits of the level you choose.
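The interplay of the three limits is easy to check in code. This sketch uses only the level 2.0 numbers quoted above; the `fitsLevel` helper and the property names are hypothetical.

```javascript
// Level 2.0 limits as quoted in the text above.
const level2_0 = { maxWidth: 2048, maxHeight: 1152, maxPictureSize: 147456 };

// A frame fits a level only if it satisfies all three limits at once.
function fitsLevel(width, height, level) {
    return (
        width <= level.maxWidth &&
        height <= level.maxHeight &&
        width * height <= level.maxPictureSize
    );
}

console.log(fitsLevel(2048, 1152, level2_0)); // false: 2,359,296 pixels is over the area limit
console.log(fitsLevel(512, 288, level2_0));   // true: exactly 147,456 pixels
console.log(fitsLevel(426, 240, level2_0));   // true: 102,240 pixels
```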

The primary drawback to AV1 at this time is that it is very new, and support is still in the process of being integrated into most browsers. Additionally, encoders and decoders are still being optimized for performance, and hardware encoders and decoders are still mostly in development rather than production. For this reason, encoding a video into AV1 format takes a very long time, since all the work is done in software.

For the time being, because of these factors, AV1 is not yet ready to be your first choice of video codec, but you should watch for it to be ready to use in the future.

AVC (H.264)

The MPEG-4 specification suite's Advanced Video Coding (AVC) standard is specified by the identical ITU H.264 specification and the MPEG-4 Part 10 specification. It's a motion compensation based codec that is widely used today for all sorts of media, including broadcast television, RTP videoconferencing, and as the video codec for Blu-Ray discs.

AVC is highly flexible, with a number of profiles with varying capabilities; for example, the Constrained Baseline Profile is designed for use in videoconferencing and mobile scenarios, using less bandwidth than the Main Profile (which is used for standard definition digital TV in some regions) or the High Profile (used for Blu-Ray Disc video). Most of the profiles use 8-bit color components and 4:2:0 chroma subsampling; the High 10 Profile adds support for 10-bit color, and advanced forms of High 10 add 4:2:2 and 4:4:4 chroma subsampling.

AVC also has special features such as support for multiple views of the same scene (Multiview Video Coding), which allows, among other things, the production of stereoscopic video.

AVC is a proprietary format, however, and numerous patents are owned by multiple parties regarding its technologies. Commercial use of AVC media requires a license, though the MPEG LA patent pool does not require license fees for streaming internet video in AVC format as long as the video is free for end users.

Non-web browser implementations of WebRTC (any implementation which doesn't include the JavaScript APIs) are required to support AVC as a codec in WebRTC calls. While web browsers are not required to do so, some do.

In HTML content for web browsers, AVC is broadly compatible and many platforms support hardware encoding and decoding of AVC media. However, be aware of its licensing requirements before choosing to use AVC in your project!

Firefox support for AVC is dependent upon the operating system's built-in or preinstalled codecs for AVC and its container in order to avoid patent concerns.

H.263

ITU's H.263 codec was designed primarily for use in low-bandwidth situations. In particular, its focus is for video conferencing on PSTN (Public Switched Telephone Networks), RTSP, and SIP (IP-based videoconferencing) systems. Despite being optimized for low-bandwidth networks, it is fairly CPU intensive and may not perform adequately on lower-end computers. The data format is similar to that of MPEG-4 Part 2.

H.263 has never been widely used on the web. Variations on H.263 have been used as the basis for other proprietary formats, such as Flash video or the Sorenson codec. However, no major browser has ever included H.263 support by default. Certain media plugins have enabled support for H.263 media.

Unlike most codecs, H.263 defines fundamentals of an encoded video in terms of the maximum bit rate per frame (picture), or BPPmaxKb. During encoding, a value is selected for BPPmaxKb, and then the video cannot exceed this value for each frame. The final bit rate will depend on this, the frame rate, the compression, and the chosen resolution and block format.

H.263 has been superseded by H.264 and is therefore considered a legacy media format which you generally should avoid using if you can. The only real reason to use H.263 in new projects is if you require support on very old devices on which H.263 is your best choice.

H.263 is a proprietary format, with patents held by a number of organizations and companies, including Telenor, Fujitsu, Motorola, Samsung, Hitachi, Polycom, Qualcomm, and so on. To use H.263, you are legally obligated to obtain the appropriate licenses.

HEVC (H.265)

The High Efficiency Video Coding (HEVC) codec is defined by ITU's H.265 as well as by MPEG-H Part 2 (the still in-development follow-up to MPEG-4). HEVC was designed to support efficient encoding and decoding of video in sizes including very high resolutions (including 8K video), with a structure specifically designed to let software take advantage of modern processors. Theoretically, HEVC can achieve compressed file sizes half that of AVC but with comparable image quality.

For example, each coding tree unit (CTU)—similar to the macroblock used in previous codecs—consists of a tree of luma values for each sample as well as a tree of chroma values for each chroma sample used in the same coding tree unit, as well as any required syntax elements. This structure supports easy processing by multiple cores.

An interesting feature of HEVC is that the main profile supports only 8-bit per component color with 4:2:0 chroma subsampling. Also interesting is that 4:4:4 video is handled specially. Instead of having the luma samples (representing the image's pixels in grayscale) and the Cb and Cr samples (indicating how to alter the grays to create color pixels), the three channels are instead treated as three monochrome images, one for each color, which are then combined during rendering to produce a full-color image.

HEVC is a proprietary format and is covered by a number of patents. Licensing is managed by MPEG LA ; fees are charged to developers rather than to content producers and distributors. Be sure to review the latest license terms and requirements before making a decision on whether or not to use HEVC in your app or website!

Chrome supports HEVC for devices with hardware support on Windows 8+, Linux, and ChromeOS, and for all devices on macOS Big Sur 11+ and Android 5.0+.

Edge (Chromium) supports HEVC for devices with hardware support on Windows 10 1709+ when the HEVC video extensions from the Microsoft Store are installed, and has the same support status as Chrome on other platforms. Edge (Legacy) only supports HEVC for devices with a hardware decoder.

Mozilla will not support HEVC while it is encumbered by patents.

Opera and other Chromium-based browsers have the same support status as Chrome.

Safari supports HEVC for all devices on macOS High Sierra or later.
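Because HEVC support depends on the browser, operating system, and hardware, it is usually safer to probe for it at runtime than to infer it from the user agent. The following is a minimal sketch, not a definitive implementation. The helper name `pickPlayable` and the candidate list are hypothetical, and the codec strings are illustrative assumptions (a commonly used HEVC Main profile string and an H.264 Baseline string). It relies only on `HTMLMediaElement.canPlayType()`, which returns `""`, `"maybe"`, or `"probably"`.

```javascript
// Candidate formats in order of preference. The codec strings are
// illustrative assumptions, not values taken from this article.
const CANDIDATES = [
  { name: "hevc", type: 'video/mp4; codecs="hvc1.1.6.L93.B0"' },
  { name: "avc", type: 'video/mp4; codecs="avc1.42E01E"' },
];

// Returns the first candidate the probe accepts, or null if none is playable.
// `canPlay` is any function shaped like HTMLMediaElement.canPlayType():
// it returns "", "maybe", or "probably" for a given MIME type string.
function pickPlayable(candidates, canPlay) {
  for (const candidate of candidates) {
    if (canPlay(candidate.type) !== "") {
      return candidate;
    }
  }
  return null;
}

// In a browser, probe with a real <video> element. Outside a browser
// (for example in Node.js) this branch is skipped.
if (typeof document !== "undefined") {
  const video = document.createElement("video");
  const choice = pickPlayable(CANDIDATES, (type) => video.canPlayType(type));
  console.log(choice ? `Using ${choice.name}` : "No supported format");
}
```

Passing the probe in as a function keeps the selection logic testable outside a browser. Note that `"maybe"` is treated as playable here, which is a design choice rather than a requirement.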

MP4V-ES

The MPEG-4 Video Elemental Stream (MP4V-ES) format is part of the MPEG-4 Part 2 Visual standard. While MPEG-4 Part 2 video is generally not used because it lacks compelling value relative to other codecs, MP4V-ES does have some usage on mobile. MP4V is essentially H.263 encoding in an MPEG-4 container.

Its primary purpose is to stream MPEG-4 audio and video over an RTP session. However, MP4V-ES is also used to transmit MPEG-4 audio and video over a mobile connection using 3GP.

You almost certainly don't want to use this format, since it isn't supported in a meaningful way by any major browser and is quite obsolete. Files of this type should have the extension .mp4v, but are sometimes inaccurately labeled .mp4.

Firefox supports MP4V-ES in 3GP containers only.

Chrome does not support MP4V-ES; however, ChromeOS does.

MPEG-1 Part 2 Video

MPEG-1 Part 2 Video was unveiled at the beginning of the 1990s. Unlike the later MPEG video standards, MPEG-1 was created solely by MPEG, without the ITU's involvement.

Because any MPEG-2 decoder can also play MPEG-1 video, it's compatible with a wide variety of software and hardware devices. There are no active patents remaining in relation to MPEG-1 video, so it may be used free of any licensing concerns. However, few web browsers support MPEG-1 video without the support of a plugin, and with plugin use deprecated in web browsers, these are generally no longer available. This makes MPEG-1 a poor choice for use in websites and web applications.

MPEG-2 Part 2 Video

MPEG-2 Part 2 is the video format defined by the MPEG-2 specification, and is also occasionally referred to by its ITU designation, H.262. It is very similar to MPEG-1 video—in fact, any MPEG-2 player can automatically handle MPEG-1 without any special work—except it has been expanded to support higher bit rates and enhanced encoding techniques.

The goal was to allow MPEG-2 to compress standard definition television, so interlaced video is also supported. The standard definition compression rate and the quality of the resulting video met needs well enough that MPEG-2 is the primary video codec used for DVD video media.

MPEG-2 has several profiles available with different capabilities. Each profile is in turn available at four levels, each of which increases attributes of the video such as frame dimensions, frame rate, and bit rate. Most profiles use Y'CbCr with 4:2:0 chroma subsampling, but more advanced profiles support 4:2:2 as well. For example, the ATSC specification for television used in North America supports MPEG-2 video in high definition using the Main Profile at High Level, allowing 4:2:0 video at both 1920 x 1080 (30 FPS) and 1280 x 720 (60 FPS), at a maximum bit rate of 80 Mbps.

However, few web browsers support MPEG-2 without the support of a plugin, and with plugin use deprecated in web browsers, these are generally no longer available. This makes MPEG-2 a poor choice for use in websites and web applications.

Theora

Theora, developed by Xiph.org, is an open and free video codec which may be used without royalties or licensing. Theora is comparable in quality and compression rates to MPEG-4 Part 2 Visual and AVC, making it a very good if not top-of-the-line choice for video encoding. But its status as being free from any licensing concerns and its relatively low CPU resource requirements make it a popular choice for many software and web projects. The low CPU impact is particularly useful since there are no hardware decoders available for Theora.

Theora was originally based upon the VP3 codec by On2 Technologies. The codec and its specification were released under the LGPL license and entrusted to Xiph.org, which then developed it into the Theora standard.

One drawback to Theora is that it only supports 8 bits per color component, with no option to use 10 or more in order to avoid color banding. That said, 8 bits per component is still the most commonly used color format today, so this is only a minor inconvenience in most cases. Also, Theora can only be used in an Ogg container. The biggest drawback of all, however, is that it is not supported by Safari, leaving Theora unavailable not only on macOS but also on millions of iPhones and iPads.

The Theora Cookbook offers additional details about Theora as well as the Ogg container format it is used within.

Edge supports Theora with the optional Web Media Extensions add-on.

VP8

The Video Processor 8 (VP8) codec was initially created by On2 Technologies. Following their purchase of On2, Google released VP8 as an open and royalty-free video format under a promise not to enforce the relevant patents. In terms of quality and compression rate, VP8 is comparable to AVC.

If supported by the browser, VP8 allows video with an alpha channel, so the background shows through the video to a degree specified by each pixel's alpha component.

There is good browser support for VP8 in HTML content, especially within WebM files. This makes VP8 a good candidate for your content, although VP9 is an even better choice if available to you. Web browsers are required to support VP8 for WebRTC, but not all browsers that do so also support it in HTML audio and video elements.

VP9

Video Processor 9 (VP9) is the successor to the older VP8 standard developed by Google. Like VP8, VP9 is entirely open and royalty-free. Its encoding and decoding performance is comparable to or slightly faster than that of AVC, but with better quality. VP9's encoded video quality is comparable to that of HEVC at similar bit rates.

VP9's main profile supports only 8-bit color depth at 4:2:0 chroma subsampling levels, but its profiles include support for deeper color and the full range of chroma subsampling modes. It supports several HDR implementations, and offers substantial freedom in selecting frame rates, aspect ratios, and frame sizes.

VP9 is widely supported by browsers, and hardware implementations of the codec are fairly common. VP9 is one of the two video codecs mandated by WebM (the other being VP8). Note, however, that Safari support for WebM and VP9 was only introduced in version 14.1, so if you choose to use VP9, consider offering a fallback format such as AVC or HEVC for iPhone, iPad, and Mac users.

VP9 is a good choice if you are able to use a WebM container (and can provide fallback video when needed). This is especially true if you wish to use an open codec rather than a proprietary one.

Color spaces supported: Rec. 601, Rec. 709, Rec. 2020, SMPTE C, SMPTE-240M (obsolete; replaced by Rec. 709), and sRGB.

Browser compatibility: all versions of Chrome, Edge, Firefox, Opera, and Safari.

Firefox only supports VP8 in MSE when no H.264 hardware decoder is available. Use MediaSource.isTypeSupported() to check for availability.
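As a rough illustration of that check, the sketch below reports which of a few types the Media Source Extensions implementation says it can handle. The helper name `mseSupport` and the specific codec strings are assumptions chosen for illustration. The only browser API it relies on is the static `MediaSource.isTypeSupported()` method, which returns a boolean.

```javascript
// MIME types to probe. The codec parameters are illustrative assumptions.
const MSE_TYPES = {
  vp9: 'video/webm; codecs="vp09.00.10.08"',
  vp8: 'video/webm; codecs="vp8"',
  avc: 'video/mp4; codecs="avc1.42E01E"',
};

// Maps each name to true or false using the supplied probe function.
// `isTypeSupported` should behave like MediaSource.isTypeSupported().
function mseSupport(types, isTypeSupported) {
  const result = {};
  for (const [name, type] of Object.entries(types)) {
    result[name] = Boolean(isTypeSupported(type));
  }
  return result;
}

// Only query the real API where MSE exists; it is absent in Node.js and
// in browsers without Media Source Extensions.
if (typeof MediaSource !== "undefined") {
  console.log(mseSupport(MSE_TYPES, (type) => MediaSource.isTypeSupported(type)));
}
```

A result of `false` for `vp8` in Firefox would be consistent with the note above on machines that have an H.264 hardware decoder.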

Choosing a video codec

The decision as to which codec or codecs to use begins with a series of questions to prepare yourself:

- Do you wish to use an open format, or are proprietary formats also to be considered?

- Do you have the resources to produce more than one format for each of your videos? The ability to provide a fallback option vastly simplifies the decision-making process.

- Are there any browsers you're willing to sacrifice compatibility with?

- How old is the oldest version of web browser you need to support? For example, do you need to work on every browser shipped in the past five years, or just the past one year?

In the sections below, we offer recommended codec selections for specific use cases. For each use case, you'll find up to two recommendations. If the codec which is considered best for the use case is proprietary or may require royalty payments, then two options are provided: first, an open and royalty-free option, followed by the proprietary one.

If you are only able to offer a single version of each video, you can choose the format that's most appropriate for your needs. The first one is recommended as being a good combination of quality, performance, and compatibility. The second option will be the most broadly compatible choice, at the expense of some amount of quality, performance, and/or size.

Recommendations for everyday videos

First, let's look at the best options for videos presented on a typical website such as a blog, informational site, small business website where videos are used to demonstrate products (but not where the videos themselves are a product), and so forth.

- A WebM container using the VP9 codec for video and the Opus codec for audio. These are all open, royalty-free formats which are generally well-supported, although only in quite recent browsers, which is why a fallback is a good idea.

  ```html
  <video controls src="filename.webm"></video>
  ```

- An MP4 container and the AVC (H.264) video codec, ideally with AAC as your audio codec. This is because the MP4 container with AVC and AAC codecs within is a broadly supported combination (by every major browser, in fact), and the quality is typically good for most use cases. Make sure you verify your compliance with the license requirements, however.

  ```html
  <video controls>
    <source type="video/webm" src="filename.webm" />
    <source type="video/mp4" src="filename.mp4" />
  </video>
  ```

Note: The <video> element requires a closing </video> tag, whether or not you have any <source> elements inside it.
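If the markup is generated rather than written by hand, the order of the `<source>` elements matters: the browser walks them in document order and uses the first one it can play. The sketch below shows one way to build such markup from an ordered list. The function name `videoMarkup` is hypothetical, and it assumes the `type` and `src` values are trusted strings, since no escaping is performed.

```javascript
// Builds <video> markup with one <source> per format. Browsers try the
// sources in document order and play the first one they support, so the
// preferred format must come first in the array.
// Assumption: `type` and `src` are trusted values (no HTML escaping here).
function videoMarkup(sources) {
  const lines = sources.map(
    ({ type, src }) => `  <source type="${type}" src="${src}" />`
  );
  return ["<video controls>", ...lines, "</video>"].join("\n");
}

// WebM first, MP4 as the broadly compatible fallback.
const markup = videoMarkup([
  { type: "video/webm", src: "filename.webm" },
  { type: "video/mp4", src: "filename.mp4" },
]);
console.log(markup);
```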

Recommendations for high-quality video presentation

If your mission is to present video at the highest possible quality, you will probably benefit from offering as many formats as possible, as the codecs capable of the best quality tend also to be the newest, and thus the most likely to have gaps in browser compatibility.

- A WebM container using AV1 for video and Opus for audio. If you're able to use the High or Professional profile when encoding AV1, at a high level like 6.3, you can get very high bit rates at 4K or 8K resolution, while maintaining excellent video quality. Encoding your audio using Opus's Fullband profile at a 48 kHz sample rate maximizes the audio bandwidth captured, covering nearly the entire frequency range within human hearing.

  ```html
  <video controls src="filename.webm"></video>
  ```

- An MP4 container with the HEVC codec, using one of the advanced Main profiles, such as Main 4:2:2 with 10 or 12 bits of color depth, or even the Main 4:4:4 profile at up to 16 bits per component. At a high bit rate, this provides excellent graphics quality with remarkable color reproduction. In addition, you can optionally include HDR metadata to provide high dynamic range video. For audio, use the AAC codec at a high sample rate (at least 48 kHz but ideally 96 kHz) and encoded with complex encoding rather than fast encoding.

  ```html
  <video controls>
    <source type="video/webm" src="filename.webm" />
    <source type="video/mp4" src="filename.mp4" />
  </video>
  ```

Recommendations for archival, editing, or remixing

There are not currently any lossless—or even near-lossless—video codecs generally available in web browsers. The reason for this is simple: video is huge. Lossless compression is by definition less effective than lossy compression. For example, uncompressed 1080p video (1920 by 1080 pixels) with 4:2:0 chroma subsampling needs at least 1.5 Gbps. Using lossless compression such as FFV1 (which is not supported by web browsers) could perhaps reduce that to somewhere around 600 Mbps, depending on the content. That's still a huge number of bits to pump through a connection every second, and is not currently practical for any real-world use.
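The uncompressed figure can be checked with a little arithmetic. The sketch below is a worked example under stated assumptions: the text doesn't give a frame rate or bit depth, so 60 frames per second and 8 bits per sample are assumed here, and the function name `uncompressedBitsPerSecond` is hypothetical. With 4:2:0 subsampling, each of the two chroma planes carries a quarter as many samples as the luma plane, so there are 1.5 samples per pixel on average.

```javascript
// Raw bit rate of uncompressed video.
// Chroma samples per pixel (both chroma planes combined):
//   4:4:4 -> 2, 4:2:2 -> 1, 4:2:0 -> 0.5
function uncompressedBitsPerSecond({ width, height, fps, bitDepth, chroma }) {
  const chromaFactor = { "4:4:4": 2, "4:2:2": 1, "4:2:0": 0.5 }[chroma];
  if (chromaFactor === undefined) {
    throw new Error(`Unknown chroma format: ${chroma}`);
  }
  // One luma sample per pixel plus the chroma samples, times bits per sample.
  return width * height * (1 + chromaFactor) * bitDepth * fps;
}

// Assumed parameters: 1080p, 60 fps, 8 bits per sample, 4:2:0.
const bps = uncompressedBitsPerSecond({
  width: 1920,
  height: 1080,
  fps: 60,
  bitDepth: 8,
  chroma: "4:2:0",
});
console.log(`${(bps / 1e9).toFixed(2)} Gbps`); // prints "1.49 Gbps"
```

Under these assumptions the result is about 1.49 Gbps, in line with the roughly 1.5 Gbps quoted above. Halving the frame rate to 30 fps halves the figure, and moving to 10-bit samples raises it by a quarter.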

This is the case even though some of the lossy codecs have a lossless mode available; the lossless modes are not implemented in any current web browsers. The best you can do is to select a high-quality codec that uses lossy compression and configure it to perform as little compression as possible. One way to do this is to configure the codec to use "fast" compression, which inherently means less compression is achieved.

Preparing video externally

To prepare video for archival purposes from outside your website or app, use a utility that performs compression on the original uncompressed video data. For example, the free x264 utility can be used to encode video in AVC format using a very high bit rate:

While other codecs may have better best-case quality levels when compressing the video by a significant margin, their encoders tend to be slow enough that the nearly-lossless encoding you get with this compression is vastly faster at about the same overall quality level.

Recording video