Time Travel with Delta Tables in Databricks?

- Post author: rimmalapudi

- Post category: Apache Spark

- Post last modified: March 27, 2024

- Reading time: 11 mins read

What is time travel on a Delta table in Databricks? In day-to-day ETL work, large volumes of data flow into the data lake, and rows are constantly inserted, updated, and deleted. Time travel is a key Delta Lake feature in Databricks for working with that changing data.

Delta Lake records every change to a table in a transaction log. With this log you can query a historical version of data that changes over time, go back to a previous snapshot of a Delta table, and support auditing, logging, and data tracking.

Table of contents

- 1. Challenges in data transition

- 2. What is a Delta table in Databricks

- 3. Create a Delta table in Databricks

- 4. Update Delta records and check history

- 5.1. Using the timestamp

- 5.2. Using version number

- 6. Conclusion

- Auditing: Reviewing data changes is critical for compliance and for debugging. A data lake without time travel fails in such scenarios because we can't roll back to a previous state once changes are made.

- Reproduce experiments and reports: ML engineers build many models from a given set of data. When they try to reproduce a model later, the source data has typically been modified, and they struggle to reproduce their experiments.

- Rollbacks: For data transitions that only append, rollbacks may be possible with date-based partitioning. With upserts or accidental changes, rolling data back to a previous state is very complicated.

Delta’s time travel capabilities in Azure Databricks simplify building data pipelines for the above challenges. As you write into a Delta table or directory, every operation is automatically versioned and stored in the transaction log. You can access the different versions of the data in two ways:

- Using a timestamp

- Using a version number

Now let us create a Delta table, modify it, and try out the time travel feature.

Note: By default, all the tables that are created in Databricks are Delta tables.

Here, I am using Databricks Community Edition ( https://community.cloud.databricks.com/ ). Create a cluster and attach it to your notebook.

Let’s create the table and insert a few rows into it.
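The original notebook cell is not reproduced here, so the following is only a minimal sketch of what it might look like. The column names (`id`, `name`, `marks`) and the sample rows are assumptions made for illustration; `spark` is the session that a Databricks notebook provides.

```python
# Hypothetical schema and rows: the original post does not show them.
CREATE_STUDENT = "CREATE TABLE IF NOT EXISTS student (id INT, name STRING, marks INT)"

INSERT_STUDENTS = """
INSERT INTO student VALUES
  (1, 'Asha', 70),
  (2, 'Ben', 65),
  (3, 'Chen', 80)
"""


def create_student_table(spark):
    """Create the table (Delta by default on Databricks) and load three rows."""
    spark.sql(CREATE_STUDENT)   # first commit: table creation
    spark.sql(INSERT_STUDENTS)  # second commit: the insert
    # The `format` column of DESCRIBE DETAIL reports the table format.
    return spark.sql("DESCRIBE DETAIL student")
```

In a notebook you would call `display(create_student_table(spark))` to see the table details.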

You will get the below output.

Here we have created a student table with some records, and as you can see, its format is delta by default.

Let’s update the rows with ids 1 and 3 in the student Delta table and delete the row with id 2. Add another cell to run the update and the delete, then display the table history.
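A sketch of such a cell follows. The `marks` column is a hypothetical name (the original statements are not shown); the `WHERE` clauses follow the ids mentioned above.

```python
# `marks` is an assumed column; the ids come from the text above.
UPDATE_SQL = "UPDATE student SET marks = marks + 5 WHERE id IN (1, 3)"
DELETE_SQL = "DELETE FROM student WHERE id = 2"
HISTORY_SQL = "DESCRIBE HISTORY student"


def modify_and_show_history(spark):
    """Run the update and the delete, then return the table history."""
    spark.sql(UPDATE_SQL)   # creates one new table version
    spark.sql(DELETE_SQL)   # creates another new table version
    return spark.sql(HISTORY_SQL)
```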

You will see output similar to the following in Databricks.

As the history shows, there are 4 versions in total since the table was created, with the top record being the most recent change:

- version 0: Created the student table at 2022-11-14T12:09:24.000+0000

- version 1: Inserted records into the table at 2022-11-14T12:09:29.000+0000

- version 2: Updated the values of ids 1 and 3 at 2022-11-14T12:09:35.000+0000

- version 3: Deleted the record with id 2 at 2022-11-14T12:09:39.000+0000

Notice that the DESCRIBE HISTORY result shows the version, the timestamp of the transaction, the operation, its parameters, and metrics. The metrics show the number of rows and files changed.

5. Query Delta Table in Time Travel

As we already know, Delta tables in Databricks support time travel, either by timestamp or by version number.

Note: Whichever approach you use, the query is an ordinary SQL SELECT statement extended with a TIMESTAMP AS OF or VERSION AS OF clause.

First, let’s see the initial table, as it was before the update and the delete. To get this, use the timestamp column from the history.

Now let’s see how the data is updated and deleted after each statement.
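The queries themselves are missing from this copy of the post. As a sketch, they could be generated from the commit timestamps listed in the history above; the `student` table name comes from the walkthrough, and the `Z` suffix assumes those timestamps are UTC.

```python
TABLE = "student"

# Commit timestamps taken from the history listing above (UTC).
COMMITS = {
    "after insert": "2022-11-14T12:09:29Z",
    "after update": "2022-11-14T12:09:35Z",
    "after delete": "2022-11-14T12:09:39Z",
}


def as_of_timestamp(table, timestamp):
    """Build a SELECT that reads the table as it was at `timestamp`."""
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


queries = {label: as_of_timestamp(TABLE, ts) for label, ts in COMMITS.items()}
for label, query in queries.items():
    print(f"{label}: {query}")
```

Each string can be passed to `spark.sql(...)` in a notebook cell. A timestamp resolves to the latest version committed at or before it.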

From the above examples, I hope it is clear how to query the table back in time.

Every operation on the Delta table is marked with a version number, and you can use that version to travel back in time as well.

Below I have executed some queries to get the initial version of the table and its state after the update and after the delete. The results are the same as those we got with a timestamp.
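The version-based queries are likewise not shown in this copy. A minimal sketch, assuming the version numbers from the history above (1 after the insert, 2 after the update, 3 after the delete):

```python
def as_of_version(table, version):
    """Build a SELECT that reads a specific version of a Delta table."""
    if version < 0:
        raise ValueError("version numbers start at 0")
    return f"SELECT * FROM {table} VERSION AS OF {int(version)}"


# Versions as listed in the history above.
for label, version in [("initial data", 1), ("after update", 2), ("after delete", 3)]:
    print(f"{label}: {as_of_version('student', version)}")
```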

Time travel on Delta tables in Databricks improves developer productivity. It helps:

- Data scientists and ML experts manage their experiments better by going back to the source of truth.

- Data engineers simplify their pipelines and roll back bad writes to a previous state.

- Data analysts report more easily.


Tutorial: Delta Lake


This tutorial introduces common Delta Lake operations on Azure Databricks, including the following:

- Create a table.

- Upsert to a table.

- Read from a table.

- Display table history.

- Query an earlier version of a table.

- Optimize a table.

- Add a Z-order index.

- Vacuum unreferenced files.

You can run the example Python, R, Scala, and SQL code in this article from within a notebook attached to an Azure Databricks cluster. You can also run the SQL code in this article from within a query associated with a SQL warehouse in Databricks SQL.

Some of the following code examples use a two-level namespace notation consisting of a schema (also called a database) and a table or view (for example, default.people10m). To use these examples with Unity Catalog, replace the two-level namespace with Unity Catalog three-level namespace notation consisting of a catalog, schema, and table or view (for example, main.default.people10m).

Create a table

All tables created on Azure Databricks use Delta Lake by default.

Delta Lake is the default for all reads, writes, and table creation commands on Azure Databricks.
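The code for this step did not survive extraction. A minimal sketch of creating a managed table from existing data might look like the following; `source_path` is a placeholder for wherever your source Delta data lives, and `default.people10m` is the table name used elsewhere in this tutorial.

```python
def create_table_from_data(spark, source_path, table_name="default.people10m"):
    """Load existing Delta data and save it as a managed table.

    The table schema is inferred from the DataFrame being written.
    """
    df = spark.read.format("delta").load(source_path)
    df.write.saveAsTable(table_name)
    return table_name
```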

The preceding operations create a new managed table by using the schema that was inferred from the data. For information about available options when you create a Delta table, see CREATE TABLE.

For managed tables, Azure Databricks determines the location for the data. To get the location, you can use the DESCRIBE DETAIL statement, for example:
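As a sketch, the location can be read from the `location` column of the `DESCRIBE DETAIL` result. The helper name is hypothetical.

```python
def table_location(spark, table_name):
    """Return the storage location reported by DESCRIBE DETAIL."""
    detail = spark.sql(f"DESCRIBE DETAIL {table_name}").first()
    return detail["location"]
```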

Sometimes you may want to create a table by specifying the schema before inserting data. You can complete this with the following SQL commands:
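The SQL is missing from this copy. A sketch that builds such a statement is shown below; `gender` and `birthDate` are columns mentioned later in this tutorial, while the remaining columns and their types are assumptions for illustration.

```python
# Column list is partly assumed: only gender and birthDate appear in the text.
PEOPLE_COLUMNS = [
    ("id", "INT"),
    ("firstName", "STRING"),
    ("lastName", "STRING"),
    ("gender", "STRING"),
    ("birthDate", "TIMESTAMP"),
]


def create_table_sql(table, columns):
    """Build a CREATE TABLE statement with an explicit schema."""
    column_defs = ",\n  ".join(f"{name} {dtype}" for name, dtype in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n  {column_defs}\n)"


print(create_table_sql("default.people10m", PEOPLE_COLUMNS))
```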

In Databricks Runtime 13.3 LTS and above, you can use CREATE TABLE LIKE to create a new empty Delta table that duplicates the schema and table properties for a source Delta table. This can be especially useful when promoting tables from a development environment into production, such as in the following code example:

You can also use the DeltaTableBuilder API in Delta Lake to create tables. Compared to the DataFrameWriter APIs, this API makes it easier to specify additional information like column comments, table properties, and generated columns.

This feature is in Public Preview.
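A minimal sketch of the builder in Python follows. It assumes the `delta-spark` package is available (it is preinstalled on Databricks clusters); the columns, comment, and property shown are illustrative choices, not the tutorial's original example.

```python
def create_people_table(spark, table_name="default.people10m"):
    """Create an empty Delta table with the DeltaTableBuilder API."""
    # Imported here so the module loads even where delta-spark is absent.
    from delta.tables import DeltaTable

    return (
        DeltaTable.createIfNotExists(spark)
        .tableName(table_name)
        .addColumn("id", "INT")
        .addColumn("firstName", "STRING", comment="given name")  # column comment
        .addColumn("gender", "STRING")
        .addColumn("birthDate", "TIMESTAMP")
        .property("description", "table with people data")  # table property
        .execute()
    )
```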

Upsert to a table

To merge a set of updates and insertions into an existing Delta table, you use the MERGE INTO statement. For example, the following statement takes data from the source table and merges it into the target Delta table. When there is a matching row in both tables, Delta Lake updates the data column using the given expression. When there is no matching row, Delta Lake adds a new row. This operation is known as an upsert.

If you specify *, this updates or inserts all columns in the target table. This assumes that the source table has the same columns as those in the target table, otherwise the query will throw an analysis error.

You must specify a value for every column in your table when you perform an INSERT operation (for example, when there is no matching row in the existing dataset). However, you do not need to update all values.
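The statement itself is missing here. As a sketch, an upsert using `*` could look like this; the source table name `people10mupdates` and the join key `id` are assumptions.

```python
# Source table name and join key are assumed for illustration.
MERGE_SQL = """
MERGE INTO default.people10m AS target
USING default.people10mupdates AS source
ON target.id = source.id
WHEN MATCHED THEN UPDATE SET *
WHEN NOT MATCHED THEN INSERT *
"""


def upsert_people(spark):
    """Update matching rows and insert the rest in one atomic commit."""
    return spark.sql(MERGE_SQL)
```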

To see the results, query the table.

Read a table

You access data in Delta tables by the table name or the table path, as shown in the following examples:
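The examples are missing from this copy; a minimal sketch of both access styles, using the table name and path that appear later in this tutorial:

```python
def read_by_name(spark, table_name="default.people10m"):
    """Read a Delta table registered in the metastore."""
    return spark.read.table(table_name)


def read_by_path(spark, path="/tmp/delta/people-10m"):
    """Read a Delta table directly from its storage path."""
    return spark.read.format("delta").load(path)


def select_by_path_sql(path):
    """SQL equivalent of a path-based read: delta.`<path>`."""
    return f"SELECT * FROM delta.`{path}`"
```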

Write to a table

Delta Lake uses standard syntax for writing data to tables.

To atomically add new data to an existing Delta table, use append mode as in the following examples:

To atomically replace all the data in a table, use overwrite mode as in the following examples:
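Both modes can be sketched with the standard DataFrameWriter; `df` stands for any DataFrame whose schema matches the target table, and the helper name is hypothetical.

```python
def write_table(df, table_name, replace=False):
    """Append to a Delta table, or atomically replace its contents."""
    mode = "overwrite" if replace else "append"
    df.write.mode(mode).saveAsTable(table_name)
    return mode
```

Either call produces a new table version, so the previous contents remain reachable through time travel until they are vacuumed.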

Update a table

You can update data that matches a predicate in a Delta table. For example, in a table named people10m or a path at /tmp/delta/people-10m, to change an abbreviation in the gender column from M or F to Male or Female, you can run the following:
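The original statements are not included in this copy. One way to express the change is a pair of SQL `UPDATE` statements, sketched here:

```python
GENDER_LABELS = {"M": "Male", "F": "Female"}


def gender_update_statements(table):
    """Build one UPDATE per abbreviation in the gender column."""
    return [
        f"UPDATE {table} SET gender = '{full}' WHERE gender = '{abbr}'"
        for abbr, full in GENDER_LABELS.items()
    ]


for statement in gender_update_statements("default.people10m"):
    print(statement)
```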

Delete from a table

You can remove data that matches a predicate from a Delta table. For instance, in a table named people10m or a path at /tmp/delta/people-10m, to delete all rows corresponding to people with a value in the birthDate column from before 1955, you can run the following:

delete removes the data from the latest version of the Delta table but does not remove it from the physical storage until the old versions are explicitly vacuumed. See vacuum for details.
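A sketch of the delete described above, written as a small SQL builder (the helper name is hypothetical):

```python
def delete_before_sql(table, column, cutoff_date):
    """Build a DELETE that removes rows dated before `cutoff_date`."""
    return f"DELETE FROM {table} WHERE {column} < '{cutoff_date}'"


print(delete_before_sql("default.people10m", "birthDate", "1955-01-01"))
```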

Display table history

To view the history of a table, use the DESCRIBE HISTORY statement, which provides provenance information, including the table version, operation, user, and so on, for each write to a table.
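For example, a small helper (hypothetical name) that optionally limits the output to the most recent commits:

```python
def table_history(spark, table_name, limit=None):
    """Return the commit history of a Delta table, newest first."""
    sql = f"DESCRIBE HISTORY {table_name}"
    if limit is not None:
        sql += f" LIMIT {int(limit)}"
    return spark.sql(sql)
```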

Query an earlier version of the table (time travel)

Delta Lake time travel allows you to query an older snapshot of a Delta table.

To query an older version of a table, specify a version or timestamp in a SELECT statement. For example, to query version 0 from the history above, use:

For timestamps, only date or timestamp strings are accepted, for example, "2019-01-01" and "2019-01-01T00:00:00.000Z".

DataFrameReader options allow you to create a DataFrame from a Delta table that is fixed to a specific version of the table, for example in Python:

or, alternately:

For details, see Work with Delta Lake table history .
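The SQL and Python snippets for this section are missing from this copy. The sketch below shows both routes under stated assumptions: the SQL builder uses the `VERSION AS OF` and `TIMESTAMP AS OF` clauses, and the reader uses the `versionAsOf` option on a path-based read. Function names are hypothetical.

```python
def time_travel_sql(table, version=None, timestamp=None):
    """Build a SELECT against an earlier snapshot of a Delta table."""
    if (version is None) == (timestamp is None):
        raise ValueError("give exactly one of version or timestamp")
    if version is not None:
        return f"SELECT * FROM {table} VERSION AS OF {int(version)}"
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


def read_version(spark, path, version):
    """Create a DataFrame pinned to one version of the table at `path`."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)


print(time_travel_sql("default.people10m", version=0))
print(time_travel_sql("default.people10m", timestamp="2019-01-01"))
```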

Optimize a table

Once you have performed multiple changes to a table, you might have a lot of small files. To improve the speed of read queries, you can use OPTIMIZE to collapse small files into larger ones:

Z-order by columns

To improve read performance further, you can co-locate related information in the same set of files by Z-Ordering. This co-locality is automatically used by Delta Lake data-skipping algorithms to dramatically reduce the amount of data that needs to be read. To Z-Order data, you specify the columns to order on in the ZORDER BY clause. For example, to co-locate by gender, run:
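A sketch that covers both plain compaction and Z-Ordering (the helper name is hypothetical):

```python
def optimize_sql(table, zorder_by=()):
    """Build an OPTIMIZE statement, optionally with a ZORDER BY clause."""
    sql = f"OPTIMIZE {table}"
    if zorder_by:
        sql += " ZORDER BY (" + ", ".join(zorder_by) + ")"
    return sql


print(optimize_sql("default.people10m"))
print(optimize_sql("default.people10m", zorder_by=["gender"]))
```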

For the full set of options available when running OPTIMIZE, see Compact data files with optimize on Delta Lake.

Clean up snapshots with VACUUM

Delta Lake provides snapshot isolation for reads, which means that it is safe to run OPTIMIZE even while other users or jobs are querying the table. Eventually, however, you should clean up old snapshots. You can do this by running the VACUUM command:
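A sketch of building the command, including the optional `RETAIN ... HOURS` and `DRY RUN` clauses (the helper name is hypothetical):

```python
def vacuum_sql(table, retain_hours=None, dry_run=False):
    """Build a VACUUM statement for a Delta table."""
    sql = f"VACUUM {table}"
    if retain_hours is not None:
        sql += f" RETAIN {int(retain_hours)} HOURS"
    if dry_run:
        sql += " DRY RUN"  # only list the files that would be deleted
    return sql


print(vacuum_sql("default.people10m", dry_run=True))
```

Keep in mind that once the data files behind an old version are vacuumed, that version can no longer be queried with time travel.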

For details on using VACUUM effectively, see Remove unused data files with vacuum.


MungingData

Piles of precious data.

Introduction to Delta Lake and Time Travel

Delta Lake is a wonderful technology that adds powerful features to Parquet data lakes.

This blog post demonstrates how to create and incrementally update Delta lakes.

We will learn how the Delta transaction log stores data lake metadata.

Then we’ll see how the transaction log allows us to time travel and explore our data at a given point in time.

Creating a Delta data lake

Let’s create a Delta lake from a CSV file with data on people. Here’s the CSV data we’ll use:

Here’s the code that’ll read the CSV file into a DataFrame and write it out as a Delta data lake (all of the code in this post is stored in this GitHub repo).
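The original snippet is not included in this copy. A PySpark sketch of the same idea follows; the paths are placeholders, and the `header` option assumes the CSV file has a header row.

```python
def csv_to_delta(spark, csv_path, lake_path):
    """Read a CSV file and write it out as a Delta table at `lake_path`."""
    df = spark.read.option("header", "true").csv(csv_path)
    df.write.format("delta").mode("overwrite").save(lake_path)
    return df
```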

The person_data_lake directory will contain these files:

The data is stored in a Parquet file and the metadata is stored in the _delta_log/00000000000000000000.json file.

The JSON file contains information on the write transaction, schema of the data, and what file was added. Let’s inspect the contents of the JSON file.
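The file contents are not reproduced here. Each line of a commit file is a separate JSON object describing one action, so it can be inspected with the standard library alone. The sample below is made up and heavily trimmed; it only illustrates the shape of the entries.

```python
import json


def read_actions(commit_text):
    """Parse a Delta commit file: one JSON action per line."""
    return [json.loads(line) for line in commit_text.splitlines() if line.strip()]


def added_files(actions):
    """Return the data file paths registered by `add` actions."""
    return [action["add"]["path"] for action in actions if "add" in action]


# Illustrative content only (not the real file from this post).
SAMPLE = "\n".join([
    json.dumps({"commitInfo": {"operation": "WRITE"}}),
    json.dumps({"protocol": {"minReaderVersion": 1, "minWriterVersion": 2}}),
    json.dumps({"metaData": {"format": {"provider": "parquet"}}}),
    json.dumps({"add": {"path": "part-00000-example.snappy.parquet", "size": 1024}}),
])

actions = read_actions(SAMPLE)
print([next(iter(action)) for action in actions])  # action types, in file order
print(added_files(actions))
```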

Incrementally updating Delta data lake

Let’s use some New York City taxi data to build and then incrementally update a Delta data lake.

Here’s the code that’ll initially build the Delta data lake:

This code creates a Parquet file and a _delta_log/00000000000000000000.json file.

Let’s inspect the contents of the incremental Delta data lake.

The Delta lake contains 5 rows of data after the first load.

Let’s load another file into the Delta data lake with SaveMode.Append:
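The original Scala snippets are missing from this copy. The PySpark sketch below shows the two loads and the name of the log file each commit produces; the paths are placeholders, and using overwrite mode for the first load is an assumption.

```python
def commit_file(version):
    """Name of the transaction log file for a given table version."""
    return f"_delta_log/{version:020d}.json"


def load_into_lake(spark, csv_path, lake_path, first_load=False):
    """Write a CSV file into the Delta lake, appending after the first load."""
    mode = "overwrite" if first_load else "append"
    df = spark.read.option("header", "true").csv(csv_path)
    df.write.format("delta").mode(mode).save(lake_path)
    return mode


print(commit_file(0))  # written by the initial load
print(commit_file(1))  # written by the append
```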

This code creates a Parquet file and a _delta_log/00000000000000000001.json file. The incremental_data_lake contains these files now:

The Delta lake contains 10 rows of data after the file is loaded:

Time travel

Delta lets you time travel and explore the state of the data lake as of a given data load. Let’s write a query to examine the incrementally updating Delta data lake after the first data load (ignoring the second data load).

The option("versionAsOf", 0) tells Delta to only grab the files in _delta_log/00000000000000000000.json and ignore the files in _delta_log/00000000000000000001.json.
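In PySpark, a read pinned to the first load could be sketched as follows (the lake path is a placeholder):

```python
def read_version(spark, lake_path, version=0):
    """Read the Delta lake as of a given version number."""
    return spark.read.format("delta").option("versionAsOf", version).load(lake_path)
```

With the two loads described above, version 0 should contain only the rows from the first file.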

Let’s say you’re training a machine learning model off of a data lake and want to hold the data constant while experimenting. Delta lake makes it easy to use a single version of the data when you’re training your model.

You can easily access a full history of the Delta lake transaction log.

The schema of the Delta history table is as follows:

We can also grab a Delta table version by timestamp.

This is the same as grabbing version 1 of our Delta table (examine the transaction log history output to see why):
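A sketch of the timestamp-based read in PySpark; the timestamp value is left as a parameter because the original one is not shown in this copy.

```python
def read_at(spark, lake_path, timestamp):
    """Read the Delta lake as it was at `timestamp` (a date or timestamp string)."""
    return spark.read.format("delta").option("timestampAsOf", timestamp).load(lake_path)
```

Delta resolves the timestamp to the latest version committed at or before it, which is why a timestamp after the second load returns the same data as version 1.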

This blog post just scratches the surface on the host of features offered by Delta Lake.

In the coming blog posts we’ll explore how to compact Delta lakes, schema evolution, schema enforcement, updates, deletes, and streaming.


Implementing ETL Logging on Lakehouse using Delta Lake's Time Travel capability

By: Fikrat Azizov | Updated: 2022-06-10

This tip is part of the series dedicated to building end-to-end Lakehouse solutions leveraging Azure Synapse Analytics. In previous posts, we've explored the ways to build ETL pipelines to ingest, transform and load data into Lakehouse. The Lakehouse concept is based on the Delta Lake technology, allowing you to treat the files in Azure Data Lake like relational databases. However, what makes Delta Lake technology outstanding is its time travel functionality. In this tip, I'm going to demonstrate how to use Delta Lake's time travel functionality for ETL logging.

What is Delta Lake time travel?

Delta Lake technology uses transaction logging to preserve the history of changes to the underlying files. This allows you to go back in time and see the previous states of the rows (see Introducing Delta Time Travel for Large Scale Data Lakes for more details). This feature can be used for many different purposes, including auditing, troubleshooting, logging, etc. You can query rows by version, as well as by timestamp.

Let me illustrate this using the SalesOrderHeader Delta table I've created in this tip.

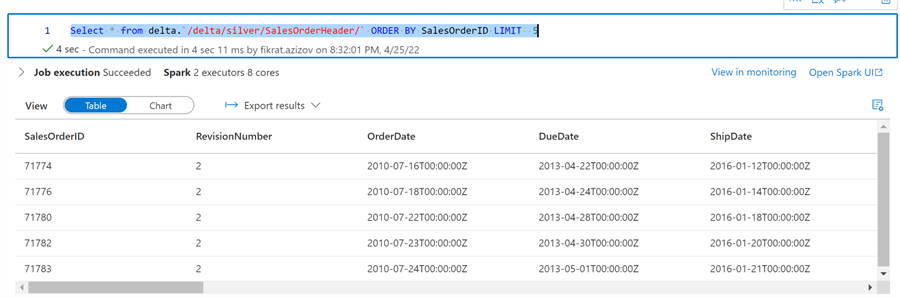

Add a Spark pool and create a Spark notebook with SparkSQL language (see Microsoft documentation for more details). Add a cell with the following command to browse a few rows:

Notice that I'm treating the location of the Delta tables as the table names in a relational database. There's an alternative way of querying Delta tables that involves prior registration of these tables in Delta Lake's metastore (see this Delta Lake documentation for more details).

Here are the query results:

Add another cell to simulate updating a single row in the table:

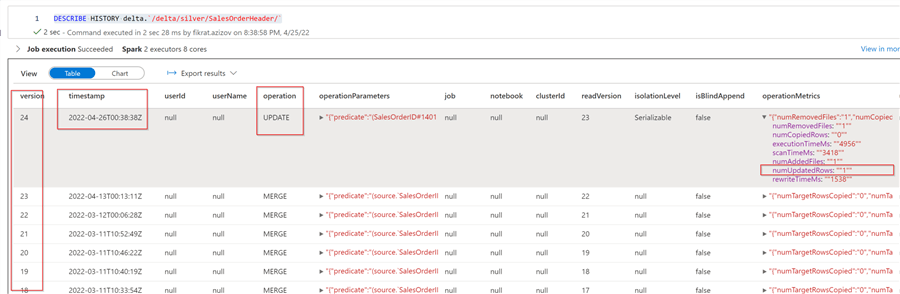

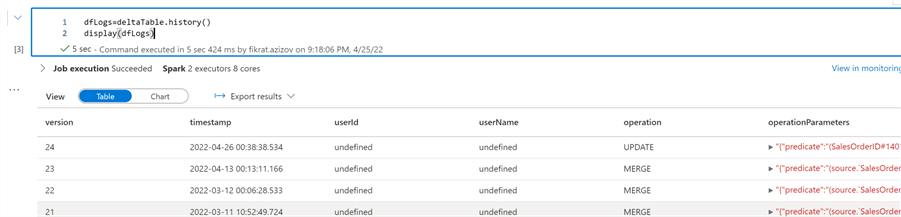

Now let's see the history of the changes to this table by using the following command:

As you can see from the below screenshot, we've got multiple changes, with the top record being the most recent change:

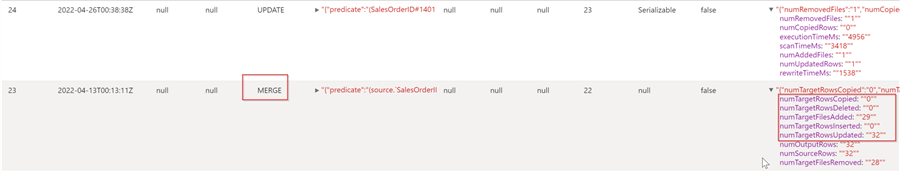

Notice that the results also contain the transaction timestamp, operation type and operation metrics columns, among other details. The operationMetrics column is in JSON format, and it includes more granular transaction details, like the number of updated rows. As you can see from the query results, the previous changes have been caused by the merge operation, which is because we've selected an upsert method to populate these tables from Mapping Data Flow. Let's also examine the operationMetrics details for the Merge operation:

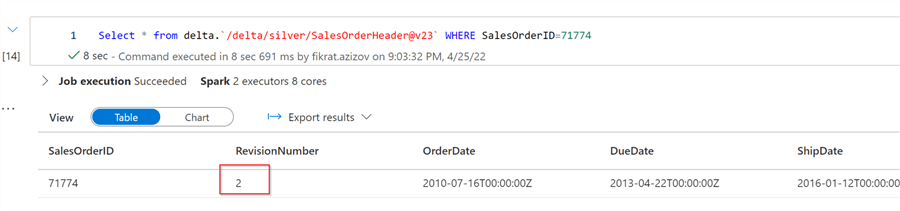

Notice the structure of the operationMetrics column is slightly different for the Merge operation. We can also get the table's past state as of version 23, using the following command:
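The commands in this section did not survive extraction. As a sketch, a path-based query and its time-travel variant could be built like this; the folder shown in the usage lines is a hypothetical location, not the one used in the original tip.

```python
def delta_path_query(path, version=None, limit=None):
    """Build a SELECT against a Delta table addressed by its storage path."""
    sql = f"SELECT * FROM delta.`{path}`"
    if version is not None:
        sql += f" VERSION AS OF {int(version)}"
    if limit is not None:
        sql += f" LIMIT {int(limit)}"
    return sql


# Hypothetical path; replace it with the location of your own table.
TABLE_PATH = "/delta/silver/SalesOrderHeader/"
print(delta_path_query(TABLE_PATH, limit=3))      # browse a few rows
print(delta_path_query(TABLE_PATH, version=23))   # state as of version 23
```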

Here's the screenshot:

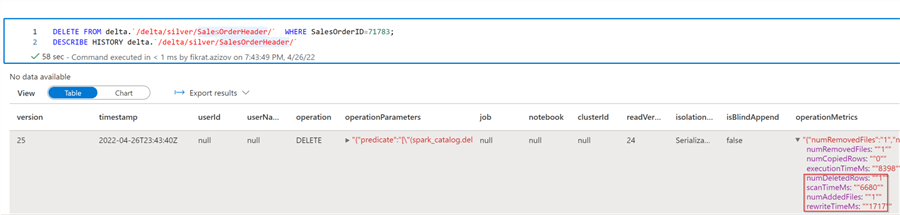

Finally, let's explore the Delete command by using the following commands:

Now that we know how the time travel functionality works, let's see how this can be applied for ETL logging purposes.

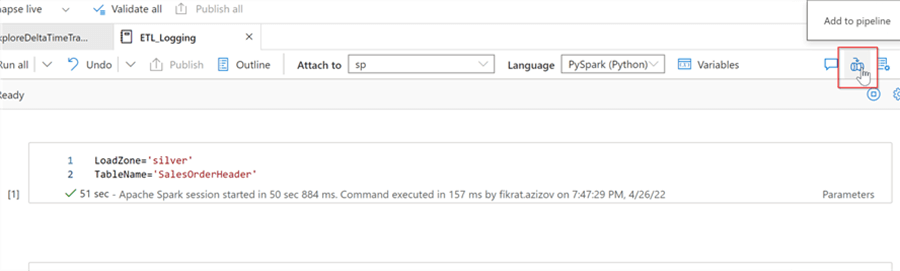

The ETL logging notebook

Let's create another Spark notebook with PySpark language. I'm going to use an alternative way of querying Delta Lake, which involves the Delta Lake API. Add the parameter-type cell with the following parameters:

Add another cell with the following command:

These commands build the table path from the parameters and create a Delta table object based on that path.

Now we can use the history method and assign the results to a data frame, as follows:
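The cells themselves are missing from this copy. The sketch below shows one way they might fit together; the parameter names and the storage URL in the usage line are hypothetical, and `DeltaTable.forPath(...).history()` is the Delta Lake API call for reading the commit history.

```python
def build_table_path(data_lake_path, table_folder, table_name):
    """Join the notebook parameters into the Delta table location."""
    parts = [data_lake_path.rstrip("/"), table_folder.strip("/"), table_name.strip("/")]
    return "/".join(part for part in parts if part) + "/"


def load_history(spark, table_path):
    """Create a Delta table object for the path and return its history DataFrame."""
    # Imported here so the helper above works even without delta-spark installed.
    from delta.tables import DeltaTable

    return DeltaTable.forPath(spark, table_path).history()


# Hypothetical parameter values.
print(build_table_path("abfss://lake@account.dfs.core.windows.net/", "delta/silver", "SalesOrderHeader"))
```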

Here's the screenshot with query results:

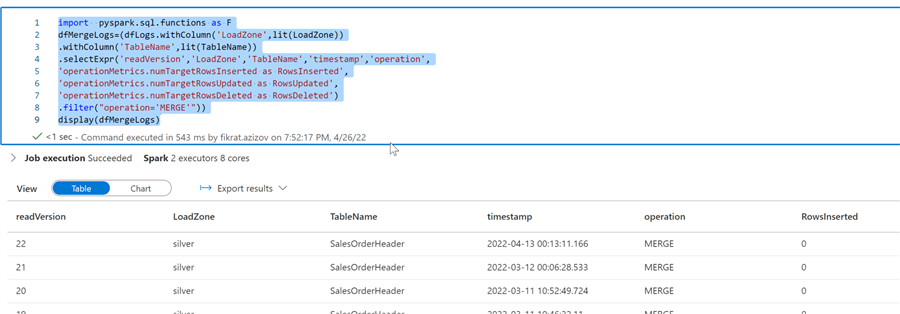

And let's run the following commands to get merge command stats:

The above command creates a new data frame by extracting granular transaction details from the operationMetrics column. Here's the screenshot:

Let's get details for update and delete commands, using similar methods:

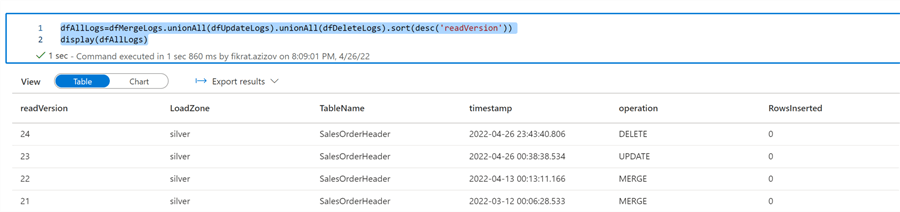

Finally, let's union all three data frames and order the results by change versions, using the following command:
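The original data frame code is not shown in this copy. The sketch below swaps in a plain-Python version of the same idea: it takes history rows as dictionaries (for example `[row.asDict() for row in history_df.collect()]`), pulls the row counts out of `operationMetrics` for merge, update, and delete commits, and returns one combined list ordered by version. The output column names are hypothetical.

```python
# Metric keys that Delta records in operationMetrics for each operation.
METRIC_KEYS = {
    "MERGE": {
        "rows_inserted": "numTargetRowsInserted",
        "rows_updated": "numTargetRowsUpdated",
        "rows_deleted": "numTargetRowsDeleted",
    },
    "UPDATE": {"rows_updated": "numUpdatedRows"},
    "DELETE": {"rows_deleted": "numDeletedRows"},
}
OUTPUT_COLUMNS = ("rows_inserted", "rows_updated", "rows_deleted")


def etl_log_rows(history_rows):
    """Flatten merge/update/delete metrics into uniform log rows."""
    logs = []
    for row in history_rows:
        keys = METRIC_KEYS.get(row["operation"])
        if keys is None:
            continue  # skip operations we do not log, such as OPTIMIZE
        metrics = row.get("operationMetrics") or {}
        entry = {"version": row["version"], "operation": row["operation"]}
        for column in OUTPUT_COLUMNS:
            # Metric values are stored as strings; missing ones count as 0.
            entry[column] = int(metrics.get(keys.get(column, ""), 0))
        logs.append(entry)
    return sorted(logs, key=lambda entry: entry["version"])
```

Because every entry has the same columns, the combined list plays the role of the union of the three data frames.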

And now we can persist the logs in the Delta table. First, run this command to create a database:

And then persist the data frame in the managed Delta table by using the following command:

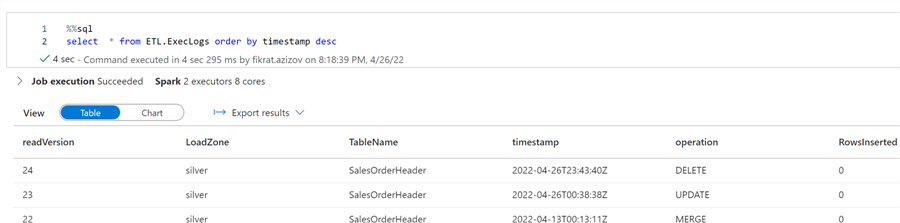

Let's do a quick validation:

The last touch here would be adding the limit(1) method to the final write command, to ensure that historical rows are not appended on subsequent executions:
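A sketch of the final write with that change is shown below. The database and table names are hypothetical, and the explicit descending sort is an assumption that makes `limit(1)` pick the most recent change.

```python
def persist_latest_log(spark, logs_df, database="etl_logs", table="table_logs"):
    """Append only the most recent log row to a managed Delta table."""
    spark.sql(f"CREATE DATABASE IF NOT EXISTS {database}")
    latest = logs_df.orderBy("version", ascending=False).limit(1)
    target = f"{database}.{table}"
    latest.write.format("delta").mode("append").saveAsTable(target)
    return target
```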

Congratulations, we've built a notebook with ETL logging!

The ETL logging orchestration

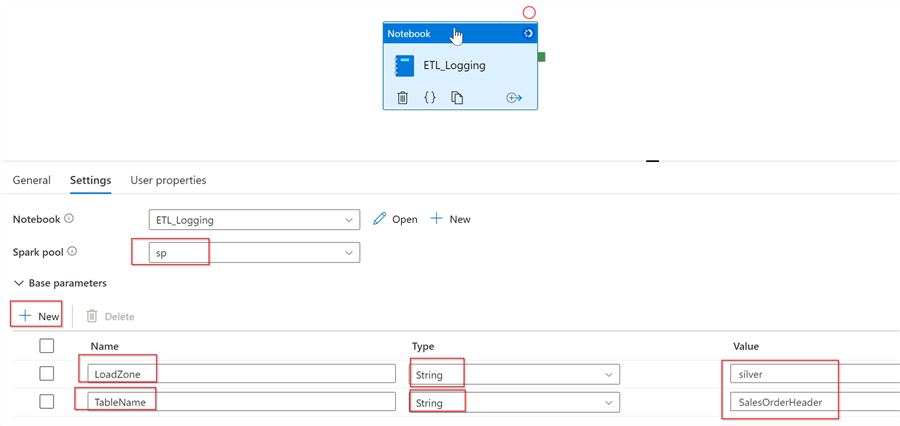

The notebook we've created above can be kicked off from the data integration pipeline, and we can create that pipeline directly from this notebook. Just use the Add to pipeline button, as follows:

Once inside the pipeline, select the Notebook activity, select Spark pool and add the required parameters, as follows:

This activity could be included in a dedicated pipeline or be added to the existing ETL pipeline, depending on your overall ETL orchestration needs.

- Read: Common Data Warehouse Development Challenges

- Read: What is Delta Lake

- Read: Linux Foundation Delta Lake overview

- Read: Create, develop, and maintain Synapse notebooks in Azure Synapse Analytics



Databricks Knowledge Base


Compare two versions of a Delta table

Use time travel to compare two versions of a Delta table.

Written by mathan.pillai

Delta Lake supports time travel, which allows you to query an older snapshot of a Delta table.

One common use case is to compare two versions of a Delta table in order to identify what changed.

For more details on time travel, please review the Delta Lake time travel documentation.

Identify all differences

You can use a SQL SELECT query to identify all differences between two versions of a Delta table.

You need to know the name of the table and the version numbers of the snapshots you want to compare.

For example, if you had a table named “schedule” and you wanted to compare version 2 with the original version, your query would look like this:
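The query is missing from this copy. A sketch using `EXCEPT ALL` between two snapshots of the `schedule` table from the example above:

```python
def diff_sql(table, newer, older):
    """Rows present in version `newer` that are not in version `older`."""
    return (
        f"SELECT * FROM {table} VERSION AS OF {int(newer)}\n"
        "EXCEPT ALL\n"
        f"SELECT * FROM {table} VERSION AS OF {int(older)}"
    )


print(diff_sql("schedule", 2, 0))
```

This form returns rows that exist in the newer version only. To also see rows that were removed, run the query a second time with the two versions swapped.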

Identify files added to a specific version

You can use a Scala query to retrieve a list of files that were added to a specific version of the Delta table.

In this example, we are getting a list of all files that were added to version 2 of the Delta table.

00000000000000000002.json records the actions committed in version 2, including the data files that the commit added.

After reading the file, we keep only the add entries, so the displayed list only includes files added in version 2.
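The original Scala snippet is not included here. A PySpark sketch of the same steps follows; the table path in the usage line is a hypothetical location.

```python
def commit_log_path(table_path, version):
    """Path of the transaction log file for one version of a Delta table."""
    return f"{table_path.rstrip('/')}/_delta_log/{int(version):020d}.json"


def files_added(spark, table_path, version):
    """Return a DataFrame with the paths of files added by a commit."""
    log = spark.read.json(commit_log_path(table_path, version))
    # Each line of the log is one action; keep only the `add` actions.
    return log.where("add is not null").select("add.path")


# Hypothetical table location.
print(commit_log_path("dbfs:/delta/schedule", 2))
```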

- Microsoft Azure

- Google Cloud Platform

- Documentation

- What is Delta Lake?

Tutorial: Delta Lake

This tutorial introduces common Delta Lake operations on Databricks, including the following:

Create a table.

Upsert to a table.

Read from a table.

Display table history.

Query an earlier version of a table.

Optimize a table.

Add a Z-order index.

Vacuum unreferenced files.

You can run the example Python, R, Scala, and SQL code in this article from within a notebook attached to a Databricks cluster . You can also run the SQL code in this article from within a query associated with a SQL warehouse in Databricks SQL .

Some of the following code examples use a two-level namespace notation consisting of a schema (also called a database) and a table or view (for example, default.people10m ). To use these examples with Unity Catalog , replace the two-level namespace with Unity Catalog three-level namespace notation consisting of a catalog, schema, and table or view (for example, main.default.people10m ).

Create a table

All tables created on Databricks use Delta Lake by default.

Delta Lake is the default for all reads, writes, and table creation commands Databricks.

The preceding operations create a new managed table by using the schema that was inferred from the data. For information about available options when you create a Delta table, see CREATE TABLE .

For managed tables, Databricks determines the location for the data. To get the location, you can use the DESCRIBE DETAIL statement, for example:

Sometimes you may want to create a table by specifying the schema before inserting data. You can complete this with the following SQL commands:

In Databricks Runtime 13.3 LTS and above, you can use CREATE TABLE LIKE to create a new empty Delta table that duplicates the schema and table properties for a source Delta table. This can be especially useful when promoting tables from a development environment into production, such as in the following code example:

You can also use the DeltaTableBuilder API in Delta Lake to create tables. Compared to the DataFrameWriter APIs, this API makes it easier to specify additional information like column comments, table properties, and generated columns .

This feature is in Public Preview .

Upsert to a table

To merge a set of updates and insertions into an existing Delta table, you use the MERGE INTO statement. For example, the following statement takes data from the source table and merges it into the target Delta table. When there is a matching row in both tables, Delta Lake updates the data column using the given expression. When there is no matching row, Delta Lake adds a new row. This operation is known as an upsert .

If you specify * , this updates or inserts all columns in the target table. This assumes that the source table has the same columns as those in the target table, otherwise the query will throw an analysis error.

You must specify a value for every column in your table when you perform an INSERT operation (for example, when there is no matching row in the existing dataset). However, you do not need to update all values.

To see the results, query the table.

Read a table

You access data in Delta tables by the table name or the table path, as shown in the following examples:

Write to a table

Delta Lake uses standard syntax for writing data to tables.

To atomically add new data to an existing Delta table, use append mode as in the following examples:

To atomically replace all the data in a table, use overwrite mode as in the following examples:

Update a table

You can update data that matches a predicate in a Delta table. For example, in a table named people10m or a path at /tmp/delta/people-10m, to change an abbreviation in the gender column from M or F to Male or Female, you can run the following:

Delete from a table

You can remove data that matches a predicate from a Delta table. For instance, in a table named people10m or a path at /tmp/delta/people-10m, to delete all rows corresponding to people with a value in the birthDate column from before 1955, you can run the following:

delete removes the data from the latest version of the Delta table but does not remove it from the physical storage until the old versions are explicitly vacuumed. See vacuum for details.

Display table history

To view the history of a table, use the DESCRIBE HISTORY statement, which provides provenance information, including the table version, operation, user, and so on, for each write to a table.

Query an earlier version of the table (time travel)

Delta Lake time travel allows you to query an older snapshot of a Delta table.

To query an older version of a table, specify a version or timestamp in a SELECT statement. For example, to query version 0 from the history above, use:

For timestamps, only date or timestamp strings are accepted, for example, "2019-01-01" and "2019-01-01T00:00:00.000Z".
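As an illustration of the two forms of time travel query, here is a small sketch that builds the SQL text for a version-based or timestamp-based read. The helper name `time_travel_query` is hypothetical. The `VERSION AS OF` and `TIMESTAMP AS OF` clauses follow the syntax quoted in the Delta Lake error messages later in this document. The table name is inserted as-is, so this is only suitable for trusted input.

```python
from datetime import date, datetime
from typing import Optional, Union


def time_travel_query(
    table: str,
    version: Optional[int] = None,
    timestamp: Optional[Union[str, date, datetime]] = None,
) -> str:
    """Build a SELECT that reads `table` as of a version or a timestamp."""
    if (version is None) == (timestamp is None):
        raise ValueError("specify exactly one of version or timestamp")
    if version is not None:
        if version < 0:
            raise ValueError("version must be a non-negative integer")
        return f"SELECT * FROM {table} VERSION AS OF {version}"
    # datetime is a subclass of date, so it has to be checked first.
    if isinstance(timestamp, datetime):
        timestamp = timestamp.strftime("%Y-%m-%d %H:%M:%S")
    elif isinstance(timestamp, date):
        timestamp = timestamp.isoformat()
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


print(time_travel_query("people10m", version=0))
print(time_travel_query("people10m", timestamp=date(2019, 1, 1)))
```

The resulting strings could then be passed to a SQL client or to `spark.sql(...)` in an environment where the table exists.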

DataFrameReader options allow you to create a DataFrame from a Delta table that is fixed to a specific version of the table, for example in Python:

or, alternately:

For details, see Work with Delta Lake table history .

Optimize a table

Once you have performed multiple changes to a table, you might have a lot of small files. To improve the speed of read queries, you can use OPTIMIZE to collapse small files into larger ones:

Z-order by columns

To improve read performance further, you can co-locate related information in the same set of files by Z-Ordering. This co-locality is automatically used by Delta Lake data-skipping algorithms to dramatically reduce the amount of data that needs to be read. To Z-Order data, you specify the columns to order on in the ZORDER BY clause. For example, to co-locate by gender, run:

For the full set of options available when running OPTIMIZE, see Compact data files with optimize on Delta Lake.

Clean up snapshots with VACUUM

Delta Lake provides snapshot isolation for reads, which means that it is safe to run OPTIMIZE even while other users or jobs are querying the table. Eventually, however, you should clean up old snapshots. You can do this by running the VACUUM command:

For details on using VACUUM effectively, see Remove unused data files with vacuum .

Time Travel with Delta Table in PySpark


Delta Lake provides time travel functionality to retrieve data as of a certain point in time or a certain version. You can do this with either of the following two options when reading a Delta table into a DataFrame:

- versionAsOf - an integer value that specifies a version.

- timestampAsOf - a timestamp or date string.

This code snippet shows you how to use them in Spark DataFrameReader APIs. It includes three examples:

- Query data as of version 1

- Query data as of 30 days ago (using a value computed via Spark SQL)

- Query data as of a certain timestamp ('2022-09-01 12:00:00.999999UTC+10:00')
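The original snippet is not reproduced here, so the following is a hedged sketch of how the options for these three reads might be assembled. The helper names (`version_option`, `timestamp_option`, `days_ago_option`, `read_as_of`) are hypothetical, and the "30 days ago" value is computed with Python's `datetime` rather than Spark SQL. Only `read_as_of` touches Spark, and it assumes an existing SparkSession with Delta Lake configured.

```python
from datetime import datetime, timedelta
from typing import Dict


def version_option(version: int) -> Dict[str, str]:
    """Reader option that pins the read to a table version."""
    return {"versionAsOf": str(version)}


def timestamp_option(moment: datetime) -> Dict[str, str]:
    """Reader option that pins the read to a point in time."""
    return {"timestampAsOf": moment.strftime("%Y-%m-%d %H:%M:%S")}


def days_ago_option(days: int, now: datetime) -> Dict[str, str]:
    """Reader option for the table state `days` days before `now`."""
    return timestamp_option(now - timedelta(days=days))


def read_as_of(spark, path: str, options: Dict[str, str]):
    """Load the Delta table at `path` with the given time travel options.

    `spark` is assumed to be a SparkSession with Delta Lake available.
    """
    return spark.read.format("delta").options(**options).load(path)


print(version_option(1))
print(days_ago_option(30, now=datetime(2022, 9, 30, 12, 0, 0)))
print(timestamp_option(datetime(2022, 9, 1, 12, 0, 0)))
```

In a Spark session this would be used as, for example, `read_as_of(spark, "/path/to/table", version_option(1))`, where the path is a placeholder.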

You may encounter an error if the timestamp is earlier than the earliest commit:

pyspark.sql.utils.AnalysisException: The provided timestamp (2022-08-03 00:00:00.0) is before the earliest version available to this table (2022-08-27 10:53:18.213). Please use a timestamp after 2022-08-27 10:53:18.

Similarly, if the provided timestamp is later than the last commit, you may encounter another issue like the following:

pyspark.sql.utils.AnalysisException: The provided timestamp: 2022-09-07 00:00:00.0 is after the latest commit timestamp of 2022-08-27 11:30:47.185. If you wish to query this version of the table, please either provide the version with "VERSION AS OF 1" or use the exact timestamp of the last commit: "TIMESTAMP AS OF '2022-08-27 11:30:47'".
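To make the two failure modes concrete, here is a toy model of how a requested timestamp could map to a table version. It is not Delta Lake's implementation: the function `resolve_version` is hypothetical, the commit history is rebuilt from the timestamps in the messages above (assuming versions 0 and 1), and the real engine may round sub-second values differently.

```python
from bisect import bisect_right
from datetime import datetime
from typing import List, Tuple

Commit = Tuple[int, datetime]  # (version, commit timestamp)


def resolve_version(commits: List[Commit], requested: datetime) -> int:
    """Return the latest version committed at or before `requested`."""
    if not commits:
        raise ValueError("table has no commits")
    ordered = sorted(commits, key=lambda commit: commit[1])
    earliest, latest = ordered[0][1], ordered[-1][1]
    if requested < earliest:
        raise ValueError(
            f"timestamp {requested} is before the earliest version ({earliest})"
        )
    if requested > latest:
        raise ValueError(
            f"timestamp {requested} is after the latest commit ({latest})"
        )
    timestamps = [timestamp for _, timestamp in ordered]
    # Index of the last commit whose timestamp is <= requested.
    index = bisect_right(timestamps, requested) - 1
    return ordered[index][0]


# Assumed history matching the error messages quoted above.
history = [
    (0, datetime(2022, 8, 27, 10, 53, 18, 213000)),
    (1, datetime(2022, 8, 27, 11, 30, 47, 185000)),
]

print(resolve_version(history, datetime(2022, 8, 27, 11, 0, 0)))
```

When the requested time falls outside the commit range, reading by version number avoids the problem, as the second message suggests.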


Delta Lake is an open source storage layer that makes data lakes more reliable. This topic describes the Delta Lake table format.

Dremio version 14.0.0 and later provides read-only support for the Delta Lake table format.

Delta Lake is an open source table format that provides transactional consistency and increased scale for datasets by creating a consistent definition of datasets and including schema evolution changes and data mutations. With Delta Lake, updates to the datasets are viewed in a consistent manner across any application consuming the datasets, and users are kept from seeing an inconsistent view of data during transformation. This creates a consistent and reliable view of datasets in the data lake as they are updated and evolved.

Data consistency is enabled by creating a series of manifest files which define the schema and data for a given point in time as well as a transaction log that defines an ordered record of every transaction on the dataset. By reading the transaction log and manifest files, applications are guaranteed to see a consistent view of data at any point in time and users can ensure intermediate changes are invisible until a write operation is complete.
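The idea of an ordered transaction log can be sketched in a few lines. This is a simplified model and not the reader used by Dremio or Delta Lake: each commit is assumed to list the data files it adds and removes, the file names are made up, and `snapshot_at` is a hypothetical helper. Replaying the log up to a version yields the set of files visible at that version.

```python
from typing import Dict, List, Set

Commit = Dict[str, List[str]]  # {"add": [files], "remove": [files]}


def snapshot_at(log: List[Commit], version: int) -> Set[str]:
    """Replay commits 0..version and return the files visible afterwards."""
    if not 0 <= version < len(log):
        raise ValueError(f"version {version} is not in the log")
    files: Set[str] = set()
    for commit in log[: version + 1]:
        files.difference_update(commit.get("remove", []))
        files.update(commit.get("add", []))
    return files


# Hypothetical log: an initial write, an append, then a rewrite of one file.
log = [
    {"add": ["part-000.parquet"]},
    {"add": ["part-001.parquet"]},
    {"add": ["part-002.parquet"], "remove": ["part-000.parquet"]},
]

for version in range(len(log)):
    print(version, sorted(snapshot_at(log, version)))
```

Because earlier commits are never rewritten, a reader that stops at an older version sees exactly the files that were valid at that time, which is what makes time travel possible.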

Delta Lake provides the following benefits:

Large-scale support: Efficient metadata handling enables applications to readily process petabyte-sized datasets with millions of files

Schema consistency: All applications processing a dataset operate on a consistent and shared definition of the dataset metadata such as columns, data types, partitions.

Supported Data Sources

The Delta Lake table format is supported with the following sources in the Parquet file format:

AWS Glue

Azure Storage

Hive (supported in Dremio 24.0 and later)

Analyzing Delta Lake Datasets

Dremio supports analyzing Delta Lake datasets on the sources listed above through a native and high-performance reader. It automatically identifies which datasets are saved in the Delta Lake format, and imports table information from the Delta Lake manifest files. Dataset promotion is seamless and operates the same as any other data format in Dremio, where users can promote file system directories containing a Delta Lake dataset to a table manually or automatically by querying the directory. When using Delta Lake format, Dremio supports datasets of any size including petabyte-sized datasets with billions of files.

Dremio reads Delta Lake tables created or updated by another engine, such as Spark, with transactional consistency. Dremio automatically identifies tables that are in the Delta Lake format and selects the appropriate format for the user.

Refreshing Metadata

Metadata refresh is required to query the latest version of a Delta Lake table. You can wait for an automatic refresh of metadata or manually refresh it.

Example of Querying a Delta Lake Table

Perform the following steps to query a Delta Lake table:

In Dremio, open the Datasets page.

Go to the data source that contains the Delta Lake table.

If the data source is not AWS Glue or Hive, follow these steps:

- Click Save .

Run a query on the Delta Lake table to see the results.

Update the Delta Lake table in the data source.

Go back to the Datasets UI and wait for the table metadata to refresh or manually refresh it using the syntax below.

The following statement shows refreshing metadata of a Delta Lake table.

Run the previous query on the Delta Lake table to retrieve the results from the updated Delta Lake table.

Table metadata and time travel queries

Dremio supports time travel on Delta Lake tables in version 24.2.0 and later. You can query a Delta table's history using the following SQL commands:

And get the data from a specific timestamp with the following SQL:

Limitations

- Creating Delta Lake tables is not supported.

- DML operations are not supported.

- Incremental reflections are not supported.

- Metadata refresh is required to query the latest version of a Delta Lake table.

- Only Delta Lake tables with minReaderVersion 1 or 2 can be read. Column Mapping is supported with minReaderVersion 2.


Understanding & Using Time Travel

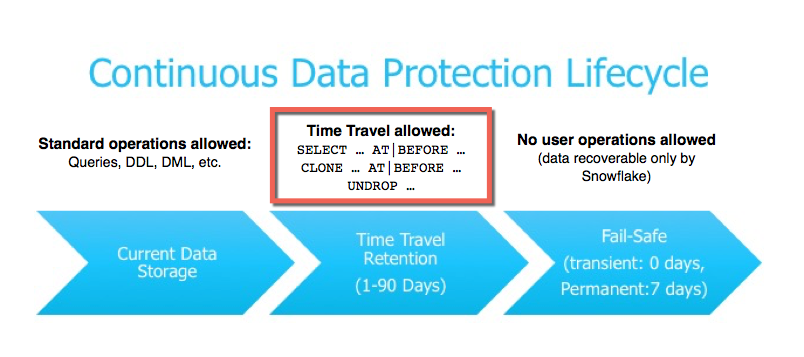

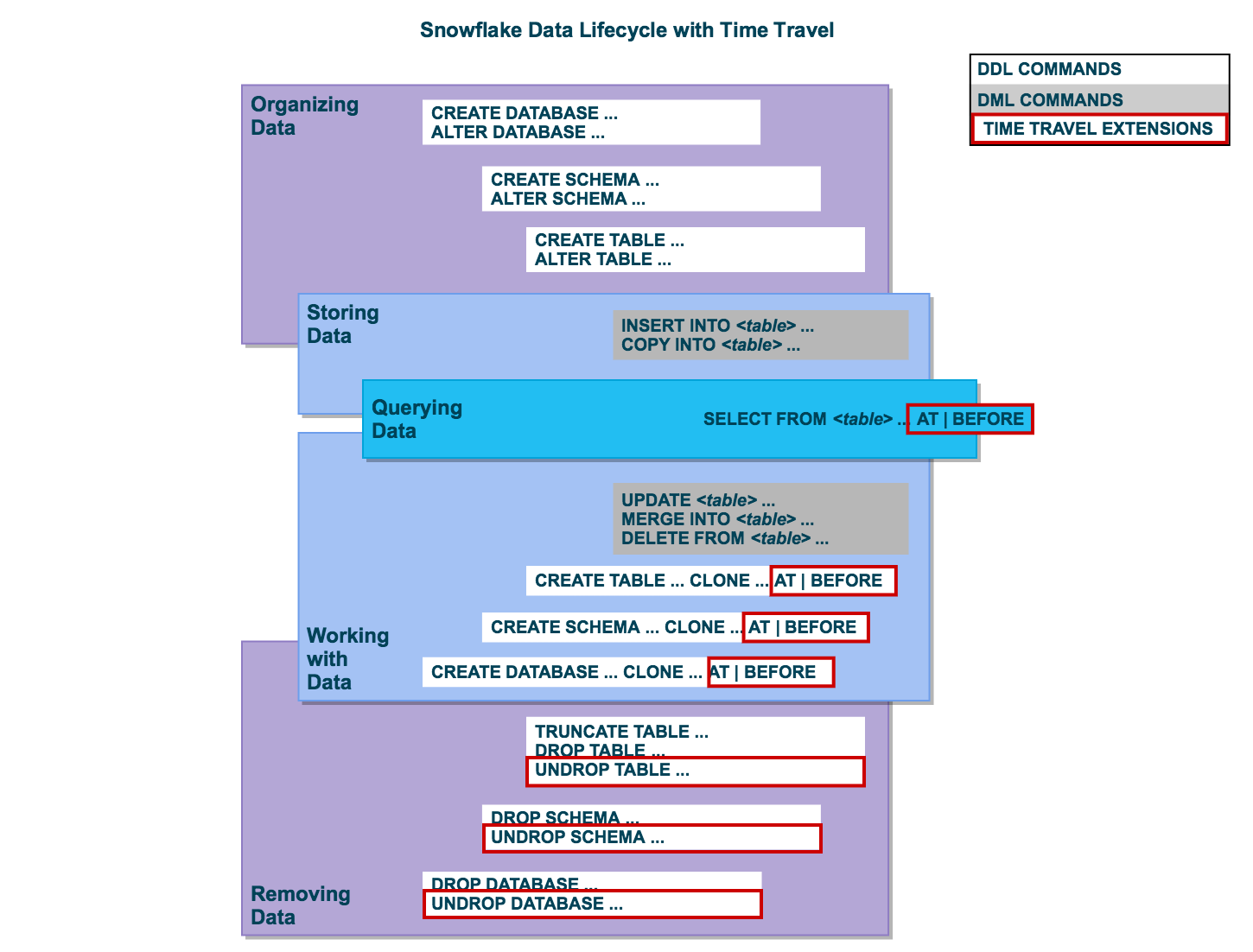

Snowflake Time Travel enables accessing historical data (i.e. data that has been changed or deleted) at any point within a defined period. It serves as a powerful tool for performing the following tasks:

Restoring data-related objects (tables, schemas, and databases) that might have been accidentally or intentionally deleted.

Duplicating and backing up data from key points in the past.

Analyzing data usage/manipulation over specified periods of time.

Introduction to Time Travel

Using Time Travel, you can perform the following actions within a defined period of time:

Query data in the past that has since been updated or deleted.

Create clones of entire tables, schemas, and databases at or before specific points in the past.

Restore tables, schemas, and databases that have been dropped.

When querying historical data in a table or non-materialized view, the current table or view schema is used. For more information, see Usage notes for AT | BEFORE.

After the defined period of time has elapsed, the data is moved into Snowflake Fail-safe and these actions can no longer be performed.

A long-running Time Travel query will delay moving any data and objects (tables, schemas, and databases) in the account into Fail-safe, until the query completes.

Time Travel SQL Extensions

To support Time Travel, the following SQL extensions have been implemented:

AT | BEFORE clause, which can be specified in SELECT statements and CREATE … CLONE commands (immediately after the object name). The clause uses one of the following parameters to pinpoint the exact historical data you wish to access:

TIMESTAMP

OFFSET (time difference in seconds from the present time)

STATEMENT (identifier for statement, e.g. query ID)

UNDROP command for tables, schemas, and databases.

Data Retention Period

A key component of Snowflake Time Travel is the data retention period.

When data in a table is modified, including deletion of data or dropping an object containing data, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved and, therefore, Time Travel operations (SELECT, CREATE … CLONE, UNDROP) can be performed on the data.

The standard retention period is 1 day (24 hours) and is automatically enabled for all Snowflake accounts:

- For Snowflake Standard Edition, the retention period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. databases, schemas, and tables).

- For Snowflake Enterprise Edition (and higher):

  - For transient databases, schemas, and tables, the retention period can be set to 0 (or unset back to the default of 1 day). The same is also true for temporary tables.

  - For permanent databases, schemas, and tables, the retention period can be set to any value from 0 up to 90 days.

A retention period of 0 days for an object effectively disables Time Travel for the object.

When the retention period ends for an object, the historical data is moved into Snowflake Fail-safe:

Historical data is no longer available for querying.

Past objects can no longer be cloned.

Past objects that were dropped can no longer be restored.

To specify the data retention period for Time Travel:

The DATA_RETENTION_TIME_IN_DAYS object parameter can be used by users with the ACCOUNTADMIN role to set the default retention period for your account.

The same parameter can be used to explicitly override the default when creating a database, schema, and individual table.

The data retention period for a database, schema, or table can be changed at any time.

The MIN_DATA_RETENTION_TIME_IN_DAYS account parameter can be set by users with the ACCOUNTADMIN role to set a minimum retention period for the account. This parameter does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. However it may change the effective data retention time. When this parameter is set at the account level, the effective minimum data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).
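The MAX rule above is simple enough to check with a small worked example. The function name `effective_retention_days` is hypothetical; the sketch only mirrors the formula stated in the text and does not query Snowflake.

```python
def effective_retention_days(
    data_retention_days: int, min_retention_days: int = 0
) -> int:
    """Effective period: MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS)."""
    if data_retention_days < 0 or min_retention_days < 0:
        raise ValueError("retention periods cannot be negative")
    return max(data_retention_days, min_retention_days)


# An object set to 0 days still keeps 7 days when the account minimum is 7.
print(effective_retention_days(0, min_retention_days=7))
# An object set to 30 days is unaffected by a lower account minimum.
print(effective_retention_days(30, min_retention_days=7))
```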

Enabling and Disabling Time Travel

No tasks are required to enable Time Travel. It is automatically enabled with the standard, 1-day retention period.

However, you may wish to upgrade to Snowflake Enterprise Edition to enable configuring longer data retention periods of up to 90 days for databases, schemas, and tables. Note that extended data retention requires additional storage which will be reflected in your monthly storage charges. For more information about storage charges, see Storage Costs for Time Travel and Fail-safe.

Time Travel cannot be disabled for an account. A user with the ACCOUNTADMIN role can set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that all databases (and subsequently all schemas and tables) created in the account have no retention period by default; however, this default can be overridden at any time for any database, schema, or table.

A user with the ACCOUNTADMIN role can also set the MIN_DATA_RETENTION_TIME_IN_DAYS at the account level. This parameter setting enforces a minimum data retention period for databases, schemas, and tables. Setting MIN_DATA_RETENTION_TIME_IN_DAYS does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. It may, however, change the effective data retention period for objects. When MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).

Time Travel can be disabled for individual databases, schemas, and tables by specifying DATA_RETENTION_TIME_IN_DAYS with a value of 0 for the object. However, if DATA_RETENTION_TIME_IN_DAYS is set to a value of 0, and MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level and is greater than 0, the higher value setting takes precedence.

Before setting DATA_RETENTION_TIME_IN_DAYS to 0 for any object, consider whether you wish to disable Time Travel for the object, particularly as it pertains to recovering the object if it is dropped. When an object with no retention period is dropped, you will not be able to restore the object.

As a general rule, we recommend maintaining a value of (at least) 1 day for any given object.

If the Time Travel retention period is set to 0, any modified or deleted data is moved into Fail-safe (for permanent tables) or deleted (for transient tables) by a background process. This may take a short time to complete. During that time, the TIME_TRAVEL_BYTES in table storage metrics might contain a non-zero value even when the Time Travel retention period is 0 days.

Specifying the Data Retention Period for an Object

By default, the maximum retention period is 1 day (i.e. one 24 hour period). With Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

When creating a table, schema, or database, the account default can be overridden using the DATA_RETENTION_TIME_IN_DAYS parameter in the command.

If a retention period is specified for a database or schema, the period is inherited by default for all objects created in the database/schema.

A minimum retention period can be set on the account using the MIN_DATA_RETENTION_TIME_IN_DAYS parameter. If this parameter is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).

Changing the Data Retention Period for an Object

If you change the data retention period for a table, the new retention period impacts all data that is active, as well as any data currently in Time Travel. The impact depends on whether you increase or decrease the period:

Increasing the retention period causes the data currently in Time Travel to be retained for the longer time period.

For example, if you have a table with a 10-day retention period and increase the period to 20 days, data that would have been removed after 10 days is now retained for an additional 10 days before moving into Fail-safe.

Note that this doesn’t apply to any data that is older than 10 days and has already moved into Fail-safe.

Decreasing the retention period reduces the amount of time data is retained in Time Travel:

For active data modified after the retention period is reduced, the new shorter period applies.

For data that is currently in Time Travel:

If the data is still within the new shorter period, it remains in Time Travel. If the data is outside the new period, it moves into Fail-safe.

For example, if you have a table with a 10-day retention period and you decrease the period to 1-day, data from days 2 to 10 will be moved into Fail-safe, leaving only the data from day 1 accessible through Time Travel.

However, the process of moving the data from Time Travel into Fail-safe is performed by a background process, so the change is not immediately visible. Snowflake guarantees that the data will be moved, but does not specify when the process will complete; until the background process completes, the data is still accessible through Time Travel.
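The 10-day to 1-day example above can be worked through with a short sketch. It assumes a permanent table, measures the age of historical data in whole days, and ignores the timing of the background process. The function name `apply_shorter_retention` is hypothetical.

```python
from typing import Dict, Iterable, List


def apply_shorter_retention(
    ages_in_days: Iterable[int], new_retention_days: int
) -> Dict[str, List[int]]:
    """Split historical data by age into what stays in Time Travel and what moves on."""
    outcome: Dict[str, List[int]] = {"time_travel": [], "fail_safe": []}
    for age in ages_in_days:
        # Data still within the new, shorter period remains in Time Travel.
        bucket = "time_travel" if age <= new_retention_days else "fail_safe"
        outcome[bucket].append(age)
    return outcome


# Historical data from days 1 through 10, retention reduced to 1 day.
result = apply_shorter_retention(range(1, 11), new_retention_days=1)
print(result)
```

Only the data from day 1 remains reachable through Time Travel, which matches the example in the text.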

If you change the data retention period for a database or schema, the change only affects active objects contained within the database or schema. Any objects that have been dropped (for example, tables) remain unaffected.

For example, if you have a schema s1 with a 90-day retention period and table t1 is in schema s1, table t1 inherits the 90-day retention period. If you drop table s1.t1, t1 is retained in Time Travel for 90 days. Later, if you change the schema’s data retention period to 1 day, the retention period for the dropped table t1 is unchanged. Table t1 will still be retained in Time Travel for 90 days.

To alter the retention period of a dropped object, you must undrop the object, then alter its retention period.

To change the retention period for an object, use the appropriate ALTER <object> command. For example, to change the retention period for a table:

Changing the retention period for your account or individual objects changes the value for all lower-level objects that do not have a retention period explicitly set. For example:

If you change the retention period at the account level, all databases, schemas, and tables that do not have an explicit retention period automatically inherit the new retention period.

If you change the retention period at the schema level, all tables in the schema that do not have an explicit retention period inherit the new retention period.

Keep this in mind when changing the retention period for your account or any objects in your account because the change might have Time Travel consequences that you did not anticipate or intend. In particular, we do not recommend changing the retention period to 0 at the account level.
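The inheritance behavior described above can be modeled as a lookup that walks up the object hierarchy until it finds an explicit setting. This is a minimal sketch, assuming a simple parent chain of account, database, schema, and table; the class `Node` and the object names are hypothetical, and dropped objects are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """An account, database, schema, or table in the hierarchy."""

    name: str
    parent: Optional["Node"] = None
    retention_days: Optional[int] = None  # None means not explicitly set

    def effective_retention(self) -> int:
        """Use the nearest explicit setting, walking up toward the account."""
        node = self
        while node is not None:
            if node.retention_days is not None:
                return node.retention_days
            node = node.parent
        return 1  # standard 1-day default when nothing is set


account = Node("account", retention_days=1)
database = Node("db", parent=account)
schema = Node("s1", parent=database, retention_days=90)
inherits = Node("t1", parent=schema)
explicit = Node("t2", parent=schema, retention_days=30)

print(inherits.effective_retention(), explicit.effective_retention())

# Changing the schema changes only tables without an explicit setting.
schema.retention_days = 1
print(inherits.effective_retention(), explicit.effective_retention())
```

The second print shows why a change at a higher level can have unintended consequences: every object without its own setting follows it immediately.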

Dropped Containers and Object Retention Inheritance

Currently, when a database is dropped, the data retention period for child schemas or tables, if explicitly set to be different from the retention of the database, is not honored. The child schemas or tables are retained for the same period of time as the database.

Similarly, when a schema is dropped, the data retention period for child tables, if explicitly set to be different from the retention of the schema, is not honored. The child tables are retained for the same period of time as the schema.

To honor the data retention period for these child objects (schemas or tables), drop them explicitly before you drop the database or schema.

Querying Historical Data

When any DML operations are performed on a table, Snowflake retains previous versions of the table data for a defined period of time. This enables querying earlier versions of the data using the AT | BEFORE clause.

This clause supports querying data either exactly at or immediately preceding a specified point in the table’s history within the retention period. The specified point can be time-based (e.g. a timestamp or time offset from the present) or it can be the ID for a completed statement (e.g. SELECT or INSERT).

For example:

The following query selects historical data from a table as of the date and time represented by the specified timestamp:

SELECT * FROM my_table AT(TIMESTAMP => 'Fri, 01 May 2015 16:20:00 -0700'::timestamp_tz);

The following query selects historical data from a table as of 5 minutes ago:

SELECT * FROM my_table AT(OFFSET => -60*5);

The following query selects historical data from a table up to, but not including, any changes made by the specified statement:

SELECT * FROM my_table BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

If the TIMESTAMP, OFFSET, or STATEMENT specified in the AT | BEFORE clause falls outside the data retention period for the table, the query fails and returns an error.
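Because OFFSET is expressed in seconds relative to the present, it is easy to get the sign or the unit wrong. The following sketch builds the query text from a Python `timedelta`. The helper name `at_offset_query` is hypothetical, and the table name is inserted as-is, so use it only with trusted input.

```python
from datetime import timedelta


def at_offset_query(table: str, ago: timedelta) -> str:
    """Build a SELECT that reads `table` as it was `ago` before now."""
    seconds = int(ago.total_seconds())
    if seconds <= 0:
        raise ValueError("ago must be a positive duration")
    # OFFSET is a negative number of seconds from the present time.
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds});"


print(at_offset_query("my_table", timedelta(minutes=5)))
```

For five minutes this produces an offset of -300, which is the same value as the -60*5 expression in the example above.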

Cloning Historical Objects

In addition to queries, the AT | BEFORE clause can be used with the CLONE keyword in the CREATE command for a table, schema, or database to create a logical duplicate of the object at a specified point in the object’s history.

The following CREATE TABLE statement creates a clone of a table as of the date and time represented by the specified timestamp:

CREATE TABLE restored_table CLONE my_table AT(TIMESTAMP => 'Sat, 09 May 2015 01:01:00 +0300'::timestamp_tz);

The following CREATE SCHEMA statement creates a clone of a schema and all its objects as they existed 1 hour before the current time:

CREATE SCHEMA restored_schema CLONE my_schema AT(OFFSET => -3600);

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed prior to the completion of the specified statement:

CREATE DATABASE restored_db CLONE my_db BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

The cloning operation for a database or schema fails:

- If the specified Time Travel time is beyond the retention time of any current child (e.g., a table) of the entity. As a workaround for child objects that have been purged from Time Travel, use the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter of the CREATE <object> … CLONE command. For more information, see Child objects and data retention time.

- If the specified Time Travel time is at or before the point in time when the object was created.

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed four days ago, skipping any tables that have a data retention period of less than four days:

CREATE DATABASE restored_db CLONE my_db AT(TIMESTAMP => DATEADD(days, -4, current_timestamp)::timestamp_tz) IGNORE TABLES WITH INSUFFICIENT DATA RETENTION;

Dropping and Restoring Objects

Dropping Objects

When a table, schema, or database is dropped, it is not immediately overwritten or removed from the system. Instead, it is retained for the data retention period for the object, during which time the object can be restored. Once dropped objects are moved to Fail-safe, you cannot restore them.

To drop a table, schema, or database, use the following commands:

DROP TABLE

DROP SCHEMA

DROP DATABASE

After dropping an object, creating an object with the same name does not restore the object. Instead, it creates a new version of the object. The original, dropped version is still available and can be restored.

Restoring a dropped object restores the object in place (i.e. it does not create a new object).

Listing Dropped Objects

Dropped tables, schemas, and databases can be listed using the following commands with the HISTORY keyword specified:

SHOW TABLES

SHOW SCHEMAS

SHOW DATABASES

SHOW TABLES HISTORY LIKE 'load%' IN mytestdb.myschema;

SHOW SCHEMAS HISTORY IN mytestdb;

SHOW DATABASES HISTORY;

The output includes all dropped objects and an additional DROPPED_ON column, which displays the date and time when the object was dropped. If an object has been dropped more than once, each version of the object is included as a separate row in the output.

After the retention period for an object has passed and the object has been purged, it is no longer displayed in the SHOW <object_type> HISTORY output.

Restoring Objects

A dropped object that has not been purged from the system (i.e. the object is displayed in the SHOW <object_type> HISTORY output) can be restored using the following commands:

UNDROP TABLE

UNDROP SCHEMA

UNDROP DATABASE

Calling UNDROP restores the object to its most recent state before the DROP command was issued.

UNDROP TABLE mytable;

UNDROP SCHEMA myschema;

UNDROP DATABASE mydatabase;

If an object with the same name already exists, UNDROP fails. You must rename the existing object, which then enables you to restore the previous version of the object.

Access Control Requirements and Name Resolution

Similar to dropping an object, a user must have OWNERSHIP privileges for an object to restore it. In addition, the user must have CREATE privileges on the object type for the database or schema where the dropped object will be restored.

Restoring tables and schemas is only supported in the current schema or current database, even if a fully-qualified object name is specified.

Example: Dropping and Restoring a Table Multiple Times

In the following example, the mytestdb.public schema contains two tables: loaddata1 and proddata1. The loaddata1 table is dropped and recreated twice, creating three versions of the table:

- Current version

- Second (i.e. most recent) dropped version

- First dropped version

The example then illustrates how to restore the two dropped versions of the table:

First, the current table with the same name is renamed to loaddata3 . This enables restoring the most recent version of the dropped table, based on the timestamp. Then, the most recent dropped version of the table is restored. The restored table is renamed to loaddata2 to enable restoring the first version of the dropped table. Lastly, the first version of the dropped table is restored. SHOW TABLES HISTORY ; + ---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+ | created_on | name | database_name | schema_name | kind | comment | cluster_by | rows | bytes | owner | retention_time | dropped_on | |---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------| | Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB | PUBLIC | TABLE | | | 48 | 16248 | PUBLIC | 1 | [NULL] | | Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB | PUBLIC | TABLE | | | 12 | 4096 | PUBLIC | 1 | [NULL] | + ---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+ DROP TABLE loaddata1 ; SHOW TABLES HISTORY ; + ---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+ | created_on | name | database_name | schema_name | kind | comment | cluster_by | rows | bytes | owner | retention_time | dropped_on | |---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------| | Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB | PUBLIC | TABLE | | | 12 | 4096 | PUBLIC | 1 | [NULL] | | Tue, 
17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

CREATE TABLE loaddata1 (c1 number);
INSERT INTO loaddata1 VALUES (1111), (2222), (3333), (4444);

DROP TABLE loaddata1;

CREATE TABLE loaddata1 (c1 varchar);

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | Fri, 13 May 2016 19:05:51 -0700 |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata3;

UNDROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata2;

UNDROP TABLE loaddata1;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA2 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
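The rename-then-undrop sequence in the example above can be modelled with a small Python sketch. This is only a toy model under stated assumptions, not how any database implements it: the `Schema` and `Table` classes and their method names are hypothetical, and the sketch assumes that an undrop restores the most recently dropped table of that name and fails while a live table with the same name exists, which is the behaviour the listings above show.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class Table:
    name: str
    rows: List[int] = field(default_factory=list)


class Schema:
    """Toy model: live tables by name, plus a stack of dropped tables per name."""

    def __init__(self) -> None:
        self.live: Dict[str, Table] = {}
        self.dropped: Dict[str, List[Table]] = {}

    def create(self, name: str, rows: Iterable[int] = ()) -> None:
        if name in self.live:
            raise ValueError(f"table {name} already exists")
        self.live[name] = Table(name, list(rows))

    def drop(self, name: str) -> None:
        # Dropped tables are kept aside (not destroyed) so they can be restored.
        self.dropped.setdefault(name, []).append(self.live.pop(name))

    def rename(self, old: str, new: str) -> None:
        if new in self.live:
            raise ValueError(f"table {new} already exists")
        table = self.live.pop(old)
        table.name = new
        self.live[new] = table

    def undrop(self, name: str) -> None:
        if name in self.live:
            raise ValueError(f"table {name} already exists; rename it first")
        if not self.dropped.get(name):
            raise KeyError(f"no dropped table named {name}")
        # Restore the most recently dropped table with this name.
        self.live[name] = self.dropped[name].pop()


schema = Schema()
schema.create("loaddata1", range(48))                 # oldest table, 48 rows
schema.drop("loaddata1")
schema.create("loaddata1", [1111, 2222, 3333, 4444])  # second table, 4 rows
schema.drop("loaddata1")
schema.create("loaddata1")                            # current table, empty

schema.rename("loaddata1", "loaddata3")
schema.undrop("loaddata1")   # brings back the 4-row table
schema.rename("loaddata1", "loaddata2")
schema.undrop("loaddata1")   # brings back the 48-row table

row_counts = {name: len(t.rows) for name, t in sorted(schema.live.items())}
print(row_counts)
```

The resulting row counts per table name line up with the `rows` column of the final listing: each undrop needs the name to be free first, which is why the live table is renamed before every restore.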

Time Travel

What is Time Travel?

Time Travel in computing, especially data management, refers to the ability to access and analyze an earlier state of data without affecting its current state. It is a vital feature offered by many modern databases and data lakehouse systems, allowing users to compare historical snapshots of data with the current scenario. This functionality is immensely beneficial in tracing back errors, auditing, and analyzing trends over time.

Functionality and Features

Time Travel allows users to go back in time to retrieve, inspect, and analyze past versions of the data. The features include:

- Historical Data Access: Users can view and compare different versions of the data at different points in time.

- Version Control: Changes are tracked incrementally, allowing any past version of the dataset to be restored.

- Audit Trail: Traceable log of all changes, useful for maintaining data integrity and compliance.
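The three features can be illustrated with a minimal Python sketch. It is a simplified model, not the implementation of any particular product: the `VersionedTable` and `Commit` names are hypothetical, and every commit stores a full copy of the data for clarity, whereas the text above describes changes as being tracked incrementally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Commit:
    version: int
    timestamp: datetime
    operation: str
    snapshot: Dict[str, int]  # full copy of the data after this commit


class VersionedTable:
    """Append-only commit log; earlier versions are never modified."""

    def __init__(self) -> None:
        self._log: List[Commit] = []

    def commit(self, operation: str, data: Dict[str, int], at: datetime) -> int:
        version = len(self._log)
        self._log.append(Commit(version, at, operation, dict(data)))
        return version

    def current(self) -> Dict[str, int]:
        return dict(self._log[-1].snapshot)

    def as_of_version(self, version: int) -> Dict[str, int]:
        # Historical data access by version number.
        if not 0 <= version < len(self._log):
            raise LookupError(f"version {version} does not exist")
        return dict(self._log[version].snapshot)

    def as_of_timestamp(self, ts: datetime) -> Dict[str, int]:
        # Historical data access by time: latest commit at or before ts.
        candidates = [c for c in self._log if c.timestamp <= ts]
        if not candidates:
            raise LookupError("no version exists at or before that timestamp")
        return dict(candidates[-1].snapshot)

    def restore(self, version: int, at: datetime) -> int:
        # Version control: restoring adds a new commit instead of rewriting history.
        return self.commit(f"RESTORE to v{version}", self.as_of_version(version), at)

    def history(self) -> List[Tuple[int, str, str]]:
        # Audit trail: one entry per change.
        return [(c.version, c.timestamp.isoformat(), c.operation) for c in self._log]


t0 = datetime(2024, 1, 1, 9, 0)
table = VersionedTable()
table.commit("INSERT", {"a": 1, "b": 2}, t0)                        # v0
table.commit("UPDATE", {"a": 1, "b": 20}, t0 + timedelta(hours=1))  # v1
table.commit("DELETE", {"a": 1}, t0 + timedelta(hours=2))           # v2

old = table.as_of_version(0)                             # state before any change
mid = table.as_of_timestamp(t0 + timedelta(minutes=90))  # state between v1 and v2
table.restore(1, t0 + timedelta(hours=3))                # undo the delete as v3

for entry in table.history():
    print(entry)
```

Reading an old version never touches the current state, and a restore is recorded as one more entry in the history, so the audit trail stays complete.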

Benefits and Use Cases

Time Travel's unique capacities offer many benefits to businesses:

- Error Correction: In the event of data corruption or accidental deletion, it enables data restoration.

- Data Comparison: By accessing historical data, businesses can compare trends and patterns over time, influencing strategic decisions.

- Compliance: It aids in data governance and regulatory compliance by maintaining an audit trail.

Challenges and Limitations

Despite its benefits, Time Travel has limitations. It requires substantial storage to maintain multiple data versions, potentially leading to performance issues. Additionally, without proper management, navigating through various data versions can become complex.
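One common way to bound the storage cost is a retention window, after which superseded versions are discarded. The following Python sketch shows the idea under stated assumptions: the `Version` type and the `prune` and `total_bytes` helpers are hypothetical, sizes are per-version totals, and a version is treated as removable once the version that replaced it is older than the window.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple


class Version(NamedTuple):
    number: int
    committed_at: datetime
    size_bytes: int


def prune(versions: List[Version], now: datetime, retention: timedelta) -> List[Version]:
    """Keep the latest version and every version still reachable within the window.

    A version stops being current when its successor is committed. It is kept
    only if that happened inside the retention window, so any point in time
    within the window can still be queried.
    """
    cutoff = now - retention
    ordered = sorted(versions, key=lambda v: v.number)
    kept = []
    for i, version in enumerate(ordered):
        is_latest = i + 1 == len(ordered)
        if is_latest or ordered[i + 1].committed_at >= cutoff:
            kept.append(version)
    return kept


def total_bytes(versions: List[Version]) -> int:
    return sum(v.size_bytes for v in versions)


versions = [
    Version(0, datetime(2024, 1, 1, 0, 0), 4096),
    Version(1, datetime(2024, 1, 8, 12, 0), 4096),
    Version(2, datetime(2024, 1, 9, 12, 0), 16248),
]
now = datetime(2024, 1, 10, 0, 0)
kept = prune(versions, now, retention=timedelta(days=1))

print([v.number for v in kept], total_bytes(versions), total_bytes(kept))
```

The trade-off is direct: a longer window keeps more history queryable but retains more bytes, while a shorter one frees storage and makes older versions unreachable.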

Integration with Data Lakehouse

Time Travel naturally fits into a data lakehouse environment. Modern data lakehouses, like Dremio, offer native Time Travel capabilities. This allows organizations to access historical data directly from their data lakehouse, providing a unified, consistent view of their data across time.

Security Aspects

Time Travel includes security features that maintain the integrity and confidentiality of data. It keeps track of all changes, enabling detailed audits and secure access control.

Dremio Vs. Time Travel

Dremio, a leading data lakehouse platform, not only supports Time Travel but enhances it with advanced features. With Dremio’s Time Travel capabilities, users can access point-in-time snapshots of their data directly from their data lakehouse without the need for complex data pipelines.

- What is Time Travel in the context of data management? It refers to the ability to access and analyze any prior state of data without impacting its current state.

- Why is Time Travel important in data analytics? It allows for historical data analysis, error tracing, auditing, and trend analysis over time.

- Does Time Travel require extra storage? Yes, to maintain different versions of data, Time Travel requires additional storage.

- How does Time Travel support data governance? It aids in maintaining data integrity, tracking changes, and ensuring regulatory compliance.

- Does Dremio support Time Travel? Yes, Dremio supports and further enhances Time Travel with advanced features.

Data Lakehouse: A new architectural paradigm, combining the best features of data lakes and data warehouses.

Version Control: The task of keeping a software system consisting of many versions and configurations well organized.

Audit Trail: A security-relevant chronological record that provides documentary evidence of the sequence of activities in a system.



Populate data lakehouses easier than ever with DataStage support for Iceberg and Delta Lake table formats

By caroline garay posted 4 days ago.

It should come as no surprise that business leaders will risk compromising their competitive edge if they do not proactively implement Generative AI (GenAI). The technology is predicted to significantly impact our economy, with Goldman Sachs Research expecting GenAI to raise global GDP by 7% within 10 years. As a result, there is an unprecedented race across organizations to infuse their processes with artificial intelligence (AI): Gartner estimates 80% of enterprises will have deployed or plan to deploy foundation models and adopt GenAI by 2026.

However, businesses scaling AI face entry barriers, notably data quality issues. Organizations require reliable data for robust AI models and accurate insights, yet the current technology landscape presents unparalleled data quality challenges. According to IDC, stored data is set to increase by 250% by 2025, with data rapidly propagating on premises and across clouds, applications, and locations with compromised quality. This situation will exacerbate data silos, increase costs, and complicate governance of AI and data workloads.

Traditional approaches to addressing these data management challenges, such as data warehouses, excel in processing structured data but face scalability and cost limitations. Modern data lakes offer an alternative with the ability to house unstructured and semi-structured data, but lack the organizational structure found in a data warehouse, resulting in messy data swamps with poor governance.

The solution: modern data lakehouses

The emerging solution, the data lakehouse, combines the strengths of data warehouses with data lakes while mitigating their drawbacks. With an open and flexible architecture, the data lakehouse runs warehouse workloads on various data types, reducing costs and enhancing efficiency.

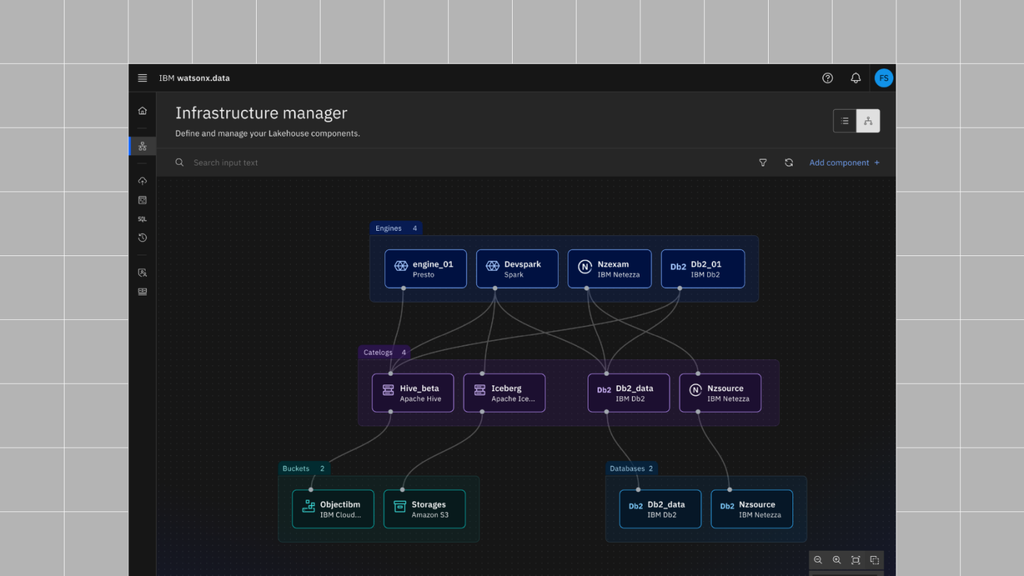

There are many modern data lakehouses currently available to customers, one of them being watsonx.data, IBM’s fit-for-purpose data store and one of three watsonx products that help organizations accelerate and scale AI. Built on an open lakehouse architecture, watsonx.data combines the high performance and usability of a data warehouse with the flexibility and scalability of a data lake to address the challenges of today’s complex data landscape and scale AI.

Foundation of a data lakehouse: the storage layer

One of the key layers in a data lakehouse, and specifically watsonx.data, is the storage layer. In this layer, structured, unstructured, and semi-structured data is stored in open-source file formats, such as Parquet or Optimized Row Columnar (ORC), at a fraction of the cost of traditional block storage. The real benefit of a lakehouse is the system’s ability to accept all data types at an affordable cost.

See the below picture to view the different layers of the watsonx.data architecture. The bottom layer is the storage layer of the lakehouse.


Delta Air Lines eked out a narrow first-quarter profit and said Wednesday that demand for travel is strong heading into the summer vacation season, with travelers seemingly unfazed by recent incidents in the industry that ranged from a panel blowing off a jetliner in flight to a tire falling off another plane during takeoff.

Delta reported the highest revenue for any first quarter in its history and a $37 million profit. It expects record-breaking revenue in the current quarter as well. The airline said that second-quarter earnings will likely beat Wall Street expectations.

CEO Ed Bastian said Delta’s best 11 days ever for ticket sales occurred during the early weeks of 2024.

If travelers are worried about a spate of problem flights and increased scrutiny of plane maker Boeing, “I haven’t seen it,” Bastian said in an interview. “I only look at my numbers. Demand is the healthiest I’ve ever seen.”

A slight majority of Delta’s fleet of more than 950 planes are Boeing models, but in recent years it has bought primarily from Airbus, including a January order for 20 big Airbus A350s. As a result, Delta will avoid the dilemma facing rivals United Airlines and American Airlines, which can’t get all the Boeing planes they ordered. United is even asking pilots to take unpaid time off in May because of a plane shortage.

“Airbus has been consistent throughout these last five years (at) meeting their delivery targets,” Bastian said.

Delta does not operate any Boeing 737 Max jets, the plane that was grounded worldwide after two fatal crashes in 2018 and 2019, and which suffered the panel blowout on an Alaska Airlines flight this year. However, the Atlanta-based airline has ordered a new, larger version of the Max that still hasn’t been approved by regulators. Bastian said Delta will be happy to use the Max 10s when they arrive.

While Delta has largely dodged headaches caused by Boeing, it faces other obstacles in handling this summer’s crowds.

Delta is lobbying the federal government to again allow it to operate fewer flights into the New York City area. Otherwise, Delta could lose valuable takeoff and landing slots.

The Federal Aviation Administration granted a similar request last summer and even extended it until late October. The FAA said the relief helped airlines reduce canceled flights at the region’s busy three main airports by 40%.

Peter Carter, an executive vice president who oversees government affairs, said Delta and other airlines need another waiver permitting fewer flights this summer because the FAA still doesn’t have enough air traffic controllers.

“Absent the waiver, I think we would have, as an industry, some real challenges in New York,” Carter said.

Airlines for America, a trade group of the major U.S. carriers, is also pushing for a waiver from rules on minimum flights in New York. The FAA said it would review the request.

Delta customers will see another change: a new system for boarding planes. Instead of boarding by groups with names such as Diamond Medallions, Delta Premium Select and Sky Priority, passengers will board in groups numbered one through eight. The airline says it will be less confusing.

“When you have a number and you’re standing in line, we are all trained to know when it’s our turn,” Bastian said.

The change won’t alter the pecking order of when each type of customer gets to board. Those with the cheapest tickets, Basic Economy, will still board last.

Delta’s first-quarter profit follows a $363 million loss a year ago, when the results were weighed down by spending on a new labor contract with pilots.

“We expect Delta to be one of the few airlines to report a profit in the March quarter,” TD Cowen analyst Helane Becker said even before Delta’s results were released.