Understanding & Using Time Travel

Snowflake Time Travel enables accessing historical data (i.e. data that has been changed or deleted) at any point within a defined period. It serves as a powerful tool for performing the following tasks:

Restoring data-related objects (tables, schemas, and databases) that might have been accidentally or intentionally deleted.

Duplicating and backing up data from key points in the past.

Analyzing data usage/manipulation over specified periods of time.

Introduction to Time Travel

Using Time Travel, you can perform the following actions within a defined period of time:

Query data in the past that has since been updated or deleted.

Create clones of entire tables, schemas, and databases at or before specific points in the past.

Restore tables, schemas, and databases that have been dropped.

When querying historical data in a table or non-materialized view, the current table or view schema is used. For more information, see Usage notes for AT | BEFORE.

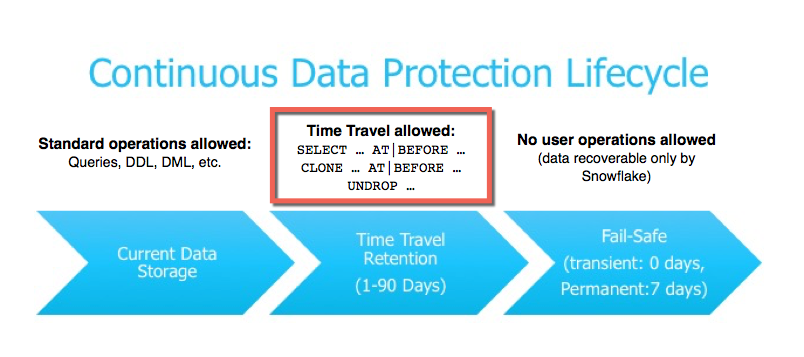

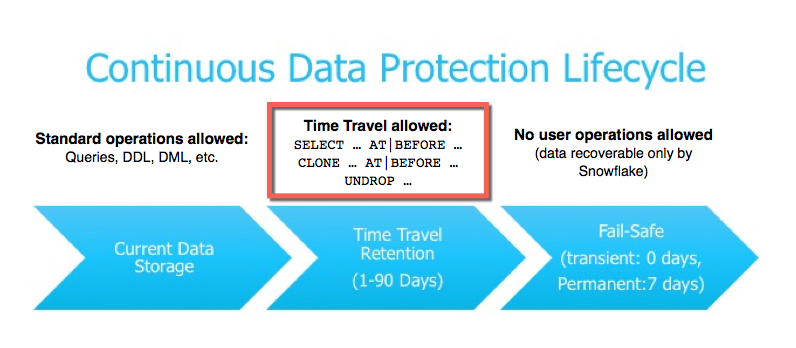

After the defined period of time has elapsed, the data is moved into Snowflake Fail-safe and these actions can no longer be performed.

A long-running Time Travel query will delay moving any data and objects (tables, schemas, and databases) in the account into Fail-safe, until the query completes.

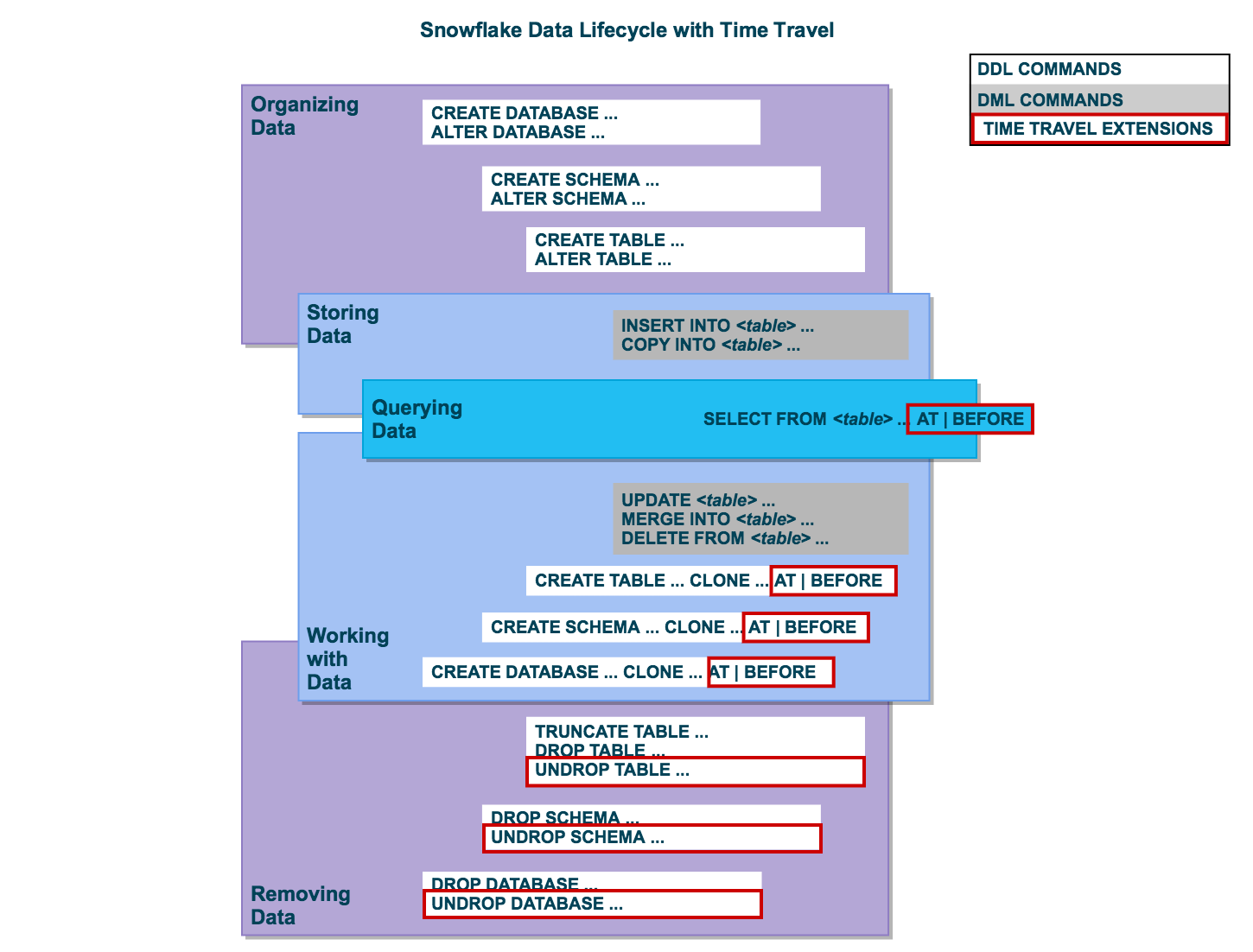

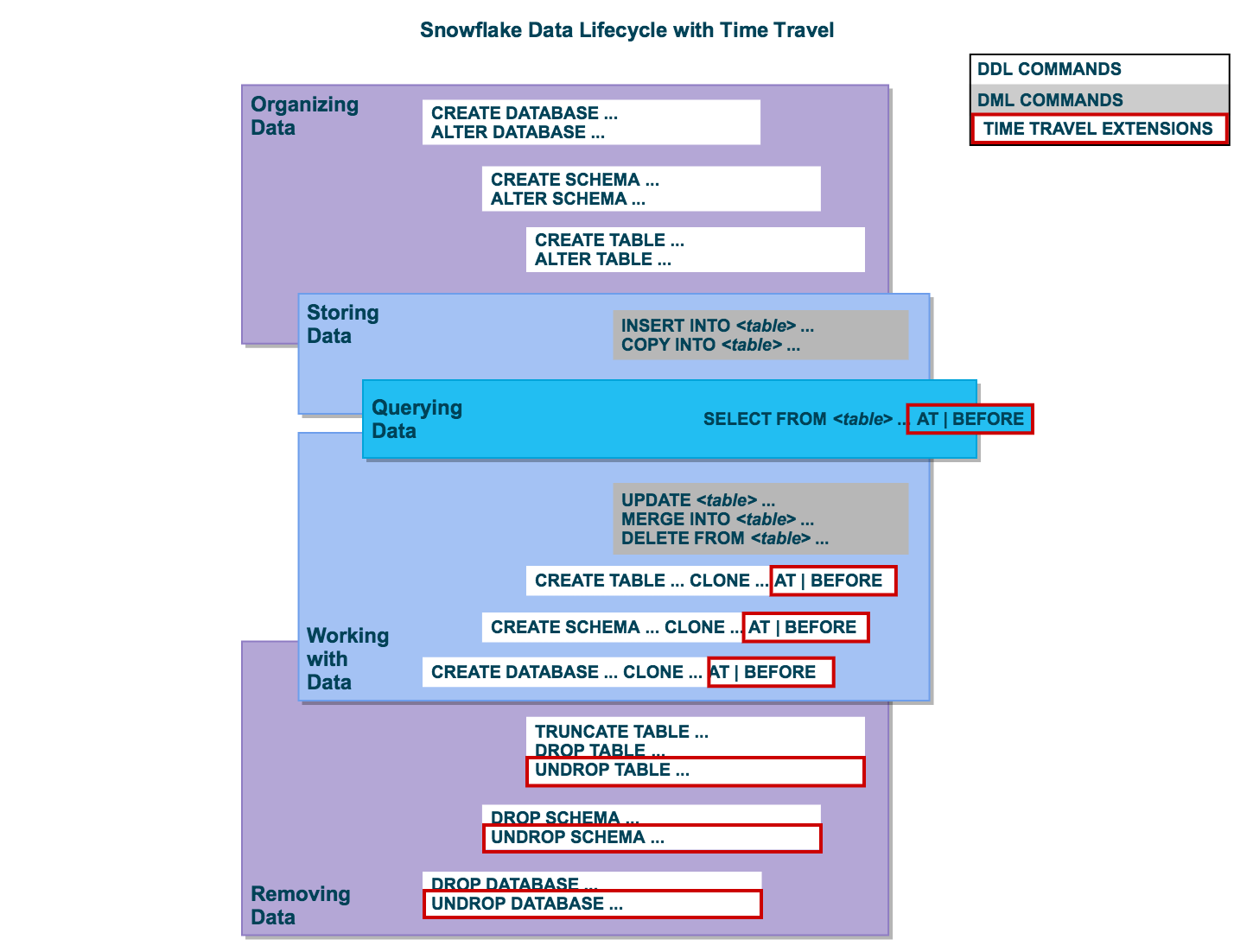

Time Travel SQL Extensions

To support Time Travel, the following SQL extensions have been implemented:

AT | BEFORE clause, which can be specified in SELECT statements and CREATE … CLONE commands (immediately after the object name). The clause uses one of the following parameters to pinpoint the exact historical data you wish to access:

TIMESTAMP

OFFSET (time difference in seconds from the present time)

STATEMENT (identifier for statement, e.g. query ID)

UNDROP command for tables, schemas, and databases.

Data Retention Period

A key component of Snowflake Time Travel is the data retention period.

When data in a table is modified, including deletion of data or dropping an object containing data, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved and, therefore, Time Travel operations (SELECT, CREATE … CLONE, UNDROP) can be performed on the data.

The standard retention period is 1 day (24 hours) and is automatically enabled for all Snowflake accounts:

For Snowflake Standard Edition, the retention period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. databases, schemas, and tables).

For Snowflake Enterprise Edition (and higher):

For transient databases, schemas, and tables, the retention period can be set to 0 (or unset back to the default of 1 day). The same is also true for temporary tables.

For permanent databases, schemas, and tables, the retention period can be set to any value from 0 up to 90 days.

A retention period of 0 days for an object effectively disables Time Travel for the object.

When the retention period ends for an object, the historical data is moved into Snowflake Fail-safe:

Historical data is no longer available for querying.

Past objects can no longer be cloned.

Past objects that were dropped can no longer be restored.

To specify the data retention period for Time Travel:

The DATA_RETENTION_TIME_IN_DAYS object parameter can be used by users with the ACCOUNTADMIN role to set the default retention period for your account.

The same parameter can be used to explicitly override the default when creating a database, schema, or individual table.

The data retention period for a database, schema, or table can be changed at any time.

The MIN_DATA_RETENTION_TIME_IN_DAYS account parameter can be set by users with the ACCOUNTADMIN role to set a minimum retention period for the account. This parameter does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. However, it may change the effective data retention time. When this parameter is set at the account level, the effective minimum data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).
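As a rough sketch of the account-level settings described above: the values 1 and 7 are illustrative assumptions, and the statements assume the current user can switch to the ACCOUNTADMIN role.

```sql
-- Assumes the ACCOUNTADMIN role is available to the current user.
USE ROLE ACCOUNTADMIN;

-- Set the account-wide default retention period (illustrative value).
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- Enforce an account-wide minimum (illustrative value; anything above 1 day
-- assumes Enterprise Edition or higher, per the limits described above).
ALTER ACCOUNT SET MIN_DATA_RETENTION_TIME_IN_DAYS = 7;

-- Inspect both parameters as currently set at the account level.
SHOW PARAMETERS LIKE '%DATA_RETENTION_TIME_IN_DAYS' IN ACCOUNT;
```

With these assumed values, an object that inherits the 1-day default would have an effective retention of MAX(1, 7) = 7 days.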

Enabling and Disabling Time Travel

No tasks are required to enable Time Travel. It is automatically enabled with the standard, 1-day retention period.

However, you may wish to upgrade to Snowflake Enterprise Edition to enable configuring longer data retention periods of up to 90 days for databases, schemas, and tables. Note that extended data retention requires additional storage which will be reflected in your monthly storage charges. For more information about storage charges, see Storage Costs for Time Travel and Fail-safe.

Time Travel cannot be disabled for an account. A user with the ACCOUNTADMIN role can set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that all databases (and subsequently all schemas and tables) created in the account have no retention period by default; however, this default can be overridden at any time for any database, schema, or table.

A user with the ACCOUNTADMIN role can also set the MIN_DATA_RETENTION_TIME_IN_DAYS at the account level. This parameter setting enforces a minimum data retention period for databases, schemas, and tables. Setting MIN_DATA_RETENTION_TIME_IN_DAYS does not alter or replace the DATA_RETENTION_TIME_IN_DAYS parameter value. It may, however, change the effective data retention period for objects. When MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).

Time Travel can be disabled for individual databases, schemas, and tables by specifying DATA_RETENTION_TIME_IN_DAYS with a value of 0 for the object. However, if DATA_RETENTION_TIME_IN_DAYS is set to a value of 0, and MIN_DATA_RETENTION_TIME_IN_DAYS is set at the account level and is greater than 0, the higher value setting takes precedence.
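To make the precedence rule concrete, here is a small arithmetic sketch with assumed values (an object-level setting of 0 and an account-level minimum of 5). GREATEST merely mirrors the MAX(...) expression in the text; it is not how Snowflake exposes the effective value.

```sql
-- Hypothetical values: object DATA_RETENTION_TIME_IN_DAYS = 0,
-- account MIN_DATA_RETENTION_TIME_IN_DAYS = 5.
SELECT GREATEST(0, 5) AS effective_retention_days;  -- evaluates to 5
```

Under these assumptions the object keeps 5 days of Time Travel history even though its own parameter is set to 0.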

Before setting DATA_RETENTION_TIME_IN_DAYS to 0 for any object, consider whether you wish to disable Time Travel for the object, particularly as it pertains to recovering the object if it is dropped. When an object with no retention period is dropped, you will not be able to restore the object.

As a general rule, we recommend maintaining a value of (at least) 1 day for any given object.

If the Time Travel retention period is set to 0, any modified or deleted data is moved into Fail-safe (for permanent tables) or deleted (for transient tables) by a background process. This may take a short time to complete. During that time, the TIME_TRAVEL_BYTES in table storage metrics might contain a non-zero value even when the Time Travel retention period is 0 days.

Specifying the Data Retention Period for an Object

By default, the maximum retention period is 1 day (i.e. one 24-hour period). With Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

When creating a table, schema, or database, the account default can be overridden using the DATA_RETENTION_TIME_IN_DAYS parameter in the command.

If a retention period is specified for a database or schema, the period is inherited by default for all objects created in the database/schema.

A minimum retention period can be set on the account using the MIN_DATA_RETENTION_TIME_IN_DAYS parameter. If this parameter is set at the account level, the data retention period for an object is determined by MAX(DATA_RETENTION_TIME_IN_DAYS, MIN_DATA_RETENTION_TIME_IN_DAYS).
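The following sketch shows the parameter being supplied at creation time. All object names, column definitions, and retention values are assumptions chosen for illustration, and values above 1 day assume Enterprise Edition or higher.

```sql
-- Database-level override; schemas and tables created inside inherit 30 days
-- unless they specify their own value.
CREATE DATABASE mydb DATA_RETENTION_TIME_IN_DAYS = 30;

-- Schema-level override inside that database.
CREATE SCHEMA mydb.myschema DATA_RETENTION_TIME_IN_DAYS = 7;

-- Table-level override; this table keeps 90 days regardless of its parents.
CREATE TABLE mydb.myschema.mytable (col1 NUMBER, col2 DATE)
  DATA_RETENTION_TIME_IN_DAYS = 90;
```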

Changing the Data Retention Period for an Object

If you change the data retention period for a table, the new retention period impacts all data that is active, as well as any data currently in Time Travel. The impact depends on whether you increase or decrease the period:

Increasing the retention period causes the data currently in Time Travel to be retained for the longer time period.

For example, if you have a table with a 10-day retention period and increase the period to 20 days, data that would have been removed after 10 days is now retained for an additional 10 days before moving into Fail-safe.

Note that this doesn’t apply to any data that is older than 10 days and has already moved into Fail-safe.

Decreasing the retention period reduces the amount of time data is retained in Time Travel:

For active data modified after the retention period is reduced, the new shorter period applies.

For data that is currently in Time Travel:

If the data is still within the new shorter period, it remains in Time Travel. If the data is outside the new period, it moves into Fail-safe.

For example, if you have a table with a 10-day retention period and you decrease the period to 1 day, data from days 2 to 10 will be moved into Fail-safe, leaving only the data from day 1 accessible through Time Travel.

However, the process of moving the data from Time Travel into Fail-safe is performed by a background process, so the change is not immediately visible. Snowflake guarantees that the data will be moved, but does not specify when the process will complete; until the background process completes, the data is still accessible through Time Travel.

If you change the data retention period for a database or schema, the change only affects active objects contained within the database or schema. Any objects that have been dropped (for example, tables) remain unaffected.

For example, if you have a schema s1 with a 90-day retention period and table t1 is in schema s1, table t1 inherits the 90-day retention period. If you drop table s1.t1, t1 is retained in Time Travel for 90 days. Later, if you change the schema’s data retention period to 1 day, the retention period for the dropped table t1 is unchanged. Table t1 will still be retained in Time Travel for 90 days.

To alter the retention period of a dropped object, you must undrop the object, then alter its retention period.
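Continuing the s1.t1 example, a minimal sketch of that two-step sequence might look as follows. It assumes the current database is the one containing s1 and that no other table named t1 currently exists in the schema.

```sql
-- Restore the dropped table first; a dropped object cannot be altered.
USE SCHEMA s1;
UNDROP TABLE t1;

-- Now that t1 is active again, give it the shorter period explicitly.
ALTER TABLE t1 SET DATA_RETENTION_TIME_IN_DAYS = 1;
```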

To change the retention period for an object, use the appropriate ALTER <object> command. For example, to change the retention period for a table:
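A minimal sketch, assuming a hypothetical table named mytable and an illustrative target of 30 days (which, for a permanent table, assumes Enterprise Edition or higher):

```sql
-- Set an explicit retention period on the table.
ALTER TABLE mytable SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- Remove the explicit value so the table inherits from its schema,
-- database, or account again.
ALTER TABLE mytable UNSET DATA_RETENTION_TIME_IN_DAYS;
```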

Changing the retention period for your account or individual objects changes the value for all lower-level objects that do not have a retention period explicitly set. For example:

If you change the retention period at the account level, all databases, schemas, and tables that do not have an explicit retention period automatically inherit the new retention period.

If you change the retention period at the schema level, all tables in the schema that do not have an explicit retention period inherit the new retention period.

Keep this in mind when changing the retention period for your account or any objects in your account because the change might have Time Travel consequences that you did not anticipate or intend. In particular, we do not recommend changing the retention period to 0 at the account level.

Dropped Containers and Object Retention Inheritance

Currently, when a database is dropped, the data retention period for child schemas or tables, if explicitly set to be different from the retention of the database, is not honored. The child schemas or tables are retained for the same period of time as the database.

Similarly, when a schema is dropped, the data retention period for child tables, if explicitly set to be different from the retention of the schema, is not honored. The child tables are retained for the same period of time as the schema.

To honor the data retention period for these child objects (schemas or tables), drop them explicitly before you drop the database or schema.
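As an illustration of that ordering, with hypothetical object names (mydb, myschema, mytable), the children are dropped first so that each one is retained for its own period:

```sql
-- Drop the child objects explicitly, innermost first.
DROP TABLE mydb.myschema.mytable;
DROP SCHEMA mydb.myschema;

-- Only then drop the container.
DROP DATABASE mydb;
```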

Querying Historical Data

When any DML operations are performed on a table, Snowflake retains previous versions of the table data for a defined period of time. This enables querying earlier versions of the data using the AT | BEFORE clause.

This clause supports querying data either exactly at or immediately preceding a specified point in the table’s history within the retention period. The specified point can be time-based (e.g. a timestamp or time offset from the present) or it can be the ID for a completed statement (e.g. SELECT or INSERT).

For example:

The following query selects historical data from a table as of the date and time represented by the specified timestamp:

SELECT * FROM my_table AT(TIMESTAMP => 'Fri, 01 May 2015 16:20:00 -0700'::timestamp_tz);

The following query selects historical data from a table as of 5 minutes ago:

SELECT * FROM my_table AT(OFFSET => -60*5);

The following query selects historical data from a table up to, but not including any changes made by the specified statement:

SELECT * FROM my_table BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

If the TIMESTAMP, OFFSET, or STATEMENT specified in the AT | BEFORE clause falls outside the data retention period for the table, the query fails and returns an error.
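One way to use these clauses for change analysis is to compare a historical version of a table with its current contents. The sketch below reuses my_table from the examples above and assumes the table's schema has not changed in the last hour; the one-hour offset is arbitrary.

```sql
-- Rows that were present one hour ago but are absent (or different) now.
SELECT * FROM my_table AT(OFFSET => -3600)
MINUS
SELECT * FROM my_table;

-- Return the ID of the statement executed just before this one in the
-- current session; such an ID can be passed to BEFORE(STATEMENT => ...).
SELECT LAST_QUERY_ID();
```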

Cloning Historical Objects

In addition to queries, the AT | BEFORE clause can be used with the CLONE keyword in the CREATE command for a table, schema, or database to create a logical duplicate of the object at a specified point in the object’s history.

The following CREATE TABLE statement creates a clone of a table as of the date and time represented by the specified timestamp:

CREATE TABLE restored_table CLONE my_table AT(TIMESTAMP => 'Sat, 09 May 2015 01:01:00 +0300'::timestamp_tz);

The following CREATE SCHEMA statement creates a clone of a schema and all its objects as they existed 1 hour before the current time:

CREATE SCHEMA restored_schema CLONE my_schema AT(OFFSET => -3600);

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed prior to the completion of the specified statement:

CREATE DATABASE restored_db CLONE my_db BEFORE(STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

The cloning operation for a database or schema fails:

If the specified Time Travel time is beyond the retention time of any current child (e.g., a table) of the entity. As a workaround for child objects that have been purged from Time Travel, use the IGNORE TABLES WITH INSUFFICIENT DATA RETENTION parameter of the CREATE <object> … CLONE command. For more information, see Child objects and data retention time.

If the specified Time Travel time is at or before the point in time when the object was created.

The following CREATE DATABASE statement creates a clone of a database and all its objects as they existed four days ago, skipping any tables that have a data retention period of less than four days:

CREATE DATABASE restored_db CLONE my_db AT(TIMESTAMP => DATEADD(days, -4, current_timestamp)::timestamp_tz) IGNORE TABLES WITH INSUFFICIENT DATA RETENTION;
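Once a historical clone has been verified, one possible way to put it back in place is to swap it with the live table. This is only a sketch: it reuses the restored_table and my_table names from the table-cloning example above, and whether a full swap (rather than copying selected rows back) is appropriate depends on your situation.

```sql
-- Compare row counts before deciding to swap.
SELECT (SELECT COUNT(*) FROM restored_table) AS restored_rows,
       (SELECT COUNT(*) FROM my_table)       AS current_rows;

-- Exchange the two tables, so that my_table now holds the restored data
-- and restored_table holds what was previously live.
ALTER TABLE restored_table SWAP WITH my_table;
```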

Dropping and Restoring Objects

Dropping Objects

When a table, schema, or database is dropped, it is not immediately overwritten or removed from the system. Instead, it is retained for the data retention period for the object, during which time the object can be restored. Once dropped objects are moved to Fail-safe, you cannot restore them.

To drop a table, schema, or database, use the following commands:

DROP TABLE

DROP SCHEMA

DROP DATABASE

After dropping an object, creating an object with the same name does not restore the object. Instead, it creates a new version of the object. The original, dropped version is still available and can be restored.

Restoring a dropped object restores the object in place (i.e. it does not create a new object).

Listing Dropped Objects

Dropped tables, schemas, and databases can be listed using the following commands with the HISTORY keyword specified:

SHOW TABLES

SHOW SCHEMAS

SHOW DATABASES

SHOW TABLES HISTORY LIKE 'load%' IN mytestdb.myschema;
SHOW SCHEMAS HISTORY IN mytestdb;
SHOW DATABASES HISTORY;

The output includes all dropped objects and an additional DROPPED_ON column, which displays the date and time when the object was dropped. If an object has been dropped more than once, each version of the object is included as a separate row in the output.

After the retention period for an object has passed and the object has been purged, it is no longer displayed in the SHOW <object_type> HISTORY output.

Restoring Objects

A dropped object that has not been purged from the system (i.e. the object is displayed in the SHOW <object_type> HISTORY output) can be restored using the following commands:

UNDROP TABLE

UNDROP SCHEMA

UNDROP DATABASE

Calling UNDROP restores the object to its most recent state before the DROP command was issued.

UNDROP TABLE mytable;
UNDROP SCHEMA myschema;
UNDROP DATABASE mydatabase;

If an object with the same name already exists, UNDROP fails. You must rename the existing object, which then enables you to restore the previous version of the object.

Access Control Requirements and Name Resolution

Similar to dropping an object, a user must have OWNERSHIP privileges for an object to restore it. In addition, the user must have CREATE privileges on the object type for the database or schema where the dropped object will be restored.

Restoring tables and schemas is only supported in the current schema or current database, even if a fully-qualified object name is specified.
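Given that restriction, a cautious pattern is to set the session context first and then restore by unqualified name. The names below (mytestdb, myschema, mytable) are hypothetical.

```sql
-- Make the schema that contained the dropped table the current schema.
USE SCHEMA mytestdb.myschema;

-- Restore the table into the current schema.
UNDROP TABLE mytable;
```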

Example: Dropping and Restoring a Table Multiple Times

In the following example, the mytestdb.public schema contains two tables: loaddata1 and proddata1. The loaddata1 table is dropped and recreated twice, creating three versions of the table:

Current version

Second (i.e. most recent) dropped version

First dropped version

The example then illustrates how to restore the two dropped versions of the table:

First, the current table with the same name is renamed to loaddata3. This enables restoring the most recent version of the dropped table, based on the timestamp.

Then, the most recent dropped version of the table is restored.

The restored table is renamed to loaddata2 to enable restoring the first version of the dropped table.

Lastly, the first version of the dropped table is restored.

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

DROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

CREATE TABLE loaddata1 (c1 number);
INSERT INTO loaddata1 VALUES (1111), (2222), (3333), (4444);

DROP TABLE loaddata1;

CREATE TABLE loaddata1 (c1 varchar);

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | Fri, 13 May 2016 19:05:51 -0700 |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata3;

UNDROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | Fri, 13 May 2016 19:04:46 -0700 |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

ALTER TABLE loaddata1 RENAME TO loaddata2;

UNDROP TABLE loaddata1;

SHOW TABLES HISTORY;

+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+
| created_on                      | name      | database_name | schema_name | kind  | comment | cluster_by | rows | bytes | owner  | retention_time | dropped_on                      |
|---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------|
| Tue, 17 Mar 2016 17:41:55 -0700 | LOADDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   48 | 16248 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:05:32 -0700 | LOADDATA2 | MYTESTDB      | PUBLIC      | TABLE |         |            |    4 |  4096 | PUBLIC | 1              | [NULL]                          |
| Fri, 13 May 2016 19:06:01 -0700 | LOADDATA3 | MYTESTDB      | PUBLIC      | TABLE |         |            |    0 |     0 | PUBLIC | 1              | [NULL]                          |
| Tue, 17 Mar 2016 17:51:30 -0700 | PRODDATA1 | MYTESTDB      | PUBLIC      | TABLE |         |            |   12 |  4096 | PUBLIC | 1              | [NULL]                          |
+---------------------------------+-----------+---------------+-------------+-------+---------+------------+------+-------+--------+----------------+---------------------------------+

How to Leverage the Time Travel Feature on Snowflake

Welcome to Time Travel in the Snowflake Data Cloud. You may be tempted to think “only superheroes can Time Travel,” and you would be right. But Snowflake gives you the ability to be your own real-life superhero.

Have you ever feared deleting the wrong data in your production database? Or that your carefully written script might accidentally remove the wrong records? Never fear, you are here – with Snowflake Time Travel!

What’s The Big Deal?

Snowflake Time Travel, when properly configured, allows any Snowflake user with the proper permissions to query and recover data that has been changed or deleted within the last 90 days (though this recovery period depends on the Snowflake edition, as we’ll see later).

This provides comprehensive, robust, and configurable data history in Snowflake that your team doesn’t have to manage! It includes the following advantages:

- Restoring data (or even entire databases and schemas) that was lost to a deletion, whether that deletion was intentional or not

- Maintaining backup copies of all past versions of your data for a period of time

- Inspecting changes made over specific periods of time

To further investigate these features, we will look at:

- How Time Travel works

- How to configure Time Travel in your account

- How to use Time Travel

- How Time Travel impacts Snowflake cost

- Some Time Travel best practices

How Time Travel Works

Before we learn how to use it, let’s understand a little more about why Snowflake can offer this feature.

Snowflake stores the records in each table in immutable objects called micro-partitions that contain a subset of the records in a given table.

Each time a record is changed (created/updated/deleted), a brand new micro-partition is created, preserving the previous micro-partitions to create an immutable historical record of the data in the table at any given moment in time.

Time Travel is simply accessing the micro-partitions that were current for the table at a particular moment in time.

How To Configure Time Travel In Your Account

Time Travel is available and enabled in all account types.

However, the extent to which it is available is dependent on the type of Snowflake account, the object type, and the access granted to your user.

Default Retention Period

The retention period is the amount of time you can travel back and recover the state of a table at a given point in time. The maximum varies by account type and object type. The default Time Travel retention period is 1 day (24 hours).

PRO TIP: Snowflake does have an additional layer of data protection called Fail-safe, which is only accessible by Snowflake to restore customer data past the Time Travel window. However, unlike Time Travel, it should not be considered a part of your organization’s backup strategy.

Account/Object Type Considerations

All Snowflake accounts have Time Travel for permanent databases, schemas, and tables enabled for the default retention period.

Snowflake Standard accounts (and above) can remove Time Travel retention altogether by setting the retention period to 0 days, effectively disabling Time Travel.

Snowflake Enterprise accounts (and above) can set the Time Travel retention period for transient databases, schemas, and tables, as well as temporary tables, to either 0 or 1 day. For permanent databases, schemas, and tables, the retention period can be set to any value from 0 to 90 days.

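The following summarizes the above considerations:

- Standard accounts, all object types (permanent, transient, temporary): 0 or 1 day

- Enterprise accounts (and above), transient and temporary objects: 0 or 1 day

- Enterprise accounts (and above), permanent objects: 0 to 90 days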

Changing Retention Period

For Snowflake Enterprise accounts, two account-level parameters can be used to change the default account-level retention time:

- DATA_RETENTION_TIME_IN_DAYS: How many days that Snowflake stores historical data for the purpose of Time Travel.

- MIN_DATA_RETENTION_TIME_IN_DAYS: How many days at a minimum that Snowflake stores historical data for the purpose of Time Travel.

The parameter DATA_RETENTION_TIME_IN_DAYS can also be used at an object level to override the default retention time for an object and its children. Example:
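A minimal sketch of both levels, assuming a hypothetical schema my_db.my_schema and illustrative values (the account-level statement assumes the ACCOUNTADMIN role, and 30 days assumes an Enterprise account):

```sql
-- Account-level default, inherited by objects without an explicit value.
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- Object-level override: this schema and the tables in it that do not set
-- their own value now use 30 days.
ALTER SCHEMA my_db.my_schema SET DATA_RETENTION_TIME_IN_DAYS = 30;
```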

How To Use Time Travel

Using Time Travel is easy! There are two sets of SQL commands that can invoke Time Travel capabilities:

- AT or BEFORE: clauses for both SELECT and CREATE … CLONE statements. AT includes any changes made by a statement or transaction at the specified point; BEFORE excludes them

- UNDROP: command for restoring a deleted table/schema/database

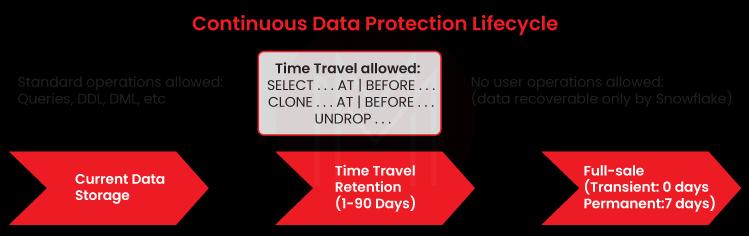

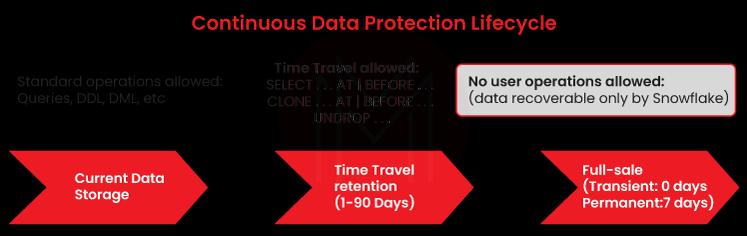

The following graphic from the Snowflake documentation summarizes this visually:

Query Historical Data

You can query historical data using the AT or BEFORE clauses and one of three parameters:

- TIMESTAMP: A specific historical timestamp at which to query data from a particular object. Example: SELECT * FROM my_table AT (TIMESTAMP => 'Fri, 01 May 2015 15:00:00 -0700'::TIMESTAMP_TZ);

- OFFSET: The difference in seconds from the current time at which to query data from a particular object. Example: CREATE SCHEMA restored_schema CLONE my_schema AT (OFFSET => -4800);

- STATEMENT: The query ID of a statement that is used as a reference point from which to query data from a particular object. Example: CREATE DATABASE restored_db CLONE my_db BEFORE (STATEMENT => '8e5d0ca9-005e-44e6-b858-a8f5b37c5726');

The one thing to understand is that these commands work only within the retention period of the object you are querying. So, if your retention time is set to the default of one day and you try to UNDROP a table two days after deleting it, you will receive an error and be out of luck!


Restore Deleted Objects

You can also restore objects that have been deleted by using the UNDROP command. To use this command, another table with the same fully qualified name (database.schema.table) cannot exist.

Example: UNDROP TABLE my_table

How Time Travel Impacts Snowflake Cost

Snowflake accounts are billed for each 24-hour period during which Time Travel data (the historical micro-partitions) must be maintained.

Every time a table’s data changes, the historical version of the changed data is retained (and charged for) over the entire retention period. This is not necessarily a full second copy of the table: Snowflake tries to keep only the minimal amount of historical data needed, but that data still incurs additional storage cost.

As an example, if every row of a 100 GB table were changed ten times a day, the storage consumed (and charged) for this data per day would be 100GB x 10 changes = 1 TB.
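To see where Time Travel storage is actually going, one option is to query the table storage metrics. The sketch below assumes your role has access to the shared SNOWFLAKE database and uses the ACCOUNT_USAGE.TABLE_STORAGE_METRICS view; the limit of 20 is arbitrary.

```sql
-- Tables currently holding the most Time Travel data, largest first.
SELECT table_catalog,
       table_schema,
       table_name,
       active_bytes,
       time_travel_bytes,
       failsafe_bytes
FROM snowflake.account_usage.table_storage_metrics
WHERE time_travel_bytes > 0
ORDER BY time_travel_bytes DESC
LIMIT 20;
```

Tables whose time_travel_bytes are large relative to active_bytes are natural candidates for the suggestions that follow.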

What can you do to optimize cost to ensure your ops team does not wake up to an unnecessarily large Time Travel bill? Below are a couple of suggestions.

Use Transient and Temporary Tables When Possible

If data does not need to be protected using Time Travel, or there is data only being used as an intermediate stage in an ETL process, then take advantage of using transient and temporary tables with the DATA_RETENTION_TIME_IN_DAYS parameter set to 0. This will essentially disable Time Travel and make sure there are no extra costs because of it.
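As a sketch of that setup, with hypothetical table names and columns:

```sql
-- Intermediate ETL stage: persists across sessions, no Time Travel history.
CREATE TRANSIENT TABLE etl_stage (id NUMBER, payload VARIANT)
  DATA_RETENTION_TIME_IN_DAYS = 0;

-- Scratch table that disappears when the session ends.
CREATE TEMPORARY TABLE etl_scratch (id NUMBER, payload VARIANT)
  DATA_RETENTION_TIME_IN_DAYS = 0;
```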

Copy Large High-Churn Tables

If you have large permanent tables where a high percentage of records are often changed every day, it might be a good idea to change your storage strategy for these tables based on the cost implications mentioned above.

One way of dealing with such a table would be to create it as a transient table with 0 Time Travel retention (DATA_RETENTION_TIME_IN_DAYS=0) and copy it over to a permanent table on a periodic basis.

This would allow you to control the number of copies of this data you maintain without worrying about ballooning Time Travel costs in the background.
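One way this pattern could be sketched, using hypothetical names (events_hot for the high-churn table, events_snapshot for the periodic copy) and a plain CREATE TABLE … AS SELECT for the copy step; how often to run the copy, and whether to schedule it, is left open:

```sql
-- High-churn working table with no Time Travel history.
CREATE TRANSIENT TABLE events_hot (event_id NUMBER, event_ts TIMESTAMP_TZ, body VARIANT)
  DATA_RETENTION_TIME_IN_DAYS = 0;

-- Run periodically: replace the permanent snapshot with the current contents.
-- The replaced snapshot is itself subject to events_snapshot's retention,
-- so that setting controls how many older copies linger.
CREATE OR REPLACE TABLE events_snapshot
  DATA_RETENTION_TIME_IN_DAYS = 1
AS
SELECT * FROM events_hot;
```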

Time Travel is an incredibly useful tool that removes the need for your team to maintain the backups, snapshots, and complex restoration processes that a traditional database requires. Specifically, it enables the following advantages:

- Data recovery/restoration: use the ability to query historical data to restore old versions of a particular dataset, or recover databases/schemas/tables that have been deleted

- Backups: unless explicitly disabled, Time Travel automatically maintains backup copies of all past versions of your data for at least 1 day, and up to 90 days

- Change auditing: the queryable nature of Time Travel allows for inspection of changes made to your data over specific periods of time

Final Thoughts

Hopefully, this has helped you understand how to use Snowflake Time Travel, the context around how it works, and some of its cost implications.



Time Travel snowflake: The Ultimate Guide to Understand, Use & Get Started 101

By: Harsh Varshney | Published: January 13, 2022

To empower your business decisions with data, you need Real-Time, High-Quality data from all of your data sources in a central repository. Traditional On-Premise Data Warehouse solutions have limited Scalability and Performance, and they require constant maintenance. Snowflake is a more Cost-Effective and Instantly Scalable solution with industry-leading Query Performance. It’s a one-stop shop for Cloud Data Warehousing and Analytics, with full SQL support for Data Analysis and Transformations. One of Snowflake’s standout features is Time Travel.


Snowflake Time Travel allows you to access Historical Data (that is, data that has been updated or removed) at any point within a defined retention period. It is an effective tool for the following tasks:

- Restoring Data-Related Objects (Tables, Schemas, and Databases) that may have been removed by accident or on purpose.

- Duplicating and Backing up Data from previous periods of time.

- Analyzing Data Manipulation and Consumption over a set period of time.

In this article, you will learn how Snowflake Time Travel works and how to carry out the common Time Travel operations with simple SQL.

What is Snowflake?

Snowflake is a Cloud Data Warehouse solution that runs on the customer’s preferred Cloud Provider’s infrastructure (AWS, Azure, or GCP). Snowflake’s SQL dialect adheres to the ANSI Standard and includes typical Analytics and Windowing Capabilities, with some Snowflake-specific differences in syntax. (SnowSQL is the name of Snowflake’s command-line client, not of the SQL dialect.)

Snowflake’s integrated development environment (IDE) is entirely Web-based. Visit XXXXXXXX.us-east-1.snowflakecomputing.com. After logging in, you are taken to the primary Online GUI, which works as an IDE, where you can begin interacting with your Data Assets. Each query tab in the Snowflake interface is referred to as a “Worksheet”. These Worksheets, like the tab history function, are automatically saved and can be viewed at any time.

Key Features of Snowflake

- Query Optimization: Using Clustering and Partitioning, Snowflake optimizes queries on its own, so manual Query Optimization is rarely something to be concerned about.

- Secure Data Sharing: Data can be exchanged securely from one account to another using Snowflake Database Tables, Views, and UDFs.

- Support for File Formats: JSON, Avro, ORC, Parquet, and XML are all Semi-Structured data formats that Snowflake can import. It has a VARIANT column type that lets you store Semi-Structured data.

- Caching: Snowflake has a caching strategy that allows the results of the same query to be quickly returned from the cache when the query is repeated. Snowflake uses persisted query results to avoid regenerating the report when nothing has changed.

- SQL and Standard Support: Snowflake offers both standard and extended SQL support, as well as Advanced SQL features such as Merge, Lateral View, Statistical Functions, and many others.

- Fault Resistant: Snowflake provides fault-tolerant capabilities to recover Snowflake objects (tables, views, databases, schemas, and so on) in the event of a failure.

To get further information, check out the official Snowflake website.

What is Snowflake Time Travel Feature?

Snowflake Time Travel is a feature that allows you to access data as it existed at any point within the retention period. For example, if you have an Employee table and you inadvertently delete data from it, you can use Time Travel to go back 5 minutes and retrieve the data. It is an effective tool for the following tasks:

- Query Data that has been changed or deleted in the past.

- Make clones of complete Tables, Schemas, and Databases at or before specific points in the past.

- Restore Tables, Schemas, and Databases that have been dropped.


How to Enable & Disable Snowflake Time Travel Feature?

1) Enable Snowflake Time Travel

No action is required to enable Snowflake Time Travel. It is turned on by default, with a one-day retention period. However, if you want to configure longer Data Retention Periods of up to 90 days for Databases, Schemas, and Tables, you’ll need Snowflake Enterprise Edition (or higher). Keep in mind that longer Data Retention requires more storage, which will be reflected in your monthly Storage Fees. See Storage Costs for Time Travel and Fail-safe for further information on storage fees.

For Snowflake Time Travel, the example below builds a table with 90 days of retention.
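A sketch of such a statement, using a hypothetical table named my_table with placeholder columns:

```sql
-- Retention periods above 1 day require Enterprise Edition or higher.
CREATE TABLE my_table (
    id    NUMBER,
    name  STRING
)
DATA_RETENTION_TIME_IN_DAYS = 90;
```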

To shorten the retention term for a certain table, the below query can be used.
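For instance, assuming the same hypothetical my_table and a target of 30 days:

```sql
-- Reduce the table's Time Travel retention from 90 days to 30 days.
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 30;
```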

2) Disable Snowflake Time Travel

Snowflake Time Travel cannot be turned off for an account, but it can be turned off for individual Databases, Schemas, and Tables by setting the object’s DATA_RETENTION_TIME_IN_DAYS to 0.

Users with the ACCOUNTADMIN role can also set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that by default, all Databases (and, by extension, all Schemas and Tables) created in the account have no retention period. However, this default can be overridden at any time for any Database, Schema, or Table.
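The statements below sketch both approaches. The object names (my_db, my_schema, my_table, important_table) are hypothetical.

```sql
-- Disable Time Travel for individual objects.
ALTER TABLE    my_db.my_schema.my_table SET DATA_RETENTION_TIME_IN_DAYS = 0;
ALTER SCHEMA   my_db.my_schema          SET DATA_RETENTION_TIME_IN_DAYS = 0;
ALTER DATABASE my_db                    SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Set the account-wide default to 0 (requires the ACCOUNTADMIN role).
USE ROLE ACCOUNTADMIN;
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Override the default for one table that still needs Time Travel.
ALTER TABLE my_db.my_schema.important_table SET DATA_RETENTION_TIME_IN_DAYS = 1;
```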

3) What are Data Retention Periods?

The Data Retention Period is an important part of Snowflake Time Travel. When data in a table is modified or deleted, or an object containing data is dropped, Snowflake preserves the state of the data before the change. The Data Retention Period sets the number of days for which this historical data is stored, allowing Time Travel operations (SELECT, CREATE … CLONE, UNDROP) to be performed on it.

All Snowflake Accounts have a standard retention period of one day (24 hours), which is automatically enabled:

- In Snowflake Standard Edition, the Retention Period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. Databases, Schemas, and Tables).

- In Snowflake Enterprise Edition (and higher), the Retention Period for transient Databases, Schemas, and Tables can be set to 0 (or unset back to the default of 1 day). The same is true of Temporary Tables.

- In Snowflake Enterprise Edition (and higher), the Retention Period for permanent Databases, Schemas, and Tables can be set to any value from 0 up to 90 days.

4) What are Snowflake Time Travel SQL Extensions?

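The following SQL extensions have been added to support Snowflake Time Travel:

- The AT | BEFORE clause, which can be specified in SELECT statements and CREATE … CLONE commands (immediately after the object name). The clause uses one of the following parameters to pinpoint the historical data you wish to access:

- TIMESTAMP (a specific date and time)

- OFFSET (time difference in seconds from the present time)

- STATEMENT (identifier for statement, e.g. query ID)

- The UNDROP command for Tables, Schemas, and Databases.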

How Many Days Does Snowflake Time Travel Work?

How to Specify a Custom Data Retention Period for Snowflake Time Travel

In Standard Edition, the maximum Retention Period is 1 day (i.e. one 24-hour period). In Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

- The account default can be overridden with the DATA_RETENTION_TIME_IN_DAYS parameter in the command that creates a Table, Schema, or Database.

- If a Database or Schema has a Retention Period, that period is inherited by default by all objects created in the Database/Schema.

The Data Retention Time can be set when an object is created, as in the example below.
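A sketch of how creation-time settings and inheritance interact. The database, schema, and table names are hypothetical, and the 30-day value assumes Enterprise Edition or higher.

```sql
-- Database-level retention of 30 days.
CREATE DATABASE sales_db DATA_RETENTION_TIME_IN_DAYS = 30;

-- No explicit value: the schema inherits 30 days from the database.
CREATE SCHEMA sales_db.reporting;

-- No explicit value: the table inherits 30 days from the schema.
CREATE TABLE sales_db.reporting.daily_totals (
    sale_date  DATE,
    total      NUMBER(12, 2)
);

-- Explicit value: overrides the inherited retention for this table only.
CREATE TABLE sales_db.reporting.scratch (
    id  NUMBER
)
DATA_RETENTION_TIME_IN_DAYS = 1;
```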


How to Modify Data Retention Period for Snowflake Objects?

When you alter a Table’s Data Retention Period, the new Retention Period affects all active data as well as any data currently in Time Travel. The impact depends on whether you lengthen or shorten the period:

1) Increasing Retention

This causes the data currently in Snowflake Time Travel to be retained for a longer period of time.

For example, if you increase the retention time from 10 to 20 days on a Table, data that would have been removed from Time Travel after 10 days is now kept for an additional 10 days before being moved to Fail-Safe. This does not apply to data that is more than 10 days old and has already been moved to Fail-Safe.

2) Decreasing Retention

- Decreasing Retention reduces the amount of time data is retained in Time Travel.

- For active data modified after the Retention Period is reduced, the new shorter period applies.

- For data that is currently in Time Travel: if the data is still within the new shorter period, it remains in Time Travel.

- If the data is outside the new period, it is moved into Fail-Safe.

For example, if you have a table with a 10-day Retention Period and reduce it to one day, data from days 2 through 10 will be moved to Fail-Safe, leaving just data from day 1 accessible through Time Travel.

However, since the data is moved from Snowflake Time Travel to Fail-Safe by a background operation, the change is not immediately visible. Snowflake guarantees that the data will be moved, but does not specify when the process will complete; until the background operation finishes, the data is still accessible through Time Travel.

Use the appropriate ALTER <object> Command to adjust an object’s Retention duration. For example, the below command is used to adjust the Retention duration for a table:
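A sketch using hypothetical object names; the same parameter is set on whichever object type the ALTER command targets.

```sql
-- Set an explicit retention period on a table.
ALTER TABLE my_table SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- The same parameter can be set on a schema.
ALTER SCHEMA my_schema SET DATA_RETENTION_TIME_IN_DAYS = 10;

-- Remove the explicit setting so the table inherits from its schema again.
ALTER TABLE my_table UNSET DATA_RETENTION_TIME_IN_DAYS;
```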

How to Query Snowflake Time Travel Data?

When you perform any DML operations on a table, Snowflake retains prior versions of the Table data for a defined period of time. Using the AT | BEFORE Clause, you can Query previous versions of the data.

This Clause allows you to query data at or immediately before a specified point in the Table’s history within the Retention Period. The specified point can be time-based (e.g., a Timestamp or a Time Offset from the present) or the ID of a completed statement (e.g. a SELECT or INSERT).

- The query below selects Historical Data from a Table as of the Date and Time indicated by the Timestamp:

- The following Query selects Historical Data from a Table as of 5 minutes ago:

- The following Query collects Historical Data from a Table up to the specified statement’s Modifications, but not including them:
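The three queries described in the list above could look like the following sketch. The table name my_table, the timestamp, and the '<query_id>' placeholder are illustrative assumptions; substitute the ID of a real statement from your query history.

```sql
-- 1) As of a specific date and time.
SELECT *
FROM my_table
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- 2) As of 5 minutes ago (the offset is expressed in seconds).
SELECT *
FROM my_table
  AT(OFFSET => -60*5);

-- 3) Up to, but not including, the changes made by the given statement.
SELECT *
FROM my_table
  BEFORE(STATEMENT => '<query_id>');
```

If the requested point falls outside the table's retention period, or before the table was created, the query fails rather than returning partial data.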

How to Clone Historical Data in Snowflake?

In addition to queries, the AT | BEFORE Clause can be combined with the CLONE keyword in the CREATE command for a Table, Schema, or Database to create a logical duplicate of the object at a specific point in its history.

For example:

- The CREATE TABLE command below generates a Clone of a Table as of the Date and Time indicated by the Timestamp:

- The following CREATE SCHEMA command produces a Clone of a Schema and all of its Objects as they were an hour ago:

- The CREATE DATABASE command produces a Clone of a Database and all of its Objects as they were before the specified statement was completed:
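A sketch of the three clone commands listed above. All object names, the timestamp, and the '<query_id>' placeholder are hypothetical.

```sql
-- Clone a table as of a specific date and time.
CREATE TABLE restored_table CLONE my_table
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- Clone a schema and all of its objects as they were one hour ago.
CREATE SCHEMA restored_schema CLONE my_schema
  AT(OFFSET => -3600);

-- Clone a database as it was before the given statement completed.
CREATE DATABASE restored_db CLONE my_db
  BEFORE(STATEMENT => '<query_id>');
```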

Using UNDROP Command with Snowflake Time Travel: How to Restore Objects?

The following commands can be used to restore a dropped object that has not been purged from the system (i.e. the object is still visible in the SHOW <object_type> HISTORY output):

- UNDROP DATABASE

- UNDROP TABLE

- UNDROP SCHEMA

UNDROP restores the object to its most recent state before the DROP command was issued.

A dropped Database can be restored using the UNDROP command. For example,

Similarly, you can UNDROP Tables and Schemas.
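A sketch of these commands, with hypothetical object names. Each UNDROP is an independent scenario; they are not steps of one procedure.

```sql
-- Dropped databases that are still within their retention period
-- appear in this output.
SHOW DATABASES HISTORY;

-- Restore a dropped database.
UNDROP DATABASE my_db;

-- Restore a dropped schema or table in the same way.
UNDROP SCHEMA my_schema;
UNDROP TABLE my_table;
```

UNDROP fails if an object with the same name already exists, so an object created in the meantime has to be renamed first.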

Snowflake Fail-Safe vs Snowflake Time Travel: What is the Difference?

Fail-Safe ensures that Historical Data is preserved in the event of a System Failure or other Catastrophic Event, such as a Hardware Failure or a Security Incident. Snowflake Time Travel, by contrast, lets you access Historical Data (that is, data that has been updated or removed) yourself at any point within the Retention Period.

Fail-Safe provides a (non-configurable) 7-day period during which Historical Data may be recoverable by Snowflake. This period begins as soon as the Snowflake Time Travel Retention Period ends.

This article has introduced the main features of Snowflake Time Travel to help you make the most of your data.


Overview of Snowflake Time Travel

Consider a scenario where, instead of dropping a backup table, you have accidentally dropped the actual table, or instead of updating a set of records, you have accidentally updated all the records in the table (because you didn’t use a WHERE clause in your UPDATE statement).

What would be your next action after realizing your mistake? You would probably want to go back in time to a point before you executed the incorrect statement, so that you can undo your mistake.

Snowflake provides exactly this feature: you can get back to the data as it existed at a particular point in time. This feature in Snowflake is called Time Travel.

Let us understand more about Snowflake Time Travel in this article with examples.

1. What is Snowflake Time Travel?

Snowflake Time Travel enables accessing historical data that has been changed or deleted at any point within a defined period. It is a powerful CDP (Continuous Data Protection) feature which ensures the maintenance and availability of your historical data.

The following actions can be performed using Snowflake Time Travel within a defined period of time:

- Restore tables, schemas, and databases that have been dropped.

- Query data in the past that has since been updated or deleted.

- Create clones of entire tables, schemas, and databases at or before specific points in the past.

Once the defined period of time has elapsed, the data is moved into Snowflake Fail-Safe and these actions can no longer be performed.

2. Restoring Dropped Objects

A dropped object can be restored within the Snowflake Time Travel retention period using the “UNDROP” command.

Suppose we have a table ‘Employee’ that has been dropped accidentally instead of a backup table.

It can be easily restored using the Snowflake UNDROP command as shown below.
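A sketch of the scenario, assuming the Employee table from the example:

```sql
-- The accidental drop.
DROP TABLE Employee;

-- Optional check: the dropped table is still listed while it is
-- within its retention period.
SHOW TABLES HISTORY LIKE 'Employee';

-- Restore the table.
UNDROP TABLE Employee;
```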

Databases and Schemas can also be restored using the UNDROP command.

Calling UNDROP restores the object to its most recent state before the DROP command was issued.

3. Querying Historical Objects

When unwanted DML operations are performed on a table, the Snowflake Time Travel feature enables querying earlier versions of the data using the AT | BEFORE clause.

The AT | BEFORE clause is specified in the FROM clause immediately after the table name and it determines the point in the past from which historical data is requested for the object.

Let us understand with an example. Consider the table Employee. The table has a field IS_ACTIVE which indicates whether an employee is currently working in the Organization.

The employee ‘Michael’ has left the organization and the field IS_ACTIVE needs to be updated as FALSE. But instead you have updated IS_ACTIVE as FALSE for all the records present in the table.

There are three different ways you could query the historical data using AT | BEFORE Clause.

3.1. OFFSET

“OFFSET” is the time difference in seconds from the present time.

The following query selects historical data from a table as of 5 minutes ago.
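A sketch against the Employee table from the example; the offset is negative because it points into the past.

```sql
-- Employee data as it was 5 minutes (300 seconds) ago.
SELECT *
FROM Employee
  AT(OFFSET => -60*5);
```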

3.2. TIMESTAMP

Use “TIMESTAMP” to get the data at or before a particular date and time.

The following query selects historical data from a table as of the date and time represented by the specified timestamp.
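A sketch with an illustrative timestamp; pick a value shortly before the unwanted update was run.

```sql
-- Employee data as of the given date and time.
SELECT *
FROM Employee
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);
```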

3.3. STATEMENT

“STATEMENT” takes the identifier of a statement (e.g. a query ID) as the reference point.

The following query selects historical data from a table up to, but not including any changes made by the specified statement.

The Query ID used in the statement belongs to the UPDATE statement we executed earlier. The query ID can be obtained from “Open History”.
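As an alternative to the UI, the query ID can also be looked up in SQL. The sketch below assumes the INFORMATION_SCHEMA.QUERY_HISTORY table function is available in the current database and that the faulty statement starts with "update employee"; '<query_id>' is a placeholder for the ID you find.

```sql
-- Find the ID of the recent UPDATE statement on Employee.
SELECT query_id, query_text, start_time
FROM TABLE(information_schema.query_history())
WHERE query_text ILIKE 'update employee%'
ORDER BY start_time DESC
LIMIT 5;

-- Employee data as it was just before that statement ran.
SELECT *
FROM Employee
  BEFORE(STATEMENT => '<query_id>');
```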

4. Cloning Historical Objects

We have seen how to query the historical data. In addition, the AT | BEFORE clause can be used with the CLONE keyword in the CREATE command to create a logical duplicate of the object at a specified point in the object’s history.

The following queries show how to clone a table using AT | BEFORE clause in three different ways using OFFSET, TIMESTAMP and STATEMENT.

To restore the data in the table to a historical state, create a clone using AT | BEFORE clause, drop the actual table and rename the cloned table to the actual table name.
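The sketch below shows the three clone variants and then the restore steps described above. The clone names, the timestamp, and the '<query_id>' placeholder are hypothetical.

```sql
-- Clone using OFFSET: the table as of 5 minutes ago.
CREATE TABLE Employee_clone_offset CLONE Employee
  AT(OFFSET => -60*5);

-- Clone using TIMESTAMP: the table as of a specific date and time.
CREATE TABLE Employee_clone_ts CLONE Employee
  AT(TIMESTAMP => '2024-06-26 09:20:00 -0700'::TIMESTAMP_TZ);

-- Clone using STATEMENT: the table as it was before the faulty UPDATE.
CREATE TABLE Employee_restored CLONE Employee
  BEFORE(STATEMENT => '<query_id>');

-- Restore: drop the damaged table and rename the clone into its place.
DROP TABLE Employee;
ALTER TABLE Employee_restored RENAME TO Employee;
```

It is worth checking the contents of the clone before dropping the original table, since only one of the three clones is needed for the restore.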

5. Data Retention Period

A key component of Snowflake Time Travel is the data retention period.

When data in a table is modified, deleted or the object containing data is dropped, Snowflake preserves the state of the data before the update. The data retention period specifies the number of days for which this historical data is preserved.

Time Travel operations can be performed on the data during this data retention period of the object. When the retention period ends for an object, the historical data is moved into Snowflake Fail-safe.

6. How to find the Time Travel Data Retention period of Snowflake Objects?

The SHOW PARAMETERS command can be used to find the Time Travel retention period of Snowflake objects.

The commands below can be used to find the data retention period of databases, schemas, and tables.
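A sketch of these commands, with hypothetical database and schema names (my_db, my_schema) around the Employee table:

```sql
-- Retention period of a database.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN DATABASE my_db;

-- Retention period of a schema.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN SCHEMA my_db.my_schema;

-- Retention period of a table.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE my_db.my_schema.Employee;
```

In the output, the level column indicates where the effective value was set, which helps distinguish an explicit setting from an inherited one.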

The DATA_RETENTION_TIME_IN_DAYS parameter specifies the number of days to retain the old version of deleted/updated data.

The below image shows that the table Employee has the DATA_RETENTION_TIME_IN_DAYS value set as 1.

7. How to set custom Time-Travel Data Retention period for Snowflake Objects?

Time travel is automatically enabled with the standard, 1-day retention period. However, you may wish to upgrade to Snowflake Enterprise Edition or higher to enable configuring longer data retention periods of up to 90 days for databases, schemas, and tables.

You can configure the data retention period of a table while creating the table as shown below.

To modify the data retention period of an existing table, use the syntax below.
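A sketch of both statements. The Department table and its columns are hypothetical and are used for the CREATE example so that it does not collide with the existing Employee table; values above 1 day assume Enterprise Edition or higher.

```sql
-- Set the retention period while creating a table.
CREATE TABLE Department (
    dept_id    NUMBER,
    dept_name  STRING
)
DATA_RETENTION_TIME_IN_DAYS = 7;

-- Change the retention period of an existing table to 30 days.
ALTER TABLE Employee SET DATA_RETENTION_TIME_IN_DAYS = 30;
```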

The below image shows that the data retention period of table is altered to 30 days.

A retention period of 0 days for an object effectively disables Time Travel for the object.

8. Data Retention Period Rules and Inheritance

Changing the retention period for your account or individual objects changes the value for all lower-level objects that do not have a retention period explicitly set. For example:

- If you change the retention period at the account level, all databases, schemas, and tables that do not have an explicit retention period automatically inherit the new retention period.

- If you change the retention period at the schema level, all tables in the schema that do not have an explicit retention period inherit the new retention period.

Currently, when a database is dropped, the data retention period for child schemas or tables, if explicitly set to be different from the retention of the database, is not honored. The child schemas or tables are retained for the same period of time as the database.

- To honor the data retention period for these child objects (schemas or tables), drop them explicitly before you drop the database or schema.
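The rules above can be illustrated with the following sketch. The object names (my_db, my_schema, audit_log) and the retention values are hypothetical.

```sql
-- Schema-level change: tables in the schema without an explicit
-- retention period inherit the new 14-day value.
ALTER SCHEMA my_db.my_schema SET DATA_RETENTION_TIME_IN_DAYS = 14;

-- A table with an explicit setting keeps its own value.
ALTER TABLE my_db.my_schema.audit_log SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- Drop the child table explicitly first, so that its own 30-day
-- retention is honored, and only then drop the database.
DROP TABLE my_db.my_schema.audit_log;
DROP DATABASE my_db;
```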


Snowflake Time Travel - A Detailed Guide


- Introduction to Time Travel

- Time Travel SQL Extensions

- Data Retention Period

- How to Specify the data retention period for Time Travel?

- What is fail-safe in Snowflake?

- Which Snowflake Edition Provides Time Travel?

- How to Restore Objects?

- Enabling and disabling time travel

- Snowflake Time Travel Storage cost

- Difference between Time Travel and Fail-Safe in Snowflake

Traveling across time would be fantastic! On the other hand, the many time travel movies and series out there consistently caution us about the risks of altering timelines, so perhaps time travel isn't always a good idea. Still, I can think of a few scenarios in which it would be helpful!


Do you wish you could, as a business user, travel across time and observe the evolution of your data without having to restore backups or implement a fully functional data warehouse? Please don't misunderstand me: I'm not saying that a data warehouse isn't necessary. I'm simply saying that the data you require may not be available in your current data warehouse, or you may never have had one and wish you had.

Have you ever accidentally dropped or truncated a table, or deleted rows from it? Hopefully, you had backups to fall back on! It's critical to be extra cautious when using UPDATE and DELETE statements. Developers and database administrators also occasionally find themselves wanting to execute a piece of code and then revert to the snapshot taken before their last test SQL execution.

These are the kinds of circumstances where database time travel can be extremely useful, and Snowflake supports it. Snowflake Time Travel allows you to go back in time and view past data (i.e. data that has been modified or removed). Snowflake uses immutable cloud storage as its data storage layer, which means that it writes a new version of a file rather than changing the file in place. Time travel is one of the new and fascinating possibilities that this way of working opens up.

It is an effective tool for doing the following tasks:

- Restoring data-related objects (tables, schemas, and databases) that may have been removed by accident or on purpose.

- Duplicating and backing up data from previous periods of time.

- Analyzing data manipulation and consumption over a set time.

Introduction to Snowflake Time Travel

Time travel is an interesting feature that allows you to access data from any point within the retention period. If you have an Employee table, for example, and you unintentionally drop it, you can use time travel to go back 5 minutes and retrieve the data.

It can also be used to back up data, compare data, and examine previous data usage over a period of time. As a result, it acts as part of a continuous data protection lifecycle that depends on the data retention period set for each object. It's important to understand the data retention periods available in the different editions. All Snowflake accounts have a standard retention period of one day (24 hours), which is enabled by default. In Snowflake Standard Edition, the retention period can be set to 0 (or unset back to the default of 1 day) at the account and object level. In Snowflake Enterprise Edition and higher, the retention period for permanent databases, schemas, and tables can be set to any value from 0 to 90 days; transient and temporary objects are limited to 0 or 1 day.

Consider the following scenario: You're working on a bug patch and are connected to the PROD database, where you executed an update statement on a table that updated billions of records. On that table, you also conducted additional delete and update logic. You later realized that when using update and delete statements in your SQL query, you forgot to use the necessary where clause.

So, what do you do now? Perhaps you consider restoring the database's most recent backup copy. Alternatively, you might truncate the table and reload the data from the source. Or you inform your boss.

If you're working in a PROD environment, you won't have enough time to restore a backup or reload the data. If you restore a backup copy, any changes made between the last backup and now may be lost. If you do a fresh data load, it can take anywhere from hours to days, depending on the amount of data you have.

At that point, what you really want is to go back in time and get the data as it was just before the first faulty UPDATE or DELETE statement ran.

Snowflake has a capability that lets you do exactly that: Time Travel.

To retrieve or clone historical data, Snowflake Time Travel provides the AT | BEFORE clause, a SQL extension that can be specified in SELECT queries and CREATE ... CLONE commands. The clause takes one of the following parameters to locate the historical data you want to access:

- TIMESTAMP (a specific point in time, given as a timestamp).

- OFFSET (time difference from the current time in seconds).

- STATEMENT (statement's identifier, e.g. query ID).
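
As a rough illustration of these parameters, the statements below query and clone a table at earlier points in time. The table names (`employee`, `employee_restored`), the `<query_id>` placeholder, and the timestamp value are assumptions made for this sketch. The TIMESTAMP form shown last is another parameter the clause accepts.

```sql
-- Query the table as it existed 5 minutes ago.
-- OFFSET is in seconds; a negative value points into the past.
SELECT * FROM employee AT(OFFSET => -60*5);

-- Query the table as it was immediately before a given statement ran.
-- Replace the placeholder with the query ID of the faulty UPDATE or DELETE.
SELECT * FROM employee BEFORE(STATEMENT => '<query_id>');

-- Clone the table as it existed just before that statement,
-- leaving the current table untouched.
CREATE TABLE employee_restored CLONE employee
  BEFORE(STATEMENT => '<query_id>');

-- Query the table as of a specific timestamp (placeholder value).
SELECT * FROM employee
  AT(TIMESTAMP => '2024-01-15 08:00:00 -0800'::TIMESTAMP_TZ);
```

Cloning into a new table first makes it possible to compare the old and current rows before deciding how to repair the original.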

What is Data Retention Period For Snowflake Time Travel?

Time Travel relies heavily on the data retention period. When a user changes a table, Snowflake preserves the state of the data as it was before the change. That earlier state is kept for a set amount of time, known as the Time Travel data retention period.

For all Snowflake accounts, the default data retention period is 1 day. Standard Edition accounts can set it to 0 or 1 day, while Enterprise Edition and higher accounts can set it anywhere from 0 to 90 days.

How to Specify the Data Retention Period For Time Travel?

The data retention period can be specified in the following ways:

- Users with the ACCOUNTADMIN role can use the DATA_RETENTION_TIME_IN_DAYS object parameter to set the default data retention period for the account.

- The same DATA_RETENTION_TIME_IN_DAYS parameter can be specified when creating a database, schema, or individual table to override the default.

- The data retention period for a database, schema, or table can be changed at any time.
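
A minimal sketch of these three options follows. The object names (`sales_db`, `orders`) and the chosen values are hypothetical, and values above 1 assume Enterprise Edition or higher.

```sql
-- Account-level default (requires the ACCOUNTADMIN role).
USE ROLE ACCOUNTADMIN;
ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- Override the default when creating a database or a table.
CREATE DATABASE sales_db DATA_RETENTION_TIME_IN_DAYS = 30;
CREATE TABLE sales_db.public.orders (
  order_id   NUMBER,
  order_date DATE
) DATA_RETENTION_TIME_IN_DAYS = 7;

-- Change the retention period of an existing table later on.
ALTER TABLE sales_db.public.orders SET DATA_RETENTION_TIME_IN_DAYS = 14;
```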

You can either increase or decrease the data retention duration.

- Increasing Retention: Data currently in Time Travel is retained for the longer period.

- Decreasing Retention: Data currently in Time Travel is retained only for the shorter period. Data that is already older than the new period moves into Fail-safe.

What is Fail-safe in Snowflake?

Fail-safe provides a (non-configurable) 7-day period during which Snowflake may be able to recover historical data. This period begins as soon as the Time Travel retention period ends.

It is a data recovery service that is provided on a best-effort basis and should only be used after all other recovery options have been exhausted. Fail-safe is not a means for users to access historical data after the Time Travel retention period has ended. It is intended only for Snowflake to recover data that has been lost or damaged as a result of extreme operational failures. Fail-safe data recovery may take from several hours to several days.

Which Snowflake Edition provides Time Travel?

These features are included as standard for all accounts and require no additional license; however, standard Time Travel is limited to one day. Extended Time Travel (up to 90 days) requires Snowflake Enterprise Edition or higher. In addition, both Time Travel and Fail-safe require additional data storage, which comes with a cost.

The UNDROP command restores a dropped table, schema, or database that has not yet been purged from the system (i.e. has not yet moved into Snowflake Fail-safe). UNDROP restores the object to its most recent state before it was dropped.
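
For illustration, the statements below show one way UNDROP might be used. The object names are hypothetical, and each statement is an independent example rather than a sequence to run in order.

```sql
-- List tables in a schema, including dropped tables that are
-- still within their retention period.
SHOW TABLES HISTORY LIKE 'employee%' IN sales_db.public;

-- Restore a dropped table.
UNDROP TABLE sales_db.public.employee;

-- Restore a dropped schema.
UNDROP SCHEMA sales_db.staging;

-- Restore a dropped database.
UNDROP DATABASE sales_db;
```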

Snowflake's Time Travel feature is enabled by default in all editions, with a 1-day data retention period. In Enterprise Edition and higher, a longer data retention period of up to 90 days can be configured. Time Travel can be disabled for individual databases, schemas, and tables by setting DATA_RETENTION_TIME_IN_DAYS to 0. Use the appropriate ALTER command to adjust an object's retention period.
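
As a small sketch of disabling Time Travel on one object and checking the result, assuming a hypothetical table named `orders`:

```sql
-- Disable Time Travel for a single table.
ALTER TABLE orders SET DATA_RETENTION_TIME_IN_DAYS = 0;

-- Check the value currently in effect for the table.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE orders;

-- Remove the table-level override so the table inherits
-- its parent's setting again.
ALTER TABLE orders UNSET DATA_RETENTION_TIME_IN_DAYS;
```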

Snowflake Time Travel Storage Cost

During both the Time Travel and Fail-safe phases, storage charges are incurred for retaining prior data.

Snowflake keeps only the information needed to restore the individual table rows that were updated or deleted, which reduces the amount of storage required for historical data. As a result, storage usage is calculated as a percentage of the table that changed. Full copies of tables are kept only when tables are dropped or truncated.

The charges for storage are assessed for each 24-hour period (i.e. one day) starting from the time the data was modified. The number of days historical data is kept is determined by the table type and the table's Time Travel retention period.
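
One way to see how much storage Time Travel and Fail-safe are currently using is to query the `TABLE_STORAGE_METRICS` view in the shared `SNOWFLAKE` database. This is a sketch that assumes your role has access to `SNOWFLAKE.ACCOUNT_USAGE`; the filter and the row limit are arbitrary choices, and the view's data can lag behind the current state.

```sql
-- Tables with the most bytes held for Time Travel and Fail-safe.
SELECT table_catalog,
       table_schema,
       table_name,
       active_bytes,
       time_travel_bytes,
       failsafe_bytes
FROM snowflake.account_usage.table_storage_metrics
WHERE time_travel_bytes > 0
   OR failsafe_bytes > 0
ORDER BY time_travel_bytes DESC
LIMIT 20;
```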

Difference Between Time Travel and Fail-Safe in Snowflake

Time Travel lets users query data as it appeared at an earlier point in time and restore a table to a former state. Fail-safe, by contrast, is used internally by Snowflake to recover data in the event of an extreme operational failure, such as a hardware failure.

Snowflake's Time Travel feature is a convenient way to recover data that was deleted or lost by accident. Fail-safe adds a further 7-day recovery window that begins immediately after the Time Travel retention period ends; storage for that window is charged as well. I hope you found some useful information in this Snowflake Time Travel blog.

Madhuri is a Senior Content Creator at MindMajix. She has written about a range of topics on various technologies, including Splunk, TensorFlow, Selenium, and CEH. She spends most of her time researching technology and startups. Connect with her via LinkedIn and Twitter.

Copyright © 2013 - 2024 MindMajix Technologies
