
Traveling Salesperson Problem


A salesperson needs to visit a set of cities to sell their goods. They know how many cities they need to go to and the distance between each pair of cities. In what order should the salesperson visit each city exactly once so that they minimize their travel time and end their journey in their city of origin?

The traveling salesperson problem is one of the oldest and most studied problems in computer science, and it generalizes the Hamiltonian Circuit Problem. It has important implications in complexity theory and the P versus NP problem because its decision version is NP-Complete (the optimization version is NP-Hard). This means that no polynomial-time algorithm for it is known, and none exists unless \(P = NP\); every known exact algorithm takes superpolynomial time in the worst case. In other words, as the number of vertices increases linearly, the computation time of the known exact algorithms increases exponentially.

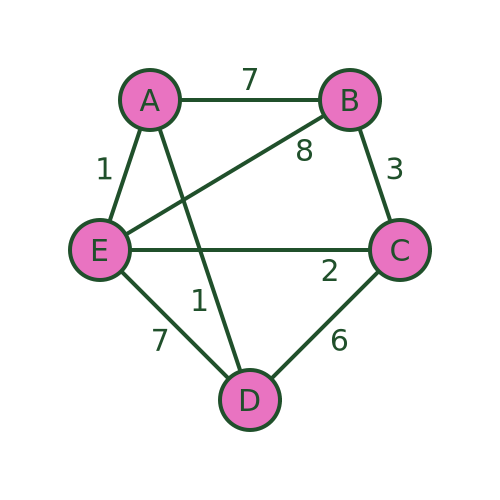

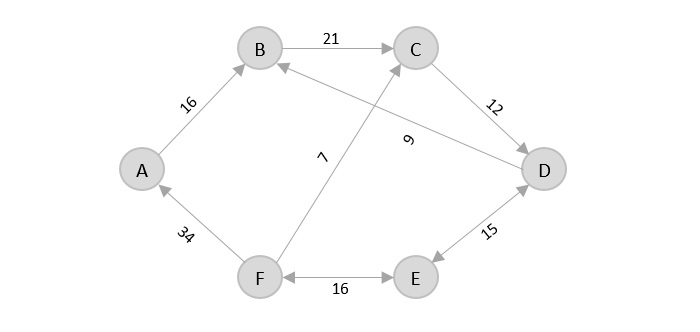

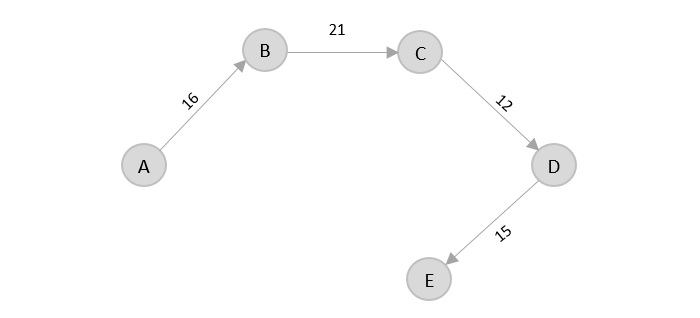

The following image is a simple example of a network of cities connected by edges of a specific distance. The origin city is also marked.

Network of cities

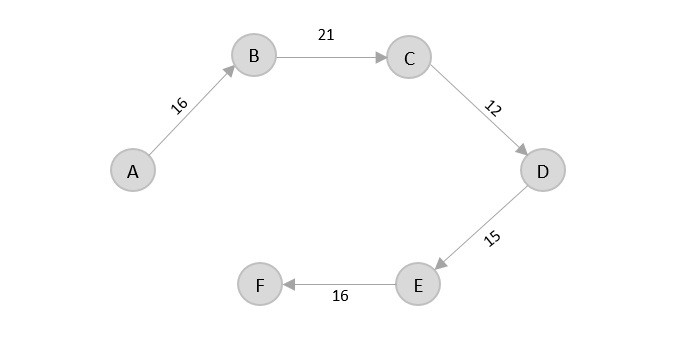

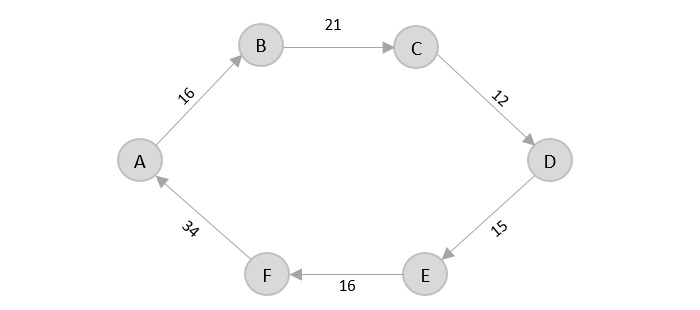

Here is the solution for that network. It has a total distance of only 14; any other route the salesperson can take has a length of more than 14.

Relationship to Graphs


The traveling salesperson problem can be modeled as a graph. Specifically, it is typically a directed, weighted graph. Each city acts as a vertex and each path between cities is an edge. Each edge carries a weight, which stands in for the distance. In this model, the goal of the traveling salesperson problem can be defined as finding a cycle that visits every vertex exactly once, returns to the original vertex, and minimizes total weight.

To that end, many graph algorithms can be applied to this model. Search algorithms like breadth-first search (BFS), depth-first search (DFS), and Dijkstra's shortest path algorithm can certainly be used; however, they do not take into consideration the fact that every vertex must be visited.

The Traveling Salesperson Problem (TSP) is notoriously hard to solve exactly. The most direct method, brute force, tries every single possible path and keeps the fastest one, and it is extremely computationally intensive. Try this conceptual question to see if you have a grasp of how hard it is to solve.

For a fully connected map with \(n\) cities, how many total paths are possible for the traveling salesperson?

Answer: There are \((n-1)!\) total paths the salesperson can take. The computation needed to solve the problem this way grows far too quickly to be a reasonable approach. If the map has only 5 cities, there are \(4!\), or 24, paths. However, if the size of the map is increased to 20 cities, there will be \(19! \approx 1.22 \cdot 10^{17}\) paths!

The brute-force approach to TSP would go like this:

- Find all possible paths.

- Find the cost of every path.

- Choose the path with the lowest cost.
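The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `brute_force_tsp` and the 4-city matrix `example` are made up for this sketch, and the cities are assumed to be numbered from 0 with a full distance matrix.

```python
from itertools import permutations


def brute_force_tsp(dist, start=0):
    """Try every ordering of the non-start cities and keep the cheapest tour."""
    n = len(dist)
    others = [city for city in range(n) if city != start]
    best_cost, best_tour = float("inf"), None
    for order in permutations(others):  # (n-1)! orderings in total
        tour = (start, *order, start)
        # Cost of a tour is the sum of its consecutive legs.
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


# Hypothetical symmetric 4-city distance matrix, for illustration only.
example = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

print(brute_force_tsp(example))  # (18, (0, 1, 3, 2, 0))
```

The loop body is cheap; the cost comes entirely from the number of orderings, which is why this only works for very small maps.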

A genuinely greedy approach would instead be: at every step in the algorithm, choose the cheapest available next edge. This runs much faster, but it is not guaranteed to find the best answer, or any answer at all, since it might hit a dead end.

For NP-Hard problems like TSP (the NP-Complete problems are a subset of the NP-Hard problems), exact solutions can only be computed in a reasonable amount of time for small input sizes (maps with few cities). Otherwise, the best we can do is use a heuristic that steers the search toward good solutions. However, heuristic solutions are not guaranteed to be optimal; each heuristic trades away some solution quality or generality for speed.

Small input sizes

As described in a previous section, trying every possible path has a complexity of \(O(n!)\). However, there are some approaches that decrease this computation time.

The Held-Karp Algorithm is one of the earliest applications of dynamic programming. Its complexity of \(O(n^2 2^n)\) is much lower than the \(O(n!)\) of exhaustive enumeration, although it is still exponential. The key observation is that every subpath of an optimal path is itself an optimal path. So, computing an optimal path amounts to computing many smaller optimal subpaths once each and combining them.
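To make the dynamic-programming idea concrete, here is a compact sketch of a Held-Karp style recurrence. It assumes cities numbered from 0, a full distance matrix `dist`, and a tour that starts and ends at city 0; the names `held_karp` and `example` are illustrative only. Subsets of cities are stored as bitmasks.

```python
from itertools import combinations


def held_karp(dist):
    """Return the cost of the cheapest tour that starts and ends at city 0."""
    n = len(dist)
    if n == 1:
        return 0
    # best[(mask, j)]: cheapest way to leave city 0, visit exactly the
    # cities in `mask` (a bitmask over cities 1..n-1), and finish at city j.
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev = mask & ~(1 << j)  # same subset without the end city
                best[(mask, j)] = min(
                    best[(prev, k)] + dist[k][j] for k in subset if k != j
                )
    full = (1 << n) - 2  # every city except city 0
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))


# Hypothetical symmetric 4-city distance matrix, for illustration only.
example = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

print(held_karp(example))  # 18
```

There are about \(2^n\) subsets, \(n\) possible end cities for each, and \(n\) candidates for the previous city, which is where the \(O(n^2 2^n)\) bound comes from. The table also needs memory exponential in \(n\), so this remains a small-input method.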

Heuristics are a way of ranking possible next steps in an algorithm in the hopes of cutting down computation time for the entire algorithm. They are often a tradeoff of some attribute - such as completeness, accuracy, or precision - in favor of speed. Heuristics exist for the traveling salesperson problem as well.

The simplest heuristic for this problem is the greedy (nearest-neighbor) heuristic: at each step of the network traversal, choose the best next step. In other words, always go to the closest city that you have not yet visited. This heuristic is appealing because it is simple and intuitive, and it is even used in practice sometimes; however, there are heuristics that are proven to be more effective.

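Christofides' algorithm is another heuristic. For TSP instances whose distances satisfy the triangle inequality, it produces a tour of at most 1.5 times the optimal weight. The algorithm first finds a minimum spanning tree for the network. Next, it computes a minimum-weight perfect matching on the cities of odd degree in that tree (meaning they have an odd number of tree edges coming out of them), combines the tree with the matching, finds an Eulerian circuit in the result, and converts that circuit back to a TSP tour by skipping cities that have already been visited.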

Even though it is typically impractical to solve large TSP instances optimally, there are special cases of TSP that can be solved or approximated well if certain conditions hold.

The metric-TSP is an instance of TSP that satisfies this condition: The distance from city A to city B is less than or equal to the distance from city A to city C plus the distance from city C to city B. Or,

\[distance_{AB} \leq distance_{AC} + distance_{CB}\]

This condition holds for straight-line distances in the real world, but it can't be expected to hold for every TSP instance. With this inequality in place, simple approximation algorithms are guaranteed to return a tour no more than twice as long as the optimal one. Even better, we can bound the solution to a \(3/2\) approximation by using Christofides' algorithm.
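The text does not say which algorithm achieves the factor of two. One standard candidate, assumed here, is the "double-tree" heuristic: build a minimum spanning tree, walk it in preorder, and skip cities already seen. The sketch below uses Prim's algorithm for the tree; the function names and the unit-square test points are illustrative, not taken from the source.

```python
import math


def double_tree_tour(dist):
    """MST-based tour; at most twice optimal when dist obeys the triangle inequality."""
    n = len(dist)
    in_tree = [False] * n
    parent = [None] * n
    key = [math.inf] * n  # cheapest known connection of each city to the tree
    key[0] = 0
    children = [[] for _ in range(n)]
    for _ in range(n):  # Prim's algorithm, growing the tree from city 0
        u = min((c for c in range(n) if not in_tree[c]), key=lambda c: key[c])
        in_tree[u] = True
        if parent[u] is not None:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and dist[u][v] < key[v]:
                key[v], parent[v] = dist[u][v], u
    # A preorder walk of the tree visits every city once; repeats are shortcut.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    return tour + [0]


def tour_cost(dist, tour):
    return sum(dist[a][b] for a, b in zip(tour, tour[1:]))


# Four cities on a unit square (Euclidean, so the triangle inequality holds).
points = [(0, 0), (0, 1), (1, 1), (1, 0)]
square = [[math.dist(p, q) for q in points] for p in points]
tour = double_tree_tour(square)
print(tour, tour_cost(square, tour))
```

The guarantee follows because the spanning tree costs no more than the optimal tour, walking it twice costs at most double that, and shortcutting never increases the length when the triangle inequality holds.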

The euclidean-TSP places an even stricter constraint on the TSP input: all edge weights in the network must be euclidean distances between the cities. Approximation algorithms that use euclidean minimum spanning trees have reduced the runtime for euclidean-TSP, even though the problem remains NP-hard. In practice, though, simpler heuristics are still used.

The P versus NP problem is one of the leading questions in modern computer science. It asks whether or not every problem whose solution can be verified in polynomial time by a computer can also be solved in polynomial time by a computer. No polynomial-time algorithm for TSP is known, and it is widely conjectured that none exists. However, a proposed solution can be verified in polynomial time when TSP is phrased as a decision problem: given a graph and an integer \(x\), decide whether there is a tour of length \(x\) or less. Given a proposed tour, it is simple to check that it visits every vertex exactly once and that its total length is less than or equal to \(x\).
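The verification step is short enough to write out. The sketch below assumes a full distance matrix and a proposed tour given as a list of city indices without the repeated start city; `verify_tour` and `example` are illustrative names.

```python
def verify_tour(dist, tour, x):
    """Check a proposed answer to the decision version of TSP in O(n log n) time."""
    n = len(dist)
    # The tour must list every city exactly once.
    if len(tour) != n or sorted(tour) != list(range(n)):
        return False
    # Sum the legs, including the leg that returns to the first city.
    total = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return total <= x


# Hypothetical symmetric 4-city distance matrix, for illustration only.
example = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

print(verify_tour(example, [0, 1, 3, 2], 18))  # True
print(verify_tour(example, [0, 1, 3, 2], 17))  # False
```

Checking a given tour is fast; the hard part, for which no polynomial-time method is known, is finding a tour that passes the check.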

The traveling salesperson problem, like other problems that are NP-Complete, is very important to this debate. That is because if a polynomial-time solution can be found for any one of these problems, then \(P = NP\). As it stands, most scientists believe that \(P \ne NP\).

The traveling salesperson problem has many applications. The obvious ones are in the transportation space. Planning delivery routes or flight patterns, for example, would benefit immensely from breakthroughs in this problem or in the P versus NP problem.

However, this same logic can be applied to many facets of planning as well. In robotics, for instance, planning the order in which to drill holes in a circuit board is a complex task due to the sheer number of holes that must be drilled.

The most important application of TSP, however, comes from the fact that it is an NP-Complete problem. Every NP-Complete problem can be translated into any other in polynomial time, so the practical applications of TSP extend to the applications of any problem that is NP-Complete. If there is a significant breakthrough for TSP, such as a polynomial-time algorithm, that same breakthrough carries over to every problem in the NP-Complete class.


Traveling salesman problem

This web page is a duplicate of https://optimization.mccormick.northwestern.edu/index.php/Traveling_salesman_problems

Author: Jessica Yu (ChE 345 Spring 2014)

Steward: Dajun Yue, Fengqi You

The traveling salesman problem (TSP) is a widely studied combinatorial optimization problem, which, given a set of cities and a cost to travel from one city to another, seeks to identify the tour that will allow a salesman to visit each city only once, starting and ending in the same city, at the minimum cost. 1

- 2.1 Graph Theory

- 2.2 Classifications of the TSP

- 2.3 Variations of the TSP

- 3.1 aTSP ILP Formulation

- 3.2 sTSP ILP Formulation

- 4.1 Exact algorithms

- 4.2.1 Tour construction procedures

- 4.2.2 Tour improvement procedures

- 5 Applications

- 7 References

The origins of the traveling salesman problem are obscure; it is mentioned in an 1832 manual for traveling salesmen, which included example tours of 45 German cities but gave no mathematical consideration. 2 W. R. Hamilton and Thomas Kirkman devised mathematical formulations of the problem in the 1800s. 2

It is believed that the general form was first studied by Karl Menger in Vienna and Harvard in the 1930s. 2,3

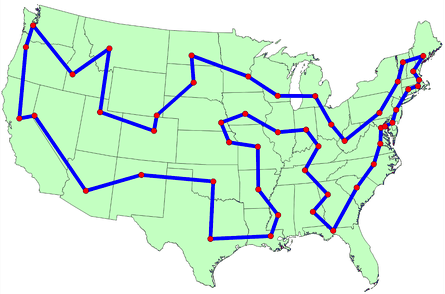

Hassler Whitney, who was working on his Ph.D. research at Harvard when Menger was a visiting lecturer, is believed to have posed the problem of finding the shortest route between the 48 states of the United States during either his 1931-1932 or 1934 seminar talks. 2 There is also uncertainty surrounding the individual who coined the name “traveling salesman problem” for Whitney’s problem. 2

The problem became increasingly popular in the 1950s and 1960s. Notably, George Dantzig, Delbert R. Fulkerson, and Selmer M. Johnson at the RAND Corporation in Santa Monica, California solved the 48 state problem by formulating it as a linear programming problem. 2 The methods described in the paper set the foundation for future work in combinatorial optimization, especially highlighting the importance of cutting planes. 2,4

In the early 1970s, the concept of P vs. NP problems created buzz in the theoretical computer science community. In 1972, Richard Karp demonstrated that the Hamiltonian cycle problem was NP-complete, implying that the traveling salesman problem was NP-hard. 4

Increasingly sophisticated codes led to rapid increases in the sizes of the traveling salesman problems solved. Dantzig, Fulkerson, and Johnson had solved a 48 city instance of the problem in 1954. 5 Martin Grötschel more than doubled this 23 years later, solving a 120 city instance in 1977. 5 Enoch Crowder and Manfred W. Padberg again more than doubled this in just 3 years, with a 318 city solution. 5

In 1987, rapid improvements were made, culminating in a 2,392 city solution by Padberg and Giovanni Rinaldi. In the following two decades, David L. Applegate, Robert E. Bixby, Vašek Chvátal, and William J. Cook led the cutting edge, solving a 7,397 city instance in 1994 up to the current largest solved problem of 24,978 cities in 2004. 5

Description

Graph Theory

In the context of the traveling salesman problem, the vertices correspond to cities and the edges correspond to the paths between those cities. When modeled as a complete graph, paths that do not exist between cities can be modeled as edges of very large cost without loss of generality. 6 Minimizing the sum of the costs of a Hamiltonian cycle is equivalent to identifying the shortest route in which each city is visited only once.

Classifications of the TSP

The TSP can be divided into two classes depending on the nature of the cost matrix. 3,6

- Symmetric TSP (sTSP): applies when the distance between cities is the same in both directions

- Asymmetric TSP (aTSP): applies when there are differences in distances (e.g. one-way streets)

An aTSP can be formulated as an sTSP by doubling the number of nodes. 6

Variations of the TSP

Formulation

The objective function is then given by

To ensure that the result is a valid tour, several constraints must be added. 1,3

There are several other formulations for the subtour elimination constraint, including circuit packing constraints, MTZ constraints, and network flow constraints.

aTSP ILP Formulation

The integer linear programming formulation for an aTSP is given by
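As a sketch, one standard way to write such a formulation is the Dantzig-Fulkerson-Johnson form, with one constraint per city for leaving, one for entering, and a family of subtour elimination constraints. The notation is an assumption made here rather than taken from the source: \(c_{ij}\) is the cost of going from city \(i\) to city \(j\), and \(x_{ij}\) is 1 if the tour uses that arc and 0 otherwise.

\[
\begin{aligned}
\min \quad & \sum_{i=1}^{n} \sum_{j \ne i} c_{ij} x_{ij} \\
\text{s.t.} \quad & \sum_{j \ne i} x_{ij} = 1, && i = 1, \dots, n \\
& \sum_{i \ne j} x_{ij} = 1, && j = 1, \dots, n \\
& \sum_{i \in S} \sum_{j \in S,\, j \ne i} x_{ij} \le |S| - 1, && \forall S \subset \{1, \dots, n\},\ 2 \le |S| \le n - 1 \\
& x_{ij} \in \{0, 1\}, && i \ne j
\end{aligned}
\]

The first two constraint families force exactly one departure from and one arrival at every city. The third rules out solutions made of several disjoint loops, at the price of a number of constraints that is exponential in \(n\).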

sTSP ILP Formulation

The symmetric case is a special case of the asymmetric case and the above formulation is valid. 3, 6 The integer linear programming formulation for an sTSP is given by

Exact algorithms

Branch-and-bound algorithms are commonly used to find solutions for TSPs. 7 The ILP is first relaxed and solved as an LP using the Simplex method, then feasibility is regained by enumeration of the integer variables. 7

Other exact solution methods include the cutting plane method and branch-and-cut. 8

Heuristic algorithms

Given that the TSP is an NP-hard problem, heuristic algorithms are commonly used to give approximate solutions that are good, though not necessarily optimal. These algorithms do not guarantee an optimal solution, but give near-optimal solutions in reasonable computational time. 3 The Held-Karp lower bound can be calculated and used to judge the performance of a heuristic algorithm. 3

There are two general heuristic classifications 7 :

- Tour construction procedures where a solution is gradually built by adding a new vertex at each step

- Tour improvement procedures where a feasible solution is improved upon by performing various exchanges

The best methods tend to be composite algorithms that combine these features. 7

Tour construction procedures

Tour improvement procedures

Applications

The importance of the traveling salesman problem is twofold. First is its ubiquity as a platform for the study of general methods that can then be applied to a variety of other discrete optimization problems. 5 Second is its diverse range of applications, in fields including mathematics, computer science, genetics, and engineering. 5,6

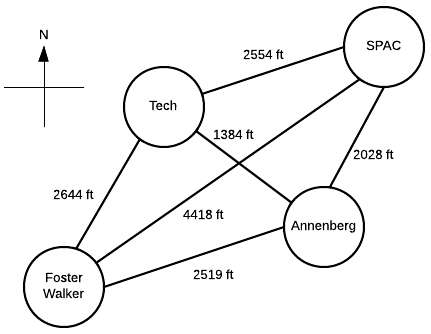

Suppose a Northwestern student, who lives in Foster-Walker , has to accomplish the following tasks:

- Drop off a homework set at Tech

- Work out at SPAC

- Complete a group project at Annenberg

Distances between buildings can be found using Google Maps. Note that there is a particularly strong western wind and walking east takes 1.5 times as long.

It is the middle of winter and the student wants to spend the least possible time walking. Determine the path the student should take in order to minimize walking time, starting and ending at Foster-Walker.

Start with the cost matrix (with altered distances taken into account):

Method 1: Complete Enumeration

All possible paths are considered and the path of least cost is the optimal solution. Note that this method is only feasible given the small size of the problem.

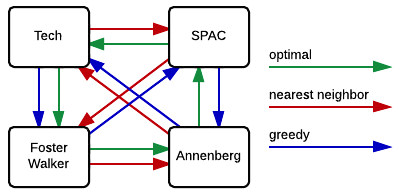

From inspection, we see that Path 4 is the shortest. So, the student should walk 2.28 miles in the following order: Foster-Walker → Annenberg → SPAC → Tech → Foster-Walker

Method 2: Nearest neighbor

Starting from Foster-Walker, the next building is simply the closest building that has not yet been visited. With only four nodes, this can be done by inspection:

- Smallest distance from Foster-Walker is to Annenberg

- Smallest distance from Annenberg is to Tech

- Smallest distance from Tech is to Annenberg ( creates a subtour, therefore skip )

- Next smallest distance from Tech is to Foster-Walker ( creates a subtour, therefore skip )

- Next smallest distance from Tech is to SPAC

- Smallest distance from SPAC is to Annenberg ( creates a subtour, therefore skip )

- Next smallest distance from SPAC is to Tech ( creates a subtour, therefore skip )

- Next smallest distance from SPAC is to Foster-Walker

So, the student would walk 2.54 miles in the following order: Foster-Walker → Annenberg → Tech → SPAC → Foster-Walker

Method 3: Greedy

With this method, the shortest paths that do not create a subtour are selected until a complete tour is created.

- Smallest distance is Annenberg → Tech

- Next smallest is SPAC → Annenberg

- Next smallest is Tech → Annenberg ( creates a subtour, therefore skip )

- Next smallest is Annenberg → Foster-Walker ( creates a subtour, therefore skip )

- Next smallest is SPAC → Tech ( creates a subtour, therefore skip )

- Next smallest is Tech → Foster-Walker

- Next smallest is Annenberg → SPAC ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → Annenberg ( creates a subtour, therefore skip )

- Next smallest is Tech → SPAC ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → Tech ( creates a subtour, therefore skip )

- Next smallest is SPAC → Foster-Walker ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → SPAC

So, the student would walk 2.40 miles in the following order: Foster-Walker → SPAC → Annenberg → Tech → Foster-Walker
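The selection rule used in Method 3 can be sketched as follows. The cost matrix below is hypothetical, chosen only to exercise the code; it is not the walking-time matrix of the student example. Arcs are directed, so each building may have at most one outgoing and one incoming arc, and an arc that would close a loop is skipped unless it completes the full tour.

```python
def greedy_edge_tour(cost, start=0):
    """Build a tour by repeatedly taking the cheapest arc that keeps it valid."""
    n = len(cost)
    arcs = sorted((cost[u][v], u, v) for u in range(n) for v in range(n) if u != v)
    succ, pred = {}, {}  # chosen outgoing / incoming arc for each node
    for _, u, v in arcs:
        if u in succ or v in pred:
            continue  # u already has a departure or v already has an arrival
        # Follow the chosen arcs from v; ending at u means u -> v closes a loop.
        end = v
        while end in succ:
            end = succ[end]
        if end == u and len(succ) < n - 1:
            continue  # would create a subtour, therefore skip
        succ[u], pred[v] = v, u
        if len(succ) == n:
            break
    tour = [start]
    while len(tour) < n:
        tour.append(succ[tour[-1]])
    tour.append(start)
    return tour, sum(cost[a][b] for a, b in zip(tour, tour[1:]))


# Hypothetical asymmetric 4-node cost matrix, for illustration only.
cost = [
    [0, 5, 9, 4],
    [6, 0, 2, 7],
    [8, 3, 0, 1],
    [5, 6, 2, 0],
]

print(greedy_edge_tour(cost))  # ([0, 1, 2, 3, 0], 13)
```

On this particular matrix the greedy tour happens to be optimal, but as the worked example shows, that is not guaranteed in general.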

As we can see in the figure to the right, the heuristic methods did not give the optimal solution. That is not to say that heuristics can never give the optimal solution, just that it is not guaranteed.

Both the optimal and the nearest neighbor algorithms suggest that Annenberg is the optimal first building to visit. However, the optimal solution then goes to SPAC, while both heuristic methods suggest Tech. This is in part due to the large cost of SPAC → Foster-Walker. The heuristic algorithms cannot take this future cost into account, and therefore fall into that local optimum.

We note that the nearest neighbor and greedy algorithms give solutions that are 11.4% and 5.3%, respectively, above the optimal solution. In the scale of this problem, this corresponds to fractions of a mile. We also note that neither heuristic gave the worst case result, Foster-Walker → SPAC → Tech → Annenberg → Foster-Walker.
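These percentages follow directly from the tour lengths quoted above (2.28 miles for the optimal tour, 2.54 miles for nearest neighbor, and 2.40 miles for greedy):

\[
\frac{2.54 - 2.28}{2.28} \approx 0.114, \qquad \frac{2.40 - 2.28}{2.28} \approx 0.053
\]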

Only tour building heuristics were used. Combined with a tour improvement algorithm (such as 2-opt or simulated annealing), we imagine that we may be able to locate solutions that are closer to the optimum.
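As an illustration of what a tour improvement step looks like, here is a minimal 2-opt sketch. It is not the code behind this example: the matrix is hypothetical, the names `two_opt` and `tour_cost` are made up, and for simplicity each candidate tour is re-costed in full, which also keeps the sketch valid when costs are asymmetric.

```python
def tour_cost(cost, tour):
    """Cost of a closed tour given as a list of nodes without the repeated start."""
    return sum(cost[a][b] for a, b in zip(tour, tour[1:] + tour[:1]))


def two_opt(cost, tour):
    """Reverse segments of the tour while doing so lowers the total cost."""
    best = list(tour)
    best_cost = tour_cost(cost, best)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):  # position 0 (the start) stays fixed
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                candidate_cost = tour_cost(cost, candidate)
                if candidate_cost < best_cost:
                    best, best_cost, improved = candidate, candidate_cost, True
    return best, best_cost


# Hypothetical symmetric 4-node cost matrix, for illustration only.
cost = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

print(two_opt(cost, [0, 2, 1, 3]))  # ([0, 1, 3, 2], 18)
```

In a composite method, the starting tour passed to `two_opt` would come from a construction heuristic such as nearest neighbor or greedy.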

The exact algorithm used was complete enumeration, but we note that this is impractical even for 7 nodes (6! or 720 different possibilities). Commonly, the problem would be formulated and solved as an ILP to obtain exact solutions.

1. Vanderbei, R. J. (2001). Linear programming: Foundations and extensions (2nd ed.). Boston: Kluwer Academic.

2. Schrijver, A. (n.d.). On the history of combinatorial optimization (till 1960).

3. Matai, R., Singh, S., & Lal, M. (2010). Traveling salesman problem: An overview of applications, formulations, and solution approaches. In D. Davendra (Ed.), Traveling Salesman Problem, Theory and Applications. InTech.

4. Junger, M., Liebling, T., Naddef, D., Nemhauser, G., Pulleyblank, W., Reinelt, G., Rinaldi, G., & Wolsey, L. (Eds.). (2009). 50 years of integer programming, 1958-2008: The early years and state-of-the-art surveys. Heidelberg: Springer.

5. Cook, W. (2007). History of the TSP. The Traveling Salesman Problem. Retrieved from http://www.math.uwaterloo.ca/tsp/history/index.htm

6. Punnen, A. P. (2002). The traveling salesman problem: Applications, formulations and variations. In G. Gutin & A. P. Punnen (Eds.), The Traveling Salesman Problem and its Variations. Netherlands: Kluwer Academic Publishers.

7. Laporte, G. (1992). The traveling salesman problem: An overview of exact and approximate algorithms. European Journal of Operational Research, 59 (2), 231–247.

8. Goyal, S. (n.d.). A survey on travelling salesman problem.


Traveling Salesman Problem

The traveling salesman problem is mentioned by the character Larry Fleinhardt in the Season 2 episode " Rampage " (2006) of the television crime drama NUMB3RS .


Cite this as:

Weisstein, Eric W. "Traveling Salesman Problem." From MathWorld --A Wolfram Web Resource. https://mathworld.wolfram.com/TravelingSalesmanProblem.html


Traveling Salesman Problem (TSP)

- Reference work entry

- First Online: 01 January 2017

- pp 2334–2337


- Rhett Wilfahrt 4 &

- Sangho Kim 5


Hamiltonian Cycle with the Least Weight ; Traveling Salesperson Problem ; TSP

The traveling salesperson problem is a well studied and famous problem in the area of computer science. In brief, consider a salesperson who wants to travel around the country from city to city to sell his wares. A simple example is shown in Fig. 1 .

An example of a city map for the traveling salesman problem



Author information

Authors and affiliations.

Department of Computer Science and Engineering, University of Minnesota, Minneapolis, MN, USA

Rhett Wilfahrt

Rancho Cucamonga, 7951 Etiwanda Ave. #14102, 91739, CA, USA


Editor information

Editors and affiliations.

University of Minnesota, Minneapolis, MN, USA

Shashi Shekhar

Management Science and Information Systems Department, Rutgers Business School, Rutgers, The State University of New Jersey, Newark, NJ, USA

Department of Management Sciences, Tippie College of Business, University of Iowa, Iowa City, IA, USA


Copyright information

© 2017 Springer International Publishing AG

About this entry

Cite this entry.

Wilfahrt, R., Kim, S. (2017). Traveling Salesman Problem (TSP). In: Shekhar, S., Xiong, H., Zhou, X. (eds) Encyclopedia of GIS. Springer, Cham. https://doi.org/10.1007/978-3-319-17885-1_1406


DOI : https://doi.org/10.1007/978-3-319-17885-1_1406

Published : 12 May 2017

Publisher Name : Springer, Cham

Print ISBN : 978-3-319-17884-4

Online ISBN : 978-3-319-17885-1

eBook Packages : Computer Science Reference Module Computer Science and Engineering


Travelling salesman problem

The travelling salesman problem (often abbreviated to TSP) is a classic problem in graph theory . It has many applications, in many fields. It also has quite a few different solutions.

The problem

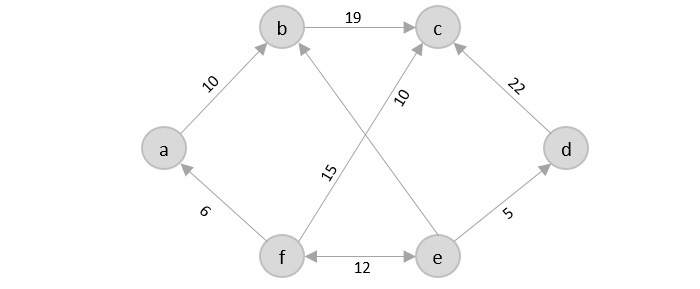

The problem is usually stated in terms of a salesman who needs to visit several towns before eventually returning to the starting point. There are various routes he could take, visiting the different towns in different orders. Here is an example:

There are several different routes that will visit every town. For example, we could visit the towns in the order A , B , C , D , E , then back to A . Or we could use the route A , D , C , E , B then back to A .

But not all routes are possible. For example, we cannot use a route A , D , E , B , C and then back to A , because there is no road from C to A .

The aim is to find the route that visits all the towns with the minimum cost . The cost is shown as the weight of each edge in the graph. This might be the distance between the towns, or it might be some other measure. For example, it might be the time taken to travel between the towns, which might not be proportionate to the distance because some roads have lower speed limits or are more congested. Or, if the salesman was travelling by train, it might be the price of the tickets.

The salesman can decide to optimise for whatever measure he considers to be most important.

Alternative applications and variants

TSP applies to any problem that involves visiting various places in sequence. One example is a warehouse, where various items need to be fetched from different parts of a warehouse to collect the items for an order. In the simplest case, where one person fetches all the items for a single order and then packs and dispatches the items, a TSP algorithm can be used. Of course, a different route would be required for each order, which would be generated by the ordering system.

Another interesting example is printed circuit board (PCB) drilling. A PCB is the circuit board you will find inside any computer or other electronic device. They often need holes drilled in them, including mounting holes where the board is attached to the case and holes where component wires need to pass through the board. These holes are usually drilled by a robot drill that moves across the board to drill each hole in the correct place. TSP can be used to calculate the optimum drilling order.

The TSP algorithm can be applied to directed graphs (where the distance from A to B might be different to the distance from B to A ). Directed graphs can represent things like one-way streets (where the distance might not be the same in both directions) or flight costs (where the price of a single airline ticket might not be the same in both directions).

There are some variants of the TSP scenario. The mTSP covers the situation where there are several salesmen and each city must be visited by exactly one of them. This applies to delivery vans, where there might be several vans. The problem is to decide which parcels to place on each van and also to decide the route of each van.

The travelling purchaser problem is another variant. In that case, a purchaser needs to buy several items that are being sold in different places, potentially at different prices. The task is to purchase all the items, minimising the total cost (the cost of the items and the cost of travel). A simple approach would be to buy each item from the place where it is cheapest, in which case this becomes a simple TSP. However, it is not always worth buying every item from the cheapest place because sometimes the travel cost might outweigh the price difference.

In this article, we will only look at the basic TSP case.

Brute force algorithm

We will look at 3 potential algorithms here. There are many others. The first, and simplest, is the brute force approach.

We will assume that we start at vertex A. Since we intend to make a tour of the vertices (i.e. visit every vertex once and return to the original vertex), it doesn't matter which vertex we start at; the shortest loop will still be the same.

So we will start at A , visit nodes B , C , D , and E in some particular order, then return to A .

To find the shortest route, we will try every possible ordering of vertices B , C , D , E , and record the cost of each one. Then we can find the shortest.

For example, the ordering ABCDEA has a total cost of 7+3+6+7+1 = 24.

The ordering ABCEDA (i.e. swapping D and E ) has a total cost of 7+3+2+7+1 = 20.

Some routes, such as ADEBCA are impossible because a required road doesn't exist. We can just ignore those routes.

After evaluating every possible route, we are certain to find the shortest route (or routes, as several different routes may happen to share the same shortest length). In this case, the shortest route is AEBCDA with a total length of 1+8+3+6+1 = 19.

The main problem with this algorithm is that it is very inefficient. In this example, since we have already decided that A is the start/end point, we must work out the visiting order for the 4 towns BCDE. We have 4 choices for the first town, 3 choices for the second town, 2 choices for the third town, and 1 choice for the fourth town. So there are 4! (4 factorial) orderings. That is only 24 orderings, which is no problem; you could even do it by hand.

If we had 10 towns (in addition to the home town) there would be 10! orderings, which is 3,628,800. That is far too many to do by hand, but a modern PC might be able to do that in a fraction of a second if the algorithm was implemented efficiently.

If we had 20 towns, then the number of orderings would be 20!, which is about 2.4 times 10 to the power 18. If we assume a computer that could evaluate a billion routes per second (which a typical PC would struggle to do, at least at the time of writing), that would take about 2.4 billion seconds, which is several decades.

Of course, there are more powerful computers available, and maybe quantum computing will come into play soon, but that is only 20 towns. If we had 100 towns, the number of orderings would be around 10 to the power 157, and for 1000 towns around 10 to the power 2568. For some applications, it would be quite normal to have hundreds of vertices. The brute force method is impractical for all but the most trivial scenarios.
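These figures are easy to reproduce. The short sketch below assumes the same rate of one billion routes per second; the helper names are made up for illustration. For very large town counts it works with the base-10 logarithm of the factorial, since the number itself is too large to be useful.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def log10_factorial(n):
    """Base-10 logarithm of n!, computed without building the huge number."""
    return math.lgamma(n + 1) / math.log(10)


def years_to_enumerate(towns, routes_per_second=1e9):
    """Years needed to evaluate every ordering of `towns` towns."""
    seconds = math.factorial(towns) / routes_per_second
    return seconds / SECONDS_PER_YEAR


print(math.factorial(4))               # 24
print(math.factorial(10))              # 3628800
print(round(years_to_enumerate(20)))   # 77
print(round(log10_factorial(100), 1))  # exponent for 100 towns
print(round(log10_factorial(1000), 1)) # exponent for 1000 towns
```

By this estimate 20 towns take roughly 77 years, while 100! is about 9.3 times 10 to the power 157 and 1000! is about 4 times 10 to the power 2567.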

Nearest neighbour algorithm

The nearest neighbour algorithm is what we might call a naive attempt to find a good route with very little computation. The algorithm is quite simple and obvious, and it might seem like a good idea to someone who hasn't put very much thought into it. You start by visiting whichever town is closest to the starting point. Then each time you want to visit the next town, you look at the map and pick the closest town to wherever you happen to be (of course, you will ignore any towns that you have visited already). What could possibly go wrong?

Starting at A , we visit the nearest town that we haven't visited yet. In this case D and E are both distance 1 from A . We will (completely arbitrarily) always prioritise the connections in alphabetical order. So we pick D .

As an aside, if we had picked E instead we would get a different result. It might be better, but it is just as likely to be worse, so there is no reason to choose one over the other.

From D , the closest town is C with a distance of 6 (we can't pick A because we have already been there).

From C the closest town is E with distance 2. From E we have to go to B because we have already visited every other town - that is a distance of 8. And from B we must return to A with a distance of 7.

The final path is ADCEBA and the total distance is 1+6+2+8+7 = 24.
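The walk-through above can be sketched in Python. The figure is not reproduced here, so the edge list is reconstructed from the distances quoted in this article and should be treated as an assumption. The function and variable names are illustrative. Ties are broken alphabetically, as in the text.

```python
import math

# Edge distances reconstructed from the values quoted in the text (an assumption).
# There is no direct road A-C or B-D.
EDGES = {
    ("A", "B"): 7, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}
TOWNS = sorted({town for edge in EDGES for town in edge})


def distance(a, b):
    """Distance between two towns, or infinity if there is no road."""
    return EDGES.get((a, b), EDGES.get((b, a), math.inf))


def nearest_neighbour(start):
    """Greedy tour: always move to the closest unvisited town, then go home."""
    route, total = [start], 0
    unvisited = [t for t in TOWNS if t != start]
    while unvisited:
        here = route[-1]
        # min() returns the first minimum, and `unvisited` stays alphabetical,
        # so ties are broken in alphabetical order.
        nxt = min(unvisited, key=lambda t: distance(here, t))
        total += distance(here, nxt)
        route.append(nxt)
        unvisited.remove(nxt)
    total += distance(route[-1], start)  # forced final leg back to the start
    route.append(start)
    return "".join(route), total


print(nearest_neighbour("A"))
```

On this reconstructed graph the sketch should reproduce the route ADCEBA with a total distance of 24. Note that the last leg is added without ever being compared against an alternative.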

The path isn't the best, but it isn't terrible. This algorithm will often find a reasonable path, particularly if there is a natural shortest path. However, it can sometimes go badly wrong.

The basic problem is that the algorithm doesn't take account of the big picture. It just blindly stumbles from one town to whichever next town is closest.

In particular, the algorithm implicitly decides that the final step will be B to A . It does this based on the other distances, but without taking the distance BA into account. But what if, for example, there is a big lake between B and A that you have to drive all the way around? This might make the driving distance BA very large. A more sensible algorithm would avoid that road at all costs, but the nearest neighbour algorithm just blindly leads us there.

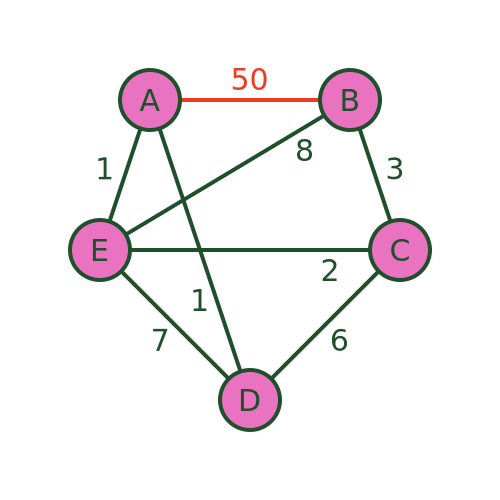

We can see this with this revised graph where BA has a distance of 50:

The algorithm will still recommend the same path because it never takes the distance BA into account. The path is still ADCEBA but the total distance is now 1+6+2+8+50 = 67. There are much better routes that avoid BA .
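To see how much better the alternatives are, the greedy route can be compared with an exhaustive search, which is feasible for five towns. This is a Python sketch under the assumption that the edge distances reconstructed from the text are right. Only A-B has been raised to 50, and the names are illustrative.

```python
import itertools
import math

# Distances reconstructed from the text (an assumption), with A-B raised to 50.
EDGES = {
    ("A", "B"): 50, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}


def distance(a, b):
    """Distance between two towns, or infinity if there is no road."""
    return EDGES.get((a, b), EDGES.get((b, a), math.inf))


def tour_length(route):
    """Total length of a closed route given as a string such as 'ADCEBA'."""
    return sum(distance(a, b) for a, b in zip(route, route[1:]))


def best_tour(start, others):
    """Exhaustive search: try every ordering of the other towns."""
    candidates = (start + "".join(p) + start for p in itertools.permutations(others))
    return min(candidates, key=tour_length)


greedy = "ADCEBA"              # the route nearest neighbour still picks
best = best_tour("A", "BCDE")  # only 4! = 24 orderings to try
print(greedy, tour_length(greedy))
print(best, tour_length(best))
```

With these distances the exhaustive search settles on ADCBEA, which never uses the road between A and B.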

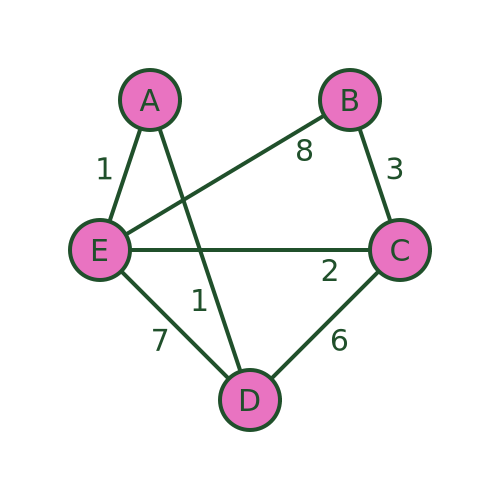

An even worse problem can occur if there is no road at all from B to A . The algorithm would still guide us to town B as the final visit. But in that case, it is impossible to get from B to A to complete the journey:

Bellman–Held–Karp algorithm

The Bellman–Held–Karp algorithm is a dynamic programming algorithm for solving TSP more efficiently than brute force. It is sometimes called the Held–Karp algorithm because it was discovered by Michael Held and Richard Karp, but it was also discovered independently by Richard Bellman at about the same time.

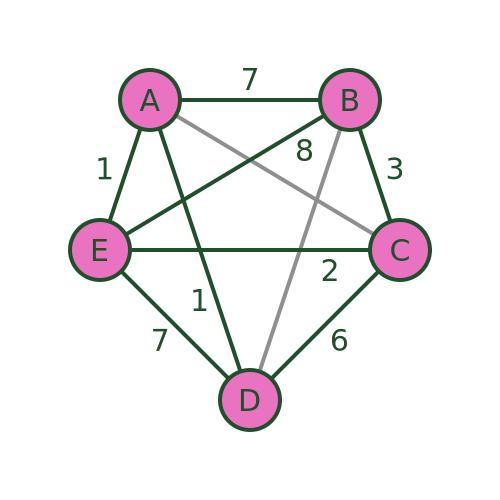

The algorithm assumes a complete graph (ie a graph where every vertex is connected to every other). However, it can be used with an incomplete graph like the one we have been using. To do this, we simply add the missing connections (shown below in grey) and assign them a very large distance (for example 1000). This ensures that the missing connections will never form part of the shortest path:

The technique works by incrementally calculating the shortest path for every possible set of 3 towns (starting from A ), then for every possible set of 4 towns, and so on until it eventually calculates the shortest path that goes from A via every other town and back to A .

Because the algorithm stores its intermediate calculations and discards non-optimal paths as early as possible, it is much more efficient than brute force.

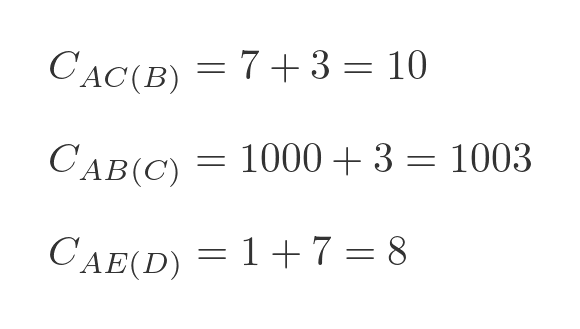

We will use the following notation. We will use this to indicate the distance between towns A and B :

And we will use this to indicate the cost (ie the total distance) from A to C via B :

Here are some examples:

The first example is calculated by adding the distance AB to BC , which gives 10. The second example is calculated by adding AC to CB . But since there is no road from A to C , we give that a large dummy distance of 1000, so that total cost is 1003, which means it is never going to form a part of the shortest route. The third example is calculated by adding the distance AD to DE , which gives 8.

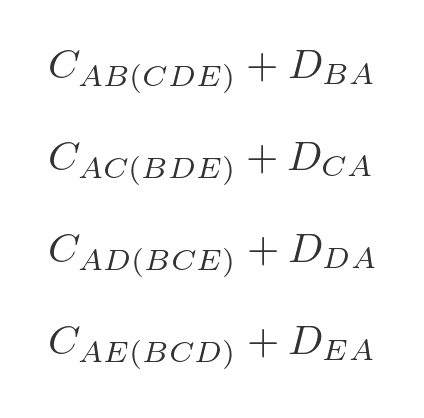

In the first step, we will calculate the value of every possible combination of 2 towns starting from A . There are 12 combinations: AB(C) , AB(D) , AB(E) , AC(B) , AC(D) , AC(E) , AD(B) , AD(C) , AD(E) , AE(B) , AE(C) , AE(D) .

These values will be stored for later use.

For step 2 we need to extend our notation slightly. We will use this to indicate the lowest cost from A to D via B and C (in either order):

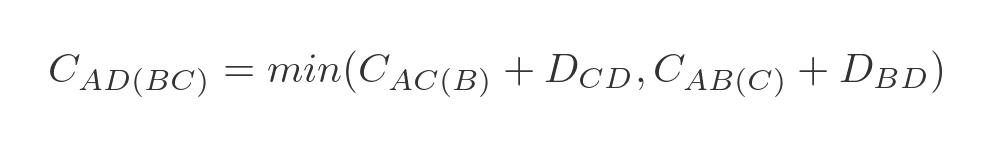

To be clear, this could represent one of two paths - ABCD or ACBD whichever is shorter. We can express this as a minimum of two values:

This represents the 2 ways to get from A to D via B and C :

- We can travel from A to C via B , and then from C to D .

- We can travel from A to B via C , and then from B to D .

We have already calculated A to C via B (and all the other combinations) in step 1, so all we need to do is add the values and find the smallest. In this case:

- A to C via B is 10, C to D is 6, so the total is 16.

- A to B via C is 1003, B to D is 1000, so the total is 2003.

Clearly, ABCD is the best route.
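The step 1 and step 2 calculations can be written out as a small Python sketch. The distance table is reconstructed from the values quoted in the text, which is an assumption since the figure is not shown, and the function names `via_one` and `via_two` are made up for illustration.

```python
BIG = 1000  # dummy distance for a missing road, as in the text

# Distances reconstructed from the text (an assumption); missing roads get BIG.
D = {
    "AB": 7, "AC": BIG, "AD": 1, "AE": 1,
    "BC": 3, "BD": BIG, "BE": 8,
    "CD": 6, "CE": 2, "DE": 7,
}


def dist(a, b):
    """Symmetric lookup: dist('C', 'B') == dist('B', 'C')."""
    return D[min(a, b) + max(a, b)]


def via_one(end, mid):
    """Step 1: cost of A -> mid -> end."""
    return dist("A", mid) + dist(mid, end)


def via_two(end, mids):
    """Step 2: cheapest way from A to `end` through both towns in `mids`."""
    first, second = mids
    options = {
        "A" + first + second + end: via_one(second, first) + dist(second, end),
        "A" + second + first + end: via_one(first, second) + dist(first, end),
    }
    best = min(options, key=options.get)
    return best, options[best]


print(via_two("D", "BC"))
```

The step 2 function only adds one edge to a value already computed in step 1, which is the reuse that makes the method cheaper than brute force.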

We need to repeat this for every possible combination of travelling from A to x via y and z . There are, again, 12 combinations: AB(CD) , AB(CE) , AB(DE) , AC(BD) , AC(BE) , AC(DE) , AD(BC) , AD(BE) , AD(CE) , AE(BC) , AE(BD) , AE(CD) .

We store these values, along with the optimal path, for later use.

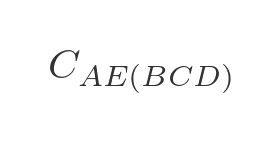

In the next step, we calculate the optimal route for travelling from A to any other town via 3 intermediate towns. For example, travelling from A to E via B , C , and D (in the optimal order) is written as:

There are 3 paths to do this:

- A to D via B and C , then from D to E .

- A to C via B and D , then from C to E .

- A to B via C and D , then from B to E .

We will choose the shortest of these three paths:

Again we have already calculated all the optimal intermediate paths. This time there are only 4 combinations we need to calculate: AB(CDE) , AC(BDE) , AD(BCE) , and AE(BCD) .

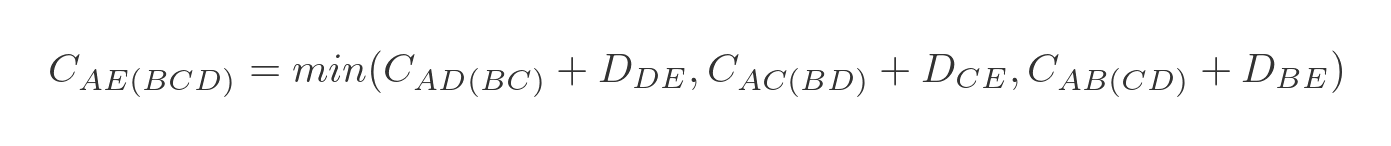

We are now in a position to calculate the optimal route for travelling from A back to A via all 4 other towns:

We need to evaluate each of the 4 options in step 3, adding on the extra distance to get back to A :

We can then choose the option that has the lowest total cost, and that will be the optimal route.
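Putting the steps together gives a compact dynamic programme. The following is a minimal Python sketch rather than an optimised implementation. It assumes the road distances reconstructed from the text, uses 1000 for missing roads as suggested above, and keeps the best route string alongside each cost so the final answer can be read off directly.

```python
import itertools

BIG = 1000  # dummy distance for a missing road, as suggested in the text
# Road distances reconstructed from the values quoted in the text (an assumption).
ROADS = {"AB": 7, "AD": 1, "AE": 1, "BC": 3, "BE": 8, "CD": 6, "CE": 2, "DE": 7}


def dist(a, b):
    """Symmetric distance lookup; missing roads cost BIG."""
    return ROADS.get(min(a, b) + max(a, b), BIG)


def held_karp(towns="ABCDE"):
    """Return (cost, route) of the cheapest tour that starts and ends at towns[0]."""
    start, others = towns[0], towns[1:]
    # best[(via, end)] = (cost, route) of the cheapest way to leave `start`,
    # pass through every town in `via` (in any order) and finish at `end`.
    best = {(frozenset(), t): (dist(start, t), start + t) for t in others}

    def extend(via, end):
        # Try each town in `via` as the last stop before `end`.
        return min(
            (best[(via - {last}, last)][0] + dist(last, end),
             best[(via - {last}, last)][1] + end)
            for last in via
        )

    for size in range(1, len(others)):
        for combo in itertools.combinations(others, size):
            via = frozenset(combo)
            for end in others:
                if end not in via:
                    best[(via, end)] = extend(via, end)

    # Final step: pass through all the other towns and return to the start.
    return extend(frozenset(others), start)


print(held_karp())
```

On the reconstructed graph this should report a cost of 19 for the route ADCBEA. The dictionary `best` is the stored set of intermediate results, and it is what costs the extra memory.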

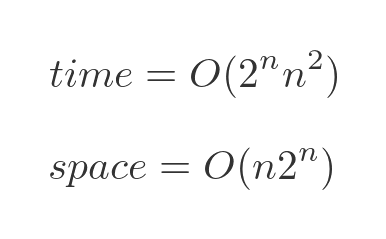

Performance

The time performance of the Bellman–Held–Karp algorithm is usually expressed in big-O notation as O(n² × 2ⁿ), where n is the number of towns.

The time performance is approximately exponential. As the number of towns increases, the time taken will go up exponentially - each time an extra town is added, the time taken will double. We are ignoring the term in n squared because the exponential term is the most significant part.

Exponential growth is still quite bad, but it is far better than the factorial growth of the brute-force algorithm.

It is also important to notice that the algorithm requires memory to store the intermediate results, which also goes up approximately exponentially. The brute force algorithm doesn't have any significant memory requirements. However, that is usually an acceptable trade-off for a much better time performance.
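To get a feel for the difference between exponential and factorial growth, the two step counts can be tabulated. This Python sketch assumes the usual statement of the bounds, n² × 2ⁿ for Bellman–Held–Karp and n! for brute force, and ignores constant factors, so the numbers are indicative only.

```python
import math


def held_karp_steps(n):
    """Rough step count for Bellman-Held-Karp: n^2 * 2^n."""
    return n * n * 2 ** n


def brute_force_steps(n):
    """Rough step count for brute force: n!."""
    return math.factorial(n)


for n in (5, 10, 15, 20, 25):
    hk, bf = held_karp_steps(n), brute_force_steps(n)
    print(f"n={n:2d}  n^2*2^n={hk:.2e}  n!={bf:.2e}  ratio={bf / hk:.1e}")
```

For very small n the factorial is actually the smaller number, but the gap reverses quickly and then widens with every extra town.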

The stages above describe how the algorithm works at a high level. We haven't gone into great detail about exactly how the algorithm keeps track of the various stages. Over the years since its discovery, a lot of work has been put into optimising the implementation. Optimising the implementation won't change the time complexity, which will still be roughly exponential. However, it might make the algorithm run several times faster than a poor implementation.

We won't cover this in detail, but if you ever need to use this algorithm for a serious purpose, it is worth considering using an existing, well-optimised implementation rather than trying to write it yourself. Unless you are just doing it for fun!

Other algorithms

Even the Bellman–Held–Karp algorithm has poor performance for modestly large numbers of towns. 100 towns, for example, would be well beyond the capabilities of a modern PC.

There are various heuristic algorithms that are capable of finding a good solution, but not necessarily the best possible solution, in a reasonable amount of time. I hope to cover these in a future article.

Traveling Salesman Problem (TSP) Implementation

Travelling Salesman Problem (TSP): Given a set of cities and the distance between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between the Hamiltonian cycle problem and TSP. The Hamiltonian cycle problem asks whether there exists a tour that visits every city exactly once. Here we know that a Hamiltonian tour exists (because the graph is complete), and in fact many such tours exist; the problem is to find a minimum-weight Hamiltonian cycle. For example, consider the graph shown in the figure on the right side. A TSP tour in the graph is 1-2-4-3-1. The cost of the tour is 10+25+30+15, which is 80. The problem is a famous NP-hard problem. No polynomial-time solution is known for it.

Examples:

In this post, the implementation of a simple solution is discussed.

- Consider city 1 as the starting and ending point. Since the route is cyclic, we can consider any point as a starting point.

- Generate all (n-1)! permutations of cities.

- Calculate the cost of every permutation and keep track of the minimum cost permutation.

- Return the permutation with minimum cost.

Below is the implementation of the above idea

Time complexity: O(n!), where n is the number of vertices in the graph. This is because the algorithm uses the next_permutation function, which generates all the possible permutations of the vertex set.

Auxiliary space: O(n), as we are using a vector to store all the vertices.
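The four steps listed above can be sketched in Python. This is an illustrative sketch, not the post's original listing: it uses `itertools.permutations` in place of `next_permutation`, and the function name is made up. The weights for edges 1-2, 2-4, 4-3 and 3-1 come from the tour cost quoted above, while the weights for 1-4 and 2-3 are assumed.

```python
from itertools import permutations

# Edge weights 1-2, 2-4, 4-3 and 3-1 come from the tour cost quoted in the text
# (10 + 25 + 30 + 15 = 80); the weights for 1-4 and 2-3 are assumed.
GRAPH = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]


def travelling_salesman(graph, start=0):
    """Return (cost, tour) of the cheapest tour, trying all (n-1)! orderings."""
    others = [v for v in range(len(graph)) if v != start]
    best_cost, best_tour = float("inf"), None
    for order in permutations(others):
        tour = (start, *order, start)
        cost = sum(graph[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:  # keep the first tour that achieves the minimum
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


cost, tour = travelling_salesman(GRAPH)
print(cost, [v + 1 for v in tour])  # cities are numbered from 1 in the text
```

Fixing the start city and permuting only the remaining cities is what keeps the count at (n-1)! rather than n!.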

12.9 Traveling Salesperson Problem

Learning objectives.

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths . In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semi-conductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.187. Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of 10 + 2 + 11 + 13 = 36.

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A : 10 + 2 + 11 + 13 = 36

B : 10 + 2 + 11 + 8 = 31

C : 10 + 2 + 15 + 1 = 28

D : 10 + 2 + 15 + 6 = 33

E : 10 + 7 + 3 + 20 = 40

F : 10 + 7 + 3 + 14 = 34

G : 10 + 7 + 4 + 11 = 32

H : 10 + 7 + 4 + 5 = 26

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
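The two approaches can be compared side by side in a short Python sketch. The tree layout below is reconstructed from the eight branch sums listed above, and the function names are illustrative.

```python
# The tree from the example as (value, children) pairs; leaves have no children.
# The layout is reconstructed from the branch sums listed in the text.
TREE = (10, [
    (2, [(11, [(13, []), (8, [])]),
         (15, [(1, []), (6, [])])]),
    (7, [(3, [(20, []), (14, [])]),
         (4, [(11, []), (5, [])])]),
])


def all_branches(node):
    """Brute force: list the values along every root-to-leaf branch."""
    value, children = node
    if not children:
        return [[value]]
    return [[value] + rest for child in children for rest in all_branches(child)]


def greedy_branch(node):
    """Greedy: at each phase step to the child with the larger value."""
    value, children = node
    path = [value]
    while children:
        value, children = max(children, key=lambda child: child[0])
        path.append(value)
    return path


best = max(all_branches(TREE), key=sum)
greedy = greedy_branch(TREE)
print(best, sum(best))
print(greedy, sum(greedy))
```

The brute force search visits all eight branches and finds branch E, while the greedy walk looks at only two values per phase and ends on branch G.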

Example 12.42

Distinguishing between brute force and greedy algorithms.

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
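The cashier's procedure translates directly into code. The Python sketch below works in cents to avoid rounding problems with decimal amounts, and the function name `greedy_change` is illustrative.

```python
COINS = {"quarter": 25, "dime": 10, "nickel": 5, "penny": 1}  # values in cents


def greedy_change(amount_cents):
    """Repeatedly take the largest coin that does not exceed what is still owed."""
    change = []
    for name, value in sorted(COINS.items(), key=lambda item: -item[1]):
        while amount_cents >= value:
            change.append(name)
            amount_cents -= value
    return change


print(greedy_change(500 - 463))
```

Each coin is chosen by looking only at the amount still owed, never at the combinations that might follow, which is what makes the approach greedy.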

Your Turn 12.42

The traveling salesperson problem.

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles , the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.192 representing the four U.S. Air Force bases: Vandenberg, Edwards, Los Angeles, and Beal and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.193 .

The possible distances are:

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with n vertices,

- The number of distinct Hamilton cycles is (n − 1)!.

- There are at most (n − 1)!/2 different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating possible weights of Hamilton cycles.

Suppose you have a complete weighted graph with vertices N, M, O , and P .

- Use the formula (n − 1)! to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula (n − 1)!/2 to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know?
  Cycle 1: N → M → O → P → N
  Cycle 2: N → M → P → O → N
  Cycle 3: N → O → M → P → N
  Cycle 4: N → O → P → M → N
  Cycle 5: N → P → M → O → N
  Cycle 6: N → P → O → M → N

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, n = 4. This means there are (n − 1)! = (4 − 1)! = 3 ⋅ 2 ⋅ 1 = 6 distinct Hamilton cycles beginning at any given vertex.

- Since n = 4, there are (n − 1)!/2 = (4 − 1)!/2 = 6/2 = 3 possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.
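The counting and pairing in this example can be checked mechanically. The Python sketch below fixes N as the starting vertex, arranges the other three vertices in every order, and then matches each cycle with its reverse. The variable names are illustrative.

```python
from itertools import permutations
from math import factorial

START, MIDDLE = "N", "MOP"

n = len(MIDDLE) + 1
print(factorial(n - 1), factorial(n - 1) // 2)  # distinct cycles, maximum distinct weights

# Fix the start vertex and arrange the middle vertices in every order.
cycles = [START + "".join(p) + START for p in permutations(MIDDLE)]

# Pair each cycle with its reverse; the two must have equal weight.
pairs = sorted(
    {tuple(sorted((cycles.index(c) + 1, cycles.index(c[::-1]) + 1))) for c in cycles}
)
for number, cycle in enumerate(cycles, start=1):
    print(f"Cycle {number}: {' -> '.join(cycle)}")
print(pairs)
```

Because `permutations` works through the middle vertices in the order given, the cycles come out numbered the same way as in the list above.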

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as we did in Example 12.43.

Your Turn 12.43

The brute force method.

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method . The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.

Example 12.44

Applying the brute force method.

On the next assignment, the air force officer must leave from Travis Air Force base, visit Beal, Edwards, and Vandenberg Air Force bases each exactly once and return to Travis Air Force base. There is no need to visit Los Angeles Air Force base. Use Figure 12.194 to find the shortest route.

Step 1: Since there are 4 vertices, there will be (4 − 1)! = 3! = 6 cycles, but half of them will be the reverse of the others; so, there will be (4 − 1)!/2 = 6/2 = 3 possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.195 .

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V . The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE , and VEB . These would correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each path. You can reduce your work by observing the cycles that are reverses of each other.

1: 84 + 410 + 207 + 396 = 1097

2: 84 + 396 + 207 + 370 = 1057

3: 370 + 410 + 396 + 396 = 1572

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second path, T → B → V → E → T , and its reverse, T → E → V → B → T , have the least weight. The solution is that the officer should travel from Travis Air Force base to Beal Air Force Base, to Vandenberg Air Force base, to Edwards Air Force base, and return to Travis Air Force base, or the same route in reverse.
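The brute force steps for this example can be sketched in Python. The figure is not shown here, so the six distances are read from the terms of the sums in Step 3, which is an assumption, and the function names are illustrative.

```python
from itertools import permutations

# Distances read from the terms of the sums in Step 3 (an assumption,
# since the figure itself is not shown here).
MILES = {
    frozenset("TB"): 84, frozenset("TE"): 370, frozenset("TV"): 396,
    frozenset("BE"): 410, frozenset("BV"): 396, frozenset("EV"): 207,
}


def weight(cycle):
    """Step 3: add up the edges along a cycle such as 'TBEVT'."""
    return sum(MILES[frozenset(pair)] for pair in zip(cycle, cycle[1:]))


def brute_force(start, others):
    """Steps 2 to 4: list every cycle, weigh it, keep the lightest."""
    cycles = [start + "".join(p) + start for p in permutations(others)]
    weights = {cycle: weight(cycle) for cycle in cycles}
    best = min(weights, key=weights.get)
    return best, weights


best, weights = brute_force("T", "BEV")
for cycle, miles in weights.items():
    print(" -> ".join(cycle), miles)
print("Shortest:", " -> ".join(best))
```

Storing each edge under a `frozenset` of its two endpoints means a cycle and its reverse automatically get the same weight.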

Your Turn 12.44

Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.194. There would be (5 − 1)! = 4! = 4 × 3 × 2 × 1 = 24 different arrangements of vertices and (5 − 1)!/2 = 4!/2 = 24/2 = 12 distances to compare using the brute force method. If you consider 10 Air Force bases, there would be (10 − 1)! = 9! = 9 ⋅ 8 ⋅ 7 ⋅ 6 ⋅ 5 ⋅ 4 ⋅ 3 ⋅ 2 ⋅ 1 = 362,880 different arrangements and (10 − 1)!/2 = 9!/2 = 362,880/2 = 181,440 distances to consider. There must be another way!

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method , which always visits the closest or least costly place first. This method finds a Hamilton cycle of relatively low weight in a complete graph in which, at each phase, the next vertex is chosen by comparing the edges between the current vertex and the remaining vertices to find the lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A , visit cities B, C, D, E , and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.196 .

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex as V₁ for "visited 1st." Then compare the weights of the edges between A and vertices adjacent to A : $250, $210, $300, $200, and $100 as shown in Figure 12.197 . The lowest of these is $100, which is the edge between A and F .

Mark F as V₂ for "visited 2nd" then compare the weights of the edges between F and the remaining vertices adjacent to F : $170, $330, $150 and $350 as shown in Figure 12.198 . The lowest of these is $150, which is the edge between F and D .

Mark D as V₃ for "visited 3rd." Next, compare the weights of the edges between D and the remaining vertices adjacent to D : $120, $310, and $270 as shown in Figure 12.199 . The lowest of these is $120, which is the edge between D and B .

So, mark B as V₄ for "visited 4th." Finally, compare the weights of the edges between B and the remaining vertices adjacent to B : $160 and $220 as shown in Figure 12.200 . The lower amount is $160, which is the edge between B and E .

Now you can mark E as V₅ and mark the only remaining vertex, which is C , as V₆. This is shown in Figure 12.201 . Make a note of the weight of the edge from E to C , which is $180, and from C back to A , which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A . The weight of the circuit is $100 + $150 + $120 + $160 + $180 + $210 = $920. This may or may not be the route with the lowest cost, but there is a good chance it is very close since the weights are most of the lowest weights on the graph and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label it V₁ for "visited 1st." Identify the edge of lowest weight between V₁ and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in the previous step as Vₙ, where the subscript n indicates the order in which the vertex is visited. Identify the edge of lowest weight between Vₙ and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is V₁ → V₂ → ⋯ → Vₙ → V₁.
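The three steps can be sketched in Python. The airfare graph is not fully recoverable from the text, so this sketch instead uses the four Air Force bases from Example 12.44, with distances read from the sums in that example. That choice of data, and the function names, are assumptions made for illustration.

```python
# Distances taken from the sums in Example 12.44 (an assumption, since the
# figure is not shown here); names are illustrative.
MILES = {
    "TB": 84, "TE": 370, "TV": 396,
    "BE": 410, "BV": 396, "EV": 207,
}


def miles(a, b):
    """Look up an undirected edge stored under either spelling."""
    return MILES.get(a + b) or MILES[b + a]


def nearest_neighbor(start, vertices):
    """Return the visiting order V1, V2, ..., Vn, V1 and its total weight."""
    order = [start]                       # Step 1: the start is V1
    remaining = [v for v in vertices if v != start]
    total = 0
    while remaining:                      # Step 3: repeat while vertices remain
        current = order[-1]
        nxt = min(remaining, key=lambda v: miles(current, v))  # Step 2: lowest-weight edge
        total += miles(current, nxt)
        order.append(nxt)
        remaining.remove(nxt)
    total += miles(order[-1], start)      # close the cycle back to V1
    return order + [start], total


route, total = nearest_neighbor("T", "TBEV")
for position, vertex in enumerate(route[:-1], start=1):
    print(f"V{position} = {vertex}")
print(" -> ".join(route), total)
```

On this small graph the greedy route happens to coincide with the cycle that the brute force method identified as shortest. That is not guaranteed in general.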

Example 12.45

Using the nearest neighbor method.

Suppose that the candidate for governor wants to hold rallies around the state, but time before the election is very limited. They would like to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.202. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as \(V_1\). The edge of lowest weight between A and the remaining vertices is 85 min between A and D.

Step 2: Label vertex D as \(V_2\). The edge of lowest weight between D and the vertices that remain to be visited, B, C, E, and F, is 70 min between D and F.

Repeat Step 2: Label vertex F as \(V_3\). The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E, is 75 min between F and C.

Repeat Step 2: Label vertex C as \(V_4\). The edge of lowest weight between C and the vertices that remain to be visited, B and E, is 100 min between C and B.

Repeat Step 2: Label vertex B as \(V_5\). The only vertex that remains to be visited is E. The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A. So, a route of relatively low travel time is A to D to F to C to B to E and back to A. The total travel time of this route, including the final 90 min leg from E back to A, is 85 min + 70 min + 75 min + 100 min + 95 min + 90 min = 515 min, or 8 hrs 35 min.
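The arithmetic in Step 3 can be checked with a few lines of Python. This sketch uses only the six edge weights quoted in the example; the rest of the graph is in Figure 12.202 and is not reproduced here, and the variable names are illustrative.

```python
# Route found by the nearest neighbor method in Example 12.45.
route = ["A", "D", "F", "C", "B", "E", "A"]

# Travel times in minutes for the edges used, as quoted in the example.
minutes = {
    ("A", "D"): 85, ("D", "F"): 70, ("F", "C"): 75,
    ("C", "B"): 100, ("B", "E"): 95, ("E", "A"): 90,
}

# Sum the weights of consecutive legs along the route.
total = sum(minutes[(a, b)] for a, b in zip(route, route[1:]))

# Convert minutes to hours and remaining minutes.
hours, mins = divmod(total, 60)
print(f"{total} min = {hours} hrs {mins} min")  # 515 min = 8 hrs 35 min
```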

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Authors: Donna Kirk

- Publisher/website: OpenStax

- Book title: Contemporary Mathematics

- Publication date: Mar 22, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Section URL: https://openstax.org/books/contemporary-mathematics/pages/12-9-traveling-salesperson-problem

© Dec 21, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

The Traveling Salesman Problem

A computational study.

- David L. Applegate, Robert E. Bixby, Vašek Chvátal and William J. Cook

- Language: English

- Publisher: Princeton University Press

- Copyright year: 2007

- Edition: Course Book

- Audience: Professional and scholarly;College/higher education;

- Main content: 608

- Other: 200 line illus.

- Keywords: Algorithm ; Instance (computer science) ; Computation ; Linear programming ; Cutting-plane method ; Time complexity ; Subset ; Solver ; Simplex algorithm ; Implementation ; Iteration ; Result ; Addition ; Test set ; Hypergraph ; Calculation ; Variable (computer science) ; Estimation ; Quantity ; Upper and lower bounds ; Mathematical optimization ; Search tree ; Special case ; Big O notation ; Tree (data structure) ; Summation ; Greedy algorithm ; Variable (mathematics) ; Applied mathematics ; Analysis of algorithms ; Dynamic programming ; Parity (mathematics) ; CPLEX ; Theorem ; Connected component (graph theory) ; Approximation algorithm ; CPU time ; Pairwise ; AT&T Labs ; Linear inequality ; Search algorithm ; Engineering ; Mathematics ; Computational resource ; Optimization problem ; Basic solution (linear programming) ; George Dantzig ; Travelling salesman problem ; Princeton University ; Trade-off ; Disjoint sets ; Pricing ; Convex hull ; Percentage ; Subsequence ; Rice University ; Project ; Combinatorial optimization ; Notation ; Georgia Institute of Technology ; Rutgers University ; Best ; worst and average case ; Bifurcation theory ; Hospitality ; Reduced cost ; Institute ; Parameter (computer programming) ; Stochastic ; Polytope ; Polyhedron ; Approximation ; Theory ; Hamiltonian path ; Chaos theory ; Solution set ; Column generation ; Computer ; Scientific notation ; Processing (programming language) ; Equation ; Operations research ; For loop ; Genetic algorithm ; Subroutine ; Self-similarity ; Euclidean distance ; Order by ; Family of sets ; Accuracy and precision ; Determinism ; Connectivity (graph theory) ; Ear decomposition ; Source code ; Neuroscience ; Model of computation ; Requirement ; Integer ; Delaunay triangulation ; Euclidean space ; Enumeration

- Published: September 19, 2011

- ISBN: 9781400841103

Traveling Salesman Problem, Theory and Applications

Book metrics overview

71,092 Chapter Downloads

Academic Editor

Central Washington University , United States of America

Published 30 December 2010

Doi 10.5772/547

ISBN 978-953-307-426-9

eBook (PDF) ISBN 978-953-51-5501-0

Copyright year 2010

Number of pages 338

This book is a collection of current research in the application of evolutionary algorithms and other optimal algorithms to solving the TSP problem. It brings together researchers with applications in Artificial Immune Systems, Genetic Algorithms, Neural Networks and Differential Evolution Algorithm. Hybrid systems, like Fuzzy Maps, Chaotic Maps and Parallelized TSP are also presented. Most importantly, this book presents both theoretical as well as practical applications of TSP, which will be a vital tool for researchers and graduate entry students in the field of applied Mathematics, Computing Science and Engineering.

Table of contents.

By Rajesh Matai, Surya Singh and Murari Lal Mittal

By Yuan-bin Mo

By Jun Sakuma and Shigenobu Kobayashi

By Ivan Zelinka, Roman Senkerik, Magdalena Bialic-Davendra and Donald Davendra

By Xuesong Yan, Qinghua Wu and Hui Li

By Jingui Lu and Min Xie

By Hirotaka Itoh

By Fang Liu, Yutao Qi, Jingjing Ma, Maoguo Gong, Ronghua Shang, Yangyang Li and Licheng Jiao

By Setsuo Tsuruta and Yoshitaka Sakurai

By Yong-hyun Cho

By Paulo Siqueira, Maria Teresinha Arns Steiner and Sérgio Scheer

By Kajal De and Arindam Chaudhuri

By Francisco Chagas De Lima Júnior, Adriao Duarte Doria Neto and Jorge Dantas De Melo

By Dolores Rexachs, Emilio Luque and Paula Cecilia Fritzsche

By Moustapha Diaby

By Hugo Hernandez-Saldana

By Liana Lupsa, Ioana Chiorean, Radu Lupsa and Luciana Neamtiu

IMPACT OF THIS BOOK AND ITS CHAPTERS

71,092 Total Chapter Downloads

7,897 Total Chapter Views

199 Crossref Citations

167 Web of Science Citations

297 Dimensions Citations

5 Altmetric Score
