This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Work with Delta Lake table history

- 4 contributors

Each operation that modifies a Delta Lake table creates a new table version. You can use history information to audit operations, rollback a table, or query a table at a specific point in time using time travel.

Databricks does not recommend using Delta Lake table history as a long-term backup solution for data archival. Databricks recommends using only the past 7 days for time travel operations unless you have set both data and log retention configurations to a larger value.

Retrieve Delta table history

You can retrieve information including the operations, user, and timestamp for each write to a Delta table by running the history command. The operations are returned in reverse chronological order.

Table history retention is determined by the table setting delta.logRetentionDuration , which is 30 days by default.

Time travel and table history are controlled by different retention thresholds. See What is Delta Lake time travel? .

For Spark SQL syntax details, see DESCRIBE HISTORY .

See the Delta Lake API documentation for Scala/Java/Python syntax details.

Catalog Explorer provides a visual view of this detailed table information and history for Delta tables. In addition to the table schema and sample data, you can click the History tab to see the table history that displays with DESCRIBE HISTORY .

History schema

The output of the history operation has the following columns.

- JDBC or ODBC

- Run a command using the REST API

- Some task types for jobs

- Columns added in the future will always be added after the last column.

Operation metrics keys

The history operation returns a collection of operations metrics in the operationMetrics column map.

The following tables list the map key definitions by operation.

What is Delta Lake time travel?

Delta Lake time travel supports querying previous table versions based on timestamp or table version (as recorded in the transaction log). You can use time travel for applications such as the following:

- Re-creating analyses, reports, or outputs (for example, the output of a machine learning model). This could be useful for debugging or auditing, especially in regulated industries.

- Writing complex temporal queries.

- Fixing mistakes in your data.

- Providing snapshot isolation for a set of queries for fast changing tables.

Table versions accessible with time travel are determined by a combination of the retention threshold for transaction log files and the frequency and specified retention for VACUUM operations. If you run VACUUM daily with the default values, 7 days of data is available for time travel.

Delta time travel syntax

You query a Delta table with time travel by adding a clause after the table name specification.

- '2018-10-18T22:15:12.013Z' , that is, a string that can be cast to a timestamp

- cast('2018-10-18 13:36:32 CEST' as timestamp)

- '2018-10-18' , that is, a date string

- current_timestamp() - interval 12 hours

- date_sub(current_date(), 1)

- Any other expression that is or can be cast to a timestamp

- version is a long value that can be obtained from the output of DESCRIBE HISTORY table_spec .

Neither timestamp_expression nor version can be subqueries.

Only date or timestamp strings are accepted. For example, "2019-01-01" and "2019-01-01T00:00:00.000Z" . See the following code for example syntax:

You can also use the @ syntax to specify the timestamp or version as part of the table name. The timestamp must be in yyyyMMddHHmmssSSS format. You can specify a version after @ by prepending a v to the version. See the following code for example syntax:

What are transaction log checkpoints?

Delta Lake records table versions as JSON files within the _delta_log directory, which is stored alongside table data. To optimize checkpoint querying, Delta Lake aggregates table versions to Parquet checkpoint files, preventing the need to read all JSON versions of table history. Azure Databricks optimizes checkpointing frequency for data size and workload. Users should not need to interact with checkpoints directly. The checkpoint frequency is subject to change without notice.

Configure data retention for time travel queries

To query a previous table version, you must retain both the log and the data files for that version.

Data files are deleted when VACUUM runs against a table. Delta Lake manages log file removal automatically after checkpointing table versions.

Because most Delta tables have VACUUM run against them regularly, point-in-time queries should respect the retention threshold for VACUUM , which is 7 days by default.

In order to increase the data retention threshold for Delta tables, you must configure the following table properties:

- delta.logRetentionDuration = "interval <interval>" : controls how long the history for a table is kept. The default is interval 30 days .

- delta.deletedFileRetentionDuration = "interval <interval>" : determines the threshold VACUUM uses to remove data files no longer referenced in the current table version. The default is interval 7 days .

You can specify Delta properties during table creation or set them with an ALTER TABLE statement. See Delta table properties reference .

You must set both of these properties to ensure table history is retained for longer duration for tables with frequent VACUUM operations. For example, to access 30 days of historical data, set delta.deletedFileRetentionDuration = "interval 30 days" (which matches the default setting for delta.logRetentionDuration ).

Increasing data retention threshold can cause your storage costs to go up, as more data files are maintained.

Restore a Delta table to an earlier state

You can restore a Delta table to its earlier state by using the RESTORE command. A Delta table internally maintains historic versions of the table that enable it to be restored to an earlier state. A version corresponding to the earlier state or a timestamp of when the earlier state was created are supported as options by the RESTORE command.

- You can restore an already restored table.

- You can restore a cloned table.

- You must have MODIFY permission on the table being restored.

- You cannot restore a table to an older version where the data files were deleted manually or by vacuum . Restoring to this version partially is still possible if spark.sql.files.ignoreMissingFiles is set to true .

- The timestamp format for restoring to an earlier state is yyyy-MM-dd HH:mm:ss . Providing only a date( yyyy-MM-dd ) string is also supported.

For syntax details, see RESTORE .

Restore is considered a data-changing operation. Delta Lake log entries added by the RESTORE command contain dataChange set to true. If there is a downstream application, such as a Structured streaming job that processes the updates to a Delta Lake table, the data change log entries added by the restore operation are considered as new data updates, and processing them may result in duplicate data.

For example:

In the preceding example, the RESTORE command results in updates that were already seen when reading the Delta table version 0 and 1. If a streaming query was reading this table, then these files will be considered as newly added data and will be processed again.

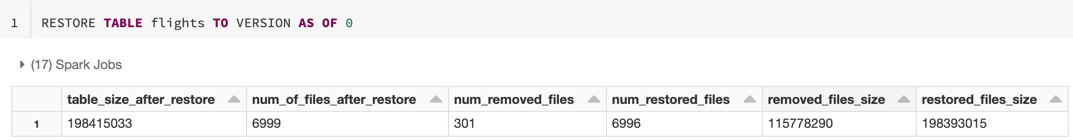

Restore metrics

RESTORE reports the following metrics as a single row DataFrame once the operation is complete:

table_size_after_restore : The size of the table after restoring.

num_of_files_after_restore : The number of files in the table after restoring.

num_removed_files : Number of files removed (logically deleted) from the table.

num_restored_files : Number of files restored due to rolling back.

removed_files_size : Total size in bytes of the files that are removed from the table.

restored_files_size : Total size in bytes of the files that are restored.

Examples of using Delta Lake time travel

Fix accidental deletes to a table for the user 111 :

Fix accidental incorrect updates to a table:

Query the number of new customers added over the last week.

How do I find the last commit’s version in the Spark session?

To get the version number of the last commit written by the current SparkSession across all threads and all tables, query the SQL configuration spark.databricks.delta.lastCommitVersionInSession .

If no commits have been made by the SparkSession , querying the key returns an empty value.

If you share the same SparkSession across multiple threads, it’s similar to sharing a variable across multiple threads; you may hit race conditions as the configuration value is updated concurrently.

Was this page helpful?

Additional resources

- Write For US

- Join for Ad Free

Time Travel with Delta Tables in Databricks?

- Post author: rimmalapudi

- Post category: Apache Spark

- Post last modified: March 27, 2024

- Reading time: 11 mins read

What is time travel in the delta table on Databricks? In modern-day to day ETL activities, we see a huge amount of data trafficking into the data lake. There are always a few rows inserted, updated, and deleted. Time travel is a key feature present in Delta Lake technology in Databricks.

Delta Lake uses transaction logging to store the history of changes on your data and with this feature, you can access the historical version of data that is changing over time and helps to go back in time travel on the delta table and see the previous snapshot of the data and also helps in auditing, logging, and data tracking.

Table of contents

1. challenges in data transition, 2. what is delta table in databricks, 3 create a delta table in databricks, 4. update delta records and check history, 5.1. using the timestamp, 5.2. using version number, 6. conclusion.

- Auditing : Looking over the data changes is critical to keep data in compliance and for debugging any changes. Data Lake without a time travel feature is failed in such scenarios as we can’t roll back to the previous state once changes are done.

- Reproduce experiments & reports : ML engineers try to create many models using some given set of data. When they try to reproduce the model after a period of time, typically the source data has been modified and they struggle to reproduce their experiments.

- Rollbacks : In the case of Data transitions that do simple appends, rollbacks could be possible by date-based partitioning. But in the case of upserts or changes by mistake, it’s very complicated to roll back data to the previous state.

Delta’s time travel capabilities in Azure Databricks simplify building data pipelines for the above challenges. As you write into a Delta table or directory, every operation is automatically versioned and stored in transactional logs. You can access the different versions of the data in two different ways:

Now, let us create a Delta table and perform some modifications on the same table and try to play with the Time Travel feature.

In Databricks the time travel with delta table is achieved by using the following.

- Using a timestamp

- Using a version number

Note: By default, all the tables that are created in Databricks are Delta tables.

Here, I am using the community Databricks version to achieve this ( https://community.cloud.databricks.com/ ). Create a cluster and attach the cluster to your notebook.

Let’s create the table and insert a few rows to the table.

You will get the below output.

Here we have created a student table with some records and as you can see it’s by default provided as delta.

Let’s update the student delta table with id’s 1,3 and delete records with id 2. Add another cell to simulate the update and delete the row in the table

You will see something below on databricks.

As you can see from the above screenshot, there are total 4 versions since the table is created, with the top record being the most recent change

- version0: Created the student table at 2022-11-14T12:09:24.000+0000

- version1: Inserted records into the table at 2022-11-14T12:09:29.000+0000

- version2: Updated the values of id’s 1,3 at 2022-11-14T12:09:35.000+0000

- version3: Deleted the record of id’s 2 at 2022-11-14T12:09:39.000+0000

Notice that the describe result shows the version , timestamp of the transaction that occurred, operation , parameters , and metrics . Metrics in the results show the number of rows and files changed.

5. Query Delta Table in Time Travel

As we already know Delta tables in Databricks have the time travel functionality which can be explored either using timestamp or by version number.

Note : Regardless of what approach you use, it’s just a simple SQL SELECT command with extending “ as of ”

First, let’s see our initial table, the table before I run the update and delete. To get this let’s use the timestamp column.

Now let’s see how the data is updated and deleted after each statement.

From the above examples, I hope it is clear how to query the table back in time.

As every operation on the delta table is marked with a version number, and you can use the version to travel back in time as well.

Below I have executed some queries to get the initial version of the table, after updating and deleting. The result would be the same as what we got with a timestamp .

Time travel of Delta table in databricks improves developer productivity tremendously. It helps:

- Data scientists and ML experts manage their experiments better by going back to the source of truth.

- Data engineers simplify their pipelines and roll back bad writes.

- Data analysts do easy reporting.

- Rollback to the previous state.

Related Articles

- Spark Timestamp – Extract hour, minute and second

- Spark Timestamp Difference in seconds, minutes and hours

- Spark – What is SparkSession Explained

- Spark Read XML file using Databricks API

- Spark Performance Tuning & Best Practices

- Spark Shell Command Usage with Examples

- datalake-vs-data-warehouse

- What is Apache Spark and Why It Is Ultimate for Working with Big Data

- Apache Spark Interview Questions

This Post Has One Comment

Nice article!

Comments are closed.

How to Rollback a Delta Lake Table to a Previous Version with Restore

When you’re working with a plain vanilla data lake, rolling back errors can be extremely challenging, if not impossible – especially if files were deleted. The ability to undo mistakes is a huge benefit that Delta Lake offers end users. Unlike, say, a plain vanilla Parquet table, Delta Lake preserves a history of the changes you make over time, storing different versions of your data. Rolling back your Delta Lake table to a previous version with the restore command can be a great way to reverse bad data inserts or undo an operation that mutated the table in unexpected ways.

This post shows you how to roll back a Delta Lake table, and explains when you can’t roll back because a table has been vacuumed. We’ll also look at Delta Lake’s time travel capabilities along the way. Let’s start with the syntax, and then dive into a full example to demonstrate the functionality.

TL;DR Delta Lake restore API

You can roll back a Delta Lake table to any previous version with the restoreToVersion command in PySpark:

You can also roll back a Delta Lake table with SQL:

In addition, you can restore Delta Lake tables to different points in time with restoreToTimestamp .

Delta Lake restore use cases

Having a versioned data lake is excellent for time travel, but it’s also invaluable for undoing mistakes. Delta Lake’s restore functionality provides great flexibility. You can use it to easily roll back unwanted operations, preserving a full change history.

Perhaps your data ingestion process broke, and you loaded the same data twice – you can undo this operation with a single command. You might also decide to roll back your Delta Lake table to a previous version because you executed a command with unintended consequences. Or perhaps a data vendor sent you some information mistakenly and they’d like you to delete it from your data lake – you can roll back to a prior version and then vacuum the table to remove the data permanently, as you'll see later in this post. In short, the Delta Lake restore functionality is yet another example of how Delta Lake makes your life easier as a developer.

Example setup: Create a Delta Lake table with multiple versions

To demonstrate the restore functionality, let’s create a Delta Lake table with three different versions. We’ll start by creating a simple table with a few rows of data. This will be stored as version 0:

Now we’ll overwrite that table with some other data. This will be stored as version 1:

Finally, we’ll create version 2 by again overwriting the previous data with some new data:

This image shows the three different versions of the Delta Lake table:

You can see that the Delta Lake contains the latest version of the data when it’s read, by default:

Time traveling in Delta Lake

Delta Lake makes it easy to access different versions of your data. For example, you can time travel back to version 0 of your Delta Lake table to see the original data that was stored when you created it. During time travel we are loading the table up to some version - in this case, we’re loading up to the initial version.

Here’s how to time travel back to version 0 of our example Delta Lake table:

And here’s how to time travel back to version 1:

Time travel is a temporary read operation, though you can write the result of a time travel operation into a new Delta table if you wish. If you read the contents of your table again after issuing one of the previous commands, you will see the latest version of the data (in our case, version 2); an earlier version is only returned if you explicitly time travel.

The restore command permits a more permanent form of time travel: you can use this to revert the Delta Lake table back to a prior version.

Delta Lake restore under the hood

Let’s restore our example Delta Lake table to version 1:

Now when you read the table, you can see it contains the data as of version 1:

Note, however, that this change does not erase version 2; instead, a metadata-only operation is performed in which the changes in version 2 are undone. This means you can still time travel to versions 0, 1, or 2 of the Delta Lake table, even after running the restoreToVersion command. Let’s time travel to version 2 of the Delta Lake table to demonstrate the data is preserved:

Using the restore command resets the table’s content to an earlier version, but doesn’t remove any data. It simply updates the transaction log to indicate that certain files should not be read. The following diagram provides a visual representation of the transaction log entries for each transaction.

“Add file” and “Remove file” in the transaction log don’t refer to physical filesystem operations on disk; they are logical metadata entries that Delta Lake uses to determine which files should be read.

To completely remove a later version of the data after restoring to a previous version, you need to run the Delta Lake vacuum command. We’ll look at that command and its effects next.

Delta Lake restore after vacuum

vacuum is a widely used command that removes files that are not needed by the latest version of the table. Running vacuum doesn’t make your Delta Lake operations any faster, but it removes files on disk, which reduces storage costs.

Now that we’ve restored our Delta Lake table to version 1, let’s run the vacuum command and see what happens:

As expected, reading the contents still returns the table’s data as of version 1:

But now that we’ve vacuumed, we can no longer time travel to version 2. The vacuum command has removed the version 2 data, so we can’t access this version anymore. We can’t access version 0 anymore either, as shown in the diagram.

Suppose we try to run this code to restore to version 2:

Here’s the error we'll get:

This post showed you how to view and restore earlier versions of your Delta Lake tables and taught you a little about how the code works under the hood. The ability to roll back changes and restore your data to a previous version is a huge advantage of Delta Lake compared to plain vanilla Parquet data lakes. Having access to versioned data makes your life easier and saves you from costly mistakes.

You can follow the Delta Lake project on LinkedIn or join our Slack community . We have a large, friendly, and growing community and we encourage you to join. We’re welcoming to newcomers and are happy to help you with your questions.

Follow our authors on

- +(1) 647-467-4396

- hello@knoldus.com

- hello@knoldus.com

Time Travel: Data versioning in Delta Lake

In today’s Big Data world, we process large amounts of data continuously and store the resulting data into data lake. This keeps changing the state of the data lake. But, sometimes we would like to access a historical version of our data. This requires versioning of data. Such kinds of data management simplifies our data pipeline by making it easy for professionals or organizations to audit the data changes, roll back to the previous version in case of accidental bad writes or deletes and so on. Apache Spark, alone can’t provide these kinds of capabilities but with the help of Databricks Delta, the next-gen unified analytics engine built on top of Apache Spark, introduces such unique Time Travel capabilities.

Time Traveling using Delta Lake

When we write our data into a Delta table, every operation is automatically versioned and we can access any version of data. This allows us to travel back to a different version of the current delta table.

This time-traveling can be achieved using 2 approaches: 1. Using a version number 2. Using a timestamp Let’s understand these approaches with an example:

In the above code snippet, Job-1 writes a record of 100 integers from 100 to 200 into the delta table. Similarly, Job-2 updates the record created by Job-1 with 100 integers from 1 to 100.

Now, let’s find the latest history of the delta table.

As seen above, both operations have unique version numbers and time stamps. This helps to audit the data changes in the data lake.

1. Using a version number

In the above code snippet, read() reads version 0 of the current delta table. In the same manner, we can pass our required version number to read(). Thus, using the version number of the operation, we can read the required version of the data table.

2. Using a timestamp

First, find the timestamp of Job-1

Second, using the timestamp read the historical version of the delta table

Time Travel Use Cases

Delta Lake time travel allows us to query an older snapshot of a Delta Lake table. Time travel has many use cases, including:

- Time travel makes it easy to do rollbacks in case of bad writes, playing an important role in fixing mistakes in our data.

- It helps in re-creating analysis, reports, or outputs (for example, the output of a machine learning model). This could be useful for debugging or auditing, especially in regulated industries.

- It also simplifies time-series analytics. For instance, in finding out how many new customers were added over the last week.

The source code can be found here

Thanks for reading!!

Share the Knol:

- Click to share on LinkedIn (Opens in new window)

- Click to share on Twitter (Opens in new window)

- Click to share on Telegram (Opens in new window)

- Click to share on Facebook (Opens in new window)

Written by kundankumarr

Exploring Big Data Technologies.

4 thoughts on “ Time Travel: Data versioning in Delta Lake 4 min read ”

The best post on Delta Lake I have ever read. Nice article!

Nice article very helpful

Very nicely written. It helped in understanding. But do we really need `option(“overwriteSchema”, “true”)` when Job2 is overwriting the data? As the schema is still of Integer type!

Hi Sarfaraz, Thanks for the comment. Actually there is no need of `option(“overwriteSchema”, “true”)` for the above example as schema will be same for both the jobs (id: long). It will be helpful to overcome schema mismatch when we write job-2 that results with different schema type only.

Comments are closed.

Discover more from Knoldus Blogs

Subscribe now to keep reading and get access to the full archive.

Type your email…

Continue reading

Tech. Jobs. Biz. Success

Databricks time travel: tutorial on recovering delta tables.

- Posted on April 18, 2024 April 22, 2024

If you’ve accidentally written incorrect data to a Delta Lake table in Databricks, you can potentially recover the table using Delta Lake’s time travel feature. Time travel allows you to access previous versions of a Delta table, making it possible to revert to a point before the incorrect data was written.

Table of contents

Identify the Correct Version

Restore the table:, verify the data, databricks time travel to recover a delta lake table.

Determine the version of the Delta Lake table that contains the correct data. You can view the history of changes to a Delta table using the DESCRIBE HISTORY command in Databricks.

This command will show you a list of all the versions of the Delta table along with timestamps and actions performed.

Once you’ve identified the correct version, you can restore the Delta Lake table to that version using the RESTORE command.

After restoring the table, verify that the correct data has been recovered by querying the table.

Ensure that the data matches your expectations and that the incorrect data has been replaced with the correct version.

By using Delta Lake’s time travel feature, you can go back to an earlier version of a Delta table and restore it to a state where the wrong data wasn’t there. But keep in mind that time travel is affected by retention policies and older versions of a Delta table might be deleted automatically based on those policies. So it’s important to act quickly to recover the table before the version you want is removed.

Share this:

- Share on Tumblr

Author: Srini

Experienced Data Engineer, having skills in PySpark, Databricks, Python SQL, AWS, Linux, and Mainframe View all posts by Srini

- Already have a WordPress.com account? Log in now.

- Subscribe Subscribed

- Copy shortlink

- Report this content

- View post in Reader

- Manage subscriptions

- Collapse this bar

You must be logged in to post a comment.

DataSimAntics

Less Talk, More Examples

Delta Table Time Travel – How Far Back Does it Go?

When it comes to Time Travel, let’s face it. We all have a lot of questions. If I go back in time and kill my father, do I kill myself, too? Or does it spawn a new timeline? Can I meet dinosaurs and if I step on a bug, will it end life as we know it? If I meet teenage me, what should I say? Should I say anything?

And maybe the biggest question of all:

If I want to see the oldest data in my Delta table, how far back can I go?

For this question, the answer is, of course, it depends .

Microsoft Documents provides some answers there. In the Table utility commands page for Azure Databricks, we get some explanation. The answers apply to Delta tables in general as it is a standard format, though Databricks may sometimes be ahead of others on the version of Delta tables that it is using. We can also get our answer from Delta.io docs article Table batch reads and writes .

You Have to Have Both Data File and Log File to Time Travel

This is the most important caveat. You can go as far back as you have both the data file and the log file. If you don’t have both the log file and the data file, then there will be no time travel to that version of the data. The data will be gone. But you have to get rid of that data yourself for it to be gone, it won’t just disappear after a certain period of time.

Data Files are Never Deleted Automatically – They Must be Vacuum ed

The Good News: The vacuum command must be ran to remove data files no longer referenced by a Delta table.

This command will not be ran automatically, so it would have to be a command that you implement in your solution somewhere. Hence, if you don’t want it to be vacuum ed, don’t vacuum it. That is easier said than done as support personnel may inadvertently run a vacuum. There is no way to stop a vacuum from being run.

NOTE: vacuum the default retention is 7 days.

There are a couple of configurations that you can make in the event that a vacuum command is ran.

delta.deletedFileRetentionDuration – How Long Files are Kept

delta.deletedFileRetentionDuration = "interval <interval>" . Default is “ interval 7 days “.

The delta.deletedFileRetentionDuration command, for example, it can be set to "interval 30 days". That means even if someone runs a vacuum command, it won’t be applied to the first 30 days worth of versions that you have already deleted right now. In other words, files that have been deleted 29 days ago can’t be vacuumed.

Obviously, the more files you have in storage, the more expensive it will be. As with anything, it’s a balancing act to determine what your needs are, and will largely depend on use cases for each organization.

Log Files are Deleted Automatically After Checkpoints

For more information on how the delta log works, check out this article by Databricks, Diving Into Delta Lake: Unpacking the Transaction Log .

Checkpoint?!

“Don’t act so surprised, your highness.”

A checkpoint is essentially a re-write of the Delta table after ten commits are made. This enables Spark to more easily work with new data coming in as it can skip anything that is before the current checkpoint and get to the new data more quickly.

delta.logRetentionDuration – How Long Log History is Kept

delta.logRetentionDuration = "interval <interval>" : controls how long the history for a table is kept. The default is interval 30 days .

Having a multitude of log files is not something that will cause contention with reading or writing data files. It is an operation that happens in parallel. Your biggest expense here, again, is storage.

How to Configure Tables to Keep a Long History for Time Travel

First, do this when the tables are not being written to. Schedule some downtime. Delta table properties are write operations, and will conflict with other write operations.

Next, decide on if you want to use Python or SQL to do this and then pick a very large number.

Finally, run the following commands as needed. They are really the same whichever way you run it. The nice part of using the python version, of course, is that it can be easier to iterate through an entire list of tables to get this done quickly.

SQL Version

Python version, share this:, leave a comment cancel reply.

- Already have a WordPress.com account? Log in now.

- Subscribe Subscribed

- Copy shortlink

- Report this content

- View post in Reader

- Manage subscriptions

- Collapse this bar

- Microsoft Azure

- Google Cloud Platform

- Documentation

- Databricks release notes

- Delta Live Tables release notes and the release upgrade process

Delta Live Tables release 2024.33

August 19 - 23, 2024

These features and improvements were released with the 2024.33 release of Delta Live Tables.

Databricks Runtime versions used by this release

CURRENT (default): Databricks Runtime 14.1

PREVIEW: Databricks Runtime 15.2

Because Delta Live Tables channel releases follow a rolling upgrade process, channel upgrades are deployed to different regions at different times. Your release, including Databricks Runtime versions, might not be updated until a week or more after the initial release date. To find the Databricks Runtime version for a pipeline, see Runtime information .

New features and improvements in this release

You can now use liquid clustering when you create materialized views or streaming tables in your pipelines. See the Delta Live Tables SQL and Python references. To use liquid clustering, your pipeline must be configured to use the preview channel .

You can now read the change data feed from streaming tables that are the target of APPLY CHANGES or APPLY CHANGES FROM SNAPSHOT processing and published to Unity Catalog. You read the change data feed from a target streaming table identically to reading a change data feed from other Delta tables. To read the change data feed in another Delta Live Tables pipeline, the pipeline must be configured to use the preview channel . See Read a change data feed from an APPLY CHANGES target table .

Databricks Knowledge Base

If you still have questions or prefer to get help directly from an agent, please submit a request. We’ll get back to you as soon as possible.

Please enter the details of your request. A member of our support staff will respond as soon as possible.

- All articles

Timestamp change to underlying Apache Parquet/change data files while using Change Data Capture (CDC)

For timestamp-based queries, ensure that the original file timestamps are preserved during the migration process.

Written by aimee.gonzalezcameron

When using Change Data Capture (CDC) to consume incremental data from an external table, you see that the underlying Apache Parquet or change data files’ timestamp changes.

This problem typically arises after moving the files to a different workspace and changing the underlying S3 bucket for the table. Despite copying all S3 files, including change data, to a new location and recreating the external table, CDC queries based on timestamp fail, while queries based on version number succeed.

Example of a failing query

Example of a successful query.

Both queries are intended to retrieve the same change data.

Delta Lake uses the file modification time to determine the timestamp of a commit.

When files are copied to a new S3 bucket, their timestamps change, and there is no option to preserve the original timestamps. As a result, CDC queries based on timestamp fail because they rely on the physical timestamp of the files, which no longer matches the original commit times. In contrast, version-based queries succeed because the delta_log versions remain consistent, regardless of the file timestamps.

Delta Lake's behavior is documented in a GitHub issue .

Cloning a Delta table creates a separate history, affecting time travel queries and change data feed. For more information, please refer to the Clone a table on Databricks ( AWS | Azure | GCP ) and Use Delta Lake change data feed on Databricks ( AWS | Azure | GCP ) documentation.

For timestamp-based queries, ensure that the original file timestamps are preserved during the migration process. If this is not possible, rely on version-based queries to retrieve change data.

Monitor the development of the in-commit timestamp feature, which is currently in preview. This feature aims to address the issue by using commit timestamps instead of file modification times. You can contact your account team to sign up for the Databricks private preview to access this feature earlier.

Review the Delta 4.0 roadmap and plan for its adoption once it becomes generally available in Databricks Runtime 16.x, as it includes enhancements to address this issue.

- Amazon Web Services

- Microsoft Azure

- Documentation

- Develop on Databricks

- Developer tools and guidance

- What is the Databricks extension for Visual Studio Code?

Configure your Databricks project using the Databricks extension for Visual Studio Code

This documentation is for the Databricks extension for Visual Studio Code, version 2, which is in Public Preview .

The Databricks extension for Visual Studio Code offers a Configuration view within the extension panel that allows you to easily configure and update settings for your Databricks project. These features include a target workspace deployment selector, easy configuration of authentication and compute, workspace folder sync, and simple steps for activating the Python virtual environment necessary for debugging.

The Configuration view within the Databricks extension for Visual Studio Code is available once you have created or migrated a project to a Databricks project. See Create a new Databricks project .

Earlier versions of the Databricks extension for Visual Studio Code defined configuration settings in a project JSON file and environment variables were set in the terminal. In the release version, project and environment configuration is found in the databricks.yml and databricks.env files.

If your project is a Databricks Asset Bundle , the Databricks extention UI also provides a Bundle Resource Explorer and a Bundle Variables View to manage your bundle resources and variables. See Databricks Asset Bundles extension features .

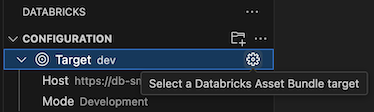

Change the target deployment workspace

To select or switch the deployment target for your Databricks project (for example, to switch from a dev target to a prod target):

In the Configuration view of the Databricks extension panel, click the gear icon ( Select a Databricks Asset Bundle target ) associated with Target .

In the Command Palette , select the desired deployment target.

Once a target is configured, the Host and deployment Mode is displayed. For information about Databricks Asset Bundles deployment modes, see Databricks Asset Bundle deployment modes .

The workspace host can be changed by modifying the target workspace setting in the databricks.yml configuration file associated with the project. See targets .

The following Databricks extension for Visual Studio Code features are only available when the target deployment mode is development:

Use the attached development cluster for bundle jobs

Sync workspace folder files

Select an interactive development cluster

Configure the Databricks profile for the project

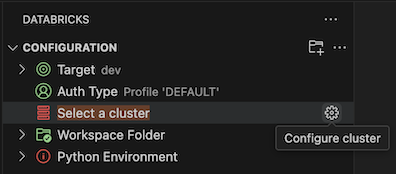

When you create a Databricks project or migrate a project to be a Databricks project, you configure a profile that includes authentication settings used to connect to Databricks. If you want to change the authentication profile used, click the gear icon associated with AuthType in the Configuration view.

For more information Databricks extension for Visual Studio Code authentication, see Authentication setup for the Databricks extension for Visual Studio Code .

Select a cluster for running code and jobs

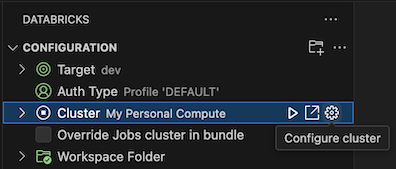

Using the Databricks extension for Visual Studio Code, you can select an existing Databricks cluster or create a new Databricks cluster for running your code and jobs. Once you have connected to compute, the cluster’s ID, Databricks Runtime version, creator, state, and access mode are displayed. You can also start and stop the cluster, and navigate directly to the cluster’s page details.

If you don’t want to wait for the jobs cluster to start up, check Override Jobs cluster in bundle just below the cluster selection to use the selected cluster for running bundle jobs in development mode.

Use an existing cluster

If you have an existing Databricks cluster that you want to use:

In the Configuration view, next to Cluster , click Select a cluster or the gear ( Configure cluster ) icon.

In the Command Palette , select the cluster that you want to use.

Create a new cluster

If you do not have an existing Databricks cluster, or you want to create a new one:

In the Configuration view, next to Cluster , click the gear ( Configure cluster ) icon.

In the Command Palette , click Create New Cluster .

When prompted to open the external website (your Databricks workspace), click Open .

If prompted, sign in to your Databricks workspace.

Follow the instructions to create a cluster .

Databricks recommends that you create a Personal Compute cluster. This enables you to start running workloads immediately, minimizing compute management overhead.

After the cluster is created and is running, go back to Visual Studio Code.

In the Command Palette , click the cluster that you want to use.

Sync your workspace folder with Databricks

You can sync the remote Databricks workspace folder associated with your Databricks project by clicking the sync icon ( Start synchronization ) associated with Workspace Folder in the Configuration view of the Databricks extension panel.

The Databricks extension for Visual Studio Code works only with workspace directories that it creates. You cannot use an existing workspace directory in your project unless it was created by the extension.

To navigate to the workspace view in Databricks, click the external link icon ( Open link externally ) icon associated with Workspace Folder .

The extension determines the Databricks workspace folder to use based on the file_path setting in the workspace mapping of the project’s associated Databricks Asset Bundle configuration. See workspace .

The Databricks extension for Visual Studio Code only performs one-way, automatic synchronization of file changes from your local Visual Studio Code project to the related workspace folder in your remote Databricks workspace. The files in this remote workspace directory are intended to be transient. Do not initiate changes to these files from within your remote workspace, as these changes will not be synchronized back to your local project.

For usage details on the workspace directory sync feature for earlier versions of the Databricks extension for Visual Studio Code, see Select a workspace directory for the Databricks extension for Visual Studio Code .

Set up your Python environment and Databricks Connect

The Python Environment section of the Configuration view enables easy setup of your Python virtual development environment and installation of Databricks Connect for running and debugging code and notebook cells. Python virtual environments make sure that your project is using compatible versions of Python and Python packages (in this case, the Databricks Connect package).

To configure the Python virtual environment for your project, in the Configuration view of the extension panel:

Click the red Activate Virtual Environment item under Python Environment .

In the Command Palette , select Venv or Conda.

Select the dependencies you want to install, if any.

To change environments, click the gear icon ( Change virtual environment ) associated with Active Environment .

For information about installing Databricks Connect, which enables running and debugging code and notebooks within Visual Studio Code, see Debug code using Databricks Connect for the Databricks extension for Visual Studio Code .

IMAGES

VIDEO

COMMENTS

Databricks solves this reproducibility problem by integrating Delta's time-travel capabilities with MLflow, an open source platform for the machine learning lifecycle. For reproducible machine learning training, you can simply log a timestamped URL to the path as an MLflow parameter to track which version of the data was used for each training job.

Each operation that modifies a Delta Lake table creates a new table version. You can use history information to audit operations, rollback a table, or query a table at a specific point in time using time travel. Databricks does not recommend using Delta Lake table history as a long-term backup solution for data archival.

Now, let us create a Delta table and perform some modifications on the same table and try to play with the Time Travel feature. In Databricks the time travel with delta table is achieved by using the following. Using a timestamp. Using a version number. Note: By default, all the tables that are created in Databricks are Delta tables.

Delta Lake time travel vs. data lake support. Delta Lake makes it easy to time travel between different versions of a Delta table. It's a straightforward operation that's a natural extension of the Delta Lake transaction log. Data lakes do not support time travel. When you're reading a data lake, you always have to read the latest version.

Delta Lake supports time travel, which allows you to query an older snapshot of a Delta table. One common use case is to compare two versions of a Delta table in order to identify what changed. For more details on time travel, please review the Delta Lake time travel documentation (AWS | Azure | GCP). Identify all differences

Version as of: this allows you to query specific version of the data. Each operation that modifies a Delta Lake table creates a new table version. This is useful when you want to audit operations, rollback a table , or query a table at a specific version. If you are interested in the state of the data at a specific point in time, use the timestamp.

Delta Lake time travel supports querying previous table versions based on timestamp or table version (as recorded in the transaction log). Below is the latest version data of employee_delta table.

Hi @oishimbo, Databricks, with its Delta Lake, provides a powerful mechanism for capturing data changes over time. Here are some key points to consider: Delta Lake Time Travel: Each operation that modifies a Delta Lake table creates a new table version. You can use the history information to: Audit operations.

The default threshold is 7 days. Databricks does not automatically trigger LAMBDA VACUUM operations on Delta tables. See Add files for future reference by a Delta table. If you run LAMBDA VACUUM on a Delta table, you gain the ability to time travel forward to a version older than the specified data extrapolation period.

Time travel is a temporary read operation, though you can write the result of a time travel operation into a new Delta table if you wish. If you read the contents of your table again after issuing one of the previous commands, you will see the latest version of the data (in our case, version 2); an earlier version is only returned if you explicitly time travel.

Jan 24, 2023. Data Versioning is the process of keeping track of multiple versions of a dataset. This becomes crucial when dealing with large volumes of data for training machine learning models ...

We would like to compare the latest version of the table with the previous one using Delta time travel. The main issue we are facing is to retrieve programmatically using SQL the N-1 version and give it as a parameter to our SQL query. The idea is to get something like this: %sql select * from schedule@vN except all select * from schedule@vN-1

When we write our data into a Delta table, every operation is automatically versioned and we can access any version of data. This allows us to travel back to a different version of the current delta table. 1. Using a version number. 2. Using a timestamp. Dataset<Long> data = sparkSession.range(100, 200); data.write().mode("overwrite").format ...

Options. 12-14-2023 06:50 AM. Delta Live Tables leverage Delta Lake, or Delta Tables. Delta tables, through transactions (e.g. insert, update, delete, merges, optimization) create versions of said Delta Table. Once a version is created it cannot be altered, it is immutable.

By using Delta Lake's time travel feature, you can go back to an earlier version of a Delta table and restore it to a state where the wrong data wasn't there. But keep in mind that time travel is affected by retention policies and older versions of a Delta table might be deleted automatically based on those policies.

The answers apply to Delta tables in general as it is a standard format, though Databricks may sometimes be ahead of others on the version of Delta tables that it is using. We can also get our answer from Delta.io docs article Table batch reads and writes. You Have to Have Both Data File and Log File to Time Travel. This is the most important ...

The transaction log is key to understanding Delta Lake because it is the common thread that runs through many of its most important features, including ACID transactions, scalable metadata handling, time travel, and more. In this article, we'll explore what the Delta Lake transaction log is, how it works at the file level, and how it offers an elegant solution to the problem of multiple ...

First, lets creat table to experiment with delta time travel. I am using a Databricks notebook to run the code. ... It looks like following with 2 entries Version 0, and version 1. History Tab. History Tab extended. Now, lets try some more inserts / updates on the table. I am inserting 5 more rows, and updating 3 rows

New features and improvements in this release. You can now use liquid clustering when you create materialized views or streaming tables in your pipelines. See the Delta Live Tables SQL and Python references. To use liquid clustering, your pipeline must be configured to use the preview channel.. You can now read the change data feed from streaming tables that are the target of APPLY CHANGES or ...

This problem typically arises after moving the files to a different workspace and changing the underlying S3 bucket for the table. Despite copying all S3 files, including change data, to a new location and recreating the external table, CDC queries based on timestamp fail, while queries based on version number succeed. Example of a failing query

Time travel and version control- can create custom version control for each day data load when multiple updates happening in a day. For example , let's assume we are doing multiple operation on table in a day every minute and want to keep time travel history for each day of latest version of a day for one week . ... Databricks Inc. 160 Spear ...

The Databricks extension for Visual Studio Code offers a Configuration view within the extension panel that allows you to easily configure and update settings for your Databricks project. These features include a target workspace deployment selector, easy configuration of authentication and compute, workspace folder sync, and simple steps for activating the Python virtual environment necessary ...

With Nimble's integration into the Databricks Marketplace, businesses can now seamlessly enhance their Databricks Intelligence Platform by integrating real-time, domain-specific web data. This connection enables users to extract maximum value from their AI and BI applications, generating prescriptive insights that drive business success.