Traveling Salesperson Problem


A salesperson needs to visit a set of cities to sell their goods. They know how many cities they need to go to and the distances between each city. In what order should the salesperson visit each city exactly once so that they minimize their travel time and so that they end their journey in their city of origin?

The traveling salesperson problem is a very old problem in computer science that is an extension of the Hamiltonian Circuit Problem. It has important implications in complexity theory and the P versus NP problem: its decision version is NP-Complete, and its optimization version is NP-Hard. This means that no polynomial-time algorithm for it is known, and none exists unless \(P = NP\). Every known exact algorithm takes superpolynomial time in the worst case. In other words, as the number of vertices increases linearly, the computation time to solve the problem increases exponentially or faster.

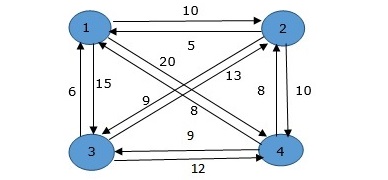

The following image is a simple example of a network of cities connected by edges of a specific distance. The origin city is also marked.

Network of cities

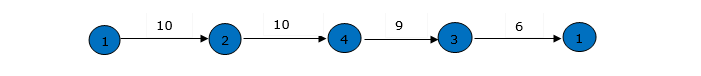

Here is the solution for that network. It has a total distance of only 14; any other tour that the salesperson can take will have a length of more than 14.

Relationship to Graphs


The traveling salesperson problem can be modeled as a graph. Specifically, it is typically a weighted graph, and a directed one when the cost of travel depends on direction. Each city acts as a vertex and each path between cities is an edge. Each edge has a weight associated with it, which generalizes distance to any travel cost. In this model, the goal of the traveling salesperson problem can be defined as finding a cycle that visits every vertex exactly once, returns to the original vertex, and minimizes total weight.

To that end, many graph algorithms can be used on this model. Search algorithms like breadth-first search (BFS), depth-first search (DFS), and Dijkstra's shortest path algorithm can certainly be run on it; however, on their own they do not take into consideration the fact that every vertex must be visited.

The Traveling Salesperson Problem (TSP) is notoriously hard to solve exactly, because the brute-force approach is so computationally intensive. The brute-force approach to solving this problem is to try every single possible path and see which one is the shortest. Try this conceptual question to see if you have a grasp of how hard it is to solve.

For a fully connected map with \(n\) cities, how many total paths are possible for the traveling salesperson?

Answer: There are \((n-1)!\) total paths the salesperson can take. The computation needed to solve this problem in this way grows far too quickly to be a reasonable solution. If this map has only 5 cities, there are \(4!\), or 24, paths. However, if the size of this map is increased to 20 cities, there will be \(19! \approx 1.22 \cdot 10^{17}\) paths!

The brute-force (exhaustive) approach to TSP would go like this:

- Find all possible paths.

- Find the cost of every path.

- Choose the path with the lowest cost.

A truly greedy approach would instead be: at every step in the algorithm, choose the best available next move. This version runs much faster, but it's not guaranteed to find the best answer, or, in a graph that is not fully connected, any answer at all, since it might hit a dead end.

For NP-Hard problems like TSP (NP-Complete problems are the subset of NP-Hard problems that also belong to NP), exact solutions can only be computed in a reasonable amount of time for small input sizes (maps with few cities). Otherwise, the best we can do is use a heuristic to steer the search toward a good solution. However, heuristics generally cannot guarantee that the solution they find is optimal.

Small input sizes

As described in a previous section, the brute-force approach to this problem has a complexity of \(O(n!)\). However, there are some approaches that decrease this computation time.

The Held-Karp Algorithm is one of the earliest applications of dynamic programming. Its complexity, \(O(n^2 2^n)\), is much lower than that of the brute-force approach, although it is still exponential. The algorithm relies on the fact that every subpath of an optimal path is itself an optimal path over the cities it visits. So, an optimal tour can be assembled from the optimal solutions to many smaller subproblems.
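The dynamic-programming idea can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `held_karp`, the bitmask encoding of visited cities, and the 4-city matrix `example` are all assumptions made for this sketch.

```python
from itertools import combinations


def held_karp(dist):
    """Return the cost of the cheapest tour that starts and ends at city 0."""
    n = len(dist)
    if n == 1:
        return 0
    # best[(mask, j)]: cheapest way to leave city 0, visit exactly the
    # cities whose bits are set in mask, and finish at city j (j is in mask).
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                without_j = mask & ~(1 << j)
                # Reuse the optimal subpaths that end at each other city k.
                best[(mask, j)] = min(
                    best[(without_j, k)] + dist[k][j] for k in subset if k != j
                )
    everyone = (1 << n) - 2  # every city except city 0
    # Close the tour by returning to city 0 from the best final city.
    return min(best[(everyone, j)] + dist[j][0] for j in range(1, n))


# Hypothetical 4-city distance matrix, used only for illustration.
example = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
optimal_cost = held_karp(example)
```

The table `best` holds one entry per (subset, end city) pair, which is where the \(2^n\) factor in the running time and memory comes from.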

Heuristics are a way of ranking possible next steps in an algorithm in the hopes of cutting down computation time for the entire algorithm. They are often a tradeoff of some attribute - such as completeness, accuracy, or precision - in favor of speed. Heuristics exist for the traveling salesperson problem as well.

The simplest heuristic for this problem is the greedy heuristic. At each step of the network traversal, it chooses the best next step. In other words, it always moves to the closest city that has not yet been visited. This heuristic is simple and intuitive, and it is even used in practice sometimes; however, there are heuristics that are proven to be more effective.

Christofides' algorithm is another heuristic. When distances satisfy the triangle inequality, it produces a tour of at most 1.5 times the optimal weight. The algorithm first finds a minimum spanning tree for the network. Next, it computes a minimum-weight perfect matching on the vertices that have odd degree in that tree (meaning an odd number of tree edges touch them), combines the tree with the matching, calculates an Eulerian circuit of the result, and skips repeated vertices to convert it into a TSP tour.

Even though it is typically impractical to solve large TSP instances optimally, there are special cases of TSP for which better guarantees are possible if certain conditions hold.

The metric-TSP is an instance of TSP that satisfies this condition: The distance from city A to city B is less than or equal to the distance from city A to city C plus the distance from city C to city B. Or,

\[distance_{AB} \leq distance_{AC} + distance_{CB}\]

This condition, the triangle inequality, holds for straight-line distances in the real world, but it can't be expected to hold for every TSP instance. With this inequality in place, a simple approximation built on a minimum spanning tree yields a tour no more than twice the length of the optimal one. Even better, we can bound the solution to a \(3/2\) approximation by using Christofides' algorithm.
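One standard way to obtain a factor-of-two guarantee under the triangle inequality is to build a minimum spanning tree and visit the cities in the order of a preorder walk of that tree. The sketch below assumes that this is the approximation meant here; the function names and the four sample points are hypothetical, and Euclidean points are used so that the inequality holds automatically.

```python
import math


def tour_length(points, tour):
    """Length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def mst_preorder_tour(points):
    """Build an MST with Prim's algorithm, then visit cities in preorder."""
    n = len(points)
    in_tree = [False] * n
    parent = [None] * n
    key = [math.inf] * n  # cheapest known edge connecting each city to the tree
    key[0] = 0.0
    children = [[] for _ in range(n)]
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: key[v])
        in_tree[u] = True
        if parent[u] is not None:
            children[parent[u]].append(u)
        for v in range(n):
            d = math.dist(points[u], points[v])
            if not in_tree[v] and d < key[v]:
                key[v], parent[v] = d, u
    # An iterative preorder walk skips cities that were already visited,
    # which is the "shortcut" step that the triangle inequality justifies.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    return tour


# Hypothetical example: the corners of a unit square (optimal tour length 4).
square = [(0, 0), (0, 1), (1, 1), (1, 0)]
approx_tour = mst_preorder_tour(square)
approx_length = tour_length(square, approx_tour)
```

The bound follows because the tree weighs no more than the optimal tour, and walking around the tree traverses each tree edge at most twice.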

The Euclidean TSP places an even stricter constraint on the TSP input: the weight of every edge in the network must be the Euclidean distance between its two cities. Euclidean TSP is still NP-Hard, but approximation algorithms that exploit this geometry, such as those built on Euclidean minimum spanning trees, have reduced the runtime needed to approximate it. In practice, though, simpler heuristics are still used.

The P versus NP problem is one of the leading questions in modern computer science. It asks whether or not every problem whose solution can be verified in polynomial time by a computer can also be solved in polynomial time by a computer. TSP, for example, is not known to be solvable in polynomial time, and it is widely believed that it cannot be. However, a proposed answer can be verified in polynomial time when the problem is phrased like this: Given a graph and an integer \(x\), decide if there is a tour of length \(x\) or less. Given a proposed tour, it is simple to check that it visits every city and that its length is less than or equal to \(x\).

The traveling salesperson problem, like other problems that are NP-Complete, is very important to this debate. That is because if a polynomial-time solution can be found for any one of these problems, then \(P = NP\). As it stands, most scientists believe that \(P \ne NP\).

The traveling salesperson problem has many applications. The obvious ones are in the transportation space. Planning delivery routes or flight patterns, for example, would benefit immensely from breakthroughs in this problem or in the P versus NP problem.

However, this same logic can be applied to many facets of planning as well. In robotics, for instance, planning the order in which to drill holes in a circuit board is a complex task due to the sheer number of holes that must be drilled.

The most important application of TSP, however, comes from the fact that its decision version is an NP-Complete problem. Every NP-Complete problem can be translated into any other in polynomial time, so its practical applications extend to those of any problem that is NP-Complete. If there is a significant breakthrough for TSP, such as a polynomial-time algorithm, that same breakthrough can be applied to any problem in the NP-Complete class.


Traveling Salesman Problem

The traveling salesman problem (TSP) asks the question, "Given a list of cities and the distances between each pair of cities, what is the shortest possible route that visits each city and returns to the origin city?"

This project

- The goal of this site is to be an educational resource to help visualize, learn, and develop different algorithms for the traveling salesman problem in a way that's easily accessible.

- As you apply different algorithms, the current best path is saved and used as input to whatever you run next (e.g. shortest path first -> branch and bound). The order in which you apply different algorithms to the problem is sometimes referred to as the meta-heuristic strategy.

Heuristic algorithms

Heuristic algorithms attempt to find a good approximation of the optimal path within a more reasonable amount of time.

Construction - Build a path (e.g. shortest path)

- Shortest Path

- Arbitrary Insertion

- Furthest Insertion

- Nearest Insertion

- Convex Hull Insertion*

- Simulated Annealing*

Improvement - Attempt to take an existing constructed path and improve on it

- 2-Opt Inversion

- 2-Opt Reciprocal Exchange*

Exhaustive algorithms

Exhaustive algorithms will always find the best possible solution by evaluating every possible path. These algorithms are typically significantly more expensive than the heuristic algorithms discussed above. The exhaustive algorithms implemented so far include:

- Random Paths

- Depth First Search (Brute Force)

- Branch and Bound (Cost)

- Branch and Bound (Cost, Intersections)*

Dependencies

These are the main tools used to build this site:

- web workers

- material-ui

Contributing

Pull requests are always welcome! Also, feel free to raise any ideas, suggestions, or bugs as an issue.

Depth First Search (Brute Force)

This is an exhaustive, brute-force algorithm. It is guaranteed to find the best possible path; however, depending on the number of points in the traveling salesman problem, it is likely impractical. For example (rounded figures, counting each route once regardless of direction, that is, (n-1)!/2 paths for n points):

- With 10 points, there are about 181,400 paths to evaluate.

- With 11 points, there are about 1,814,000.

- With 12 points, there are about 19,960,000.

- With 20 points, there are 60,820,000,000,000,000, give or take.

- With 25 points, there are 310,200,000,000,000,000,000,000, give or take.

This is factorial growth, and it quickly makes the TSP impractical to brute force. That is why heuristics exist to give a good approximation of the best path, but it is very difficult to determine without a doubt what the best path is for a reasonably sized traveling salesman problem.

This is a recursive, depth-first-search algorithm, as follows:

1. From the starting point

2. For all other points not visited

   - If there are no points left, return the current cost/path

   - Else, go to every remaining point and

     - Mark that point as visited

     - "Recurse" through those paths (go back to 1.)

Implementation
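A minimal Python sketch of the recursion described above follows. It is not the site's own code: the function name `dfs_tsp`, the distance-matrix input, and the sample matrix `dist` are assumptions made for illustration.

```python
import math


def dfs_tsp(dist, start=0):
    """Exhaustively try every route from start; return (cost, path)."""
    n = len(dist)
    best = {"cost": math.inf, "path": None}

    def recurse(path, visited, cost):
        if len(path) == n:
            # No points left: close the tour and compare with the best so far.
            total = cost + dist[path[-1]][start]
            if total < best["cost"]:
                best["cost"], best["path"] = total, path + [start]
            return
        for nxt in range(n):
            if nxt not in visited:
                visited.add(nxt)  # mark the point as visited
                recurse(path + [nxt], visited, cost + dist[path[-1]][nxt])
                visited.remove(nxt)  # unmark it before trying the next point

    recurse([start], {start}, 0)
    return best["cost"], best["path"]


# Hypothetical 4-point distance matrix, used only for illustration.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
best_cost, best_path = dfs_tsp(dist)
```

Because the start is fixed, the recursion explores (n-1)! orderings, so each route is evaluated once in each direction.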

Nearest Neighbor (Shortest Path)

This is a heuristic, greedy algorithm also known as nearest neighbor. It continually chooses the best looking option from the current state.

- Sort the remaining available points based on cost (distance)

- Choose the closest point and go there

- Chosen point is no longer an "available point"

- Continue this way until there are no available points, and then return to the start.
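The steps above can be sketched as follows. This is an illustrative sketch only; the function name and the sample matrix are assumptions, and `min` is used in place of a full sort because only the closest point is needed at each step.

```python
def nearest_neighbor(dist, start=0):
    """Greedy construction: always move to the closest available point."""
    tour = [start]
    available = set(range(len(dist))) - {start}
    while available:
        here = tour[-1]
        # Pick the cheapest point to reach from the current one.
        nxt = min(available, key=lambda city: dist[here][city])
        tour.append(nxt)
        available.remove(nxt)  # the chosen point is no longer available
    tour.append(start)  # return to the start
    return tour


# Hypothetical 4-point distance matrix, used only for illustration.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
nn_tour = nearest_neighbor(dist)
nn_cost = sum(dist[a][b] for a, b in zip(nn_tour, nn_tour[1:]))
```

On this small matrix the greedy tour happens to be optimal; in general it is not.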

Two-Opt inversion

This algorithm is also known as 2-opt, 2-opt mutation, and cross-aversion. The general goal is to find places where the path crosses over itself, and then "undo" that crossing. It repeats until no reversal makes the path shorter. A characteristic of this algorithm is that afterwards, for points connected by straight-line distances, the path is guaranteed to have no crossings.

- Repeat while a better path is still being found:

- For each pair of points:

- Reverse the path between the selected points.

- If the new path is cheaper (shorter), keep it and continue searching. Remember that we found a better path.

- If not, revert the path and continue searching.
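A compact sketch of this loop in Python is shown below. It favors clarity over speed by recomputing the full tour length for every candidate; the function names and the four sample points are assumptions made for illustration.

```python
import math


def tour_length(points, tour):
    """Length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def two_opt(points, tour):
    """Reverse segments of the tour for as long as doing so shortens it."""
    tour = list(tour)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                # Reverse the path between positions i and j (inclusive).
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                # The small tolerance avoids looping on floating-point noise.
                if tour_length(points, candidate) < tour_length(points, tour) - 1e-12:
                    tour = candidate  # keep the cheaper path
                    improved = True  # remember that we found a better path
    return tour


# Hypothetical example: a unit square visited in a self-crossing order.
corners = [(0, 0), (1, 0), (1, 1), (0, 1)]
crossing = [0, 2, 1, 3]
untangled = two_opt(corners, crossing)
```

Here the crossing tour has length of about 4.83, and a single reversal brings it down to the perimeter of the square.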

Random Paths

This is an impractical, albeit exhaustive, algorithm. It is here only for demonstration purposes, and it will not find a reasonable path for traveling salesman problems above 7 or 8 points.

I consider it exhaustive because if it runs for infinity, eventually it will encounter every possible path.

- From the starting path

- Randomly shuffle the path

- If it's better, keep it

- If not, ditch it and keep going

Arbitrary Insertion

This is a heuristic construction algorithm. It selects a random point, and then figures out where the best place to put it will be.

1. First, go to the closest point

2. Choose a random point to go to

3. Find the cheapest place to add it in the path

4. Repeat steps 2 and 3 until there are no available points, and then return to the start.
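As a rough sketch of these steps in Python: the function name, the `seed` parameter, and the sample matrix are assumptions made for illustration. The "cheapest place" is taken to be the position where inserting the point adds the least extra distance.

```python
import random


def arbitrary_insertion(dist, start=0, seed=None):
    """Insert points in random order at the cheapest position in the tour."""
    rng = random.Random(seed)
    remaining = [city for city in range(len(dist)) if city != start]
    if not remaining:
        return [start, start]
    # First, go to the closest point; the tour is kept closed at all times.
    first = min(remaining, key=lambda city: dist[start][city])
    remaining.remove(first)
    tour = [start, first, start]
    rng.shuffle(remaining)  # visit the other points in random order
    for city in remaining:
        # Extra cost of placing city between tour[p - 1] and tour[p].
        best_pos = min(
            range(1, len(tour)),
            key=lambda p: dist[tour[p - 1]][city]
            + dist[city][tour[p]]
            - dist[tour[p - 1]][tour[p]],
        )
        tour.insert(best_pos, city)
    return tour


# Hypothetical 4-point distance matrix, used only for illustration.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
inserted_tour = arbitrary_insertion(dist, seed=0)
```

Different seeds can produce different tours, since the insertion order is random.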

Two-Opt Reciprocal Exchange

This algorithm is similar to the 2-opt mutation or inversion algorithm, although it will generally find a less optimal path. However, calculating each new candidate solution is computationally cheaper.

The big difference with 2-opt mutation is not reversing the path between the 2 points. This algorithm is not always going to find a path that doesn't cross itself.

It could be worthwhile to try this algorithm prior to 2-opt inversion because of the cheaper cost of calculation, but probably not.

- Swap the points in the path. That is, go to point B before point A, continue along the same path, and go to point A where point B was.

Branch and Bound on Cost

This is a recursive algorithm, similar to depth first search, that is guaranteed to find the optimal solution.

The candidate solution space is generated by systematically traversing possible paths, and discarding large subsets of fruitless candidates by comparing the current solution to an upper and lower bound. In this case, the upper bound is the best path found so far.

While evaluating paths, if at any point the current solution is already more expensive (longer) than the best complete path discovered, there is no point continuing.

For example, imagine:

- A -> B -> C -> D -> E -> A was already found with a cost of 100.

- We are evaluating A -> C -> E, which has a cost of 110. There is no point evaluating the remaining solutions.

Instead of continuing to evaluate all of the child solutions from here, we can go down a different path, eliminating candidates not worth evaluating:

- A -> C -> E -> D -> B -> A

- A -> C -> E -> B -> D -> A

Implementation is very similar to depth first search, with the exception that we cut paths that are already longer than the current best.
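The pruning rule can be sketched by adding one check to a depth-first search. This is an illustrative sketch that assumes non-negative distances (otherwise a partial path that is already too expensive could still become cheaper); the function name and the sample matrix are hypothetical.

```python
import math


def branch_and_bound(dist, start=0):
    """Depth-first search that abandons paths costlier than the best tour."""
    n = len(dist)
    best = {"cost": math.inf, "path": None}

    def recurse(path, visited, cost):
        # Bound: this partial path already costs at least as much as the
        # best complete tour found so far, so none of its children can win.
        if cost >= best["cost"]:
            return
        if len(path) == n:
            total = cost + dist[path[-1]][start]
            if total < best["cost"]:
                best["cost"], best["path"] = total, path + [start]
            return
        for nxt in range(n):
            if nxt not in visited:
                visited.add(nxt)
                recurse(path + [nxt], visited, cost + dist[path[-1]][nxt])
                visited.remove(nxt)

    recurse([start], {start}, 0)
    return best["cost"], best["path"]


# Hypothetical 4-point distance matrix, used only for illustration.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
bb_cost, bb_path = branch_and_bound(dist)
```

Starting from a good tour, for example one produced by a construction heuristic, gives a tighter upper bound and lets the search prune earlier.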

Nearest Insertion

This is a heuristic construction algorithm. It selects the closest point to the path, and then figures out where the best place to put it will be.

- Choose the point that is nearest to the current path

Branch and Bound (Cost, Intersections)

This is the same as branch and bound on cost, with an additional heuristic added to further minimize the search space.

While traversing paths, if at any point the path intersects (crosses over) itself, then backtrack and try the next way. For straight-line distances, it has been proven that an optimal path will never contain crossings.

Implementation is almost identical to branch and bound on cost only, with the intersection check added.

Furthest Insertion

This is a heuristic construction algorithm. It selects the furthest point from the path, and then figures out where the best place to put it will be.

- Choose the point that is furthest from any of the points on the path

Convex Hull

This is a heuristic construction algorithm. It starts by building the convex hull, and adds interior points from there. This implementation uses another heuristic for insertion based on the ratio of the cost of adding the new point to the overall length of the segment; however, any insertion algorithm could be applied after building the hull.

There are a number of algorithms to determine the convex hull. This implementation uses the gift wrapping algorithm .

In essence, the steps are:

1. Determine the leftmost point

2. Continually add the most counterclockwise point until the convex hull is formed

3. For each remaining point p, find the segment i => j in the hull that minimizes cost(i -> p) + cost(p -> j) - cost(i -> j)

4. Of those, choose p that minimizes cost(i -> p -> j) / cost(i -> j)

5. Add p to the path between i and j

6. Repeat from #3 until there are no remaining points

Simulated Annealing

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem.

For problems where finding an approximate global optimum is more important than finding a precise local optimum in a fixed amount of time, simulated annealing may be preferable to exact algorithms.
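A minimal sketch of simulated annealing applied to TSP might look like the following. The segment-reversal move, the geometric cooling schedule, and every parameter value here are assumptions chosen for illustration, not the settings used by this site.

```python
import math
import random


def tour_length(points, tour):
    """Length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def simulated_annealing(points, steps=20000, start_temp=1.0, cooling=0.9995, seed=0):
    """Return (best_tour, best_length) found while the temperature cools."""
    rng = random.Random(seed)
    n = len(points)
    current = list(range(n))
    current_len = tour_length(points, current)
    best, best_len = current[:], current_len
    temp = start_temp
    for _ in range(steps):
        # Propose a neighbor by reversing a random segment of the tour.
        i, j = sorted(rng.sample(range(n), 2))
        candidate = current[:i] + current[i:j + 1][::-1] + current[j + 1:]
        delta = tour_length(points, candidate) - current_len
        # Always accept improvements; accept worse tours with a probability
        # that shrinks as the temperature drops.
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            current, current_len = candidate, current_len + delta
            if current_len < best_len:
                best, best_len = current[:], current_len
        temp *= cooling
    return best, tour_length(points, best)


# Hypothetical sample points, used only for illustration.
sample = [(0, 0), (3, 1), (1, 4), (5, 2), (2, 2), (4, 5), (0, 3), (5, 0)]
sa_tour, sa_length = simulated_annealing(sample)
```

Occasionally accepting a worse tour is what lets the search escape local optima early on, while the falling temperature makes it behave more and more like plain hill climbing.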

Visualize algorithms for the traveling salesman problem. Use the controls below to plot points, choose an algorithm, and control execution. (Hint: try a construction algorithm followed by an improvement algorithm.)


The Traveling Salesman Problem

The Traveling Salesman Problem states that you are a salesperson and you must visit a number of cities or towns.

Rules: Visit every city only once, then return to the city you started in.

Goal: Find the shortest possible route.

Except for the Held-Karp algorithm (which is quite advanced and time consuming, with a time complexity of \(O(2^n n^2)\), and will not be described here), the straightforward way to find the shortest route is to check all possible routes.

This means that the time complexity for solving this problem in this way is \(O(n!)\), which means 720 routes need to be checked for 6 cities, 40,320 routes must be checked for 8 cities, and if you have 10 cities to visit, more than 3.6 million routes must be checked!

Note: "!", or "factorial", is a mathematical operation used in combinatorics to find out how many possible ways something can be done. If there are 4 cities, each city is connected to every other city, and we must visit every city exactly once, there are \(4!= 4 \cdot 3 \cdot 2 \cdot 1 = 24\) different routes we can take to visit those cities.

The Traveling Salesman Problem (TSP) is interesting to study because it is very practical, yet so time consuming to solve by checking all routes that it becomes nearly impossible to find the shortest route that way, even in a graph with just 20-30 vertices.

If we had an efficient algorithm for solving The Traveling Salesman Problem, the consequences would be very big in many sectors, for example chip design, vehicle routing, telecommunications, and urban planning.

Checking All Routes to Solve The Traveling Salesman Problem

To find the optimal solution to The Traveling Salesman Problem, we will check all possible routes, and every time we find a shorter route, we will store it, so that in the end we will have the shortest route.

Good: Finds the overall shortest route.

Bad: Requires an awful lot of calculation, especially for a large number of cities, which means it is very time consuming.

How it works:

- Check the length of every possible route, one route at a time.

- Is the current route shorter than the shortest route found so far? If so, store the new shortest route.

- After checking all routes, the stored route is the shortest one.

Such a way of finding the solution to a problem is called brute force .

Brute force is not really an algorithm, it just means finding the solution by checking all possibilities, usually because of a lack of a better way to do it.


Finding the shortest route in The Traveling Salesman Problem by checking all routes (brute force).


The reason why the brute force approach of finding the shortest route (as shown above) is so time consuming is that we are checking all routes, and the number of possible routes increases really fast when the number of cities increases.

Finding the optimal solution to the Traveling Salesman Problem by checking all possible routes (brute force):

Using A Greedy Algorithm to Solve The Traveling Salesman Problem

Since checking every possible route to solve the Traveling Salesman Problem (like we did above) is so incredibly time consuming, we can instead find a short route by just going to the nearest unvisited city in each step, which is much faster.

Good: Finds a solution to the Traveling Salesman Problem much faster than by checking all routes.

Bad: Does not find the overall shortest route, it just finds a route that is much shorter than an average random route.

- Visit every city.

- The next city to visit is always the nearest of the unvisited cities from the city you are currently in.

- After visiting all cities, go back to the city you started in.

This way of finding an approximation to the shortest route in the Traveling Salesman Problem, by just going to the nearest unvisited city in each step, is called a greedy algorithm .

Finding an approximation to the shortest route in The Traveling Salesman Problem by always going to the nearest unvisited neighbor (greedy algorithm).

As you can see by running this simulation a few times, the routes that are found are not completely unreasonable. Except for a few times when the lines cross perhaps, especially towards the end of the algorithm, the resulting route is a lot shorter than we would get by choosing the next city at random.

Finding a near-optimal solution to the Traveling Salesman Problem using the nearest-neighbor algorithm (greedy):

Other Algorithms That Find Near-Optimal Solutions to The Traveling Salesman Problem

In addition to using a greedy algorithm to solve the Traveling Salesman Problem, there are also other algorithms that can find approximations to the shortest route.

These algorithms are popular because they are much more efficient than actually checking all possible solutions, but as with the greedy algorithm above, they are not guaranteed to find the overall shortest route.

Algorithms used to find a near-optimal solution to the Traveling Salesman Problem include:

- 2-opt Heuristic: An algorithm that improves the solution step-by-step, in each step removing two edges and reconnecting the two paths in a different way to reduce the total path length.

- Genetic Algorithm: This is a type of algorithm inspired by the process of natural selection that uses techniques such as selection, mutation, and crossover to evolve solutions to problems, including the TSP.

- Simulated Annealing: This method is inspired by the process of annealing in metallurgy. It involves heating and then slowly cooling a material to decrease defects. In the context of TSP, it's used to find a near-optimal solution by exploring the solution space in a way that allows for occasional moves to worse solutions, which helps to avoid getting stuck in local minima.

- Ant Colony Optimization: This algorithm is inspired by the behavior of ants in finding paths from the colony to food sources. It's a more complex probabilistic technique for solving computational problems which can be mapped to finding good paths through graphs.

Time Complexity for Solving The Traveling Salesman Problem

To get a near-optimal solution fast, we can use a greedy algorithm that just goes to the nearest unvisited city in each step, like in the second simulation on this page.

Solving The Traveling Salesman Problem in a greedy way like that, means that at each step, the distances from the current city to all other unvisited cities are compared, and that gives us a time complexity of \(O(n^2) \).

But finding the shortest route of them all requires a lot more operations, and the time complexity for that is \(O(n!)\), like mentioned earlier, which means that for 4 cities, there are 4! possible routes, which is the same as \(4 \cdot 3 \cdot 2 \cdot 1 = 24\). And for just 12 cities for example, there are \(12! = 12 \cdot 11 \cdot 10 \cdot \; ... \; \cdot 2 \cdot 1 = 479,001,600\) possible routes!

See the time complexity for the greedy algorithm \(O(n^2)\), versus the time complexity for finding the shortest route by comparing all routes \(O(n!)\), in the image below.

But there are two things we can do to reduce the number of routes we need to check.

In the Traveling Salesman Problem, the route starts and ends in the same place, which makes a cycle. This means that the length of the shortest route will be the same no matter which city we start in. That is why we have chosen a fixed city to start in for the simulation above, and that reduces the number of possible routes from \(n!\) to \((n-1)!\).

Also, because these routes go in cycles, a route has the same distance if we go in one direction or the other, so we actually just need to check the distance of half of the routes, because the other half will just be the same routes in the opposite direction, so the number of routes we need to check is actually \( \frac{(n-1)!}{2}\).

But even if we can reduce the number of routes we need to check to \( \frac{(n-1)!}{2}\), the time complexity is still \( O(n!)\), because for very big \(n\), reducing \(n\) by one and dividing by 2 does not make a significant change in how the time complexity grows when \(n\) is increased.
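The route counts discussed above can be checked with a few lines of Python. The helper names below are hypothetical and exist only for this illustration; `distinct_tours` assumes at least 3 cities.

```python
from math import factorial


def all_orderings(n):
    """Routes counted when every city may be the starting city: n!"""
    return factorial(n)


def distinct_tours(n):
    """Routes left after fixing the start and ignoring direction: (n-1)!/2."""
    return factorial(n - 1) // 2


# Compare both counts for a few problem sizes.
for cities in (4, 6, 8, 10, 12):
    print(cities, all_orderings(cities), distinct_tours(cities))
```

Even with the reduction, the count for 12 cities is still close to 20 million, which illustrates why the reduction does not change the overall growth.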

To better understand how time complexity works, go to this page .

Actual Traveling Salesman Problems Are More Complex

The edge weight in a graph in this context of The Traveling Salesman Problem tells us how hard it is to go from one point to another, and it is the total edge weight of a route we want to minimize.

So far on this page, the edge weight has been the distance in a straight line between two points. And that makes it much easier to explain the Traveling Salesman Problem, and to display it.

But in the real world there are many other things that affect the edge weight:

- Obstacles: When moving from one place to another, we normally try to avoid obstacles like trees, rivers, and houses, for example. This means the route from A to B is longer and takes more time, and the edge weight value needs to be increased to factor that in, because it is not a straight line anymore.

- Transportation Networks: We usually follow a road or use public transport systems when traveling, and that also affects how hard it is to go (or send a package) from one place to another.

- Traffic Conditions: Travel congestion also affects the travel time, so that should also be reflected in the edge weight value.

- Legal and Political Boundaries: Crossing a border, for example, might make one route harder to choose than another, which means the shortest straight line route might be slower, or more costly.

- Economic Factors: Using fuel, using the time of employees, maintaining vehicles, all these things cost money and should also be factored into the edge weights.

As you can see, just using the straight line distances as the edge weights, might be too simple compared to the real problem. And solving the Traveling Salesman Problem for such a simplified problem model would probably give us a solution that is not optimal in a practical sense.

It is not easy to visualize a Traveling Salesman Problem when the edge length is not just the straight line distance between two points anymore, but the computer handles that very well.


Traveling Salesman Problem (TSP) Implementation


Travelling Salesman Problem (TSP): Given a set of cities and the distance between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point.

Note the difference between the Hamiltonian cycle problem and TSP. The Hamiltonian cycle problem asks whether there exists a tour that visits every city exactly once. Here we know that a Hamiltonian tour exists (because the graph is complete), and in fact many such tours exist; the problem is to find a minimum-weight Hamiltonian cycle.

For example, consider the graph shown in the figure on the right side. A TSP tour in the graph is 1-2-4-3-1. The cost of the tour is 10 + 25 + 30 + 15, which is 80.

The problem is a famous NP-hard problem. There is no known polynomial-time solution for this problem.

Examples:

In this post, the implementation of a simple solution is discussed.

- Consider city 1 as the starting and ending point. Since the route is cyclic, we can consider any point as a starting point.

- Generate all (n-1)! permutations of cities.

- Calculate the cost of every permutation and keep track of the minimum cost permutation.

- Return the permutation with minimum cost.

Below is the implementation of the above idea
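A minimal Python sketch of the four steps above, offered as an illustration rather than as the original listing. It uses `itertools.permutations` where a C++ version would call `next_permutation`. The function name `tsp_brute_force` is hypothetical, and the matrix is an assumption: the weights 10, 25, 30, and 15 come from the tour quoted earlier, while the two remaining weights (20 for edge 1-4 and 35 for edge 2-3) are filled in for illustration.

```python
from itertools import permutations

def tsp_brute_force(dist, start=0):
    """Return (cost, tour) of a minimum-weight Hamiltonian cycle by trying every ordering."""
    n = len(dist)
    others = [v for v in range(n) if v != start]
    best_cost, best_tour = float("inf"), None
    for order in permutations(others):            # (n-1)! orderings of the other cities
        tour = (start, *order, start)
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:                      # keep the cheapest tour seen so far
            best_cost, best_tour = cost, tour
    return best_cost, best_tour

# Cities 1..4 are stored at indices 0..3.
# Weights for edges 1-4 (20) and 2-3 (35) are assumed for illustration.
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
cost, tour = tsp_brute_force(dist)
print(cost, [c + 1 for c in tour])  # 80 [1, 2, 4, 3, 1]
```

Fixing the start city is what reduces the search from n! to (n-1)! orderings; each ordering still costs O(n) to evaluate.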

Time complexity: O(n!), where n is the number of vertices in the graph. The algorithm uses the next_permutation function to generate all (n-1)! orderings of the remaining vertices, and evaluating each ordering takes O(n) time.

Auxiliary space: O(n), as we are using a vector to store all the vertices.


12.9 Traveling Salesperson Problem

Learning Objectives

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths . In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semiconductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.187. Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of 10 + 2 + 11 + 13 = 36.

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A: 10 + 2 + 11 + 13 = 36

B: 10 + 2 + 11 + 8 = 31

C: 10 + 2 + 15 + 1 = 28

D: 10 + 2 + 15 + 6 = 33

E: 10 + 7 + 3 + 20 = 40

F: 10 + 7 + 3 + 14 = 34

G: 10 + 7 + 4 + 11 = 32

H: 10 + 7 + 4 + 5 = 26

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
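The contrast between the two approaches can be sketched in a few lines of Python. The nested-tuple `tree` below is an assumed encoding of the diagram, reconstructed from the eight branch sums A through H; the function names are hypothetical.

```python
# Tree from the example as (value, children); leaves have an empty child list.
tree = (10, [
    (2, [(11, [(13, []), (8, [])]),
         (15, [(1, []), (6, [])])]),
    (7, [(3, [(20, []), (14, [])]),
         (4, [(11, []), (5, [])])]),
])

def brute_force_best(node):
    """Largest root-to-leaf sum, found by exploring every branch."""
    value, children = node
    if not children:
        return value
    return value + max(brute_force_best(child) for child in children)

def greedy_sum(node):
    """Root-to-leaf sum obtained by always stepping to the larger child."""
    value, children = node
    total = value
    while children:
        value, children = max(children, key=lambda child: child[0])
        total += value
    return total

print(brute_force_best(tree))  # 40 (branch E)
print(greedy_sum(tree))        # 32 (branch G)
```

The greedy version looks at one level at a time, so it never sees the 20 hidden behind the smaller value 3.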

Example 12.42

Distinguishing between Brute Force and Greedy Algorithms

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
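The cashier's procedure can be sketched in Python, working in cents to avoid floating-point rounding. `greedy_change` mirrors the cashier; `fewest_coins` is an exhaustive check (written here as a small dynamic program, which is a choice made for this sketch) that confirms four coins is the true minimum. Both function names are hypothetical.

```python
def greedy_change(amount_cents, coins=(25, 10, 5, 1)):
    """Greedy: repeatedly take the largest coin that still fits."""
    used = []
    for coin in sorted(coins, reverse=True):
        while amount_cents >= coin:
            amount_cents -= coin
            used.append(coin)
    return used

def fewest_coins(amount_cents, coins=(25, 10, 5, 1)):
    """Exhaustive check: the true minimum number of coins for the amount."""
    best = [0] + [float("inf")] * amount_cents
    for value in range(1, amount_cents + 1):
        for coin in coins:
            if coin <= value:
                best[value] = min(best[value], best[value - coin] + 1)
    return best[amount_cents]

print(greedy_change(37))  # [25, 10, 1, 1]
print(fewest_coins(37))   # 4
```

With a hypothetical coin set that has no nickel, `greedy_change(30, (25, 10, 1))` uses six coins while `fewest_coins(30, (25, 10, 1))` returns 3, which illustrates that a greedy algorithm does not always yield the best result.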

Your Turn 12.42

The Traveling Salesperson Problem

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.192 representing the four U.S. Air Force bases (Vandenberg, Edwards, Los Angeles, and Beal) and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.193 .

The possible distances are:

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with n vertices,

- The number of distinct Hamilton cycles is (n − 1)!.

- There are at most (n − 1)!/2 different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating Possible Weights of Hamilton Cycles

Suppose you have a complete weighted graph with vertices N, M, O, and P.

- Use the formula (n − 1)! to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula (n − 1)!/2 to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know?
  Cycle 1: N → M → O → P → N
  Cycle 2: N → M → P → O → N
  Cycle 3: N → O → M → P → N
  Cycle 4: N → O → P → M → N
  Cycle 5: N → P → M → O → N
  Cycle 6: N → P → O → M → N

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, n = 4. This means there are (n − 1)! = (4 − 1)! = 3 ⋅ 2 ⋅ 1 = 6 distinct Hamilton cycles beginning at any given vertex.

- Since n = 4, there are (n − 1)!/2 = (4 − 1)!/2 = 6/2 = 3 possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.
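The listing and pairing in this example can be reproduced with a short Python sketch. The helper name `hamilton_cycles` is hypothetical; `itertools.permutations` generates the arrangements of the middle vertices in the same order as the six cycles listed.

```python
from itertools import permutations

def hamilton_cycles(start, others):
    """All (n-1)! directed Hamilton cycles of a complete graph, fixed at `start`."""
    return [(start, *middle, start) for middle in permutations(others)]

cycles = hamilton_cycles("N", ["M", "O", "P"])
for number, cycle in enumerate(cycles, start=1):
    print(f"Cycle {number}: {' → '.join(cycle)}")

# Pair each cycle with its reversal; the two traverse the same edges,
# so they must have the same weight.
pairs = sorted({tuple(sorted((i + 1, cycles.index(c[::-1]) + 1)))
                for i, c in enumerate(cycles)})
print(pairs)  # [(1, 6), (2, 4), (3, 5)]
```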

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as we did in Example 12.43.

Your Turn 12.43

The Brute Force Method

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method . The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.

Example 12.44

Applying the Brute Force Method

On the next assignment, the air force officer must leave from Travis Air Force base, visit Beal, Edwards, and Vandenberg Air Force bases each exactly once and return to Travis Air Force base. There is no need to visit Los Angeles Air Force base. Use Figure 12.194 to find the shortest route.

Step 1: Since there are 4 vertices, there will be (4 − 1)! = 3! = 6 cycles, but half of them will be the reverse of the others; so, there will be (4 − 1)!/2 = 6/2 = 3 possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.195 .

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V . The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE , and VEB . These would correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each cycle. You can reduce your work by observing the cycles that are reverses of each other.

1: 84 + 410 + 207 + 396 = 1097

2: 84 + 396 + 207 + 370 = 1057

3: 370 + 410 + 396 + 396 = 1572

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second path, T → B → V → E → T , and its reverse, T → E → V → B → T , have the least weight. The solution is that the officer should travel from Travis Air Force base to Beal Air Force Base, to Vandenberg Air Force base, to Edwards Air Force base, and return to Travis Air Force base, or the same route in reverse.
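Steps 2 through 4 can be sketched in Python so that each undirected cycle is evaluated only once. The edge weights are taken from the individual terms of the Step 3 sums and are assumed to match the figure; the names `weights`, `cycle_weight`, and `brute_force_undirected` are hypothetical.

```python
from itertools import permutations

# Symmetric edge weights as they appear in the Step 3 sums (assumed to match the figure).
weights = {
    frozenset("TB"): 84,  frozenset("TE"): 370, frozenset("TV"): 396,
    frozenset("BE"): 410, frozenset("BV"): 396, frozenset("EV"): 207,
}

def cycle_weight(cycle):
    """Sum the weights of consecutive edges along the cycle."""
    return sum(weights[frozenset(pair)] for pair in zip(cycle, cycle[1:]))

def brute_force_undirected(start, others):
    """Evaluate each undirected cycle once by skipping reversed duplicates."""
    results = {}
    for middle in permutations(others):
        if middle[0] > middle[-1]:   # the reversal of this ordering is kept instead
            continue
        cycle = (start, *middle, start)
        results[cycle] = cycle_weight(cycle)
    return results

results = brute_force_undirected("T", "BEV")
best = min(results, key=results.get)
print(len(results), "cycles evaluated; lightest:", " → ".join(best))
```

Keying the weights by `frozenset` makes each lookup direction-independent, which reflects the fact that the distance between two bases is the same either way.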

Your Turn 12.44

Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.194. There would be (5 − 1)! = 4! = 4 × 3 × 2 × 1 = 24 different arrangements of vertices and (5 − 1)!/2 = 4!/2 = 24/2 = 12 distances to compare using the brute force method. If you consider 10 Air Force bases, there would be (10 − 1)! = 9! = 9 ⋅ 8 ⋅ 7 ⋅ 6 ⋅ 5 ⋅ 4 ⋅ 3 ⋅ 2 ⋅ 1 = 362,880 different arrangements and (10 − 1)!/2 = 9!/2 = 362,880/2 = 181,440 distances to consider. There must be another way!
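To see how quickly these counts grow, the two formulas can be tabulated with a few lines of Python. The helper name `cycle_counts` is hypothetical, and the rows for 15 and 20 vertices are added here only for illustration.

```python
from math import factorial

def cycle_counts(n):
    """Return ((n-1)!, (n-1)!/2) for a complete graph on n vertices."""
    directed = factorial(n - 1)
    return directed, directed // 2

for n in (4, 5, 10, 15, 20):
    directed, undirected = cycle_counts(n)
    print(f"n = {n:2d}: {directed:>22,} cycles, {undirected:>22,} weights to compare")
```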

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method, which always visits the closest or least costly place first. This method finds a Hamilton cycle of relatively low weight in a complete graph in which, at each phase, the next vertex is chosen by comparing the edges between the current vertex and the remaining vertices to find the lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A , visit cities B, C, D, E , and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.196 .

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex as V1 for "visited 1st." Then compare the weights of the edges between A and the vertices adjacent to A: $250, $210, $300, $200, and $100 as shown in Figure 12.197. The lowest of these is $100, which is the edge between A and F.

Mark F as V2 for "visited 2nd," then compare the weights of the edges between F and the remaining vertices adjacent to F: $170, $330, $150, and $350 as shown in Figure 12.198. The lowest of these is $150, which is the edge between F and D.

Mark D as V3 for "visited 3rd." Next, compare the weights of the edges between D and the remaining vertices adjacent to D: $120, $310, and $270 as shown in Figure 12.199. The lowest of these is $120, which is the edge between D and B.

So, mark B as V4 for "visited 4th." Finally, compare the weights of the edges between B and the remaining vertices adjacent to B: $160 and $220 as shown in Figure 12.200. The lower amount is $160, which is the edge between B and E.

Now you can mark E as V5 and mark the only remaining vertex, which is C, as V6. This is shown in Figure 12.201. Make a note of the weight of the edge from E to C, which is $180, and from C back to A, which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A. The weight of the circuit is $100 + $150 + $120 + $160 + $180 + $210 = $920. This may or may not be the route with the lowest cost, but there is a good chance it is very close since the weights are most of the lowest weights on the graph and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label it V1 for "visited 1st." Identify the edge of lowest weight between V1 and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in the previous step as Vn, where the subscript n indicates the order in which the vertex is visited. Identify the edge of lowest weight between Vn and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is V1 → V2 → ⋯ → Vn → V1.
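The three steps above can be sketched in Python. Because the candidate's airfare graph is only partly described in the text, this sketch instead runs on the four Air Force bases from Example 12.44, with edge weights assumed from the terms of that example's sums. The function name `nearest_neighbor` and the dictionary layout are hypothetical.

```python
def nearest_neighbor(weights, start):
    """Greedy tour: from the current vertex, always move to the closest unvisited vertex."""
    tour, total = [start], 0
    unvisited = set(weights) - {start}
    current = start
    while unvisited:
        # Step 2: pick the lowest-weight edge to a vertex not yet visited.
        nxt = min(unvisited, key=lambda v: weights[current][v])
        total += weights[current][nxt]
        tour.append(nxt)
        unvisited.remove(nxt)
        current = nxt
    # Step 3: close the cycle by returning to the start.
    total += weights[current][start]
    tour.append(start)
    return tour, total

# Edge weights assumed from the sums in Example 12.44 (T, B, E, V).
weights = {
    "T": {"B": 84, "E": 370, "V": 396},
    "B": {"T": 84, "E": 410, "V": 396},
    "E": {"T": 370, "B": 410, "V": 207},
    "V": {"T": 396, "B": 396, "E": 207},
}
tour, total = nearest_neighbor(weights, "T")
print(" → ".join(tour), total)
```

On this small graph the greedy tour happens to coincide with the lightest cycle found by brute force; on larger graphs that is not guaranteed.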

Example 12.45

Using the Nearest Neighbor Method

Suppose that the candidate for governor wants to hold rallies around the state but time before the election is very limited. They would like to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.202. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as V1. The edge of lowest weight between A and the remaining vertices is 85 min between A and D.

Step 2: Label vertex D as V2. The edge of lowest weight between D and the vertices that remain to be visited, B, C, E, and F, is 70 min between D and F.

Repeat Step 2: Label vertex F as V3. The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E, is 75 min between F and C.

Repeat Step 2: Label vertex C as V4. The edge of lowest weight between C and the vertices that remain to be visited, B and E, is 100 min between C and B.

Repeat Step 2: Label vertex B as V5. The only vertex that remains to be visited is E. The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A. So, a route of relatively low travel time is A to D to F to C to B to E and back to A. The total travel time of this route is: 85 min + 70 min + 75 min + 100 min + 95 min + 90 min = 515 min, or 8 hrs 35 min.

Your Turn 12.45


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Authors: Donna Kirk

- Publisher/website: OpenStax

- Book title: Contemporary Mathematics

- Publication date: Mar 22, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Section URL: https://openstax.org/books/contemporary-mathematics/pages/12-9-traveling-salesperson-problem

© Dec 21, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


Travelling Salesman Problem (Dynamic Approach)


The travelling salesman problem is one of the most notorious computational problems. We can use a brute-force approach to evaluate every possible tour and select the best one. For a graph with n vertices, there are (n−1)! possible tours, so the brute-force approach has factorial time complexity.

However, using the dynamic programming approach instead of brute force obtains the solution in less time, though no polynomial-time algorithm is known.

Let us consider a graph G = (V,E) , where V is a set of cities and E is a set of weighted edges. An edge e(u, v) represents that vertices u and v are connected. Distance between vertex u and v is d(u, v) , which should be non-negative.

Suppose we have started at city 1 and after visiting some cities now we are in city j . Hence, this is a partial tour. We certainly need to know j , since this will determine which cities are most convenient to visit next. We also need to know all the cities visited so far, so that we don't repeat any of them. Hence, this is an appropriate sub-problem.

For a subset of cities $S \subseteq \{1, 2, 3, \ldots, n\}$ that includes 1, and $j \in S$, let C(S, j) be the length of the shortest path visiting each node in S exactly once, starting at 1 and ending at j.

When |S| > 1, we define $C(S, 1) = \infty$, since the path cannot both start and end at 1.

Now, let us express C(S, j) in terms of smaller sub-problems. We need to start at 1 and end at j. We should select the city i visited just before j in such a way that

$$C(S, j) = \min_{i \in S,\ i \neq j} \left[ C(S - \{j\}, i) + d(i, j) \right]$$

There are at most $2^n \cdot n$ sub-problems, and each one takes linear time to solve. Therefore, the total running time is $O(2^n \cdot n^2)$.
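A minimal bottom-up sketch of this recurrence in Python, assuming cities are numbered from 0 (so city 0 plays the role of city 1) and at least two cities. The function name `held_karp` and the dictionary-based table are choices made for this sketch; the distance matrix is the one implied by the worked example that follows.

```python
from itertools import combinations

def held_karp(d):
    """Length of a shortest tour starting and ending at city 0; d[i][j] is the distance i -> j."""
    n = len(d)
    # C[(S, j)]: shortest path from city 0 through every city in frozenset S, ending at j.
    C = {(frozenset([0, j]), j): d[0][j] for j in range(1, n)}
    for size in range(3, n + 1):                      # grow subsets one city at a time
        for rest in combinations(range(1, n), size - 1):
            S = frozenset(rest) | {0}
            for j in rest:
                # i ranges over S without j and without 0, since C(S, 0) is infinite.
                C[(S, j)] = min(C[(S - {j}, i)] + d[i][j] for i in rest if i != j)
    full = frozenset(range(n))
    return min(C[(full, j)] + d[j][0] for j in range(1, n))  # close the tour at city 0

# Asymmetric distances taken from the worked example (cities 1..4 at indices 0..3).
d = [
    [0, 10, 15, 20],
    [5, 0, 9, 10],
    [6, 13, 0, 12],
    [8, 8, 9, 0],
]
print(held_karp(d))  # 35
```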

In the following example, we will illustrate the steps to solve the travelling salesman problem.

From the above graph, the following table is prepared.

$$Cost\left ( 2,\Phi ,1 \right )\, =\, d\left ( 2,1 \right )\,=\,5$$

$$Cost\left ( 3,\Phi ,1 \right )\, =\, d\left ( 3,1 \right )\, =\, 6$$

$$Cost\left ( 4,\Phi ,1 \right )\, =\, d\left ( 4,1 \right )\, =\, 8$$

$$Cost(i, S, 1) = \min_{j \in S} \left\{ d\left[ i,j \right] + Cost\left( j, S - \left\{ j \right\}, 1 \right) \right\}$$

$$Cost(2,\left\{3 \right\},1)=d[2,3]\, +\, Cost\left ( 3,\Phi ,1 \right )\, =\, 9\, +\, 6\, =\, 15$$

$$Cost(2,\left\{4 \right\},1)=d[2,4]\, +\, Cost\left ( 4,\Phi ,1 \right )\, =\, 10\, +\, 8\, =\, 18$$

$$Cost(3,\left\{2 \right\},1)=d[3,2]\, +\, Cost\left ( 2,\Phi ,1 \right )\, =\, 13\, +\, 5\, =\, 18$$

$$Cost(3,\left\{4 \right\},1)=d[3,4]\, +\, Cost\left ( 4,\Phi ,1 \right )\, =\, 12\, +\, 8\, =\, 20$$

$$Cost(4,\left\{3 \right\},1)=d[4,3]\, +\, Cost\left ( 3,\Phi ,1 \right )\, =\, 9\, +\, 6\, =\, 15$$

$$Cost(4,\left\{2 \right\},1)=d[4,2]\, +\, Cost\left ( 2,\Phi ,1 \right )\, =\, 8\, +\, 5\, =\, 13$$

$$Cost(2,\left\{3,4 \right\},1)=min\left\{\begin{matrix} d\left [ 2,3 \right ]\,+ \,Cost\left ( 3,\left\{ 4\right\},1 \right )\, =\, 9\, +\, 20\, =\, 29 \\ d\left [ 2,4 \right ]\,+ \,Cost\left ( 4,\left\{ 3\right\},1 \right )\, =\, 10\, +\, 15\, =\, 25 \\ \end{matrix}\right.\, =\,25$$

$$Cost(3,\left\{2,4 \right\},1)=min\left\{\begin{matrix} d\left [ 3,2 \right ]\,+ \,Cost\left ( 2,\left\{ 4\right\},1 \right )\, =\, 13\, +\, 18\, =\, 31 \\ d\left [ 3,4 \right ]\,+ \,Cost\left ( 4,\left\{ 2\right\},1 \right )\, =\, 12\, +\, 13\, =\, 25 \\ \end{matrix}\right.\, =\,25$$

$$Cost(4,\left\{2,3 \right\},1)=min\left\{\begin{matrix} d\left [ 4,2 \right ]\,+ \,Cost\left ( 2,\left\{ 3\right\},1 \right )\, =\, 8\, +\, 15\, =\, 23 \\ d\left [ 4,3 \right ]\,+ \,Cost\left ( 3,\left\{ 2\right\},1 \right )\, =\, 9\, +\, 18\, =\, 27 \\ \end{matrix}\right.\, =\,23$$

$$Cost(1,\left\{2,3,4 \right\},1)=min\left\{\begin{matrix} d\left [ 1,2 \right ]\,+ \,Cost\left ( 2,\left\{ 3,4\right\},1 \right )\, =\, 10\, +\, 25\, =\, 35 \\ d\left [ 1,3 \right ]\,+ \,Cost\left ( 3,\left\{ 2,4\right\},1 \right )\, =\, 15\, +\, 25\, =\, 40 \\ d\left [ 1,4 \right ]\,+ \,Cost\left ( 4,\left\{ 2,3\right\},1 \right )\, =\, 20\, +\, 23\, =\, 43 \\ \end{matrix}\right.\, =\, 35$$

The minimum tour cost is 35.

To recover the tour, trace back through the minimizing choices. Start from Cost(1, {2, 3, 4}, 1): the minimum is attained through d[1, 2], so select the path from 1 to 2 (cost is 10). Next, for Cost(2, {3, 4}, 1), the minimum is attained through d[2, 4], so select the path from 2 to 4 (cost is 10).

For Cost(4, {3}, 1), the only remaining city is 3, so select the path from 4 to 3 (cost is 9). Finally, with S = Φ, Cost(3, Φ, 1) = d[3, 1], so return from 3 to 1 (cost is 6). The resulting tour is 1 → 2 → 4 → 3 → 1, with total cost 10 + 10 + 9 + 6 = 35.

Following are the implementations of the above approach in various programming languages −
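As one illustration, here is a minimal Python sketch (not one of the original listings) that follows the Cost(i, S, 1) notation of the worked example and also recovers the tour. The distance matrix is read off the d[i, j] values used in the calculations above; the function name `cost` and the padding row and column at index 0 are choices made for this sketch.

```python
from functools import lru_cache

# d[i][j] for cities 1..4, read off the worked example (index 0 is unused padding).
d = [
    [0, 0, 0, 0, 0],
    [0, 0, 10, 15, 20],
    [0, 5, 0, 9, 10],
    [0, 6, 13, 0, 12],
    [0, 8, 8, 9, 0],
]

@lru_cache(maxsize=None)
def cost(i, remaining):
    """Cost(i, S, 1): cheapest way to leave i, visit every city in S, and finish at city 1.

    Returns (cost, path), where path starts at i and ends at 1.
    """
    if not remaining:                       # S is empty: go straight back to city 1
        return d[i][1], (i, 1)
    best = None
    for j in remaining:                     # try each city j in S as the next stop
        sub_cost, sub_path = cost(j, remaining - {j})
        candidate = (d[i][j] + sub_cost, (i, *sub_path))
        if best is None or candidate[0] < best[0]:
            best = candidate
    return best

total, tour = cost(1, frozenset({2, 3, 4}))
print(total, tour)  # 35 (1, 2, 4, 3, 1)
```

Memoizing on the pair (i, S) is what keeps the number of distinct sub-problems at roughly n·2^n instead of (n−1)!.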


Salesman Member Items


Last Updated: 12/29/2023

The first Salesman Member Items were released on February 8, 2018. They are exclusive to a single appearance of the Traveling Salesman, often released in groups of three. All items sold before August 5, 2019 could be used by all Jammers.

Note: Non-member Salesman items are excluded from this page for being very low value. This is because they always return with the Salesman throughout the year.

Note: Many older releases are hard to find. However, due to their obscurity, they may go for less than listed.

Note: As of March 2021, Golden Snowflake items are obtainable by tapping Golden Snowflakes in Mt. Shiveer.

- 1 Pet Sugar Glider

- 2 Pet Unicorn

- 3 Rare Raccoon Tail


Title: Distilling Privileged Information for Dubins Traveling Salesman Problems with Neighborhoods

Abstract: This paper presents a novel learning approach for Dubins Traveling Salesman Problems (DTSP) with Neighborhood (DTSPN) to quickly produce a tour of a non-holonomic vehicle passing through neighborhoods of given task points. The method involves two learning phases: initially, a model-free reinforcement learning approach leverages privileged information to distill knowledge from expert trajectories generated by the Lin-Kernighan heuristic (LKH) algorithm. Subsequently, a supervised learning phase trains an adaptation network to solve problems independently of privileged information. Before the first learning phase, a parameter initialization technique using the demonstration data was also devised to enhance training efficiency. The proposed learning method produces a solution about 50 times faster than LKH and substantially outperforms other imitation learning and RL with demonstration schemes, most of which fail to sense all the task points.


Paper 2024/626: Exponential Quantum Speedup for the Traveling Salesman Problem

The traveling salesman problem is the problem of finding the shortest route in a network of cities that a salesman needs to travel to cover all the cities, without visiting the same city more than once. This problem is known to be $NP$-hard with a brute-force complexity of $O(N^N)$ or $O(N^{2N})$ for $N$ cities. This problem is equivalent to finding the shortest Hamiltonian cycle in a given graph, if at least one Hamiltonian cycle exists in it. Quantum algorithms for this problem typically provide only a quadratic speedup, using Grover's search, thereby having a complexity of $O(N^{N/2})$ or $O(N^N)$. We present a bounded-error quantum polynomial-time (BQP) algorithm for solving the problem, providing an exponential speedup. The overall complexity of our algorithm is $O(N^3\log(N)\kappa/\epsilon + 1/\epsilon^3)$, where the errors $\epsilon$ are $O(1/{\rm poly}(N))$, and $\kappa$ is the not-too-large condition number of the matrix encoding all Hamiltonian cycles.

Note: 6 pages, 2 figures, comments welcome.


blefev/ruby-travelling-salesman


Travelling salesman problem in ruby.

Includes brute force, dynamic programming, and greedy algorithms and a tool for benchmarking performance of each.



Here are a couple of tricks: 1: Lump locations that are relatively close into one graph, and turn those locations into a single node in your main graph. This lets you be greedy without too much work. 2: Use an approximation algorithm. 2a: My favorite is bitonic tours. They're pretty easy to hack up. See Update.

The travelling salesman problem is a classic optimization problem in computer science. It involves finding the shortest possible route that a salesman can take to visit a given set of locations and return to the starting point. In this article, we will explore how to solve the travelling salesman problem in Ruby, specifically for 50 locations.

The travelling salesman problem is a well-known example of an NP-complete problem. This does not mean that it can't be solved; rather, no polynomial-time algorithm for it is known. A brute-force search could be used to solve the problem in time O(n!), however the dynamic programming approach taken here provides a solution in O(n^2 · 2^n) which although ...
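The dynamic programming approach with the O(n^2 · 2^n) bound is usually attributed to Held and Karp. The following is a minimal Python sketch of that idea, not the code of the project quoted above. The function name `held_karp` and the 4-city matrix `DIST` are illustrative assumptions, and the input is assumed to be a complete distance matrix.

```python
from itertools import combinations

def held_karp(dist):
    """Length of the shortest tour that starts and ends at city 0.

    best[(mask, j)] is the cheapest way to leave city 0, visit exactly the
    cities whose bits are set in mask, and finish at city j.
    """
    n = len(dist)
    if n == 1:
        return 0
    # Base case: go straight from city 0 to city j.
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    # Grow the visited set one city at a time.
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev = mask & ~(1 << j)  # same set without the last city j
                best[(mask, j)] = min(
                    best[(prev, k)] + dist[k][j] for k in subset if k != j
                )
    full = (1 << n) - 2  # every city except city 0
    # Close the tour by returning to city 0.
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))

# Hypothetical symmetric 4-city instance, used only for illustration.
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(held_karp(DIST))  # prints 80
```

The table holds one entry per (subset, last city) pair, which is where the 2^n factor comes from. Memory grows at the same rate, so in practice this sketch is only usable for a couple of dozen cities.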

Itinerary for a Traveling Salesman (#142) - Ruby - Ruby-Forum. I don't know if the "grid" method mentioned is applicable for general TSP. It is not. It is only applicable to the special case TSP (points are on a euclidean grid) mentioned there. Secondly the method does not find optimal solution. Both of these limitations are mentioned on ...

The traveling salesperson problem can be modeled as a graph. Specifically, it is typically a directed, weighted graph. Each city acts as a vertex and each path between cities is an edge. Instead of distances, each edge has a weight associated with it. In this model, the goal of the traveling salesperson problem can be defined as finding a path ...

TSP Solver with Branch and Bound Algorithm 🗺️ Authored by Addin Munawwar Yusuf 13521085 - Informatics Engineering ITB Indonesia Requirements: Ruby 1.8, Ruby 1.9, JRuby Input Precondition: 1. Input graph must be a complete graph 2.

Interactive solver for the traveling salesman problem to visualize different algorithms. Includes various heuristic and exhaustive algorithms.

The generalized travelling salesman problem, also known as the "travelling politician problem", deals with "states" that have (one or more) "cities", and the salesman must visit exactly one city from each state. One application is encountered in ordering a solution to the cutting stock problem in order to minimize knife changes.

The Traveling Salesman Problem states that you are a salesperson and you must visit a number of cities or towns. The Traveling Salesman Problem. Rules: Visit every city only once, then return back to the city you started in. Goal: Find the shortest possible route. Except for the Held-Karp algorithm (which is quite advanced and time consuming ...

Travelling Salesman Problem (TSP) : Given a set of cities and distances between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between Hamiltonian Cycle and TSP. The Hamiltonian cycle problem is to find if there exists a tour that visits every city exactly once.

The traveling salesman problem is a well-known problem in mathematics and optimization. A salesperson must visit several different cities and return to the starting point. The problem involves determining the sequence in which the cities should be visited by a salesperson so that the resulting trip covers the shortest possible distance and each ...

GA Implementation of Travelling Salesman Problem in Ruby - GitHub - tommyco/TSP-in-Ruby: GA Implementation of Travelling Salesman Problem in Ruby

The travelling salesman problem takes a graph G = {V, E} as input and declares another graph as the output (say G'), which records the path the salesman takes from one node to another. The algorithm begins by sorting all the edges in the input graph G from the least distance to the largest distance. The first edge selected is the ...
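The description above is cut off, so the sketch below assumes it refers to the common greedy edge heuristic: take edges in increasing order of distance, and skip any edge that would give a city a third neighbor or close a loop before every city is included. The name `greedy_edge_tour` and the matrix `DIST` are hypothetical. The input is assumed to be a complete, symmetric distance matrix with at least three cities.

```python
def greedy_edge_tour(dist):
    """Greedy edge heuristic: returns (total_length, chosen_edges).

    This is a heuristic; the tour it builds is not guaranteed to be optimal.
    """
    n = len(dist)
    # All undirected edges, sorted from shortest to longest.
    edges = sorted((dist[i][j], i, j) for i in range(n) for j in range(i + 1, n))

    parent = list(range(n))  # union-find forest used to detect loops

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    degree = [0] * n
    chosen = []
    for weight, i, j in edges:
        if degree[i] >= 2 or degree[j] >= 2:
            continue  # a city in a tour has exactly two neighbors
        root_i, root_j = find(i), find(j)
        if root_i == root_j and len(chosen) < n - 1:
            continue  # would close a loop that misses some cities
        parent[root_i] = root_j
        degree[i] += 1
        degree[j] += 1
        chosen.append((i, j, weight))
        if len(chosen) == n:
            break  # the tour is closed
    return sum(w for _, _, w in chosen), chosen

# Hypothetical symmetric 4-city instance, used only for illustration.
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
length, tour_edges = greedy_edge_tour(DIST)
print(length, tour_edges)
```

On this small instance the heuristic happens to find a tour of length 80, which matches the optimum. On larger inputs it can be noticeably worse, which is the price of running in roughly the time it takes to sort the edges.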

A Ruby Travelling Salesman Problem Simulator with a focus on code-readability. - flo-l/tsp-simulator

Figure 12.186 Each door on the route of a traveling salesperson can be represented as a vertex on a graph. (credit: "Three in a row, Heriot Row" by Jason Mason/Flickr, CC BY 2.1) ... exercises to compare the results of the brute force method to the results of the nearest neighbor method for each traveling salesman problem of finding a ...

Travelling Salesman Problem (Dynamic Approach) - The travelling salesman problem is one of the most notorious computational problems. We can use a brute-force approach to evaluate every possible tour and select the best one. For n vertices in a graph, there are (n−1)! possible tours, so the brute-force approach has factorial time complexity.
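To make the (n−1)! count concrete, here is a minimal brute-force sketch in Python. It fixes city 0 as the start and tries every ordering of the remaining n−1 cities. The name `brute_force_tsp` and the matrix `DIST` are illustrative assumptions, not taken from the source.

```python
from itertools import permutations

def brute_force_tsp(dist):
    """Try every tour that starts and ends at city 0; return (cost, tour)."""
    n = len(dist)
    best_cost, best_tour = float("inf"), None
    # Fixing the start city leaves (n - 1)! orderings to check.
    for perm in permutations(range(1, n)):
        tour = (0,) + perm + (0,)
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour

# Hypothetical symmetric 4-city instance, used only for illustration.
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(brute_force_tsp(DIST))  # prints (80, (0, 1, 3, 2, 0))
```

With 4 cities only 3! = 6 tours are checked, but the count grows quickly: 11 cities already require 10! = 3,628,800 tours.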

We introduce a novel approach using deep reinforcement learning to tackle the Dynamic Traveling Salesman Problem with time-dependent and stochastic travel times (DTSP-TDS). The main goal is to dynamically plan the route with the shortest tour duration that visits all customers while considering the uncertainties and time-dependence of travel times.

Last Updated: 12/29/2023 The first Salesman Member Items were released on February 8, 2018. They are exclusive to a single appearance of the Traveling Salesman, often released in groups of three. All items sold before August 5, 2019 could be used by all Jammers. Note: Non-member Salesman items are excluded from this page for being very low value. This is because they always return with the ...

The TM salesman needs no special requirements to appear in ORAS. The salesman is in the top right of the market. In RSE, you must first get the TM43 (Secret Power) on Route 111. Source. Hope I helped! Okay, I thought since it was a traveling salesman, it only appeared on certain days or times. I don't believe so, according to Bulbapedia.

This paper presents a novel learning approach for Dubins Traveling Salesman Problems(DTSP) with Neighborhood (DTSPN) to quickly produce a tour of a non-holonomic vehicle passing through neighborhoods of given task points. The method involves two learning phases: initially, a model-free reinforcement learning approach leverages privileged information to distill knowledge from expert ...

Keywords: Traveling Salesperson Problem, Hamiltonian Cycle Problem, Quantum Algorithm, Exponential Speedup

Contact author(s): anantsharma2410 @ gmail com, rajeshdeshpande @ students iisertirupati ac in, sanchita ghosh14 @ gmail com, sreetama das @ ino cnr it, roy shibdas @ gmail com

History: 2024-04-26: approved; 2024-04-23: received

I attempt to solve a version of the travelling salesman problem using ruby and machine learning techniques. - GitHub - oguzcanhuner/travelling_salesman: I attempt to ...

lab 8. Contribute to blefev/ruby-travelling-salesman development by creating an account on GitHub.